Clustering and pruning

The appropriate number of clusters in the neuro-fuzzy system is equivalent to the number of middle-layer neurons p shown in Figure 1. Determination of the optimal number of fuzzy rules is equivalent to finding a suitable number of clusters for the given data set. This can also be performed using fuzzy c-means clustering (Chen, Linkens, 2001; Linkens, Chen, 1999). Clustering is itself a multiobjective optimization problem that maximizes compactness within clusters, maximizes separation between clusters, and maximizes neuro-fuzzy system performance.

In the previous section we solved for cluster count using a direct approach involving manual tuning (see Table 1). However, we could also solve for cluster count by observing the output singletons zi after training, discarding those that are significantly smaller than the others, and then retraining the network. This is a type of pruning. For example, when using BBO to train the neuro-fuzzy system with 5 middle-layer neurons, a typical result for the output singletons after convergence is

The magnitude of z4 (0.392) is smaller by a factor of 4 than that of any other element of z. This indicates that the corresponding fuzzy rule could probably be removed from the neuro-fuzzy system without sacrificing performance. The network should then be retrained, because the remaining neuro-fuzzy parameters need to be adjusted to compensate for the reduction in network size.
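The pruning check described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the function name `prune_candidates` and the `factor` parameter are hypothetical, and the example vector is made up except for the value 0.392 quoted in the text.

```python
def prune_candidates(z, factor=4.0):
    """Return 0-based indices of singletons whose magnitude is at least
    `factor` times smaller than the magnitude of every other singleton."""
    mags = [abs(v) for v in z]
    candidates = []
    for i, m in enumerate(mags):
        others = [o for j, o in enumerate(mags) if j != i]
        if others and all(o >= factor * m for o in others):
            candidates.append(i)
    return candidates


# Hypothetical output singletons; only the fourth value (0.392) is from the text.
z_example = [2.1, -1.8, 3.0, 0.392, -2.5]

candidates = prune_candidates(z_example)  # 0-based index 3 corresponds to z4
z_pruned = [v for i, v in enumerate(z_example) if i not in candidates]
```

With this criterion at most one rule is normally flagged per pass, which fits the procedure in the text: remove one rule, retrain, and check again.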

Another way to check if we are using too many middle-layer neurons is by looking at the distance between fuzzy membership function centers. If, after training, two membership function centers are very close to each other, that indicates that those two fuzzy sets could be combined. For example, the matrix of fuzzy centroids after a typical training run with 5 middle-layer neurons (i.e., 5 fuzzy membership sets) is given by

Each row of c corresponds to a fuzzy set centroid, and each column of c corresponds to one dimension of the input data. A cursory look at the c matrix shows that rows 4 and 5 are similar to each other. A matrix of Euclidean distances between centroids (i.e., between rows of c) can be derived as

where element (i, j) of Ac is the Euclidean distance between centroids i and j. The Ac matrix indicates that fuzzy centroids 4 and 5 are much closer to each other than any other pair of centroids, which implies that the corresponding membership functions overlap and could therefore be combined. Afterward, the neuro-fuzzy system should be retrained to compensate for the change in its structure.
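The distance check can be sketched as follows. The centroid values here are hypothetical (five fuzzy sets with two input dimensions chosen for readability) and are not the results of the training run described above; the helper names `distance_matrix` and `closest_pair` are likewise only illustrative.

```python
import math


def distance_matrix(c):
    """Pairwise Euclidean distances between the rows (centroids) of c."""
    n = len(c)
    return [[math.dist(c[i], c[j]) for j in range(n)] for i in range(n)]


def closest_pair(c):
    """Return (i, j, distance) for the two closest centroids (0-based indices)."""
    a = distance_matrix(c)
    pairs = [(a[i][j], i, j) for i in range(len(c)) for j in range(i + 1, len(c))]
    d, i, j = min(pairs)
    return i, j, d


# Hypothetical centroids: one row per fuzzy set, one column per input dimension.
c_example = [
    [0.0, 0.0],
    [3.0, 0.0],
    [0.0, 4.0],
    [5.0, 5.0],
    [5.1, 5.0],
]

i, j, d = closest_pair(c_example)  # rows 4 and 5 in the 1-based numbering of the text
```

How small a distance must be before two fuzzy sets are merged is a judgment call that depends on the scale of the input data; the sketch only identifies the closest pair.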

Fine tuning using gradient information

The BBO algorithm that we used, like other evolutionary algorithms (EAs), does not depend on gradient information. Therein lies its strength relative to gradient-based optimization methods: because EAs do not rely on local gradient information, they can be used for global optimization. Since the neuro-fuzzy system shown in Figure 1 may have multiple optima, BBO training is less likely than gradient-based optimization to get stuck in a local optimum.

However, additional performance improvement could be obtained in the neuro-fuzzy classifier by using gradient information in conjunction with EA-based optimization. Gradient-based methods can be combined with EAs in order to take advantage of the strengths of each method. First we can use BBO, as above, to find neuro-fuzzy parameters that are in the neighborhood of the global optimum. Then we can use a gradient-based method to fine-tune the BBO result. The most commonly used gradient-based method is gradient descent (Chen, Linkens, 2001; Linkens, Chen, 1999). Gradient descent can be further improved by using an adaptive learning rate and a momentum term (Nauck, Klawonn, Kruse, 1997). Kalman filtering is a gradient-based method that can give better fuzzy system and neural network training results than gradient descent (Simon, 2002a, 2002b). Constrained Kalman filtering can further improve fuzzy system results by optimally constraining the network parameters (Simon, 2002c). H-infinity estimation is another gradient-based method that can be used for fuzzy system training to improve robustness to data errors (Simon, 2005).
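As a rough illustration of the fine-tuning stage, the sketch below applies gradient descent with a momentum term to a toy differentiable cost, starting from a coarse estimate that stands in for an EA result. Everything in it is an assumption made for illustration: the function `fine_tune`, the fixed learning rate (no adaptive schedule is included), the toy cost, and the starting point are not taken from the neuro-fuzzy system or from the cited methods.

```python
def fine_tune(cost, grad, x0, lr=0.05, momentum=0.8, steps=200):
    """Gradient descent with momentum, starting from x0.

    Returns the best point seen and its cost, so the result is never
    worse than the starting point.
    """
    x = list(x0)
    velocity = [0.0] * len(x)
    best_x, best_cost = list(x), cost(x)
    for _ in range(steps):
        g = grad(x)
        velocity = [momentum * v - lr * gi for v, gi in zip(velocity, g)]
        x = [xi + v for xi, v in zip(x, velocity)]
        c = cost(x)
        if c < best_cost:
            best_x, best_cost = list(x), c
    return best_x, best_cost


# Toy differentiable cost with its minimum at (1, -2); not the RMS error of the paper.
def toy_cost(x):
    return (x[0] - 1.0) ** 2 + (x[1] + 2.0) ** 2


def toy_grad(x):
    return [2.0 * (x[0] - 1.0), 2.0 * (x[1] + 2.0)]


coarse = [0.7, -1.6]  # hypothetical stand-in for a parameter vector found by an EA
tuned, tuned_cost = fine_tune(toy_cost, toy_grad, coarse)
```

Tracking the best point seen is a deliberate choice here: momentum can overshoot, and the fine-tuning stage should never return something worse than the solution it was given.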

Training criterion

The ultimate goal of the neuro-fuzzy network is to maximize the percentage of correct classifications. If the neuro-fuzzy output is greater than 0, then the ECG is classified as cardiomyopathy; otherwise, the ECG is classified as non-cardiomyopathy. The bottom plot in Figure 6 shows that while RMS training error is monotonically nonincreasing, the success rate for the training data is non-monotonic. We could address the problem of ECG data classification more directly by using classification success rate as our fitness function rather than trying to minimize the RMS error of Eq. (2). That is, in fact, one of the advantages of EA training relative to gradient-based methods: the fitness function does not have to be differentiable. However, if we use classification success rate as our fitness function and then try to use a gradient-based method for fine-tuning, the cost functions of the two training methods would be inconsistent.
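The difference between the two criteria can be seen in a small sketch. It assumes that targets are encoded as +1 (cardiomyopathy) and -1 (non-cardiomyopathy) and that the RMS error has the usual root-mean-square form; both are assumptions, and the output values are invented for illustration.

```python
import math


def rms_error(outputs, targets):
    """Root-mean-square difference between network outputs and targets."""
    n = len(outputs)
    return math.sqrt(sum((y - t) ** 2 for y, t in zip(outputs, targets)) / n)


def success_rate(outputs, targets):
    """Fraction of samples classified correctly with a threshold at 0."""
    correct = sum((y > 0) == (t > 0) for y, t in zip(outputs, targets))
    return correct / len(outputs)


targets = [1, 1, -1, -1]  # assumed encoding: +1 cardiomyopathy, -1 otherwise

# Two hypothetical sets of network outputs for the same four samples.
outputs_a = [0.1, 0.1, -0.1, -0.1]  # all on the correct side of 0, far from targets
outputs_b = [1.0, 1.0, -1.0, 0.2]   # three exact matches, one misclassification

rms_a, rate_a = rms_error(outputs_a, targets), success_rate(outputs_a, targets)
rms_b, rate_b = rms_error(outputs_b, targets), success_rate(outputs_b, targets)
```

In this example the second set of outputs has the lower RMS error (about 0.6 versus 0.9) but the lower success rate (75% versus 100%), which is why a decrease in RMS error does not guarantee an increase in classification success.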

Conclusion

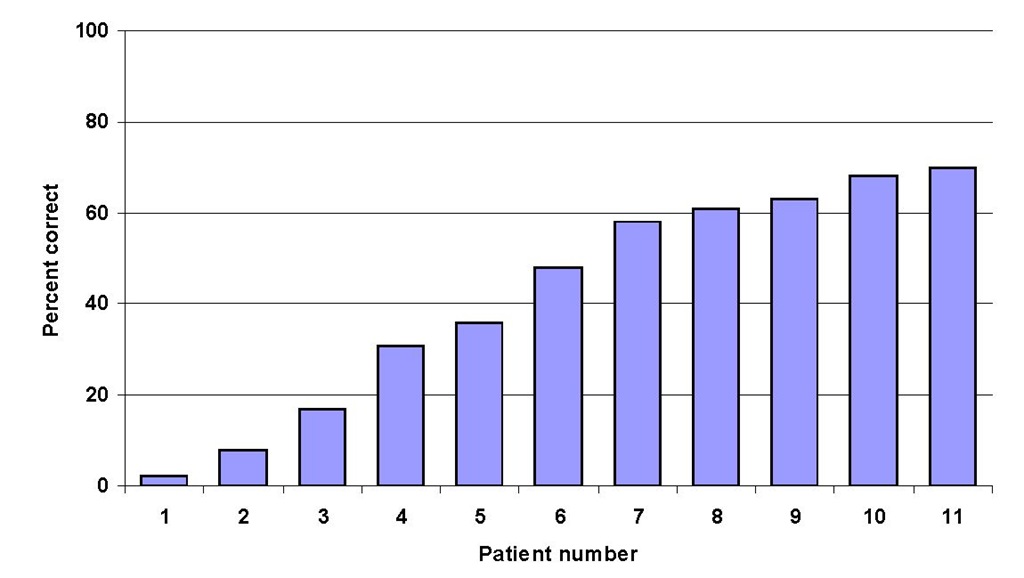

We have shown that clinical ECG data can be classified as cardiomyopathy or non-cardiomyopathy using a neuro-fuzzy network trained by biogeography-based optimization (BBO). Our results show a correct classification rate on test data of over 60%. Better results can undoubtedly be attained with further training, but the main goal of this initial research was to demonstrate feasibility and to establish a framework for further refinement. Although our preliminary results are good, many enhancements are needed in order to improve performance and incorporate this work into a commercial product.

For example, demographic information needs to be included with the ECG data. Figure 7 shows the classification success rate for the test data as a function of patient ID. Some patients generated ECG data that was successfully classified as cardiomyopathy / non-cardiomyopathy only 2% of the time, while others generated data that was successfully classified 100% of the time. This indicates that demographic data is important and that we should group similar patients together for testing and training. Relevant demographic data include gender, race, medication usage, and age. This grouping will become feasible as we perform more clinical studies and collect data from more patients.

Fig. 7. ECG classification success rate for test patients.

We note that our results are based on snapshots of the data at single instants of time. We could presumably get better results by using a "majority rules" strategy for data collected over several minutes. For example, suppose that test accuracy is 60% for a given patient. We could use ECG data at three separate time instants and diagnose cardiomyopathy if the neuro-fuzzy network predicts cardiomyopathy for at least two of the three samples. Assuming that the probability of correct classification is independent from one time instant to the next, this would boost test accuracy from 60% to about 65%, since 0.6^3 + 3(0.6)^2(0.4) = 0.648. We could then further improve accuracy by using more time instants.
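The majority-vote calculation generalizes to any odd number of time instants through the binomial distribution. The sketch below assumes, as the text does, that classifications at different time instants are independent and equally accurate; the function name `majority_accuracy` is only illustrative.

```python
from math import comb


def majority_accuracy(p, n):
    """Probability that a majority of n independent classifications is correct,
    given that each one is correct with probability p."""
    if n % 2 == 0:
        raise ValueError("n must be odd so that a majority always exists")
    k_min = n // 2 + 1  # smallest number of correct votes that forms a majority
    return sum(comb(n, k) * p**k * (1 - p) ** (n - k) for k in range(k_min, n + 1))


acc_3 = majority_accuracy(0.6, 3)  # 0.648, the "about 65%" case with three instants
acc_5 = majority_accuracy(0.6, 5)  # 0.68256 with five instants
```

The gain from additional time instants is real but gradual when the single-instant accuracy is only 60%, and it disappears if errors at successive instants are strongly correlated, which the independence assumption rules out.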

A strong reason for investigating this cardiac anomaly is its association with the occurrence of atrial fibrillation. Given the availability of ECG recordings and the time and cost savings that this approach offers, especially for cardiovascular surgical patients, future work could include incorporating this automated classification algorithm into a bedside monitor indicator, which might in turn be used in other classification and/or forecasting algorithms under investigation.

![tmp17-135_thumb[2][2] tmp17-135_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/05/tmp17135_thumb22_thumb.jpg)

![tmp17-136_thumb[2][2] tmp17-136_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/05/tmp17136_thumb22_thumb.jpg)