Graphics Reference

In-Depth Information

arises from the manufacturing process. Digital imagers are sensitive to thermal noise and have small divisions between pixels.

Since the simple model of a lens as an ideal focusing device and a sensor as an ideal photon measurement device yields higher image quality than a realistic camera model, there is little reason to use a more realistic model. Because lens flare, film grain, bloom, and vignetting are recognized as elements of realism from films, those effects are sometimes modeled using a post-processing pass. There is no need to model the true camera optics to produce these effects, since they are being added for aesthetics rather than realism. Note that this expectation arises purely from camera culture: except for bloom, none of these effects are observed by the naked eye.
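
As an illustration of such a post-processing pass, the following minimal Python sketch applies vignetting by darkening each pixel according to its distance from the image center. The quadratic falloff, the `apply_vignette` name, and the `strength` parameter are illustrative assumptions rather than a model of real lens optics, which is the point: the effect is added for aesthetics.

```python
import math


def apply_vignette(image, strength=0.5):
    """Darken pixels toward the image corners as a post-processing pass.

    `image` is a list of rows, each a list of (r, g, b) floats in [0, 1].
    `strength` (a hypothetical parameter) is the fraction of brightness
    removed at the corners; 0 leaves the image unchanged.
    """
    height = len(image)
    width = len(image[0]) if height else 0
    cx, cy = (width - 1) / 2.0, (height - 1) / 2.0
    # Distance from the center to a corner, used to normalize radii to [0, 1].
    max_r = math.hypot(cx, cy) or 1.0
    out = []
    for y, row in enumerate(image):
        new_row = []
        for x, (r, g, b) in enumerate(row):
            t = math.hypot(x - cx, y - cy) / max_r  # 0 at center, 1 at corners
            # Assumed quadratic falloff: weight 1 at center, 1 - strength at corners.
            w = 1.0 - strength * t * t
            new_row.append((r * w, g * w, b * w))
        out.append(new_row)
    return out
```

Because the pass only reads and rewrites finished pixel values, it can be appended to any renderer without changing how the image was produced.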

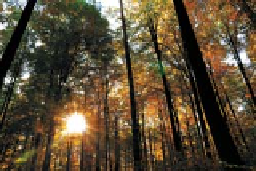

Figure 14.12: The streaks from the sun and apparently translucent-colored polygons and circles along a line through the sun in this photograph are a lens flare created by the intense light reflecting within the multiple lenses of the camera objective. Light from all parts of the scene makes these reflections, but most are so dim compared to the sun that their impact on the image is immeasurable. (Credit: Spiber/Shutterstock)

This section describes common models of object surfaces. Many rendering algorithms interact only with those surfaces. Some interact with the interior of objects, whose boundaries can still be represented by these methods. Section 14.7 briefly describes some representations for objects with substantial internal detail.

Some objects are modeled as thin, two-sided surfaces. A butterfly's wing and a thin sheet of cloth might be modeled this way. These models have zero volume: there is no “inside” to the model. More commonly, objects have volume, but the details inside are irrelevant. For an opaque object with volume, the surface typically represents the side seen from the outside of the object. There is no need to model the inner surface or interior details, because they are never seen (see Chapter 36). To eliminate the inner side of the skin of an object, polygons have an orientation. The *front face* of a polygon is the side indicated to face outward, and the *back face* is the side that faces inward. A process called *backface culling* eliminates the inward-facing side of each polygon early in the rendering process. Of course, this model is revealed as a single-sided, hollow skin should the viewer ever enter the model and attempt to observe the inside, as you saw in Chapter 6. This happens occasionally in games due to programming errors. Because there is no detail inside such an object and the back faces of the outer skin are not visible, in this case the entire model seems to disappear from view once the viewpoint passes through its surface.
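
One common way to implement backface culling for triangles is to compare each triangle's geometric normal with the direction toward the viewer. The sketch below assumes that a counterclockwise vertex order, seen from outside the object, marks the front face; this is a widespread convention but an assumption here, and the helper names are hypothetical.

```python
from typing import List, Tuple

Vec3 = Tuple[float, float, float]
Triangle = Tuple[Vec3, Vec3, Vec3]


def sub(a: Vec3, b: Vec3) -> Vec3:
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])


def cross(a: Vec3, b: Vec3) -> Vec3:
    return (a[1] * b[2] - a[2] * b[1],
            a[2] * b[0] - a[0] * b[2],
            a[0] * b[1] - a[1] * b[0])


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def is_back_facing(v0: Vec3, v1: Vec3, v2: Vec3, eye: Vec3) -> bool:
    """True if the triangle's front face points away from the eye.

    Assumes counterclockwise winding (v0 -> v1 -> v2, seen from outside)
    marks the front face. Edge-on triangles (dot product 0) are also culled.
    """
    normal = cross(sub(v1, v0), sub(v2, v0))  # outward normal under the CCW assumption
    to_eye = sub(eye, v0)
    return dot(normal, to_eye) <= 0.0


def cull_back_faces(triangles: List[Triangle], eye: Vec3) -> List[Triangle]:
    """Keep only triangles whose front face is visible from `eye`."""
    return [t for t in triangles if not is_back_facing(*t, eye)]
```

Every outward normal of a closed convex mesh points away from any point inside it, so placing `eye` inside such a mesh culls every triangle: this is the disappearing-model effect described above.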

Translucent objects naturally reveal their interior and back faces, so they require special consideration. They are often modeled either as a translucent, two-sided shell, or as two surfaces: an outside-to-inside interface and an inside-to-outside interface. The latter model is necessary for simulating refraction, which is sensitive to whether light rays are entering or leaving the object.
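
To see why the direction of crossing matters, the following sketch computes a refracted direction with the vector form of Snell's law. It uses the sign of the dot product between the ray and the surface's outward normal to decide which interface the ray crosses, then inverts the index ratio and flips the normal for a ray that is leaving. The function name and the requirement that `d` and `n` be unit vectors are assumptions for illustration.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def refract(d: Vec3, n: Vec3, n_outside: float, n_inside: float) -> Optional[Vec3]:
    """Refract the unit direction d at a surface with unit outward normal n.

    The sign of dot(d, n) says which interface the ray crosses:
    negative means outside-to-inside (entering), otherwise
    inside-to-outside (leaving). Returns None on total internal reflection.
    """
    cos_d = dot(d, n)
    if cos_d < 0.0:
        # Entering: ratio of outside index to inside index, normal as given.
        eta = n_outside / n_inside
        normal = n
        cos_i = -cos_d
    else:
        # Leaving: invert the ratio and flip the normal to face the ray.
        eta = n_inside / n_outside
        normal = (-n[0], -n[1], -n[2])
        cos_i = cos_d
    # Squared cosine of the transmitted angle, from Snell's law.
    k = 1.0 - eta * eta * (1.0 - cos_i * cos_i)
    if k < 0.0:
        return None  # total internal reflection
    scale = eta * cos_i - math.sqrt(k)
    return (eta * d[0] + scale * normal[0],
            eta * d[1] + scale * normal[1],
            eta * d[2] + scale * normal[2])
```

For example, with `n_outside = 1.0` and `n_inside = 1.5` (roughly air and glass), a ray at normal incidence passes straight through, while a grazing ray trying to leave the glass is totally internally reflected and the function returns `None`. A one-sided shell could not distinguish these two cases.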

Surface and object geometry is useful for more than rendering. Intersections of geometry are used for modeling and simulation. For example, we can model an ice-cream cone with a bite taken out as a cone topped by a hemisphere, with some smaller balls subtracted from the hemisphere. Simulation systems often use *collision proxy geometry* that is substantially simpler than the geometry that is rendered. A character modeled as a mesh of 1 million polygons might be simulated as a collection of 20 ellipsoids. Detecting the intersection of a small number of ellipsoids is more computationally efficient than detecting the intersection of a large number of polygons, yet the resulting inaccuracy of the simulation may be barely perceptible.
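
The ice-cream example is an instance of constructive solid geometry (CSG). One simple way to evaluate such a model is point-membership classification: each primitive reports whether a point lies inside it, and union and difference combine those answers with Boolean operators. The sketch below assumes a cone with its apex at the origin opening along +z, a hemispherical scoop centered on the cone's rim, and two spherical bites; all names and dimensions are hypothetical.

```python
import math
from typing import Callable, Tuple

Point = Tuple[float, float, float]
Solid = Callable[[Point], bool]  # True when the point lies inside the solid


def cone(height: float, top_radius: float) -> Solid:
    """Solid cone with its apex at the origin, opening upward along +z."""
    def inside(p: Point) -> bool:
        x, y, z = p
        if z < 0.0 or z > height:
            return False
        # The cross-section radius grows linearly from 0 at the apex.
        return math.hypot(x, y) <= top_radius * z / height
    return inside


def ball(center: Point, radius: float) -> Solid:
    """Solid sphere."""
    cx, cy, cz = center
    def inside(p: Point) -> bool:
        x, y, z = p
        return (x - cx) ** 2 + (y - cy) ** 2 + (z - cz) ** 2 <= radius ** 2
    return inside


def hemisphere(center: Point, radius: float) -> Solid:
    """Upper half (z >= center z) of a solid sphere."""
    full = ball(center, radius)
    return lambda p: p[2] >= center[2] and full(p)


def union(a: Solid, b: Solid) -> Solid:
    return lambda p: a(p) or b(p)


def difference(a: Solid, b: Solid) -> Solid:
    return lambda p: a(p) and not b(p)


# Hypothetical dimensions: cone height H, scoop radius equal to the rim radius R.
H, R = 10.0, 3.0
scoop = hemisphere((0.0, 0.0, H), R)
bites = union(ball((2.5, 0.0, H + 1.5), 1.0), ball((1.5, 0.0, H + 2.5), 1.0))
# The cone topped by the scoop, with the bites subtracted from the scoop only.
ice_cream = union(cone(H, R), difference(scoop, bites))
```

Ray tracers often evaluate CSG differently, for instance by intersecting a ray with each primitive and combining the resulting intervals, but the Boolean structure of the model is the same.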