
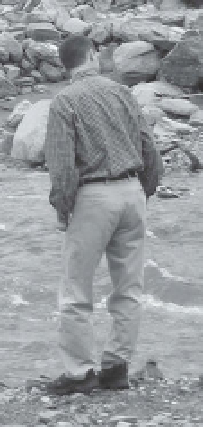

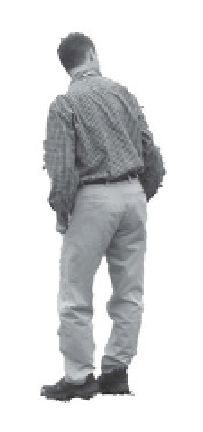

Figure 7.20. Estimating human pose from a single image (a) is a difficult computer vision problem. Even if the human can be automatically segmented from the image as in (b), loose clothing, confounding textures, and kinematic ambiguities mean that many degrees of freedom are estimated poorly.

difficult to individually segment, and the right knee has a bulge of fabric far from the actual joint. Image edges can help separate an image of a human into body parts for a bottom-up segmentation, but edges can also be confounding (e.g., a striped shirt).

We also face kinematic ambiguities. For example, in Figure 7.20 the left arm is foreshortened and the position of the left elbow joint is unclear; the right wrist and hand are completely obscured. Even with high-resolution cameras, it's also difficult to resolve rotations of the arm bones around their axes; for example, the orientation of the left hand in Figure 7.20 is difficult to guess. Sminchisescu and Triggs [457] estimated that up to a third of the underlying degrees of freedom in a kinematic model are usually not observable from a given viewpoint due to self-occlusions and rotational ambiguities. The poses of hands and feet are especially difficult to determine, which is why markerless systems frequently don't include degrees of freedom for the wrist and ankle joints.

Many markerless algorithms discard the original image entirely in favor of a silhouette of the human, estimated using background subtraction (i.e., matting; see Chapter 2). This exacerbates the problems illustrated in Figure 7.20 and introduces new ones.
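As a minimal sketch of how such a silhouette might be computed, the Python code below thresholds the per-pixel difference between a frame and a clean background plate. It assumes a static camera, an empty background image captured in advance, and a hand-picked threshold; the function name and the threshold of 0.1 are illustrative rather than taken from any particular system, and the matting methods of Chapter 2 are considerably more robust. The usage lines also show the information loss described above: two frames with different clothing texture inside the body yield identical silhouettes.

```python
import numpy as np

def silhouette_from_background(frame, background, threshold=0.1):
    """Binary silhouette via naive background subtraction (illustrative helper).

    Assumes a static camera and a background plate captured with no one in it.
    Pixel values are floats in [0, 1]; color images have shape (H, W, 3).
    """
    diff = np.abs(frame.astype(float) - background.astype(float))
    if diff.ndim == 3:
        # Color input: a pixel is foreground if any channel changed enough.
        diff = diff.max(axis=2)
    return diff > threshold

# Usage: a plain background and a "person" occupying a 10x4 block of pixels.
background = np.zeros((20, 20, 3))
plain = background.copy()
plain[5:15, 8:12] = 0.6                 # uniformly colored clothing
striped = plain.copy()
striped[5:15:2, 8:12] = 0.9             # same body, striped clothing

sil_plain = silhouette_from_background(plain, background)
sil_striped = silhouette_from_background(striped, background)
print(sil_plain.sum())                          # 40 foreground pixels
print(np.array_equal(sil_plain, sil_striped))   # True: interior texture is lost
```

Whatever the stripes or folds inside the body look like, only the outline survives, which is exactly the information a silhouette-based tracker has to work with.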

In Figure 7.21a-b, we can see that it's impossible to disambiguate the right limbs of the body from the left limbs by looking at the silhouette, leading to major ambiguities in interpretation. It's also difficult to resolve depth ambiguities (e.g., whether a foreshortened arm is pointed toward the camera or away from it). More generally, two very different poses can have similar silhouettes. Consequently, small changes in a silhouette can correspond to large changes in pose, as with an arm at the side in Figure 7.21a versus an arm pointing outward in Figure 7.21c. Therefore, several silhouettes from different perspectives are required to obtain a highly accurate pose estimate.
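To make the depth ambiguity concrete, the following Python sketch builds two crude voxel "bodies" that differ only in whether a foreshortened arm points toward or away from the camera, then compares their silhouettes under two orthographic views. The voxel grid, the box-shaped body parts, the axis-aligned orthographic projections, and the pixel-count (XOR) mismatch score are all simplifying assumptions for illustration; a real system would render an articulated body model through calibrated perspective cameras. The front view cannot tell the two poses apart, while a second, side-on view separates them.

```python
import numpy as np

GRID = 32  # illustrative voxel resolution; volumes are indexed [x, y, z]

def make_body(arm_toward_camera: bool) -> np.ndarray:
    """Box torso plus one box arm along +z (toward the front camera) or -z."""
    vol = np.zeros((GRID, GRID, GRID), dtype=bool)
    c = GRID // 2
    vol[c - 3:c + 3, 4:24, c - 2:c + 2] = True          # torso
    if arm_toward_camera:
        vol[c - 1:c + 1, 18:20, c + 2:c + 12] = True    # arm points at the camera
    else:
        vol[c - 1:c + 1, 18:20, c - 12:c - 2] = True    # arm points away from it
    return vol

def silhouettes(vol: np.ndarray) -> dict:
    """Orthographic silhouettes: a pixel is on if any voxel along its ray is filled."""
    return {
        "front": vol.any(axis=2),  # camera looking along z
        "side": vol.any(axis=0),   # camera looking along x
    }

def silhouette_mismatch(vol_a: np.ndarray, vol_b: np.ndarray) -> dict:
    """Per-view count of pixels where the two silhouettes disagree (XOR)."""
    sa, sb = silhouettes(vol_a), silhouettes(vol_b)
    return {view: int(np.count_nonzero(sa[view] ^ sb[view])) for view in sa}

print(silhouette_mismatch(make_body(True), make_body(False)))
# {'front': 0, 'side': 40}
```

A pose optimizer that scored candidate poses only against the front silhouette would find both arm directions equally good; summing the mismatch over both views makes the correct one strictly better.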

Figure 7.22 illustrates that some uncertainties can be mitigated if we also use edge information inside the silhouette. For example, the location of an arm crossed in front of the body might be better estimated if the boundary between the forearm and torso can be found with an edge detector.
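A minimal sketch of this idea in Python follows: it computes Sobel gradient magnitudes and keeps only strong edges that lie strictly inside an eroded silhouette, so the silhouette's own outline is ignored. The synthetic scene (a forearm held across the torso, slightly brighter than the shirt), the threshold, the erosion margin, and the helper names are assumptions for illustration; the Sobel operator and binary erosion are written out in plain NumPy to keep the example self-contained. Because the forearm lies entirely within the torso's outline, the silhouette carries no trace of it, while the interior edges outline it.

```python
import numpy as np

def sobel_magnitude(img: np.ndarray) -> np.ndarray:
    """Gradient magnitude from the 3x3 Sobel operator (edge-replicated borders)."""
    p = np.pad(img.astype(float), 1, mode="edge")
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) - (p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])
    return np.hypot(gx, gy)

def erode(mask: np.ndarray, iterations: int = 1) -> np.ndarray:
    """Binary erosion with a 3x3 square: keep pixels whose whole neighborhood is in the mask."""
    m = mask.astype(bool)
    h, w = m.shape
    for _ in range(iterations):
        p = np.pad(m, 1, mode="constant", constant_values=False)
        out = np.ones((h, w), dtype=bool)
        for dy in range(3):
            for dx in range(3):
                out &= p[dy:dy + h, dx:dx + w]
        m = out
    return m

def interior_edges(img, silhouette, threshold=0.1, margin=2):
    """Edges inside the silhouette, excluding a band of `margin` pixels at its outline."""
    return (sobel_magnitude(img) > threshold) & erode(silhouette, margin)

# Synthetic frame: torso (0.5) with a forearm (0.8) crossed in front of it.
img = np.zeros((40, 40))
img[5:35, 10:30] = 0.5
img[18:22, 12:28] = 0.8
silhouette = img > 0                    # the forearm adds nothing to the outline

edges = interior_edges(img, silhouette)
print(edges[17, 20], edges[18, 20])     # True True: forearm/torso boundary found
print(edges[20, 20])                    # False: interior of the forearm is flat
```

The same filter would also fire on a striped shirt, so in practice interior edges are a cue to be weighed against the body model rather than a direct segmentation.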