Hardware Reference

In-Depth Information

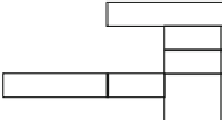

I1

I2

Out-of-order

Branch

Instruction Fetch

Branch Search / Instruction Pre-decoding

I3

ID

E1

E2

E3

E4

E5

E6

E7

Branch

Instruction

Decoding

FPU Instruction

Decoding

Address

Tag

Execution

Data

Load

FPU

Data

Transfer

FPU

Arithmetic

Execution

-

WB

WB

Data

Store

WB

Store Buffer

WB

Flexible Forwarding

BR

INT

LS

FE

Fig. 3.19

Eight-stage superpipeline structure of SH-X2

Figure

3.19

illustrates the pipeline structure of the SH-X2. The I3 stage was

added and performs branch search and instruction predecoding. Then the ID stage

timing was relaxed, and the achievable frequency increased.

Another critical timing path was in first-level (L1) memory access logic. SH-X

had L1 memories of a local memory and I- and D-caches, and the local memory was

unified for both instruction and data accesses. Since all the memories could not be

placed closely, a memory separation for instruction and data was good to relax the

critical timing path. Therefore, the SH-X2 separated the unified L1 local memory of

the SH-X into instruction and data local memories (ILRAM and OLRAM).

With the other various timing tuning, the SH-X2 achieved 800 MHz using a

90-nm generic process from the SH-X's 400 MHz using a 130-nm process. The

improvement was far higher than the process porting effect.

3.1.4.2

Low-Power Technologies of SH-X2

The SH-X2 enhanced the low-power technologies from that of the SH-X explained in

Sect.

3.1.3.4

. Figure

3.20

shows the clock-gating method of the SH-X2. The D-drivers

also gate the clock with the signals dynamically generated by hardware, and the leaf

F/Fs requires no CCP. As a result, the clock tree and total powers are 14% and 10%

lower, respectively, than in the SH-X method.

The SH-X2 adopted a way prediction method to the instruction cache. The SH-X2

aggressively fetched the instructions using branch prediction and early-stage branch

techniques to compensate branch penalty caused by long pipeline. The power con-

sumption of the instruction cache reached 17% of the SH-X2, and the 64% of the

instruction cache power was consumed by data arrays. The way prediction misses

were less than 1% in most cases and were 0% for the Dhrystone 2.1. Then the 56%

of the array access was eliminated by the prediction for the Dhrystone. As a result,

the instruction cache power was reduced by 33%, and the SH-X2 power was reduced

by 5.5%.

Search WWH ::

Custom Search