Hardware Reference

The SIMD processing requirements within the SMs place constraints on the kind
of code programmers can run on these units. In fact, each CUDA core must be
running the same code in lock-step to achieve 16 operations simultaneously. To
ease this burden on the programmer, NVIDIA developed the CUDA programming
language. The CUDA language specifies the program parallelism using threads.
Threads are then grouped into blocks, which are assigned to streaming
multiprocessors. As long as every thread in a block executes exactly the same
code sequence (that is, all branches make the same decision), up to 16
operations will execute simultaneously (assuming there are 16 threads ready to
execute). When threads on an SM make different branch decisions, a
performance-degrading effect called branch divergence will occur that forces
threads with differing code paths to execute serially on the SM. Branch
divergence reduces parallelism and slows GPU processing. Fortunately, there is
a wide range of activities in graphics and image processing that can avoid
branch divergence and achieve good speed-ups. Many other codes have also been
shown to benefit from the SIMD-style architecture on graphics processors, such
as medical imaging, proof solving, financial prediction, and graph analysis.
This widening of potential applications for GPUs has earned them the new
moniker of GPGPUs (General-Purpose Graphics Processing Units).
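The cost of branch divergence can be made concrete with a small toy model. The sketch below is not GPU code and does not use any NVIDIA API. It is a plain Python simulation that assumes a group of up to 16 lanes executing an if/else in lock-step, as described above. The names `LANES` and `simulate_branch` and the per-path cost parameters are hypothetical and introduced only for illustration. The model also assumes that each branch path that at least one thread takes must be issued once for the whole group, with the lanes on the other path sitting idle.

```python
# Toy model of lock-step execution (an illustration, not real GPU behavior).
LANES = 16  # operations that can execute simultaneously, per the text


def simulate_branch(branch_taken, then_cost=1, else_cost=1):
    """Model one if/else executed in lock-step by a group of threads.

    branch_taken: one boolean per thread (True = takes the 'then' path).
    then_cost / else_cost: issue slots each path needs (hypothetical units).
    Returns (issue_slots, utilization), where utilization is the fraction
    of lane-slots that did useful work.
    """
    n = len(branch_taken)
    if not 0 < n <= LANES:
        raise ValueError("expected between 1 and %d threads" % LANES)

    taken = sum(branch_taken)   # threads on the 'then' path
    not_taken = n - taken       # threads on the 'else' path

    slots = 0
    useful = 0
    if taken:                   # the 'then' path is issued once for the group
        slots += then_cost
        useful += taken * then_cost
    if not_taken:               # the 'else' path is issued separately (serialized)
        slots += else_cost
        useful += not_taken * else_cost

    return slots, useful / (slots * LANES)


# All 16 threads agree: one pass, every lane busy.
print(simulate_branch([True] * 16))                        # (1, 1.0)
# Threads alternate between paths: two serialized passes, half the lanes idle.
print(simulate_branch([i % 2 == 0 for i in range(16)]))    # (2, 0.5)
# A single dissenting thread is enough to force the second pass.
print(simulate_branch([True] * 15 + [False]))              # (2, 0.5)
```

In this model a single thread that branches differently costs as much as an even split, which is why code for such hardware is usually arranged so that threads scheduled together make the same branch decision.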

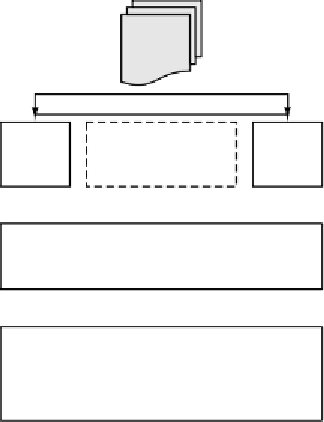

Figure 8-18. The Fermi GPU memory hierarchy. Levels shown: threads; 16-KB
shared memory, 48-KB shared memory or L1 cache, and 16-KB L1 cache; 768-KB L2
cache; DRAM.

With 512 CUDA cores, the Fermi GPU would grind to a halt without significant
memory bandwidth. To provide this bandwidth, the Fermi GPU implements a modern
memory hierarchy as illustrated in Fig. 8-18. Each SM has both a dedicated
shared memory and a private level 1 data cache. The dedicated shared memory