Information Technology Reference

In-Depth Information

$$y_p = \sum_{i=1}^{n} w_{ji}\, x_i ,$$

where $n$ is the number of input layer neurons connected with the activated neuron.

Using the learnt and stored set of weights, the network is capable of recognizing the learnt pattern and the patterns in its neighbourhood, because similar inputs will activate the same Kohonen neuron.
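The recognition step can be illustrated with a minimal sketch. It assumes a winner-take-all rule, in which the activated Kohonen neuron is the one with the largest weighted sum of its inputs; the text does not spell out this rule. The function names and the weight values are hypothetical, chosen only for illustration.

```python
def kohonen_activations(weights, x):
    """Weighted sum of the inputs for every Kohonen neuron j.

    weights[j][i] is the weight from input neuron i to Kohonen neuron j.
    """
    return [sum(w_ji * x_i for w_ji, x_i in zip(row, x)) for row in weights]


def winning_neuron(weights, x):
    """Index of the Kohonen neuron with the largest weighted sum.

    Winner-take-all selection is an assumption of this sketch.
    """
    acts = kohonen_activations(weights, x)
    return max(range(len(acts)), key=acts.__getitem__)


# Hypothetical stored weights: two Kohonen neurons, three input neurons.
weights = [[1.0, 0.0, 0.0],
           [0.0, 0.6, 0.8]]

learnt = [0.0, 0.6, 0.8]     # a pattern the network has learnt
nearby = [0.1, 0.55, 0.83]   # a slightly perturbed version of it

print(winning_neuron(weights, learnt))
print(winning_neuron(weights, nearby))
```

In this example both the learnt pattern and its perturbed neighbour select the same neuron, which is the behaviour described above.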

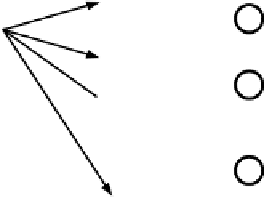

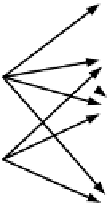

After locating the Kohonen neuron, we turn to the Grossberg layer, i.e. the output layer of the network, and train it. To produce the desired mapping of the pattern at the network output using the output of the activated Kohonen neuron, all we need is to connect this neuron with each neuron in the Grossberg layer using the corresponding weights. As a result, a star connection between the Kohonen neuron and the network output, known as Grossberg's outstar, builds the output vector $(y_{1p}, y_{2p}, \ldots, y_{mp})$, as shown in Figure 3.10.

Figure 3.10. Outstar of counterpropagation network (input layer $x_{1p}, x_{2p}, \ldots, x_{np}$; Kohonen layer; output layer $y_{1p}, y_{2p}, \ldots, y_{mp}$)
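A minimal sketch of the outstar may help here. It assumes that only the winning Kohonen neuron emits a unit output, so the network output is simply the set of weights leading from that neuron to the Grossberg layer. The update rule used for training (moving those weights a fraction `rate` of the way towards the desired output) is the commonly used outstar rule and is an assumption, since the text does not state it. All names and values are hypothetical.

```python
def outstar_output(grossberg, winner):
    """Output vector built by the outstar of the winning Kohonen neuron.

    grossberg[k][j] is the weight from Kohonen neuron j to output neuron k.
    """
    return [row[winner] for row in grossberg]


def train_outstar(grossberg, winner, target, rate=0.5):
    """Move the winner's outgoing weights towards the desired output, in place.

    The rule v += rate * (d - v) is an assumption of this sketch.
    """
    for k, d_k in enumerate(target):
        grossberg[k][winner] += rate * (d_k - grossberg[k][winner])


# Hypothetical setup: two output neurons, two Kohonen neurons, zero weights.
grossberg = [[0.0, 0.0],
             [0.0, 0.0]]
target = [1.0, -1.0]   # desired output for the pattern that activates neuron 1

for _ in range(20):
    train_outstar(grossberg, winner=1, target=target)

y = outstar_output(grossberg, winner=1)
print(y)
```

Only the weights leaving the activated neuron are changed, so the outstars of the other Kohonen neurons remain untouched.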

The input vectors of a counterpropagation network should generally be normalized, i.e. they should satisfy the relation

$$\|x\| = 1.$$

The normalization can be carried out by decreasing or increasing the vector length to be on the unit sphere using the relation

$$x' = \frac{x}{\|x\|}.$$
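As a short illustration, the normalization can be sketched as follows, assuming the Euclidean norm is meant. The function name `normalize` and the sample vector are hypothetical.

```python
import math


def normalize(x):
    """Scale x to unit Euclidean length so that it lies on the unit sphere."""
    norm = math.sqrt(sum(v * v for v in x))
    if norm == 0.0:
        raise ValueError("the zero vector cannot be normalized")
    return [v / norm for v in x]


x = [3.0, 4.0]            # length 5, so it must be shortened
x_unit = normalize(x)
length = math.sqrt(sum(v * v for v in x_unit))
print(x_unit, length)
```

Vectors longer than one are shortened and vectors shorter than one are lengthened, while the direction is preserved. The zero vector has no direction, so the sketch rejects it.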

The question that remains is how to initialize the weight vectors before the network training starts. Initializing with randomized weight vectors has not always given reliable learning results, and in some cases it has even created serious problems in reaching a solution. The way out was found in using the convex combination
