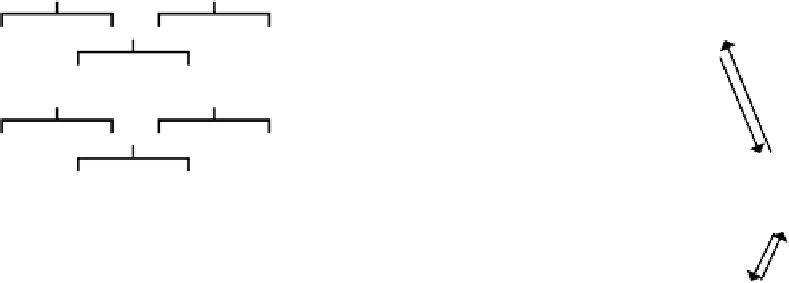

English family: Christo=Penny, Andy=Christi, Marge=Art, Vicky=James, Jenn=Chuck, Colin, Charlot

Italian family: Rob=Maria, Pierro=Francy, Gina=Emilio, Angela=Tomaso, Lucia=Marco, Alf, Sophia

Figure 6.7: The family tree structure learned in the family trees task. There are two isomorphic families, one English and one Italian. The = symbol indicates marriage.


6.4.1 Exploration of a Deep Network


Now, let's explore the case of learning in a deep network using the same family trees task as O'Reilly (1996b) and Hinton (1986). The structure of the environment is shown in figure 6.7. The network is trained to produce the correct name in response to questions like “Rob is married to whom?” These questions are presented by activating one of 24 name units in an agent input layer (e.g., “Rob”), in conjunction with one of 12 units in a relation input layer (e.g., “Married”), and training the network to produce the correct unit activation over the patient output layer.
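The question format described above can be sketched as localist (one-hot) patterns. This is only an illustration, not the simulator's own event format: the names come from figure 6.7, but the ordering of units, the function names `one_hot` and `encode_question`, and every relation label other than "Married" are assumptions made here.

```python
# Names as they appear in figure 6.7 (the unit ordering is an assumption).
ENGLISH = ["Christo", "Penny", "Andy", "Christi", "Marge", "Art",
           "Vicky", "James", "Jenn", "Chuck", "Colin", "Charlot"]
ITALIAN = ["Rob", "Maria", "Pierro", "Francy", "Gina", "Emilio",
           "Angela", "Tomaso", "Lucia", "Marco", "Alf", "Sophia"]
NAMES = ENGLISH + ITALIAN  # 24 name units

# Only "Married" is named in the text; the other 11 labels are placeholders.
RELATIONS = ["Married"] + [f"relation_{i}" for i in range(2, 13)]  # 12 units


def one_hot(label, vocabulary):
    """Return a localist pattern: 1.0 for the unit matching label, else 0.0."""
    if label not in vocabulary:
        raise ValueError(f"unknown label: {label!r}")
    return [1.0 if item == label else 0.0 for item in vocabulary]


def encode_question(agent, relation, patient):
    """Build the agent/relation inputs and the patient target for one event."""
    return {
        "agent": one_hot(agent, NAMES),
        "relation": one_hot(relation, RELATIONS),
        "patient": one_hot(patient, NAMES),
    }


# "Rob is married to whom?" has the answer "Maria" (Rob=Maria in figure 6.7).
event = encode_question("Rob", "Married", "Maria")
```

Each pattern has exactly one active unit, so any two different people share no active units in the input.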

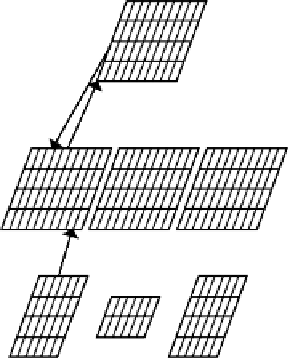

Figure 6.8: The family tree network, with intermediate Code hidden layers that re-represent the input/output patterns.

Open project family_trees.proj.gz in chapter_6.

Pressing View and selecting EVENTS on the family_trees_ctrl control panel will bring up a window displaying the first ten training events, which should help you to understand how the task is presented to the network.

Go ahead and scroll down the events list, and see the names of the different events presented to the network (you can click on any that look particularly interesting to see how they are represented).

Now, let's see how this works with the network itself.

First, notice that the network (figure 6.8) has Agent and Relation input layers, and a Patient output layer, all at the bottom of the network. These layers have localist representations of the 24 different people and 12 different relationships, which means that there is no “overt” similarity in these input patterns between any of the people. Thus, the Agent_Code, Relation_Code, and Patient_Code hidden layers provide a means for the network to re-represent these localist representations as richer distributed patterns that should facilitate the learning of the mapping by emphasizing relevant distinctions and deemphasizing irrelevant ones. The central Hidden layer is responsible for performing the mapping between these recoded representations to produce the correct answers.
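The flow of activation through the layers just described can be sketched as a plain feedforward pass. This is a deliberate simplification and not the simulator's algorithm: the sigmoid units, the random weights, and the sizes `N_CODE` and `N_HIDDEN` are assumptions chosen for illustration, and only the counts of 24 names and 12 relations come from the text.

```python
import math
import random

N_NAMES, N_RELATIONS = 24, 12   # from the text
N_CODE, N_HIDDEN = 6, 12        # hypothetical sizes, chosen for illustration


def make_weights(n_in, n_out, rng):
    """Random weight matrix stored as n_out rows of n_in weights."""
    return [[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)]


def layer(inputs, weights):
    """Sigmoid units: each output is a squashed weighted sum of the inputs."""
    return [1.0 / (1.0 + math.exp(-sum(w * x for w, x in zip(row, inputs))))
            for row in weights]


def forward(agent, relation, w):
    """Localist inputs -> code layers -> Hidden -> Patient_Code -> Patient."""
    agent_code = layer(agent, w["agent_code"])
    relation_code = layer(relation, w["relation_code"])
    hidden = layer(agent_code + relation_code, w["hidden"])
    patient_code = layer(hidden, w["patient_code"])
    patient = layer(patient_code, w["patient"])
    return {"agent_code": agent_code, "relation_code": relation_code,
            "hidden": hidden, "patient_code": patient_code, "patient": patient}


rng = random.Random(0)
weights = {
    "agent_code": make_weights(N_NAMES, N_CODE, rng),
    "relation_code": make_weights(N_RELATIONS, N_CODE, rng),
    "hidden": make_weights(2 * N_CODE, N_HIDDEN, rng),
    "patient_code": make_weights(N_HIDDEN, N_CODE, rng),
    "patient": make_weights(N_CODE, N_NAMES, rng),
}

agent = [1.0 if i == 12 else 0.0 for i in range(N_NAMES)]        # one name unit on
relation = [1.0 if i == 0 else 0.0 for i in range(N_RELATIONS)]  # one relation unit on
acts = forward(agent, relation, weights)
```

With a localist input, only one weight per receiving unit contributes to each code unit, so the code layer's pattern for a person is determined by that single column of weights. Learning those columns is what lets similar people acquire similar distributed codes.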

Press the Step button in the control panel.

The activations in the network display reflect the minus phase state for the first training event (selected at random from the list of all training events).

Press Step again to see the plus phase activations.

The default network is using a combination of Hebbian and GeneRec error-driven learning, with the amount of Hebbian learning set to 0.01 as reflected by the lrn.hebb parameter in the control panel. Let's see how long it takes this network to learn the task.
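The combination of Hebbian and error-driven learning can be sketched for a single weight as follows. This is a minimal sketch under stated assumptions: the two terms are mixed as a weighted sum, the error term uses the symmetric (contrastive Hebbian) form of GeneRec computed from minus- and plus-phase activations, and soft weight bounding is omitted. The `hebb` default of 0.01 mirrors the lrn.hebb value in the text; the function names and the `lrate` value are hypothetical.

```python
def hebbian_delta(x_plus, y_plus, w):
    """Hebbian term: moves w toward the sender activity, gated by the receiver."""
    return y_plus * (x_plus - w)


def error_delta(x_minus, y_minus, x_plus, y_plus):
    """Error term: plus-phase coproduct minus minus-phase coproduct."""
    return x_plus * y_plus - x_minus * y_minus


def weight_update(w, x_minus, y_minus, x_plus, y_plus, hebb=0.01, lrate=0.01):
    """Return the new weight after mixing the Hebbian and error-driven terms.

    hebb is the proportion of Hebbian learning (lrn.hebb in the text);
    lrate is a hypothetical learning rate chosen for illustration.
    """
    dw = (hebb * hebbian_delta(x_plus, y_plus, w)
          + (1.0 - hebb) * error_delta(x_minus, y_minus, x_plus, y_plus))
    return w + lrate * dw


# Sender x is clamped at 1.0 in both phases; receiver y goes from 0.2 (minus
# phase, the network's guess) to 0.8 (plus phase, the correct answer present).
new_w = weight_update(0.5, x_minus=1.0, y_minus=0.2, x_plus=1.0, y_plus=0.8)
```

With `hebb` at 0.01 the update is dominated by the error term, so the weight changes only slightly when the minus and plus phase activations already agree.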

Open up a graph log to monitor training by pressing View, TRAIN_GRAPH_LOG, turn the Display of the network off, and press Run to allow the network to train on all the events rapidly.

As the network trains, the graph log displays the error count statistic for training (in red) and the average number of network settling cycles (in orange).

Press View and select EVENTS on the family_trees_ctrl control panel.
