
is clearly true of the cortex, where in the visual system, for example, the original retinal array is re-represented in a large number of different ways, many of which build upon each other over many “hidden layers” (Desimone & Ungerleider, 1989; Van Essen & Maunsell, 1983; Maunsell & Newsome, 1987). We explore a multi-hidden-layer model of visual object recognition in chapter 8.

A classic example of a problem that benefits from multiple hidden layers is the family trees problem of Hinton (1986). In this task, the network learns the family relationships for two isomorphic families. Learning is facilitated by re-representing the individuals in an intermediate hidden layer in a way that more specifically encodes their functional similarities (i.e., individuals who enter into similar relationships are represented similarly). The importance of this kind of re-representation was emphasized in chapters 3 and 5 as a central property of cognition.
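To make the task format concrete, here is a hypothetical miniature in the spirit of the family trees problem. It is far smaller than Hinton's original and uses invented names and only a few relations. Each fact is a (person, relation, person) triple: the network receives the first person and the relation as localist (one-hot) inputs and must produce the second person. The two families are isomorphic, meaning a one-to-one mapping of people carries every fact in one family onto a fact in the other. `one_hot` and `make_patterns` are illustrative helpers, not part of any particular simulator.

```python
# Hypothetical miniature of a family-trees-style task (names invented for
# illustration). Facts are (person, relation, person) triples.
FAMILY_A = {
    ("Ann", "husband", "Bob"), ("Bob", "wife", "Ann"),
    ("Ann", "son", "Cal"), ("Bob", "son", "Cal"),
    ("Cal", "mother", "Ann"), ("Cal", "father", "Bob"),
}

# One-to-one mapping of people in family A onto family B; because the
# families are isomorphic, mapping every triple yields all of family B.
A_TO_B = {"Ann": "Ada", "Bob": "Ben", "Cal": "Cyd"}
FAMILY_B = {(A_TO_B[p1], rel, A_TO_B[p2]) for p1, rel, p2 in FAMILY_A}

PEOPLE = sorted(A_TO_B) + sorted(A_TO_B.values())
RELATIONS = sorted({rel for _, rel, _ in FAMILY_A})


def one_hot(item, vocab):
    """Localist code: exactly one active unit, the one for `item`."""
    return [1 if v == item else 0 for v in vocab]


def make_patterns(triples):
    """Input = person1 code + relation code; target = person2 code."""
    patterns = []
    for p1, rel, p2 in sorted(triples):
        x = one_hot(p1, PEOPLE) + one_hot(rel, RELATIONS)
        y = one_hot(p2, PEOPLE)
        patterns.append((x, y))
    return patterns


patterns = make_patterns(FAMILY_A | FAMILY_B)
print(len(patterns), len(patterns[0][0]), len(patterns[0][1]))  # 12 11 6

# Localist codes are mutually orthogonal: the input gives no hint that
# Ann and Ada occupy the same position in their respective families.
ann, ada = one_hot("Ann", PEOPLE), one_hot("Ada", PEOPLE)
print(sum(a * b for a, b in zip(ann, ada)))  # 0
```

Because every localist input is orthogonal to every other, any similarity between individuals who play corresponding roles must be built up in the hidden layers, which is the re-representation described above.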

However, the depth of the network used in this example makes the problem difficult for purely error-driven learning: a standard backpropagation network takes thousands of epochs to solve it (although carefully chosen parameters can reduce this to around 100 epochs). Furthermore, the poor depth scaling of error-driven learning is even worse in bidirectionally connected networks, where training takes twice as long as in the feedforward case and seven times longer than when a combination of Hebbian and error-driven learning is used (O'Reilly, 1996b).

This example, which we will explore in the subse-

quent section, is consistent with the general account of

the problems with remote error signals in deep networks

presented previously (section 6.2.1). We can elaborate

this example by considering the analogy of balancing a

stack of poles (figure 6.6). It is easier to balance one tall

pole than an equivalently tall stack of poles placed one

atop the other, because the corrective movements made

by moving the base of the pole back and forth have a

direct effect on a single pole, while the effects are indi-

rect on poles higher up in a stack. Thus, with a stack

of poles, the corrective movements made on the bot-

tom pole have increasingly remote effects on the poles

higher up, and the nature of these effects depends on

the position of the poles lower down. Similarly, the er-

a)

b)

Self−organizing

Learning

Gyroscopes

Flexibility

Limits

Constraints

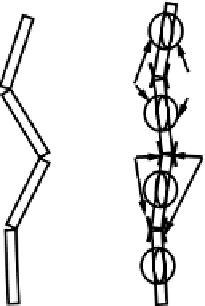

Figure 6.6:

Illustration of the analogy between error-driven

learning in deep networks and balancing a stack of poles,

which is more difficult in

a)

than in

b)

. Hebbian self-

organizing model learning is like adding a gyroscope to each

pole, as it gives each layer some guidance (stability) on how to

learn useful representations. Constraints like inhibitory com-

petition restrict the flexibility of motion of the poles.

ror signals in a deep network have increasingly indirect

and remote effects on layers further down (away from

the training signal at the output layer) in the network,

and the nature of the effects depends on the representa-

tions that have developed in the shallower layers (nearer

the output signal). With the increased nonlinearity as-

sociated with bidirectional networks, this problem only

gets worse.
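The remote-error-signal argument can be made concrete with a minimal sketch of standard feedforward backpropagation through a deep stack of sigmoid layers (not the bidirectional networks discussed in the text). It reports the mean absolute error signal (delta) at each layer. Each hidden layer's delta is computed only from the weights and deltas of the layer just above it, so lower layers receive the output error only after it has been filtered through the shallower layers' current representations. The layer count, width, weight range, input, and target are arbitrary choices for illustration, and `layer_error_magnitudes` is a hypothetical helper, not part of any simulator.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def layer_error_magnitudes(n_layers=6, width=10, seed=0):
    """Backpropagate one output error through a deep sigmoid network and
    return the mean |delta| per layer, from first hidden layer to output."""
    rng = random.Random(seed)
    # weights[l][j][i]: from unit i in layer l to unit j in layer l+1
    weights = [[[rng.uniform(-0.5, 0.5) for _ in range(width)]
                for _ in range(width)] for _ in range(n_layers)]
    x = [rng.random() for _ in range(width)]
    target = [float(j % 2) for j in range(width)]

    # Forward pass: keep every layer's activations (acts[0] is the input).
    acts = [x]
    for w in weights:
        prev = acts[-1]
        acts.append([sigmoid(sum(w_ji * a_i for w_ji, a_i in zip(row, prev)))
                     for row in w])

    # Output delta: error times the local sigmoid slope y * (1 - y).
    out = acts[-1]
    delta = [(t - y) * y * (1 - y) for t, y in zip(target, out)]
    magnitudes = [sum(abs(d) for d in delta) / width]

    # Backward pass: each hidden delta is a weighted sum of the deltas in the
    # layer above (closer to the output), scaled by its own sigmoid slope.
    for l in range(n_layers - 1, 0, -1):
        w = weights[l]  # connects layer l to layer l+1
        h = acts[l]
        delta = [sum(w[k][j] * delta[k] for k in range(width)) * h[j] * (1 - h[j])
                 for j in range(width)]
        magnitudes.append(sum(abs(d) for d in delta) / width)

    return list(reversed(magnitudes))


for depth, m in enumerate(layer_error_magnitudes(), start=1):
    print(f"layer {depth}: mean |delta| = {m:.2e}")
```

Since the sigmoid slope is at most 0.25 and the random weights are small, the signal is rescaled at every step back from the output, so the printed magnitudes typically fall off steeply toward the first hidden layer; the exact values depend on the seed.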

One way to make the pole-balancing problem easier is to give each pole a little internal gyroscope, so that they each have greater self-stability and can at least partially balance themselves. Model learning should provide exactly this kind of self-stabilization, because the learning is local and produces potentially useful representations even in the absence of error signals. At a slightly more abstract level, a combined task and model learning system is generally more constrained than a purely error-driven learning algorithm, and thus has fewer degrees of freedom to adapt through learning. These constraints can be thought of as limiting the range of motion for each pole, which would also make them easier to balance. We will explore these ideas in the following simulation of the family trees problem, and in many of the subsequent chapters.
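The combination of model and task learning described above can be sketched as a single weight update that blends a Hebbian term with an error-driven term. This minimal sketch assumes a CPCA-style Hebbian term, `y_plus * (x_plus - w)`, and a contrastive Hebbian (CHL) error term, `x_plus * y_plus - x_minus * y_minus`, computed from minus-phase (expectation) and plus-phase (outcome) activations and mixed by a proportion `k_hebb`. The function and parameter names are illustrative labels, not a particular simulator's API, and the sketch omits details such as weight bounding that a full implementation would add.

```python
def combined_dw(w, x_minus, y_minus, x_plus, y_plus, k_hebb=0.01, lrate=0.01):
    """Weight change for one connection (sending unit x, receiving unit y)
    mixing a Hebbian (model) term with an error-driven (task) term.

    w: current weight
    x_minus, y_minus: activations in the minus (expectation) phase
    x_plus, y_plus: activations in the plus (outcome) phase
    k_hebb: proportion of the update contributed by the Hebbian term
    """
    hebb = y_plus * (x_plus - w)                 # moves w toward x when y is active
    err = x_plus * y_plus - x_minus * y_minus    # zero when the phases agree
    return lrate * (k_hebb * hebb + (1.0 - k_hebb) * err)


# The phases agree, so the error term is zero, yet the Hebbian term still
# nudges the weight toward the sending activation (0.8).
print(round(combined_dw(w=0.2, x_minus=0.8, y_minus=0.6,
                        x_plus=0.8, y_plus=0.6, k_hebb=0.5, lrate=1.0), 4))  # 0.18
```

The usage line shows the "gyroscope" idea in miniature: even with no error signal, the Hebbian term keeps shaping the weight, which is the local, error-independent guidance the analogy attributes to model learning.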
