
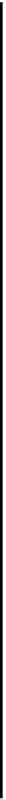

Box 5.1: How to Compute a Derivative

[Figure: the function $y = x^2$ for $x$ from −3 to 3 (y axis from 0 to 8), with the slope marked at $x = -2$ and at $x = 1$.]

To see how derivatives work, we will first consider a very simple function, $y = x^2$, shown in the above figure, with the goal of minimizing this function. Remember that eventually we will want to minimize the more complex function that relates a network's error and weights. In this simple case of $y = x^2$, it is clear that to minimize $y$, we want $x$ to be 0, because $x^2$ is never negative and reaches its minimum of 0 only at $x = 0$. However, it is not always so apparent how to minimize a function, so we will go through the more formal process of doing so.

To minimize $y = x^2$, we need to understand how $y$ changes with changes to $x$. In other words, we need the derivative of $y$ with respect to $x$. It turns out that the derivative of $x^2$ is $2x$ (and more generally, the derivative of $bx^a$ is $bax^{a-1}$). This means that the slope of the function $y = x^2$ at any given point equals $2x$. The above figure shows the slope of the function $y = x^2$ at two points, where $x = 1$ (so the slope equals 2) and where $x = -2$ (so the slope equals −4). It should be clear from the figure and the formula for the derivative that when $x$ is positive the slope is positive, indicating that $y$ increases as $x$ increases; and when $x$ is negative the slope is negative, indicating that $y$ decreases as $x$ increases. Moreover, the further $x$ is from 0, the further we are from minimizing $y$, and the larger the magnitude of the derivative. To minimize $y$, we thus want to change $x$ in the direction opposite the derivative, in steps proportional to the absolute magnitude of the derivative. Doing this from any starting value of $x$ will eventually take $x$ to 0, thereby minimizing $y$, provided the steps are kept small enough.
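The procedure just described for $y = x^2$ can be sketched in a few lines of Python: compute the slope $2x$, check the power rule numerically with a central difference, and repeatedly move $x$ opposite the slope. The function names, the step size of 0.1, and the number of steps are illustrative assumptions, not part of the original text.

```python
def dy_dx(x):
    """Derivative of y = x**2, from the power rule: 2x."""
    return 2.0 * x


def numeric_derivative(f, x, h=1e-5):
    """Central-difference estimate of f'(x), used to check the power rule."""
    return (f(x + h) - f(x - h)) / (2.0 * h)


def minimize_square(x0, step=0.1, n_steps=50):
    """Move x opposite the derivative, by an amount proportional to its size."""
    x = x0
    for _ in range(n_steps):
        # Negative slope (x < 0) makes x increase; positive slope makes it decrease.
        x = x - step * dy_dx(x)
    return x


if __name__ == "__main__":
    # Slopes at the two points marked in the figure: +2 at x = 1, -4 at x = -2.
    for start in (1.0, -2.0):
        print(f"x0 = {start:+.1f}  slope = {dy_dx(start):+.1f}  "
              f"after descent x = {minimize_square(start):.6f}")
    # Power rule check: d/dx (3 x^4) = 3 * 4 * x^3, which is 96 at x = 2.
    print(numeric_derivative(lambda x: 3.0 * x**4, 2.0))
```

For this particular function each step maps $x$ to $(1 - 2\cdot\text{step})\,x$, so any step size strictly between 0 and 1 shrinks $x$ toward 0, while a step size of 1 or more fails to converge.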

The logic behind minimizing the network's error with respect to its weights is identical, but the process is complicated by the fact that the relationship between error and weights is not as simple as $y = x^2$. More specifically, the weights do not appear directly in the error equation 5.2 in the way that $x$ appears in the equation for $y$ in our simple $y = x^2$ example. To deal with this complexity, we can break the relationship between error and weights into its components. Rather than determining in a single step how the error changes as the weights change, we can determine in separate steps how the error changes as the network output changes (because the output does appear directly in the error equation), and how the network output changes as the weights change (because the weights appear directly in the equation for the network output, simplified for the present as described below). Then we can multiply these terms together (the chain rule of calculus) to determine how the error changes as the weights change.
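To make this decomposition concrete, here is a small Python sketch. Because equation 5.2 and the network's output equation are not reproduced in this excerpt, it assumes a squared error $E = (t - o)^2$ for a single output unit and a linear output $o = \sum_i s_i w_i$; these forms and all function names are assumptions for illustration. It computes $\partial E/\partial o$ and $\partial o/\partial w_i$ separately, multiplies them, and checks the result against a direct numerical estimate of $\partial E/\partial w_i$.

```python
def output(weights, inputs):
    """Assumed linear output unit: o = sum_i s_i * w_i."""
    return sum(w * s for w, s in zip(weights, inputs))


def error(weights, inputs, target):
    """Assumed squared error for one output unit: E = (t - o)**2."""
    return (target - output(weights, inputs)) ** 2


def chain_rule_gradient(weights, inputs, target):
    """dE/dw_i = dE/do * do/dw_i, computed as two separate factors."""
    o = output(weights, inputs)
    dE_do = -2.0 * (target - o)   # how the error changes as the output changes
    do_dw = list(inputs)          # how the output changes with each weight: s_i
    return [dE_do * d for d in do_dw]


def numeric_gradient(weights, inputs, target, h=1e-6):
    """Central-difference estimate of dE/dw_i, used to check the chain rule."""
    grads = []
    for i in range(len(weights)):
        up = list(weights)
        up[i] += h
        down = list(weights)
        down[i] -= h
        grads.append((error(up, inputs, target) - error(down, inputs, target)) / (2 * h))
    return grads


if __name__ == "__main__":
    w, s, t = [0.5, -0.3, 0.8], [1.0, 0.0, 1.0], 1.0
    print(chain_rule_gradient(w, s, t))
    print(numeric_gradient(w, s, t))
```

The two printed gradients should agree up to rounding; note that a weight whose input $s_i$ is 0 gets zero gradient, because changing it does not change the output.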

producing useful representations, for example by allowing units to represent weaker inputs (e.g., section 3.3.1).

There really is no straightforward way to train bias weights in the Hebbian model-learning paradigm, because they do not reflect any correlational information (i.e., the correlation between a unit and itself, which is the only kind of correlational information a bias weight could represent, is uninformative). However, error-driven task learning using the delta rule algorithm can put the bias weights to good use.

The standard way to train bias weights is to treat them as weights coming from a unit that is always active (i.e., $s_i = 1$). If we substitute this 1 into equation 5.9, the bias weight ($\beta_k$) change is just:

$$\Delta\beta_k = \epsilon\,(t_k - o_k) \qquad (5.10)$$
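A minimal Python sketch of this bias trick, assuming that equation 5.9 takes the delta-rule form $\Delta w_{ik} = \epsilon (t_k - o_k) s_i$ for a linear output unit (an assumption, since equation 5.9 is not reproduced in this excerpt). The bias is handled as one more weight whose input is fixed at 1, so its change reduces to $\epsilon (t_k - o_k)$. The function names, learning rate, and example patterns are hypothetical.

```python
def delta_rule_changes(weights, inputs, target, lrate=0.1):
    """Assumed delta rule for a linear unit: dw_i = lrate * (t - o) * s_i."""
    o = sum(w * s for w, s in zip(weights, inputs))
    return [lrate * (target - o) * s for s in inputs]


def changes_with_bias(weights, bias, inputs, target, lrate=0.1):
    """Append an always-active input (activation 1) whose weight is the bias."""
    deltas = delta_rule_changes(weights + [bias], inputs + [1.0], target, lrate)
    return deltas[:-1], deltas[-1]  # (weight changes, bias change)


if __name__ == "__main__":
    weights, bias = [0.6, 0.6], 0.2
    # Hypothetical patterns: the unit should be on only for the last one,
    # but with these weights it is also fairly active for the first two.
    patterns = [([1.0, 0.0], 0.0), ([0.0, 1.0], 0.0), ([1.0, 1.0], 1.0)]
    total_bias_change = 0.0
    for inputs, target in patterns:
        _, d_bias = changes_with_bias(weights, bias, inputs, target)
        total_bias_change += d_bias  # weights held fixed while summing changes
    print(f"summed bias change: {total_bias_change:+.3f}")
```

Because this hypothetical unit's output exceeds its target on every pattern, each bias change is negative, and so is their sum, which pushes the unit's overall activity down.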

Thus, the bias weight will always adjust to directly decrease the error. For example, to the extent that a unit is often active when it shouldn't be, the bias weight change will be more negative than positive, causing the
