Information Technology Reference

In-Depth Information

Support for the heterarchical view over the hierarchi-

cal one comes from a comparison between the represen-

tations produced by various learning mechanisms and

primary visual cortex representations. To show that the

hierarchical view is inconsistent with these visual cor-

tex representations, Olshausen and Field (1996) ran the

SPCA algorithm on randomly placed windows onto nat-

ural visual scenes (e.g., as might be produced by fixat-

ing randomly at different locations within the scenes),

with the resulting principal components shown in fig-

ure 4.8. As you can see, the first principal compo-

nent in the upper left of the figure is just a big blob,

because SPCA averages over all the different images,

and the only thing that is left after this averaging is a

general correlation between close-by pixels, which tend

to have similar values. Thus, each individual image

pixel has participated in so many different image fea-

tures that the correlations present in any given feature

are washed away completely. This gives a blob as the

most basic thing shared by every image. Then, subse-

quent components essentially just divide this blob shape

into finer and finer sub-blobs, representing the residual

average correlations that exist after subtracting away the

big blob.

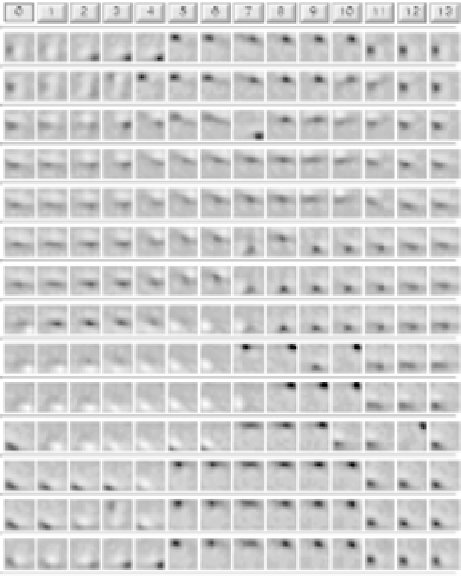

In contrast, models that produce heterarchical repre-

sentations trained on the same natural scenes produce

weight patterns that much more closely fit those of pri-

mary visual cortex (figure 4.9 — we will explore the

network that produced these weight patterns in chap-

ter 8). This figure captures the fact that the visual sys-

tem uses a large, heterogeneous collection of feature

detectors that divide images up into line segments of

different orientations, sizes, widths etc., with each neu-

ron responding preferentially to a small coherent cate-

gory of such line properties (e.g., one neuron might fire

strongly to thin short lines at a 45-degree angle, with ac-

tivity falling off in a graded fashion as lines differ from

this “preferred” or prototypical line).

As Olshausen and Field (1996) have argued, a repre-

sentation employing a large collection of roughly equiv-

alent feature detectors enables a small subset of neurons

to efficiently represent a given image by encoding the

features present therein. This is the essence of the argu-

ment for

sparse distributed representations

discussed in

chapter 3. A further benefit is that each feature will tend

Figure 4.9:

Example of heterarchical feature coding by sim-

ple cells in the early visual cortex, where each unit has a pre-

ferred tuning for bars of light in a particular orientation, width,

location, and so on, and these tunings are evenly distributed

over the space of all possible values along these feature di-

mensions. This figure is from a simulation described in chap-

ter 8.

to be activated about as often as any other, providing a

more equally distributed representational burden across

the neurons. In contrast, the units in the SPCA represen-

tation will be activated in proportion to their ordering,

with the first component active for most images, and so

on.

One way of stating the problem with SPCA is that it

computes correlations

over the entire space of input pat-

terns

, when many of the meaningful correlations exist

only

in particular subsets of input patterns

.Forexam-

ple, if you could somehow restrict the application of the

PCA-like Hebbian learning rule to those images where

lines of roughly a particular orientation, size, width,

Search WWH ::

Custom Search