
alizing function. Thus, the units themselves evolve their

own conditionalizing function as a result of a complex

interaction between their own learning experience, and

competition with other units in the network. In the next

chapter, we will see that error-driven task learning can

provide a conditionalizing function based on the pat-

terns of activation necessary to solve tasks. In chapter 6,

we explore the combination of both types of condition-

alizing functions.

Before we proceed to explore these conditionalizing

functions, we need to develop a learning rule based

specifically on the principles of CPCA. To develop this

rule, we initially assume the existence of a condition-

alizing function that turns the units on when the input

contains things they should be representing, and turns

them off when it doesn't. This assumption enables us

to determine that the learning rule will do the appropri-

ate things to the weights given a known conditionalizing

function. Then we can go on to explore self-organizing

learning.

By developing this CPCA learning rule, we develop a

slightly different way of framing the

objective

of Heb-

bian learning that is better suited to the idea that the

relevant environmental structure is only conditionally

present in subsets of input patterns, and not uncondi-

tionally present in every input pattern. We will see that

this framing also avoids the problematic assumption of

a linear activation function in previous versions of PCA.

The CPCA rule is more consistent with the notion of in-

dividual units as hypothesis detectors whose activation

states can be understood as reflecting the underlying

probability of something existing in the environment (or

not).

input 1

input 2

input 3

input 4

...

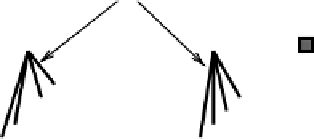

Figure 4.10:

Conditionalizing the PCA computation — the

receiving unit only learns about those inputs that it happens

to be active for, effectively restricting the PCA computation

to a subset of the inputs (typically those that share a relevant

feature, here rightward-leaning diagonal lines).

length, or the like were present, then you would end

up with units that encode information in essentially the

same way the brain does, because the units would then

represent the correlations among the pixels in this par-

ticular subset of images. One way of expressing this

idea is that units should represent the

conditional

prin-

cipal components, where the conditionality restricts the

PCA computation to only a subset of input cases (fig-

ure 4.10).

In the remainder of this section, we develop a ver-

sion of Hebbian learning that is specifically designed for

the purpose of performing

conditional PCA

(

CPCA

)

learning. As you will see, the resulting form of the

learning rule for updating the weights is quite similar to

Oja's normalized PCA learning rule presented above.

Thus, as we emphasized above, the critical difference

is not so much in the learning rule itself but rather in

the accompanying activation dynamics that determine

when individual units will participate in learning about

different aspects of the environment.

The heart of CPCA is in specifying the conditions un-

der which a given unit should perform its PCA compu-

tation — in other words, the

conditionalizing function

.

Mechanistically, this conditionalizing function amounts

to whatever forces determine when a receiving unit is

active, because this is when PCA learning occurs. We

adopt two main approaches toward the conditionalizing

function. Later in this chapter we will use inhibitory

competition together with the tuning properties of Heb-

bian learning to implement a

self-organizing

condition-

4.5.1 The CPCA Learning Rule

The CPCA learning rule takes the conditionalizing idea literally. It assumes that we want the weight from a given input unit to a receiving unit to represent the conditional probability that the input unit ($x_i$) was active given that the receiving unit ($y_j$) was also active. We can write this as:

$$ w_{ij} = P(x_i = 1 \mid y_j = 1) \qquad (4.11) $$
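To make equation 4.11 concrete, here is a minimal sketch that assumes binary (0/1) activations and an externally supplied ("oracle") conditionalizing function, like the one assumed above while developing the rule. The weight is estimated by counting how often input $x_i$ is on, using only those patterns in which the receiving unit $y_j$ is on. The helper names `conditional_probability` and `incremental_weight`, the toy patterns, and the choice that the receiving unit is active whenever input 0 is on are all hypothetical illustrations, not the book's simulator code. The incremental update `w += lr * y * (x_i - w)` is included only as an assumed example of a running-average estimator whose fixed point is the same conditional probability.

```python
from typing import Sequence

Pattern = Sequence[int]


def conditional_probability(xs: Sequence[Pattern], ys: Sequence[int], i: int) -> float:
    """Estimate P(x_i = 1 | y_j = 1) by counting over the patterns
    in which the receiving unit y_j was active (y_j == 1)."""
    active = [x for x, y in zip(xs, ys) if y == 1]  # conditionalize on y_j
    if not active:
        raise ValueError("receiving unit was never active")
    return sum(x[i] for x in active) / len(active)


def incremental_weight(
    xs: Sequence[Pattern],
    ys: Sequence[int],
    i: int,
    lr: float = 0.05,
    w0: float = 0.5,
    epochs: int = 200,
) -> float:
    """Assumed running-average estimate of the same quantity:
    w <- w + lr * y_j * (x_i - w). When y_j == 0 the weight is unchanged,
    so only the conditionalized patterns contribute."""
    w = w0
    for _ in range(epochs):
        for x, y in zip(xs, ys):
            w += lr * y * (x[i] - w)
    return w


# Hypothetical toy data: 4 binary inputs per pattern.
xs = [
    [1, 1, 0, 0],
    [1, 1, 1, 0],
    [1, 0, 0, 1],
    [1, 1, 0, 1],
    [0, 1, 1, 0],
    [0, 0, 1, 1],
    [0, 1, 0, 0],
    [0, 0, 1, 0],
]
# Hypothetical "oracle" conditionalizing function: the receiving unit
# is on exactly when input 0 is on.
ys = [1 if x[0] == 1 else 0 for x in xs]

print(conditional_probability(xs, ys, 1))       # 0.75
print(sum(x[1] for x in xs) / len(xs))          # 0.625 (unconditional frequency)
print(round(incremental_weight(xs, ys, 1), 3))  # 0.744, close to 0.75
```

Comparing the conditional estimate for input 1 (0.75) with its unconditional frequency over all patterns (0.625) shows how restricting learning to the cases where the receiving unit is active changes what the weight encodes. With a fixed learning rate, the running average hovers near the counted value rather than matching it exactly.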
