$$H_3(O_3) = h_3(O_3 / M_3) \qquad (7)$$

where $h_3(O_3)$ is a speech synthesizer function that reads the sentences.

$J(Y)$ is a selecting function that chooses a speech interaction tool; only the currently selected speech module is enabled. The variable $M_i$ is the knowledge given for a specific domain: $M_1$ is an acoustic model used to recognize words, $M_2$ is not used, and $M_3$ is the TTS DB. The variable $P$ is procedural knowledge that provides a user-friendly service such as a helper function. $I(P)$ is a function that guides the service scenario according to the results of the speech interaction tool.
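The role of the selecting function can be illustrated with a minimal Python sketch. It assumes that "selecting" means enabling exactly one speech module and disabling the others; the names `SpeechModule`, `select_module`, and the two placeholder engines are hypothetical and do not come from the original system.

```python
from dataclasses import dataclass
from typing import Callable, Dict


@dataclass
class SpeechModule:
    name: str
    run: Callable[[str], str]  # hypothetical stand-in for a real speech engine
    enabled: bool = False


def select_module(modules: Dict[str, SpeechModule], choice: str) -> SpeechModule:
    """Sketch of J(Y): enable the chosen module and disable every other one."""
    if choice not in modules:
        raise KeyError(f"unknown speech module: {choice}")
    for name, module in modules.items():
        module.enabled = (name == choice)
    return modules[choice]


# Placeholder engines (assumptions): a real recognizer would consult the
# acoustic model M_1 and a real synthesizer would consult the TTS DB M_3.
modules = {
    "recognizer": SpeechModule("recognizer", lambda audio: f"recognized:{audio}"),
    "synthesizer": SpeechModule("synthesizer", lambda text: f"spoken:{text}"),
}

active = select_module(modules, "synthesizer")
result = active.run("turn left")
```

Keeping the modules in one dictionary makes the "only one enabled" invariant easy to enforce in a single loop, which is one possible design among several.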

As a result, $Z$ is an action to be performed sequentially. The final decision, $Z(t)$, represents the user's history, which is processed when decisions are stored over a long period of time. This history can provide statistical information about which specific functions the user utilizes frequently.
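As a rough illustration of how a stored history could yield usage statistics, the following sketch keeps time-stamped actions and counts them inside a retention window. The record layout, the 30-day window, and the action names are assumptions made for this example, not details taken from the paper.

```python
from collections import Counter
from datetime import datetime, timedelta
from typing import List, Tuple

# Hypothetical history format: one (timestamp, action name) pair per decision.
History = List[Tuple[datetime, str]]


def record(history: History, action: str, when: datetime) -> None:
    """Append one decided action together with its timestamp."""
    history.append((when, action))


def usage_counts(history: History, now: datetime, window: timedelta) -> Counter:
    """Count how often each action occurred within the retention window."""
    cutoff = now - window
    return Counter(action for when, action in history if when >= cutoff)


history: History = []
now = datetime(2024, 1, 31, 8, 0)
record(history, "play_music", now - timedelta(days=40))  # older than the window
record(history, "route_home", now - timedelta(days=3))
record(history, "route_home", now - timedelta(days=1))
record(history, "play_music", now - timedelta(hours=2))

counts = usage_counts(history, now, timedelta(days=30))
```

With this data, the entry from 40 days ago falls outside the window, so only the three recent actions contribute to the counts.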

Sensory fused rules and data fusion rules can serve as a fusion framework for generating the gathered information. In addition, application services can easily be extended and integrated on top of this framework. The method for generating user action statistics from this information is described in the next subsection.

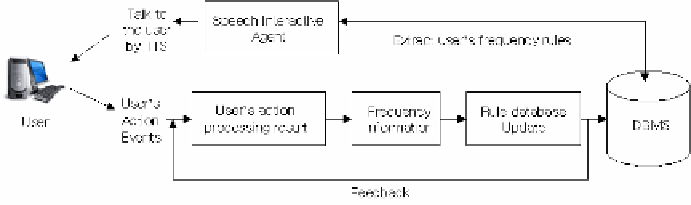

3.3 Active Interaction Rule Generation

Users may interact at various service stages, and domain knowledge may be used either as a higher-level specification of the model or at a more detailed level. In our system, the speech interactive agent interacts with users through a conversational tool. The resulting user interaction information is fed to data mining, which is inherently an interactive and iterative process, because a user tends to repeat the patterns that he or she frequently uses in specific applications of the car navigation system. Using this information, the speech interactive agent asks the user whether he or she wants to perform a specific task; the underlying statistical information is stored and estimated over a period of time according to the procedure in Figure 3. In addition, the speech interactive agent can start a music player automatically according to the day's weather broadcast if the system has not been used for a long time. The user can switch this function on or off manually in the application. To obtain information for specific tasks, the speech interactive agent downloads and updates the mined data from the data mining server via the wireless internet.
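One plausible reading of the frequency rule is sketched below in Python. The exact procedure of Figure 3 is not reproduced in the text, so the thresholds (`min_uses`, `min_share`, `idle_threshold_hours`) and both function names are assumptions chosen only to make the idea concrete.

```python
from collections import Counter
from typing import Optional


def propose_task(counts: Counter, min_uses: int = 3,
                 min_share: float = 0.5) -> Optional[str]:
    """Return the task the agent could offer, or None if none is frequent enough."""
    total = sum(counts.values())
    if total == 0:
        return None
    task, uses = counts.most_common(1)[0]
    # Offer the task only if it is used often in absolute and relative terms.
    if uses >= min_uses and uses / total >= min_share:
        return task
    return None


def should_autostart_music(idle_hours: float, autostart_enabled: bool,
                           idle_threshold_hours: float = 24.0) -> bool:
    """Start music only if the user left the option on and the system was idle."""
    return autostart_enabled and idle_hours >= idle_threshold_hours


suggestion = propose_task(Counter({"route_home": 4, "play_music": 1}))
no_suggestion = propose_task(Counter({"route_home": 1, "play_music": 1}))
```

In this sketch the agent would ask about `route_home` in the first case and stay silent in the second. Choosing the music according to the weather broadcast is left out, since the text does not say how weather maps to a selection.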

Fig. 3. Active interaction procedure using the user's frequency rule
