Database Reference

In-Depth Information

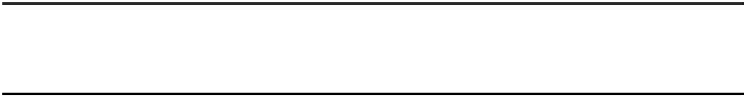

Table 1. The negotiation rule table according to priority control (rows: previous state; columns: current request)

| Previous state \ Current state | eASR is requested | Application TTS is requested | CNS TTS is requested | Hands-Free button pushed | Mute button pushed |
|---|---|---|---|---|---|
| Hands-Free button enable | Disabled | Disabled | Enabled | Not applicable | Not applicable |
| Mute button enable | Enabled | Disabled | Enabled | Not applicable | Not applicable |
| eASR running | Previous eASR exits and new eASR runs | Previous eASR exits and eTTS starts | eASR runs continuously and CNS TTS starts | eASR exits | eASR exits |
| Application eTTS running | Previous eTTS stops and eASR runs | Previous eTTS stops and new eTTS starts | Application eTTS pauses and CNS TTS starts | eTTS stops | eTTS stops |
| CNS eTTS running | CNS eTTS starts and eASR runs | Previous CNS eTTS finishes and then application eTTS starts | Previous CNS eTTS stops and new CNS eTTS starts | Don't care | Don't care |
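As an illustration, the rules in Table 1 can be held in a lookup table keyed by previous state and incoming request. The following is a minimal Python sketch, not the authors' implementation: the state and request identifiers (`easr_running`, `cns_tts`, and so on) and the `negotiate` function are hypothetical names, while the cell text is copied from Table 1.

```python
# Column order of Table 1: the request that arrives in the current state.
# These identifiers are hypothetical names chosen for this sketch.
REQUESTS = ("easr", "app_tts", "cns_tts", "hands_free_button", "mute_button")

# One row per previous state; each tuple follows the REQUESTS order.
NEGOTIATION_RULES = {
    # Button-enable rows, as listed in Table 1.
    "hands_free_enable": ("Disabled", "Disabled", "Enabled",
                          "Not applicable", "Not applicable"),
    "mute_enable": ("Enabled", "Disabled", "Enabled",
                    "Not applicable", "Not applicable"),
    # Rows describing what happens to the engine that is already running.
    "easr_running": (
        "Previous eASR exits and new eASR runs",
        "Previous eASR exits and eTTS starts",
        "eASR runs continuously and CNS TTS starts",
        "eASR exits",
        "eASR exits",
    ),
    "app_etts_running": (
        "Previous eTTS stops and eASR runs",
        "Previous eTTS stops and new eTTS starts",
        "Application eTTS pauses and CNS TTS starts",
        "eTTS stops",
        "eTTS stops",
    ),
    "cns_etts_running": (
        "CNS eTTS starts and eASR runs",
        "Previous CNS eTTS finishes and then application eTTS starts",
        "Previous CNS eTTS stops and new CNS eTTS starts",
        "Don't care",
        "Don't care",
    ),
}


def negotiate(previous_state: str, request: str) -> str:
    """Look up the Table 1 rule for a previous state and an incoming request."""
    if previous_state not in NEGOTIATION_RULES:
        raise ValueError(f"unknown previous state: {previous_state!r}")
    if request not in REQUESTS:
        raise ValueError(f"unknown request: {request!r}")
    return NEGOTIATION_RULES[previous_state][REQUESTS.index(request)]


# A CNS announcement arriving while an application prompt is playing.
print(negotiate("app_etts_running", "cns_tts"))
# prints: Application eTTS pauses and CNS TTS starts
```

Because every (previous state, request) pair maps to exactly one rule, a lookup table keeps the priority policy in data rather than in nested conditionals, so changing one rule touches one cell.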

3.2 Data Fusion Rules from Interface Modalities

Given the sensory fusion result, the speech agent can decide which action to perform. The data fusion model for speech interaction can then be expressed as

$$ Z = H_i(O_i) \cdot I(P) \cdot J(Y), \quad i = 1, \ldots, 3 \tag{3} $$

$$ H_i(O_i) = h_i(O_i / M_i), \quad i = 1, \ldots, 3 \tag{4} $$

where $i$ indexes the speech interaction tools and $H_i(O_i)$ is a speech interaction tool: 1) embedded speech recognition, 2) distributed speech recognition, and 3) text-to-speech. Accordingly, the variables $O_1$ and $O_2$ are speech sampling data and $O_3$ is text data.
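The indexing of the three tools by $i$ can be illustrated with a small dispatch sketch in Python. The tool functions below are hypothetical stubs that only label their input, and `apply_tool` is an assumed helper name; the type check encodes the statement that $O_1$ and $O_2$ are speech sampling data and $O_3$ is text data.

```python
from typing import Callable, Dict, Sequence, Union

# O_1 and O_2 are speech sampling data; O_3 is text data.
Observation = Union[Sequence[float], str]


def embedded_asr(samples: Sequence[float]) -> str:
    """Hypothetical stub for H_1, embedded speech recognition."""
    return f"eASR({len(samples)} samples)"


def distributed_asr(samples: Sequence[float]) -> str:
    """Hypothetical stub for H_2, distributed speech recognition."""
    return f"DSR({len(samples)} samples)"


def text_to_speech(text: str) -> str:
    """Hypothetical stub for H_3, text-to-speech."""
    return f"TTS({text!r})"


# Tool index i -> speech interaction tool H_i.
TOOLS: Dict[int, Callable[..., str]] = {
    1: embedded_asr,
    2: distributed_asr,
    3: text_to_speech,
}


def apply_tool(i: int, observation: Observation) -> str:
    """Evaluate H_i(O_i), rejecting an O_i of the wrong data type."""
    if i not in TOOLS:
        raise ValueError("i must be 1, 2, or 3")
    expects_text = i == 3
    if expects_text != isinstance(observation, str):
        kind = "text" if expects_text else "speech samples"
        raise TypeError(f"tool {i} expects {kind}")
    return TOOLS[i](observation)


print(apply_tool(1, [0.0, 0.2, -0.1]))  # eASR(3 samples)
print(apply_tool(3, "Turn left ahead"))  # TTS('Turn left ahead')
```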

Each $H_i(O_i)$ is then decomposed as follows.

$$ H_1(O_1) = h_1(O_1 / M_1) = \hat{W}_k = \arg\max_{j} L(O_1 / W_j) \tag{5} $$

where $h_1(O_1)$ is a pattern recognizer that uses the maximum a posteriori (MAP) decision rule to find the most likely sequence of words.
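The MAP decision can be sketched as choosing, among candidate word sequences $W_j$, the one with the highest score. This Python sketch assumes that $L(O/W_j)$ is available as a log-likelihood for each hypothesis and adds an optional log prior $\log P(W_j)$ to make the MAP form explicit; the prior term, the function name `map_decode`, and the example scores are assumptions, not taken from the text.

```python
import math
from typing import Dict, Optional


def map_decode(log_likelihoods: Dict[str, float],
               log_priors: Optional[Dict[str, float]] = None) -> str:
    """Return the word sequence W_j with the largest log L(O/W_j) + log P(W_j).

    With log_priors=None every hypothesis gets the same prior, so the rule
    reduces to maximum likelihood.
    """
    if not log_likelihoods:
        raise ValueError("at least one hypothesis is required")

    def score(words: str) -> float:
        # Hypotheses missing from log_priors get zero prior probability.
        prior = 0.0 if log_priors is None else log_priors.get(words, -math.inf)
        return log_likelihoods[words] + prior

    return max(log_likelihoods, key=score)


# Hypothetical scores for two candidate transcriptions.
acoustic = {"call home": -12.0, "call Rome": -11.5}
language_model = {"call home": math.log(0.9), "call Rome": math.log(0.1)}
print(map_decode(acoustic))                  # call Rome (likelihood alone)
print(map_decode(acoustic, language_model))  # call home (prior outweighs it)
```

Working in log space turns the product of likelihood and prior into a sum and avoids numerical underflow on long utterances.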

$$ H_2(O_2) = h_2(O_2 / M_2) = h_2(O_2) \tag{6} $$

where $h_2(O_2)$ is a front-end feature extractor that passes the speech features to the back-end distributed speech recognition server.
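The client-side role of $h_2$ can be sketched as a function that turns raw speech samples into a compact per-frame feature stream for transmission to the server. This Python sketch computes only one log-energy value per frame as a stand-in feature; the function name, the frame length of 200 samples and hop of 80 samples (25 ms and 10 ms at an assumed 8 kHz sampling rate) are assumptions. Practical distributed speech recognition front ends compute and compress cepstral features, which is not shown here.

```python
import math
from typing import List, Sequence


def frame_log_energy(samples: Sequence[float], frame_len: int = 200,
                     hop: int = 80, floor: float = 1e-10) -> List[float]:
    """Return one log-energy feature per frame of the speech samples.

    Frames are frame_len samples long and start every hop samples; a trailing
    partial frame is dropped. `floor` keeps log() defined for silent frames.
    """
    if frame_len <= 0 or hop <= 0:
        raise ValueError("frame_len and hop must be positive")
    features = []
    for start in range(0, len(samples) - frame_len + 1, hop):
        frame = samples[start:start + frame_len]
        energy = sum(x * x for x in frame)
        features.append(math.log(max(energy, floor)))
    return features


# 360 samples give frames starting at 0, 80 and 160.
feats = frame_log_energy([0.5] * 360)
print(len(feats))  # 3
```

Sending a few numbers per frame instead of the raw waveform is what lets the recognition back end run on a remote server while keeping the client's transmission load small.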
