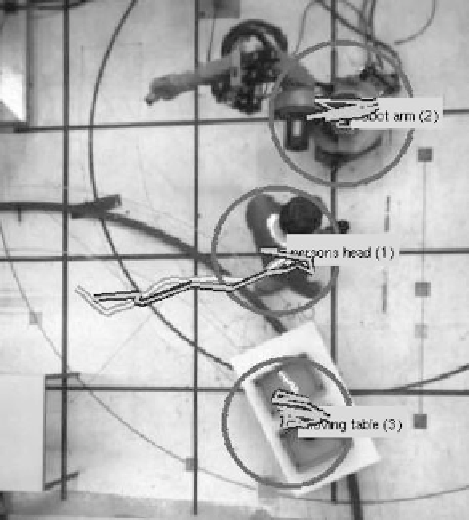

Fig. 7.2 Trajectories of tracked objects (dark grey) with annotated ground truth (light grey) for a typical industrial scene

in Sect. 7.2 regarding the industrial production environment). Furthermore, to precisely predict possible collisions between the human and the robot while they are working close to each other, it is necessary to detect parts of the human body, especially the arms and hands of a person working next to the robot, and to determine their three-dimensional trajectories in order to predict collisions with the robot. Section 7.1.2 gives an overview of existing methods in the field of vision-based gesture recognition. These approaches are certainly useful for the purpose of human-robot interaction, but since many of them are based on two-dimensional image analysis and require an essentially uniform background, they are largely insufficient for safety systems. In contrast, the three-dimensional approaches introduced by Hahn et al. (2007, 2010a) and by Barrois and Wöhler (2008) outlined in Sects. 2.2.1.2 and 2.3 yield reasonably accurate results for the pose of the hand-forearm limb independent of the individual person, even in the presence of a fairly cluttered background (cf. Sects. 7.3 and 7.4).

7.1.2 Pose Estimation of Articulated Objects in the Context of Human-Robot Interaction

Gesture recognition is strongly related to the pose estimation of articulated objects. An overview of important methods in this field is given in Sect. 2.2.1.2. Three-dimensional pose estimation results obtained for the human body or parts of it are
