Hardware Reference

In-Depth Information

■ A cache controller event that receives a data message and updates the cache has latency L_rcv_data.

■ A memory controller incurs L_send_msg latency when it forwards a request message.

■ A memory controller incurs an additional L_inv number of cycles for each invalidate that it must send.

■ A cache controller incurs latency L_send_msg for each invalidate that it receives (latency is until it sends the Ack message).

■ A memory controller has latency L_read_memory cycles to read memory and send a data message.

■ A memory controller has latency L_write_memory to write a data message to memory (latency is until it sends the Ack message).

■ A non-data message (e.g., request, invalidate, Ack) has network latency L_req_msg cycles.

■ A data message has network latency L_data_msg cycles.

■ Add a latency of 20 cycles to any message that crosses from chip 0 to chip 1 and vice versa.
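The latency parameters listed above can be combined by summing the cost of every event on a transaction's critical path. The following Python sketch illustrates that bookkeeping only. The names `Latencies`, `path_latency`, and `read_miss_path` are hypothetical, the numeric values are placeholders rather than the values of the latency figure, and the example path (request message, memory read, data message, cache update) is one assumed sequence for a read miss serviced directly by memory, not a statement of the protocol's full behavior.

```python
from dataclasses import dataclass

# Extra cycles for any message that crosses between chip 0 and chip 1
# (this value is stated in the text).
CHIP_CROSSING_PENALTY = 20

# Only network messages can cross a chip boundary.
NETWORK_EVENTS = {"req_msg", "data_msg"}


@dataclass(frozen=True)
class Latencies:
    """One field per latency parameter named in the list above (in cycles)."""
    rcv_data: int      # cache controller receives data and updates the cache
    send_msg: int      # controller forwards a request or answers an invalidate
    inv: int           # additional cost per invalidate the memory controller sends
    read_memory: int   # memory controller reads memory and sends a data message
    write_memory: int  # memory controller writes data to memory, then sends Ack
    req_msg: int       # network latency of a non-data message
    data_msg: int      # network latency of a data message


def path_latency(lat, path):
    """Sum the latencies of (event, crosses_chip) pairs on a critical path."""
    total = 0
    for event, crosses_chip in path:
        total += getattr(lat, event)
        if crosses_chip:
            if event not in NETWORK_EVENTS:
                raise ValueError(f"{event} is not a network message")
            total += CHIP_CROSSING_PENALTY
    return total


# PLACEHOLDER values, chosen only to make the arithmetic easy to follow.
example = Latencies(rcv_data=1, send_msg=2, inv=3, read_memory=4,
                    write_memory=5, req_msg=6, data_msg=7)

# Assumed critical path for a read miss serviced by memory on the same chip.
read_miss_path = [("req_msg", False), ("read_memory", False),
                  ("data_msg", False), ("rcv_data", False)]

# The same path when both messages cross the chip boundary.
remote_read_miss_path = [("req_msg", True), ("read_memory", False),
                         ("data_msg", True), ("rcv_data", False)]

print(path_latency(example, read_miss_path))         # 6 + 4 + 7 + 1 = 18
print(path_latency(example, remote_read_miss_path))  # 18 + 2 * 20 = 58
```

Replacing the placeholder values with those from the latency figure, and the path with the events the protocol actually requires for a given operation, yields the latency observed by the requesting processor.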

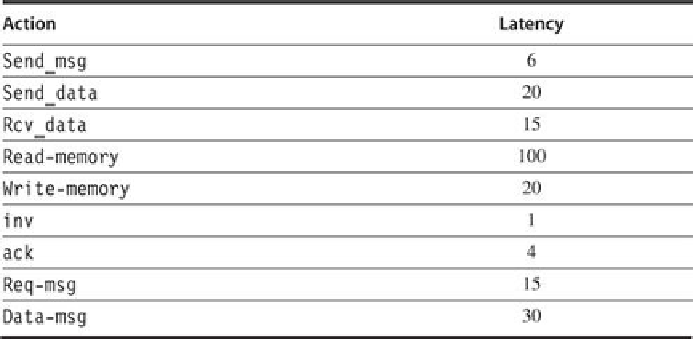

FIGURE 5.41 Directory coherence latencies.

Consider an implementation with the performance characteristics summarized in Figure 5.41. For the sequences of operations below, the cache contents of Figure 5.38, and the directory protocol above, what is the latency observed by each processor node?

a. [20] <5.4> P0,0: read 100

b. [20] <5.4> P0,0: read 128

c. [20] <5.4> P0,0: write 128 <-- 68

d. [20] <5.4> P0,0: write 120 <-- 50

e. [20] <5.4> P0,0: write 108 <-- 80

5.16 [20] <5.4> In the case of a cache miss, both the switched snooping protocol described earlier and the directory protocol in this case study perform the read or write operation as soon as possible. In particular, they do the operation as part of the transition to the stable state, rather than transitioning to the stable state and simply retrying the operation. This
