Image Processing Reference


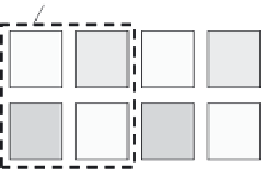

system in which only one image sensor is used, is described. In this system, a color filter array (CFA) is arranged on a sensor. Figure 1.7 shows the Bayer CFA arrangement,⁷ which is by far the most commonly used CFA, with a repeating unit (pitch) of 2 × 2 pixels. The unit is composed of the three primary colors: red (R), green (G), and blue (B). Each pixel is covered by a filter of only one color. That is, one pixel for color imaging consists of a single-color filter and the sensing part formed under it. Since the human eye has the highest sensitivity to green, the G filter is arrayed in a checkerboard pattern* so that it contributes most to the spatial resolution. Red and blue are arranged between the green filters on alternate lines. With this configuration, each pixel carries information on only one color among R, G, and B. Although the wavelength distribution is continuous and analog, the color information is restricted to only three types. Since identifying a pixel also identifies its color, the wavelength information has the color coordinate built into the system along with the space information. Denoting a coordinate point (R, G, or B) in three-dimensional space as $c$, the output from pixel $k$ and color $l$ can be expressed as $S(r_k, c_l)$. The set of signals from each pixel and color coordinate constitutes the color still image information. Because a pixel and its color are specified at the same time in a single-sensor color system, $k$ and $l$ are immediately identified. The information on the physical quantity “wavelength” is replaced by the perception “color.” This system makes use of the perceptual mechanism of color in the human eye and brain, which will be discussed in detail in Section 6.4.
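The one-color-per-pixel sampling described above can be sketched in a few lines of Python. This is only an illustrative sketch: the function names `bayer_color` and `mosaic` are hypothetical, the pixel representation (a dict per pixel) is an assumption made for readability, and the layout is taken from the 4 × 4 pattern of Figure 1.7 (rows "G R G R" and "B G B G" alternating).

```python
# Minimal sketch of Bayer CFA sampling (hypothetical helper names).
# Layout assumed from Figure 1.7: rows "G R G R" and "B G B G" alternate.

def bayer_color(row, col):
    """Return the filter color ('R', 'G', or 'B') over pixel (row, col)."""
    if (row + col) % 2 == 0:
        return "G"  # green occupies the checkerboard positions
    # Remaining positions: red on even lines, blue on odd lines.
    return "R" if row % 2 == 0 else "B"


def mosaic(rgb_image):
    """Sample a full-color image through the CFA.

    rgb_image: list of rows; each pixel is a dict {'R': .., 'G': .., 'B': ..}.
    Returns rows of (color, value) pairs. Each pixel keeps one color only,
    so choosing the pixel (index k) also fixes the color (index l).
    """
    sampled = []
    for r, line in enumerate(rgb_image):
        sampled_line = []
        for c, pixel in enumerate(line):
            color = bayer_color(r, c)
            sampled_line.append((color, pixel[color]))
        sampled.append(sampled_line)
    return sampled


if __name__ == "__main__":
    # Print the 4 x 4 filter layout.
    for r in range(4):
        print(" ".join(bayer_color(r, c) for c in range(4)))
```

Note that half of the pixels in this layout are green and a quarter each are red and blue, which matches the text's remark that green contributes most to the spatial resolution.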

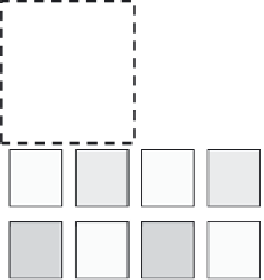

1.2.3 Color Moving Images

The image information of color moving images requires all four factors (light intensity, space, wavelength, and time), as previously discussed. Almost all moving images are achieved by repeatedly capturing and reproducing still pictures in succession, as shown in Figure 1.8. Thus, the basis of moving images is still images. Despite the name “still” images, their light intensity information is not that of an infinitesimal instant, but information integrated over an exposure period of a certain length. This operation mode is called the integration mode. In this mode, the signal amount is increased by integrating the signal charges generated throughout the exposure period. Since sensitivity can be drastically improved in this way, almost all image sensors employ the integration mode.†
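As a rough illustration of the integration mode, the sketch below accumulates a charge generation rate over each exposure period and repeats this for successive frames. The names `integrate` and `capture_frames`, the rate function, and the numerical integration by the midpoint rule are all assumptions made for this example; they are not taken from the text, and units are arbitrary.

```python
# Minimal sketch of integration-mode capture (hypothetical names and model).

def integrate(charge_rate, t_start, exposure, steps=1000):
    """Approximate the charge accumulated from t_start to t_start + exposure.

    charge_rate: function t -> charge generated per unit time (assumed model)
    Uses the midpoint rule with `steps` sub-intervals.
    """
    dt = exposure / steps
    total = sum(charge_rate(t_start + (i + 0.5) * dt) for i in range(steps))
    return total * dt


def capture_frames(charge_rate, frame_period, exposure, n_frames):
    """Capture n_frames still images in succession, one per frame period.

    Each frame's signal is the charge integrated over its own exposure
    period, not an instantaneous sample.
    """
    return [
        integrate(charge_rate, k * frame_period, exposure)
        for k in range(n_frames)
    ]


if __name__ == "__main__":
    # Constant illumination: doubling the exposure roughly doubles the signal.
    constant = lambda t: 100.0
    print(integrate(constant, 0.0, 10.0))
    print(integrate(constant, 0.0, 20.0))
    # Slowly brightening scene captured as three successive frames.
    print(capture_frames(lambda t: 100.0 + 10.0 * t, 1.0, 0.5, 3))
```

Under constant illumination the accumulated signal grows in proportion to the exposure length, which is the sensitivity gain the paragraph attributes to the integration mode.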

[Figure 1.7: a 4 × 4 section of the Bayer array, with rows G R G R / B G B G / G R G R / B G B G and one 2 × 2 block marked “One unit.”]

FIGURE 1.7 Example of the digitization of wavelength information: Bayer color filter array arrangement.

* The relationship between the arrays will be discussed in Section 7.2.

† “Almost all image sensors” means the sensors with integration mode.