Image Processing Reference

1.2 Image Sensor Output and Structure of Image Signal

In this section, the structure of an image signal captured by image sensors is discussed.

1.2.1 Monochrome Still Images

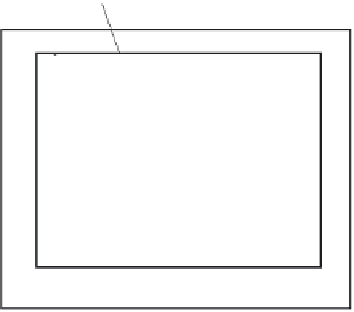

As discussed in Section 1.1, the only image information required for monochrome still images is light intensity and space (position). The basic device configuration of image sensors is shown in Figure 1.4. Image sensors have an image area on which optical images are focused and converted to image signals for output. In the image area, unit cells called pixels are arranged in a matrix in a plane. Each pixel has a sensor part, typified by a photodiode, which absorbs incident light and generates a quantity of signal charge that depends on the light intensity. Thus, the light intensity information for a pixel is obtained at each sensor part. Figure 1.5b shows a partially enlarged image of Figure 1.5a, and the image signal of the same area captured by image sensors is shown in Figure 1.5c. The density of each rectangular block is the output of the corresponding pixel, that is, the light intensity information $S(x_i, y_j)$ at the coordinate point $(x_i, y_j)$ of that pixel. Expressing the two-dimensional coordinate point $(x_i, y_j)$ as $r_k$, the signal can be written as $S(r_k)$. The set of $S(r_k)$ at all $r_k$ in the image area makes up the information for one monochrome still image.
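The relation between the pixel coordinate $(x_i, y_j)$, the single label $r_k$, and the set of samples $S(r_k)$ can be illustrated with a minimal Python sketch. The image size, the row-major ordering used to number the pixels, and the helper names `pixel_index`, `pixel_coords`, and `intensity` are all assumptions made for illustration; the text does not specify how $k$ is assigned.

```python
# Hypothetical sketch: a monochrome still image as the set of samples S(r_k).
# WIDTH, HEIGHT, the row-major numbering, and the sample value are assumed.

WIDTH, HEIGHT = 4, 3  # number of pixels along x and y (illustrative values)


def pixel_index(i, j, width=WIDTH):
    """Map the pixel coordinate (x_i, y_j) to a single index k (row-major, assumed)."""
    return j * width + i


def pixel_coords(k, width=WIDTH):
    """Inverse mapping: recover the pixel coordinate (i, j) from the index k."""
    j, i = divmod(k, width)
    return i, j


# S[k] holds the light intensity picked up at r_k; one value per pixel.
S = [0] * (WIDTH * HEIGHT)
S[pixel_index(2, 1)] = 200  # a bright pixel at (x_2, y_1)


def intensity(i, j):
    """Return S(x_i, y_j) by looking up the corresponding S(r_k)."""
    return S[pixel_index(i, j)]
```

Under these assumptions, the whole list `S` plays the role of the set of $S(r_k)$ over the image area: the positions are implied by the index, so only intensity values are stored.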

Dividing the image area into pixels that have a finite area, as shown in Figure 1.6, means fixing the area size and the coordinate point at which light intensity information is picked up. The figure also shows that the continuous analog quantity representing the position information is replaced by discrete coordinate points. That is, $x_i$ and $y_j$ cannot take arbitrary values; they are built-in coordinate points fixed by the imaging system. This is the digitization of space coordinates. With this digitization, the two-dimensional space information can be treated as predetermined coordinate points, so only the light intensity information needs to be obtained. Therefore, the three-dimensional information of a monochrome still image is compressed into one dimension. Since the overall total

FIGURE 1.4
Basic device configuration of image sensors. Labeled parts: image sensor chip, image area, pixel, sensor part (photodiode), scanning function part, output part.