
We chose a landing pattern consisting of two colored discs, which is very easy for an inexperienced user to prepare. The user can print any two colored discs; it only matters that the colors differ sufficiently from each other and from the colors typically found in the landing zone. This makes the pattern much easier to use in practice than a specially crafted, unalterable unique pattern. Besides this easy customization, a two-disc pattern has a further advantage: it also defines the horizontal orientation.
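Because the two discs have different colors, the vector from one disc center to the other has a well-defined direction, whereas two identical discs would leave a 180° ambiguity. The following minimal Python sketch shows how a heading could be derived from the two detected centers and compared with a desired one. The function names, the degree convention, and the image frame (x to the right, y downward) are illustrative assumptions, not the authors' implementation.

```python
import math


def pattern_angle(first_center, second_center):
    """Direction (degrees in [0, 360)) of the vector from the first disc to the second.

    Assumed frame: image coordinates, x to the right, y downward.
    """
    dx = second_center[0] - first_center[0]
    dy = second_center[1] - first_center[1]
    return math.degrees(math.atan2(dy, dx)) % 360.0


def heading_error(current_angle, desired_angle):
    """Signed difference desired - current, wrapped to (-180, 180] degrees."""
    diff = (desired_angle - current_angle) % 360.0
    return diff - 360.0 if diff > 180.0 else diff
```

Wrapping the error keeps a yaw correction on the short way around, e.g. going from 350° to 10° yields +20° rather than -340°.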

To preprocess the image, we simply apply HSV filtering, image erosion, and image dilation (these basic image preprocessing algorithms are readily available in the OpenCV library and described in [7]). Then a blob detection algorithm (described in [4]) identifies each disc in the image, and the center of the disc pair is calculated to set the target landing point.
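The pipeline above can be sketched in plain Python so that it runs without OpenCV. The sketch assumes the image is a list of rows of (H, S, V) tuples and that each disc color is given as an inclusive (lower, upper) HSV range; erosion and dilation use a 3×3 neighborhood, and a simple connected-component labeling (largest 4-connected region) stands in for the blob detector of [4], which may work differently. All function names are hypothetical. In OpenCV, the first steps would correspond to cv2.cvtColor, cv2.inRange, cv2.erode and cv2.dilate.

```python
from collections import deque


def hsv_mask(image, lower, upper):
    """1 where every H, S, V channel lies within the inclusive [lower, upper] range, else 0."""
    return [[int(all(lo <= ch <= hi for ch, lo, hi in zip(px, lower, upper))) for px in row]
            for row in image]


def _morph(mask, require_all):
    """3x3 erosion (require_all=True) or dilation (False); the window is clipped at borders."""
    rows, cols = len(mask), len(mask[0])
    out = [[0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            window = [mask[y][x]
                      for y in range(max(r - 1, 0), min(r + 2, rows))
                      for x in range(max(c - 1, 0), min(c + 2, cols))]
            out[r][c] = int(all(window) if require_all else any(window))
    return out


def erode(mask):
    return _morph(mask, require_all=True)


def dilate(mask):
    return _morph(mask, require_all=False)


def largest_blob_centroid(mask):
    """Centroid (x, y) of the largest 4-connected region of 1s, or None if the mask is empty."""
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    best = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            seen[r][c] = True
            queue, blob = deque([(r, c)]), []
            while queue:  # breadth-first flood fill of one region
                y, x = queue.popleft()
                blob.append((y, x))
                for ny, nx in ((y + 1, x), (y - 1, x), (y, x + 1), (y, x - 1)):
                    if 0 <= ny < rows and 0 <= nx < cols and mask[ny][nx] and not seen[ny][nx]:
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            if len(blob) > len(best):
                best = blob
    if not best:
        return None
    n = len(best)
    return (sum(x for _, x in best) / n, sum(y for y, _ in best) / n)


def landing_point(image, range_a, range_b):
    """Midpoint of the two disc centers, or None if either disc is not found."""
    centers = []
    for lower, upper in (range_a, range_b):
        mask = dilate(erode(hsv_mask(image, lower, upper)))  # erosion then dilation drops speckles
        centers.append(largest_blob_centroid(mask))
    if centers[0] is None or centers[1] is None:
        return None
    (ax, ay), (bx, by) = centers
    return ((ax + bx) / 2, (ay + by) / 2)
```

Erosion followed by dilation (a morphological opening) removes isolated pixels that happen to match a disc color while roughly restoring the size of the real discs. A red hue range may wrap around the end of the hue axis, which this sketch does not handle.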

The drone is controlled using four independent standard

proportional-integral-

derivative (PID) controllers

[2] fed by the distance to the target. The input for each

PID controller is the coordinate part of the target distance in its specific direction and

the output is one control value for the AD.Drone low-level interface functions (the

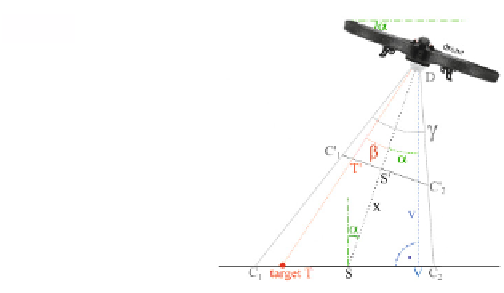

pitch, roll, yaw, and vertical speed). The distance calculation is shown on Figure 1.

More details about the algorithms and their implementation can be found in [1].
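A minimal Python sketch of four independent controllers, one per low-level command, follows. The gains, the clamping of outputs to a normalized range, the axis names, and the mapping of error components to commands are assumptions for illustration; the actual tuning and the AR.Drone command conventions (signs, units) are not given in this section.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional


@dataclass
class PID:
    """Textbook PID controller with output clamping (gains are placeholders)."""
    kp: float
    ki: float
    kd: float
    limit: float = 1.0  # assumed normalized command range [-limit, limit]
    _integral: float = field(default=0.0, init=False)
    _prev_error: Optional[float] = field(default=None, init=False)

    def update(self, error: float, dt: float) -> float:
        self._integral += error * dt
        # No derivative term on the first sample: there is no previous error yet.
        derivative = 0.0 if self._prev_error is None else (error - self._prev_error) / dt
        self._prev_error = error
        out = self.kp * error + self.ki * self._integral + self.kd * derivative
        return max(-self.limit, min(self.limit, out))


def control_step(controllers: Dict[str, PID], errors: Dict[str, float], dt: float) -> Dict[str, float]:
    """Run each axis controller on its own error component, independently of the others."""
    return {axis: pid.update(errors[axis], dt) for axis, pid in controllers.items()}


# Hypothetical axis mapping: forward offset -> pitch, lateral offset -> roll,
# heading error -> yaw, altitude error -> vertical speed. Gains are arbitrary.
controllers = {
    "pitch": PID(0.5, 0.0, 0.1),
    "roll": PID(0.5, 0.0, 0.1),
    "yaw": PID(1.0, 0.0, 0.0),
    "vertical_speed": PID(0.8, 0.05, 0.0),
}
```

Keeping the four loops independent makes each one simple to tune, at the cost of ignoring coupling between axes (for example, tilting the drone also shifts the camera view).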

Camera:

Side view:

Fig. 1.

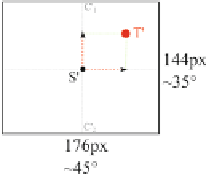

Distance to the target calculation: dist(T,V) = v * tan(α+ʲ),

where ʲ = arctan( tan(ʳ/2) * pixel_dist(T',S') / pixel_dist(C'

1

,S') )
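The distance formula of Figure 1 can be evaluated directly. The following sketch assumes angles in radians and reads the symbols as follows (assumptions, since the text does not define them): v is the drone's altitude above the landing zone, α the camera's tilt from vertical, γ the camera's field of view along the measured axis, S' the image center, and C'_1 a point on the image border, so that pixel_dist(C'_1,S') is half the image size in pixels.

```python
import math


def target_distance(v: float, alpha: float, gamma: float,
                    pixel_dist_target: float, pixel_dist_edge: float) -> float:
    """Horizontal distance dist(T, V) following Fig. 1 (all angles in radians).

    v                 -- altitude of the drone (assumed meaning)
    alpha             -- camera tilt from vertical (assumed meaning)
    gamma             -- camera field of view along the measured axis (assumed meaning)
    pixel_dist_target -- pixel_dist(T', S'): target image point to image center
    pixel_dist_edge   -- pixel_dist(C'_1, S'): image border point to image center
    """
    # Angular offset of the target from the optical axis.
    beta = math.atan(math.tan(gamma / 2) * pixel_dist_target / pixel_dist_edge)
    return v * math.tan(alpha + beta)
```

For example, with a straight-down camera (α = 0), γ = 90° and the target at the image border, β = 45°, so the horizontal distance equals the altitude v.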

A typical autonomous landing session proceeds as follows. The user sets the HSV parameters of the two color blobs in the image and optionally selects the horizontal angle (yaw) of the drone with respect to these two blobs. At any later time, the user can invoke the “land on target” action to let the drone land automatically, or the “lock on target” action to make it hover above the landing point at the current altitude. When the landing pattern disappears from the camera view, the system can optionally attempt to find it again by flying in the direction in which the pattern was lost (“estimate target”).
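The interplay of the three actions can be summarized as a small supervisor that runs once per camera frame. This is a hypothetical sketch: the class and method names are invented, the returned (dx, dy, descend) tuple stands in for the inputs to the PID controllers, and the choices to descend only while the pattern is visible and to hold altitude while searching are assumptions not stated in the text.

```python
from enum import Enum, auto


class Mode(Enum):
    LAND = auto()  # "land on target"
    LOCK = auto()  # "lock on target": hover at the current altitude


class LandingSupervisor:
    def __init__(self, mode, estimate_target=True):
        self.mode = mode
        self.estimate_target = estimate_target  # the optional "estimate target" behavior
        self.last_offset = None  # last horizontal offset at which the pattern was seen

    def step(self, offset):
        """offset: (dx, dy) to the landing point, or None if the pattern is not visible."""
        if offset is not None:
            self.last_offset = offset
            # Assumption: descend only in LAND mode and only while the pattern is visible.
            return (offset[0], offset[1], self.mode is Mode.LAND)
        if self.estimate_target and self.last_offset is not None:
            # Pattern lost: keep flying toward where it was last seen, holding altitude.
            return (self.last_offset[0], self.last_offset[1], False)
        return (0.0, 0.0, False)  # nothing to follow: hover in place
```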