
2.6.4.3 What to Do in Practice?

We summarize here the model selection procedure that has been discussed.

For a given complexity (for neural networks, models with a given number of hidden neurons):

• Train models with all available data, starting from different parameter initializations.
• Compute the rank of the Jacobian matrix of each model thus generated, and discard the models whose Jacobian matrix does not have full rank.
• For each surviving model, compute its virtual leave-one-out score $E_p$ and its parameter $\mu$.
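The three steps above can be sketched for a single trained model as follows. This is a minimal Python/NumPy sketch, not the book's own code, and it assumes the definitions introduced earlier in the section: $Z$ is the $N \times q$ Jacobian matrix (derivatives of the model output with respect to its $q$ parameters, evaluated at the $N$ training examples), the leverages $h_{ii}$ are the diagonal elements of $Z(Z^T Z)^{-1} Z^T$, the virtual leave-one-out score is $E_p = \sqrt{\frac{1}{N}\sum_i \left(\frac{R_i}{1-h_{ii}}\right)^2}$ where $R_i$ is the training residual, and $\mu = \frac{1}{N}\sum_i \sqrt{\frac{N h_{ii}}{q}}$. The helper name `model_diagnostics` is hypothetical. For a model that is linear in its parameters, the Jacobian is simply the design matrix and the virtual leave-one-out residuals are exact, which makes it a convenient check.

```python
import numpy as np


def model_diagnostics(Z, residuals):
    """Hypothetical helper: full-rank check, leverages, virtual LOO score E_p and mu.

    Z         : (N, q) Jacobian matrix of the trained model (outputs w.r.t. parameters)
    residuals : (N,) training residuals R_i
    Returns None if the Jacobian is rank-deficient (the model is discarded),
    otherwise a dict with the leverages, E_p and mu.
    """
    Z = np.asarray(Z, dtype=float)
    residuals = np.asarray(residuals, dtype=float)
    N, q = Z.shape

    # Discard models whose Jacobian matrix does not have full rank q.
    if np.linalg.matrix_rank(Z) < q:
        return None

    # Leverages h_ii = diag(Z (Z^T Z)^-1 Z^T), computed from a thin SVD:
    # with Z = U S V^T the hat matrix is U U^T, so h_ii = squared norm of row i of U.
    U, _, _ = np.linalg.svd(Z, full_matrices=False)
    h = np.sum(U**2, axis=1)

    # Virtual leave-one-out residuals R_i / (1 - h_ii); assumes every h_ii < 1.
    loo_residuals = residuals / (1.0 - h)
    E_p = np.sqrt(np.mean(loo_residuals**2))

    # Assumed definition: mu = (1/N) sum_i sqrt(N h_ii / q).
    # Since sum_i h_ii = q, mu <= 1, with equality when all h_ii = q/N.
    mu = np.mean(np.sqrt(N * h / q))
    return {"leverages": h, "E_p": E_p, "mu": mu}


# Usage on a model that is linear in its parameters (illustrative data):
# the Jacobian is the design matrix, so the virtual LOO residuals are exact.
rng = np.random.default_rng(0)
x = np.linspace(-1.0, 1.0, 20)
y = np.sin(3 * x) + 0.1 * rng.standard_normal(x.size)
Z = np.column_stack([np.ones_like(x), x, x**2])  # q = 3 parameters
w, *_ = np.linalg.lstsq(Z, y, rcond=None)
diag = model_diagnostics(Z, y - Z @ w)

# Brute-force leave-one-out, for comparison.
errs = []
for i in range(x.size):
    keep = np.arange(x.size) != i
    w_i, *_ = np.linalg.lstsq(Z[keep], y[keep], rcond=None)
    errs.append(y[i] - Z[i] @ w_i)

# The two values agree up to rounding error.
print(diag["E_p"], np.sqrt(np.mean(np.square(errs))))
```

For a neural network the same function applies unchanged; only the way $Z$ is obtained differs (derivatives computed at the trained weights), and the leave-one-out estimate is then approximate rather than exact.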

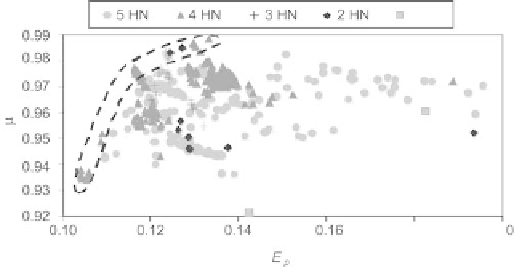

Repeat this procedure for models of increasing complexity. When the virtual leave-one-out scores become too large or the parameters $\mu$ too small, terminate the procedure and select the model among the candidates obtained so far. It is convenient to represent each candidate model in the ($E_p$, $\mu$) plane, as shown in Fig. 2.29 for the previous example. The model should be selected within the outlined area; the choice within that area depends on the designer's strategy:

• If the training set cannot be expanded, select, among the models that have the smallest $E_p$, the model with the largest $\mu$.
• If the training set can be expanded through further measurements, select a slightly overfitted model and perform further experiments in the areas where examples have large leverages (or large confidence intervals); in that case, select the model with the smallest virtual leave-one-out score $E_p$, even though it may not have the largest $\mu$.
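The termination rule and the two selection strategies above can be sketched as follows. This is a minimal sketch under stated assumptions, not the book's procedure verbatim: the "outlined area" of the ($E_p$, $\mu$) plane is modeled by two hypothetical thresholds `ep_max` and `mu_min`; "too large or too small" is interpreted as "no surviving model of the current complexity lies in that area"; and "the models that have the smallest $E_p$" is interpreted as those within a hypothetical relative tolerance `ep_tol` of the best score. The candidate values in the usage part are made up for illustration and are not the data of Fig. 2.29.

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    hidden: int   # complexity, e.g. number of hidden neurons
    E_p: float    # virtual leave-one-out score
    mu: float     # leverage-distribution parameter mu


def in_area(c, ep_max, mu_min):
    # Hypothetical "outlined area": small enough E_p and large enough mu.
    return c.E_p <= ep_max and c.mu >= mu_min


def collect(candidates_by_complexity, ep_max, mu_min):
    """Scan complexities in increasing order; stop at the first complexity
    none of whose surviving models lies in the acceptable area."""
    kept = []
    for hidden in sorted(candidates_by_complexity):
        good = [c for c in candidates_by_complexity[hidden]
                if in_area(c, ep_max, mu_min)]
        if not good:
            break  # scores too large or mu too small: terminate
        kept.extend(good)
    return kept


def select(kept, can_expand, ep_tol=0.05):
    """Assumes at least one candidate was kept."""
    if can_expand:
        # Training set can be expanded: smallest E_p, even if its mu is not the largest.
        return min(kept, key=lambda c: c.E_p)
    # Training set fixed: among models whose E_p is within ep_tol (relative)
    # of the smallest one, take the model with the largest mu.
    best_ep = min(c.E_p for c in kept)
    near_best = [c for c in kept if c.E_p <= best_ep * (1 + ep_tol)]
    return max(near_best, key=lambda c: c.mu)


# Usage with made-up values (survivors of the Jacobian rank check only).
cands = {
    2: [Candidate(2, 0.30, 0.85), Candidate(2, 0.28, 0.80)],
    3: [Candidate(3, 0.20, 0.75), Candidate(3, 0.21, 0.82)],
    4: [Candidate(4, 0.19, 0.60), Candidate(4, 0.25, 0.70)],
    5: [Candidate(5, 0.18, 0.40)],  # mu too small: the scan stops here
}
kept = collect(cands, ep_max=0.35, mu_min=0.55)
print(select(kept, can_expand=True).hidden)               # 4
print(select(kept, can_expand=False, ep_tol=0.1).hidden)  # 3
```

With a fixed training set, the sketch trades a slightly larger $E_p$ for a more even distribution of leverages (larger $\mu$); when new measurements can be taken, it keeps the lowest $E_p$ and relies on further experiments in high-leverage regions to correct the mild overfitting.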

2.6.4.4 Experimental Planning

After designing a model along the guidelines described in the previous sections, it may be necessary to expand the database from which the model was

Fig. 2.29. Assessment of the quality of a model in the ($E_p$, $\mu$) plane
