Information Technology Reference

In-Depth Information

1

0.9

0

0.8

-10

0.7

-20

0.6

-30

value

0.5

-40

0.4

-50

-60

0.3

0.9

1

0.7

0.8

0.5

0.6

0.2

y

0.4

1

0.2

0.3

0.9

0.8

0.7

0.1

0.6

0.5

0.1

0.4

0.3

0

0.1

0.2

0

x

0

0

0.1

0.2

0.3

0.4

0.5

0.6

0.7

0.8

0.9

1

x

(a)

(b)

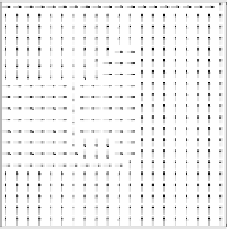

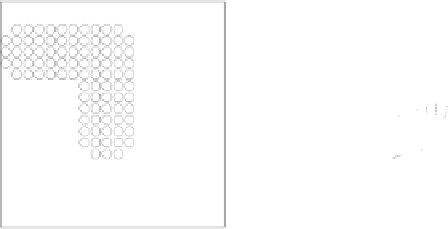

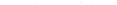

Fig. 2.2.

Optimal policy and value function for a discretised version of the “pudd-

leworld” task [208]. The agent is located on a 1x1 square and can perform steps of

size 0.05 into either of four directions. Each step that the agent performs results in a

reward of -1, expect for actions that cause the agent to end up in a puddle, resulting

in a reward of -20. The zero-reward absorbing goal state is in the upper right corner of

the square. Thus, the task is to reach this state in the smallest number of steps while

avoiding the puddle. The circles in (a) show the location of the puddle. (b) illustrates

the optimal value function for this task, which gives the maximum expected sum of

rewards for each state, and clearly shows the impact of the high negative reward of the

puddle. Knowing this value function allows constructing the optimal policy, as given by

the arrows in (a), by choosing the action in each state that maximises the immediate

reward and the value of the next state.

by the previous hidden state and the performed action. For each such state

transitions the agent receives a scalar

reward

or

payoff

that can depend on the

previous hidden and observable state and the chosen action. The aim of the agent

is to learn which actions to perform in each observed state (called the

policy

)

such that the received reward is maximised in the long run.

Such a task definition is known as a Partially Observable Markov Decision

Process (POMDP) [122]. Its variables and their interaction is illustrated in

Fig. 2.1(a). It is able to describe a large number of seemingly different pro-

blems types. Consider, for example, a rat that needs to find the location of food

in a maze: in this case the rat is the agent and the maze is the environment, and

a reward of -1 is given for each movement that the rat performs until the food

is found, which leads the rat to minimise the number of required movements to

reach the food. A game of chess can also be described by a POMDP, where the

white player becomes the agent, and the black player and the chess board define

the environment. Further examples include path planning, robot control, stock

market prediction, and network routing.

While the POMDP framework allows the specification of complex tasks, fin-

ding their solution is equally complicated. Its diculty arises mostly due to the

agent not having access to the true state of the environment. Thus, most of the

recent work in LCS has focused on a special case of POMDP problems that treat

the hidden and observable states of the environment as equivalent. Such problems

Search WWH ::

Custom Search