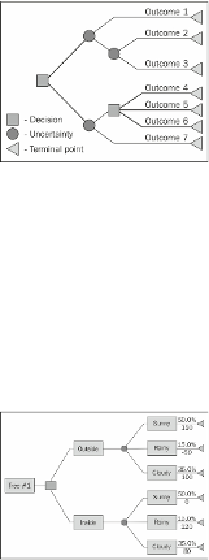

The following figure shows the standard decision tree representation using relevant

notations:

In the image below, the decision tree representation helps analyze whether a street vendor should set up his stall outdoors or indoors depending on the weather conditions. This decision tree is based on a forecast that gives a 50 percent probability of a sunny day, a 15 percent probability of rain, and a 35 percent probability of a cloudy day. A rough estimate of what the vendor would earn in a day with his stall outside versus inside is as shown. The expected earnings are computed by weighting each outcome's earnings by its probability, and come to $102.5 for a stall outside versus $46 for a stall inside. It can be concluded that the vendor should set up his stall outside and hope that it does not rain that day.
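The expected-earnings comparison above can be sketched in a few lines of Python. The probabilities come from the text; the per-weather earnings are hypothetical values, chosen only so that the totals agree with the $102.5 and $46 quoted above, and are not the values shown in the figure. The names `expected_earnings` and `best_option` are likewise illustrative.

```python
# Weather probabilities as stated in the text.
WEATHER_PROBABILITY = {"sunny": 0.50, "rain": 0.15, "cloudy": 0.35}

# HYPOTHETICAL daily earnings per weather outcome (not taken from the figure).
# They are picked only so the expected values match the totals in the text.
HYPOTHETICAL_EARNINGS = {
    "outside": {"sunny": 150.0, "rain": 20.0, "cloudy": 70.0},
    "inside": {"sunny": 40.0, "rain": 80.0, "cloudy": 40.0},
}


def expected_earnings(payoffs, probabilities):
    """Probability-weighted sum of the earnings for one option."""
    return sum(probabilities[w] * payoffs[w] for w in probabilities)


def best_option(earnings_by_option, probabilities):
    """Return the option with the highest expected earnings and all expectations."""
    expected = {
        option: expected_earnings(payoffs, probabilities)
        for option, payoffs in earnings_by_option.items()
    }
    return max(expected, key=expected.get), expected


if __name__ == "__main__":
    choice, expected = best_option(HYPOTHETICAL_EARNINGS, WEATHER_PROBABILITY)
    for option, value in expected.items():
        print(f"{option}: {value:.2f}")
    print("recommended:", choice)
```

Each branch of the tree contributes its earnings multiplied by its probability, and the option with the larger sum is the one the tree recommends.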

In decision trees, to identify the attributes that matter most for decision making, we calculate measures such as entropy, Gini index, information gain, and reduction in variance (we will not go into the details of these techniques in this topic).
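For orientation only, the following is a minimal sketch of three of these measures using their standard textbook definitions. The function names and the toy labels are assumptions made for illustration and do not come from the text.

```python
from collections import Counter
from math import log2


def entropy(labels):
    """Shannon entropy (in bits) of a list of class labels."""
    total = len(labels)
    return -sum((n / total) * log2(n / total) for n in Counter(labels).values())


def gini_index(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    total = len(labels)
    return 1.0 - sum((n / total) ** 2 for n in Counter(labels).values())


def information_gain(parent, children):
    """Entropy of the parent minus the size-weighted entropy of its children."""
    total = len(parent)
    weighted = sum(len(child) / total * entropy(child) for child in children)
    return entropy(parent) - weighted


if __name__ == "__main__":
    # Toy labels (hypothetical): a split that separates the classes perfectly.
    parent = ["yes", "yes", "no", "no"]
    children = [["yes", "yes"], ["no", "no"]]
    print("entropy:", entropy(parent))
    print("gini:", gini_index(parent))
    print("information gain:", information_gain(parent, children))
```

An attribute whose split yields a larger information gain (or a lower weighted Gini index) separates the classes better and is therefore a stronger candidate for a decision node.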

The two most popular techniques that help identify the most relevant attribute in a given data set are:

• CART (classification and regression trees): These trees use a binary representation, which means every node can have a maximum of two outcomes. The Gini index is used to identify the impacting attributes.

• C4.5: Decision trees that use this technique can have more than two outcomes and thus multiple binary trees can be created. Due to the inherent
