Case Study 7: The App Did It

Not long ago, I was performing some incident response that might have had to do with some malicious activity. As is very often the case as a corporate consultant, my initial call with respect to the incident came from the customer, and one common factor among most of my customers is that they are not experienced incident responders. In this case, the issue involved repeated domain name lookups for "suspicious" domains—suspicious in the sense that at least one of the domains appeared to be in China. The customer had Googled the domain name and found that it was associated with an application vulnerability identified in spring 2008. With that, and little else, they called us.

Upon arriving on-site, I found that a specific system had been identified as being the origination point of at least some of the suspicious DNS traffic. This system apparently had been configured with a static IP address (as opposed to using DHCP), so it was relatively easy for the customer to track down and obtain from their employee. Unfortunately, the only steps taken were to shut down the system and remove it from the network; neither the contents of physical memory nor other volatile data were collected from the system prior to shutting it down. Another wrinkle that was thrown into all of this was that when the employee had been informed that there was suspicious traffic originating from his system and that the system would have to be examined, he had reportedly stated that he was going to "securely delete stuff" from the system. At this point, the goals of my examination were twofold: (1) determine if the employee had, in fact, installed and used a secure deletion utility and (2) determine the source of the suspicious DNS lookups.

My first step was to acquire an image of the employee’s desktop hard drive. While this was going on, I attempted to collect information about any logs that may be available from the network. I had been told that a management report illustrating the most frequent DNS lookups had alerted them to the situation, and that there were some logs from a botnet detection appliance that illustrated some of the DNS lookups; however, noticeably absent was any reference to the Chinese domain name that had been the customer’s primary concern during the call for help. I noticed that the network logs showed DNS lookups in alphabetical order, along with time stamps.

Once the acquisition of the hard drive image had completed and been verified, and I had ensured that all of my documentation was in order, I opened my case notes, mounted the acquired image as a read-only file system on my analysis laptop, and initiated a scan with an antivirus scanning application. As part of my process for data reduction and attempting to locate what amounted to an amorphous description of "something suspicious," I scanned the mounted image with several antivirus applications, including targeted microscanners to look for specific malware. My scans did reveal a number of files that may have been malware or remnants of malware, but the file metadata (MAC times) and contents seemed to indicate that these were false positives. My next step was to examine logs from the system, including the installed antivirus application logs and the MS Malicious Software Removal Tool logs. Neither indicated anything that would appear to be related to the issue at hand.

I then moved on to parsing the Windows Event Log. All three Event Logs from the system were 512 kilobytes in size, and the Security Event Log contained no records (I found through analysis of the Security Registry hive file that auditing had not been enabled). The Application Event Log revealed a number of event records generated by the antivirus application, but most important, the System Event Log showed that the system had been rebooted several times during the past two weeks. In each case, following the event record that stated that the Event Log service had started, there was another record stating that a specific antispyware application had started. I made a note of this and created a map illustrating approximate system start times based on these event records. I was able to correlate the system start times with several of the botnet appliance logs that the customer had provided; the three most complete logs (they were actually extracts from the appliance logs, illustrating activity associated only with the system in question) showed DNS lookups starting in almost direct correlation with the system starting up. In fact, the first entry in each log correlated very closely with the time that the antispyware application started. Again, however, the botnet appliance logs contained no reference to the suspicious Chinese domain.
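The correlation described above can be sketched in a few lines of Python. This is only an illustration of the idea, not the process used in the case: the function name, the five-minute window, and the sample timestamps are all hypothetical, and the sketch assumes both sets of times have already been converted to the same time zone.

```python
from datetime import datetime, timedelta

def first_lookup_after_boot(boot_times, lookup_times, window=timedelta(minutes=5)):
    """Map each boot time to the first DNS lookup seen within `window` after it.

    A boot time with no lookup inside the window maps to None.
    """
    lookups = sorted(lookup_times)
    result = {}
    for boot in sorted(boot_times):
        result[boot] = next(
            (t for t in lookups if boot <= t <= boot + window), None
        )
    return result

# Hypothetical timestamps, for illustration only
boots = [datetime(2009, 1, 5, 8, 30, 0), datetime(2009, 1, 6, 8, 45, 0)]
lookups = [
    datetime(2009, 1, 5, 8, 31, 10),
    datetime(2009, 1, 5, 9, 0, 0),
    datetime(2009, 1, 6, 12, 0, 0),
]

correlated = first_lookup_after_boot(boots, lookups)
for boot, hit in correlated.items():
    print(boot, "->", hit)
```

A boot time that maps to None is as interesting as one that maps to a lookup, since it marks a start-up for which the appliance extract shows no nearby activity.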

I then ran a search across the entire image for the suspicious Chinese domain name, casting a wide net and fully expecting to see the only results in the pagefile. However, much to my surprise, I found several references to the domain name (as well as others) in several Registry hive files (most notably the NTUSER.DAT file for all users, as well as those that were found in the Windows XP System Restore Points), as well as in the hosts file (the importance of the hosts file with respect to name resolution is discussed at http://support.microsoft.com/kb/172218). Examination of the hosts file revealed that a separate antispyware tool that had been installed on the system had added a number of entries to the file (comments in the hosts file stated that the entries were added by the application), redirecting all of them to the localhost (i.e., 127.0.0.1), effectively "blackholing" attempts to connect to these domains. Examination of the Registry hive files revealed that on the same date (based on key LastWrite times) all of the same domain names, in the same order, had also been added to Registry keys, forcing the domains into the Internet Explorer (IE) "Restricted Zone." This effectively set restrictions on what users could do via Internet Explorer, if they were able to connect to hosts within those domains.
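To make the hosts file finding concrete, here is a minimal Python sketch that lists the names a hosts file redirects to the localhost. The function name and the sample entries are hypothetical; the only details taken from the case are that the entries pointed to 127.0.0.1 and that comments in the file identified the application that added them.

```python
def blackholed_hosts(hosts_text, sink_addresses=("127.0.0.1",)):
    """Return host names that a hosts file maps to a sink address."""
    names = []
    for line in hosts_text.splitlines():
        # Drop comments (whole-line or trailing) and surrounding whitespace
        line = line.split("#", 1)[0].strip()
        if not line:
            continue
        fields = line.split()
        if fields[0] in sink_addresses:
            # One address may be followed by several names; skip localhost itself
            names.extend(n.lower() for n in fields[1:] if n.lower() != "localhost")
    return names

# Hypothetical hosts file content, for illustration only
sample_hosts = """\
# Entries added by an antispyware tool (hypothetical comment)
127.0.0.1   localhost
127.0.0.1   bad.example.com
127.0.0.1   tracker.example.net  # inline comment
10.0.0.5    intranet.example.org
"""

print(blackholed_hosts(sample_hosts))
```

Comparing the resulting list, in order, against the domains found in the Registry and in the network logs is one way to check whether they all came from the same source.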

At this point, I was relatively sure that, based on all of the information I had obtained as well as some searching on the Internet, the suspicious activity was not the result of malware (virus, worm, or spyware) on the system but rather the interaction of two antispyware applications; that is, one had modified the Registry and the hosts file to protect the system, and the other performed DNS queries for each domain name found listed in the hosts file. A posting on an Internet forum indicated that this might be the case, and I worked with the customer to perform live testing of the system on the network to verify this information. We booted the system, disabled the antispyware application services and rebooted, reenabled the services and rebooted, and even modified the hosts file to contain specific entries and rebooted. Each time the services were enabled, we saw the expected DNS traffic on the network (via a sniffer on a separate system on the same subnet); when we rebooted the system with the antispyware application services disabled, we saw no DNS domain name queries at all.

Finally, neither Registry analysis nor analysis of the contents of the Prefetch folder provided any indication that the employee had installed a secure file wiping utility on the system, let alone run one from removable media.

Using a comprehensive investigative methodology and correlating multiple, corroborating sources of data allowed us to determine the source of suspicious and potentially malicious activity. During initial response, the customer had collected only a limited amount of data and then based the assumption of malicious activity on nothing more than a Google search for a single domain name. The lack of appropriate data (i.e., full network captures, more comprehensive network log data, physical memory or portions of volatile data from the suspect system, etc.) resulted in the examination taking considerably more time, in turn resulting in higher cost to the customer. Ultimately, live testing of the system, booted on the network, allowed us to confirm that the activity was the result of a legitimate application (two, actually, interacting) and that the DNS domain name queries were not followed by attempts to connect to hosts in those domains via either UDP or TCP.

Getting Started

One question I see in public forums quite often is, How do you start your examination? Given a set of data—images acquired from multiple systems, packet captures, log files, and so on—where does an examiner start his or her examination? How do you get started?

The catchall, silver-bullet answer that I learned in six months of training at The Basic School in Quantico, Virginia (where all Marine officers receive their initial training), is, "it depends." It applied then and it applies now just as well because it’s true. Let’s say that you have an image acquired from a single system. What was the operating system running or available at the time the image was acquired? What was the platform? Was it documented? You’re probably asking why this matters—but take a look around at your tools and see which ones are capable of handling which file systems. Is the image of a Linux system? If so, is the file system within the image ext2, ext3, or ReiserFS?

Okay, okay … I know that this is a topic about Windows forensic analysis. But I hope you see my point. When starting an examination, there are a number of things that the examiner may need to take into account. One of those "things" is the file system: Do you have the right tools for opening and reviewing the acquired image? However, more important, the examiner has to consider her goals: What does she hope to obtain from the examination? What needs to be achieved through the examination of the available data? See how easy it was for me to circle back around to "it depends"?

The most important step to getting started on an examination is to understand the goals of that examination. Regardless of the environment you’re in, any examination is going to have a reason, or a purpose. If you’re law enforcement, what are you looking for? Are you attempting to locate information about a missing child or determine if the system owner was trafficking in illicit images? If you’re a consultant, before beginning your examination, you should have already met with the customer and thoroughly discussed what they hope to obtain or achieve through your examination. Even if you’re an incident responder, on the move responding to an incident, you need to understand what you’re supposed to achieve as a result of your actions prior to actually performing them. If you’re responding to a malware infection, are you attempting to determine the malware artifacts and possibly obtain a copy of the malware code to provide to your antivirus vendor? Are you responding to unusual traffic terminating at a system on your internal infrastructure? The goals of your examination or response drive your actions.

Tools & Traps…

The Goals of Incident Response

As an incident responder, one of the things I’ve noticed is that many times—in fact, increasingly more often—the initial response by the first responders on-site can expose the organization to greater risk than the incident itself.

Now, I realize that you’re probably reading and re-reading that last sentence and trying to make sense of it. After all, that’s not exactly intuitive, is it? Well, what I’ve seen is that in many cases, the first responders are IT staff, and their response activities and procedures are very IT-centric. Very often, IT staffs are tasked (by senior management) with keeping systems—e-mail servers, Internet access, and so on—up and running as their primary duties. So, if malware is discovered on a system, the IT staff’s goals are to remove it and get the affected system back into service as quickly as possible. This may mean "cleaning" the system by removing the malware, or wiping all data from the system and reinstalling everything (operating system, data, etc.) from clean media or backup, or it may mean replacing the system altogether.

The most important factor that plays into all this comes from regulatory bodies. The state of California started with SB-1386, a law requiring notification of any California resident if their personally identifiable information (thoroughly defined and documented in the text of the law) was exposed as a result of a breach or intrusion. As of this writing, several other states have similar laws, and a federal law may be on the way. Add to that the Visa PCI compliance standards, as well as HIPAA, the SEC, and other regulatory bodies, and you’ve got quite a bit of external stimulus for having a good, solid response plan. In many cases, the regulatory bodies require a response plan to be documented and reviewed in order to be compliant. On the flip side of the coin, the cost of not having that good, solid response plan can include fines as well as both the hard and soft costs of notification and public exposure of a breach and exposure of sensitive data.

The best way to explain how these two pieces of information fit together is with an example. A company gets hit with malware through a browser-borne vector and spends some time cleaning approximately two dozen infected systems. During the course of their response, they find some indications that the malware may include a keystroke logger component or a networking component, although nothing definitive is ever determined (remember, the systems were cleaned, with no root-cause analysis being performed or documented). When corporate legal counsel hears about this—after all of the systems have been cleaned and put back into service—the question then becomes, Was any sensitive data on any of the infected systems exposed? How does the IT staff answer that question? After all, their goal was to clean the systems and get them back into service to keep the business running. No data was collected in order to determine if any sensitive data—personally identifiable information such as names, addresses, and Social Security numbers, or other data such as credit card numbers—was on those systems or had been compromised as a result of the malware infection.

Where the issue of risk comes into play is that some regulatory bodies state that if you are unable to determine definitively that the data was not exposed in any way, then you must notify across the entire range of the data that was on or accessible by that system. Stated simply, if you cannot prove that the sensitive data was not exposed, then you must notify everyone that their data may have been exposed. This makes it absolutely clear that incident response is no longer an IT process: It is an overall business process involving legal counsel, human resources, public relations and communications, and even executive management.

Documentation

The key to any examination or analysis you perform will be your documentation. Documentation must be kept in a manner that will allow you, or someone else, to return to the materials at a later date (e.g., six months, one year, or longer) and understand or even verify the findings of the examination. This means that your documentation should be clear and concise, and it should be detailed enough to provide a clear indication of what you did, what you found, and how you interpreted your findings.

Documentation can be kept in any means that is readily available. There is no need to locate or purchase a special application that saves your documentation in a proprietary format. In fact, you probably don’t want to do this because you may not be using the application in a year, or someone who needs to review your documentation may not have that application. Something as simple as a text document would suffice, but having access to formatting capabilities in a word processor such as Microsoft Word might be more desirable. For example, with a word processor, you can embed links and images into the document, including such things as hard drive pin-out diagrams, screen captures, and even links to laptop disassembly guides (very useful when provided a laptop that you need to disassemble to gain access to the hard drive). In addition, many word processor document formats (e.g., MS Word and Adobe PDF) can be viewed and accessed from a number of platforms, so the format is somewhat ubiquitous. Even on Linux (and Windows) systems, OpenOffice (www.openoffice.org/) provides access to MS Word format documents and is freely available. Another very useful thing to keep in mind about your documentation format is your reporting format. Using the same word processor for both allows you to keep your case notes and then, when you’re ready, cut and paste items directly into your report. After all, when reporting, you may not need the level of detail found in your case notes, but that information may be easily transferred to your report and modified appropriately.

So, what should you document? As a consultant, one of the items I need to keep track of is hours that will be billed to a customer for the work performed during an examination. In some instances, I may be the senior analyst on an engagement and will track not only the hours of other analysts but also tasks provided to them and their responses, findings, and input. This can be extremely simple, using a table format, and at the same time very effective and easy to understand. Recording this information in my case notes, along with my analysis, allows me to show how the time was spent should I need to justify this information at a later date. At the same time, that same information is entered into the billing application directly from my case notes—there should be no discrepancy between the two. This allows me to minimize mistakes, particularly in this extremely important area of an examination.

Another item I tend to track in my documentation is the data that I have access to in order to examine and also the media that this data is on—that is, USB external hard drives, internal hard drives, CDs or DVDs, and so on. I track this information according to an item’s serial number so that I can easily refer back to the appropriate item throughout the course of the examination.

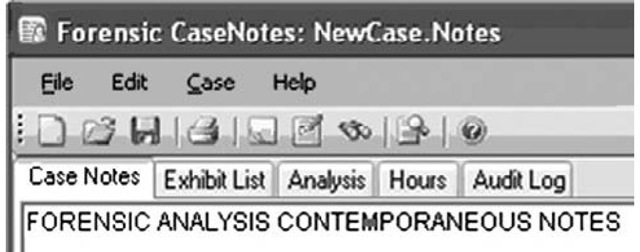

A useful tool for tracking my case notes is the Forensic CaseNotes application from QCC Information Security (www.qccis.com/?section=casenotes). Figure 8.1 illustrates the tabs I have set up to capture all of the previously discussed information.

Figure 8.1 QCCIS Forensic CaseNotes tabs

Regardless of the application you choose to use for maintaining your case notes, they should be accessible and concise, and they should clearly illustrate your analysis activities and results, to the point where those activities can be verified and validated, if necessary.

Tools & Traps …

Supporting Your Analysis

One thing I like to include in my case notes during an examination is any documentation that supports my line of reasoning. There are times when I may add notes based on testing a theory, but I also have an academic background, and one of the things I learned while writing my master’s thesis was that I had to support what I was saying in the thesis. It’s a simple matter to make a statement because you know something, but including similar statements made by others adds credibility to your analysis. An excellent source for this kind of supporting documentation when analyzing Windows systems is the Microsoft Knowledge Base. Many times searching either the Microsoft Knowledge Base itself, or even Google, will provide you with links to valuable information that can answer your questions and support your analysis. In other instances, you may get to the Microsoft Knowledge Base articles by way of another site, such as Eventid.net.

One of the Knowledge Base articles I use quite often for this purpose is http://support.microsoft.com/?kbid=299648, titled "Description of NTFS date and time stamps for files and folders." This article describes how file MAC times are affected by copy and move operations between and within file systems (specifically, FAT and NTFS). Other Knowledge Base articles describe the MS Internet Information Server (IIS) Web server status codes visible in the Web server logs, how files are stored in the Recycle Bin on Windows XP and 2003, and so on. There are a number of Knowledge Base articles that can provide support and credibility to your analysis, and can be easily referenced in your case notes as well as your reports.

Goals

A long time ago, I was discussing an examination performed by another analyst from another organization with a member of the security staff in our company. The consultant analyst had located the SubSeven Trojan installed within the acquired image, which was part of the contracted services (i.e., to determine if malware was installed on the system); however, as my colleague pointed out, the consultant did not identify the hidden DOS partition. Why? Because that wasn’t the goal of the examination. When I spoke with the consultant, she stated that she did indeed notice the hidden partition when preparing to examine the image, and she even noted it in her examination notes. However, it was not one of the questions that needed to be answered with respect to the contract, was not pertinent to the examination, and therefore was not presented in the final report.

This is very important for all examiners to remember, regardless of whether you’re a consultant or working for law enforcement. It is possible to go down a never-ending rabbit hole of analysis, looking for "suspicious activity" and never actually finishing, if you don’t have (and adhere to) clearly defined goals for your examination.

Knowing what you should be or need to be looking for also gives you a starting point in your analysis. Is the issue one of a malware infection, or an intrusion? Are the goals of the examination to determine fraud or violations of acceptable use policies by a user? Understanding what you should be looking for helps determine where to start looking.

A friend of mine once told me about a report that he’d reviewed with respect to a malware incident. After collecting data and completing the examination, the examiner wrote up a report, including a thorough dissertation on the capabilities of the malware that went on for about two dozen pages. At the end of the report, my friend said that he had to ask the examiner whether this malware was actually found within the acquired system. After all, that’s the question that the customer was paying them to answer, and in a report that spanned almost three dozen pages, there was no clear statement as to whether the malware had been found within the acquired image or not.

Digital forensic analysis can be an expensive proposition, and goals need to be clearly defined at the starting point. An analyst can spend a great deal of time examining an acquired image for malicious activity, and a team of responders can spend even longer combing through a multitude of systems within an infrastructure if their only guidance is to "find all of the bad stuff." Clearly defined goals help focus the analysis approach, guide the development of a response or analysis plan, and define the endpoint of the examination. Analysis goals need to be developed, understood, and clearly documented.

Checklists

A great way to get started with analysis is with checklists. Checklists outline those things that we need to do, and a good checklist will often contain more items than we need, as it is intended to be comprehensive, and require us to justify and validate, through a logical thought process, our reasons for skipping items on the checklist, or not performing certain steps.

Checklists should be simple and straightforward. Your checklist can be something you do for every examination, such as mount the image as a read-only drive letter on your analysis system and scan the file system with antivirus and antispyware applications. You may also include booting the image and scanning the "live" system with rootkit detection tools. You may include a search for credit card numbers, Social Security numbers, or other sensitive data as part of your checklist, or searches for e-mail (Web-based and otherwise) and chat logs based on the type of examination you’re conducting.

One example of a checklist would be to document information about each image you’re analyzing. For example, you may want to document the system name, last shutdown time, and other basic information extracted from the image that may be pertinent to your examination. Some settings, such as whether updating of last access times was disabled, or if the system was set to bypass the Recycle Bin when files are deleted, or if the pagefile was set to be cleared at shutdown, might have a significant impact on the rest of your analysis.

Another example of a checklist might include steps you would follow if malware was suspected to be on the system. This might be as simple as mounting the image with SmartMount and scanning the file system with a single antivirus application, or it might be more comprehensive, including several antivirus and antispyware applications (with their versions documented) as well as a number of other steps included in order to be as thorough as possible. This might also include booting the image with LiveView and performing scans for rootkits. Generally, when I perform antivirus scans of an acquired image, the first thing I do is look within the image to determine if there was already an antivirus product installed, and if so, the application’s vendor and version. From there, I can review the application logs to see if (or when) malware may have been detected, and if so, what actions were taken by the application (quarantined, attempted to quarantine but failed, etc.). Also, I usually check the Event Logs to determine if there were any notifications posted there, such as malware being detected or the antivirus application being abnormally stopped (a well-documented tactic of some malware). There’s also the mrt.log file, which will provide me with some indication of protection mechanisms put in place on the system during Windows Updates.

However concise or expansive the checklist, it should include enough documentation to allow another analyst to replicate and verify your steps, should the need arise.

Checklists are not intended to be a step-by-step, check-the-box way of performing analysis. Checklists should be considered the beginning of an examination, a way of ensuring that specific tasks are completed (or at least documented as to why they weren’t completed), rather than being considered the entire examination. What checklists allow you to do is ensure that you’ve covered all or most of your bases, and allow you to replicate those steps that need to be replicated across every examination, without having to worry about forgetting a step. Like your analysis applications and utilities, checklists are tools that you can use to your advantage. Checklists are a process, and by having a process, you also have something you can improve upon (conversely, if you don’t remember what you did on your last examination, how can you improve?).

A sample checklist titled Incident Analysis Checklist.doc can be found on the media accompanying this topic. The sample checklist is simply a Word document that contains some of the same fields identified by the tabs visible in Figure 8.1. The checklist provides some basic fields for identifying the incident and examiner, as well as the start and end dates for which the analysis occurs. There are also tables for identifying the items to be analyzed (acquired images, network traffic captures, log files, etc.), as well as checkboxes that identify some basic goals and analysis steps that may be used. This section can include items that are part of your organization’s standard in-processing procedures, such as identification of an acquired image’s operating system, any user accounts on the system, extraction of data from the Registry, scans for malware, and so on. While the checklist itself is only one page, additional pages may be added as the examiner conducts his analysis, and results may be recorded directly into the checklist. Again, this checklist serves only as a sample and an example, and may be expanded or modified to meet your specific needs.

Tools & Traps…

Which Version of Windows?

Often when working on live incident response, or even examining an acquired image, I will want to know which version of Windows I am working with. From what I see in public forums, often this isn’t a concern for responders or examiners, but having conducted or assisted with a fair number of live response and forensic analysis engagements, what some people consider to be subtle nuances between the versions of Windows can actually be pretty significant differences. From a forensic analysis perspective, there are artifacts available on some versions of Windows that are not available on others; one notable example is that System Restore Points are found on Windows XP but not on Windows 2000 or Windows 2003.

Perhaps the most well-known method for determining which operating system (OS) version of Windows you’re working with is to check the contents of several Registry keys. Under the Microsoft\Windows NT\CurrentVersion key within the Software hive, you will find values such as CSDVersion, BuildLab, and ProductID that can be used to determine the Windows version. Microsoft Knowledge Base article 189249 (http://support.microsoft.com/kb/189249) provides information for programmatically determining the version for a live system, and it also provides indications for how to do the same during an examination.

Another way to determine the OS version is to locate the ntoskrnl.exe file in the system32 directory and parse the file version information from that executable file. This will also work with files such as cmd.exe and winver.exe (note that on a live system, running winver.exe at the command prompt opens an About Windows dialog box that displays some basic information about the OS, including the version and amount of physical memory).

Finally, if you’re working with a version of Windows XP and need to determine whether it is the Home or Professional edition, locate the prodspec.ini in the system32 directory and look for (you may have to scroll down) the "[Product Specification]" entry. On my system, I see "Product=Windows XP Professional".
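Because prodspec.ini is an INI-style file, the edition check lends itself to a short script. The following Python sketch uses the standard configparser module; the function name is hypothetical, and the sample text is a cut-down stand-in in which only the section name and the Product line come from the description above. It assumes the file has already been extracted from the image and read into a string.

```python
import configparser

def xp_edition(prodspec_text):
    """Return the Product value from prodspec.ini content, or None if absent."""
    # strict=False tolerates duplicate sections or keys rather than raising
    parser = configparser.ConfigParser(interpolation=None, strict=False)
    parser.read_string(prodspec_text)
    return parser.get("Product Specification", "Product", fallback=None)

# Cut-down, hypothetical file content; only the section name and
# Product line are taken from the text above
sample_prodspec = """\
; sample comment line
[Product Specification]
Product=Windows XP Professional
"""

print(xp_edition(sample_prodspec))
```

Returning None rather than raising when the section is missing makes it easier to record "not found" in your case notes and move on to the Registry-based checks.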

When it comes down to it, knowing the version of Windows that you’re examining can guide you in what artifacts to look for, where to look, and also what should be there that may not be. All of these can have a significant impact on your findings.

Now What?

Now that you have your documentation started, you understand your goals, and you have a checklist, then what? What happens next? Well, this is where you start your actual analysis. Let’s say that you’re analyzing an image acquired from a Windows system, and after documenting the acquisition, the operating system, and any user accounts on the system, you have several other analysis activities that you need to perform as part of your standard case processing, such as a keyword search and a malware scan. If the keyword list is relatively short, include the list of keywords directly in your analysis documentation and run the search. Or, identify why the search was not run, if you opted not to do so. Similarly, identify the application (or applications) used in your malware scan, or clearly state why the scan was not conducted.
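For a short keyword list, the search itself can be scripted so that the exact keywords, encodings, and hit offsets go straight into your notes. The Python sketch below is a simplified illustration rather than a replacement for a forensic tool's indexed search: the function name is hypothetical, it assumes ASCII-only keywords, it is case sensitive, and it looks only for the ASCII and UTF-16LE forms of each keyword in a raw (dd-style) image file.

```python
import os
import tempfile

def search_image(path, keywords, chunk_size=1 << 20):
    """Return sorted (offset, keyword, encoding) hits in a raw image file."""
    patterns = {}
    for kw in keywords:
        if not kw:
            continue
        patterns[kw.encode("ascii")] = (kw, "ascii")
        patterns[kw.encode("utf-16-le")] = (kw, "utf-16-le")
    if not patterns:
        return []
    # Carry enough bytes between chunks to catch hits that span a boundary
    overlap = max(len(p) for p in patterns) - 1
    hits = set()  # a set removes hits seen twice in the overlap region
    tail = b""
    offset = 0  # number of bytes read from the file so far
    with open(path, "rb") as fh:
        while chunk := fh.read(chunk_size):
            buf = tail + chunk
            base = offset - len(tail)  # file offset of buf[0]
            for pat, (kw, enc) in patterns.items():
                idx = buf.find(pat)
                while idx != -1:
                    hits.add((base + idx, kw, enc))
                    idx = buf.find(pat, idx + 1)
            offset += len(chunk)
            tail = buf[-overlap:]
    return sorted(hits)

# Demonstration against a small throwaway file standing in for an image
data = b"x" * 10 + b"evil.example" + b"\x00" * 5 + "evil.example".encode("utf-16-le")
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(data)
demo_hits = search_image(tmp.name, ["evil.example"], chunk_size=8)
os.remove(tmp.name)
print(demo_hits)
```

The deliberately tiny chunk size in the demonstration forces hits to straddle chunk boundaries; in practice the default of one megabyte (an arbitrary choice) is more sensible.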

Searching Google for "digital forensic analysis checklists" identifies a wide range of approaches used for these checklists. Some include file signature analysis, identification of graphics images (including movies and still images), parsing of Web browser activity, parsing of Recycle Bin and Windows shortcut (.lnk) files, and so on. All of these may be important to your examination or simply part of your organization’s standard case processing procedures. Either way, your analysis activities should be thoroughly and concisely documented, particularly if you’re going "off script" and pursuing a line of analysis that is outside the norm (some might call this a "hunch"). Documenting your hunches will expand your knowledge as well as your ability to go back at a later date and see what it was you did so that you can replicate those activities, as necessary.

Extending Timeline Analysis

As discussed in the next topic, the timeline information that an analyst obtains as a result of using fls and mactime.pl is isolated to just the files and directories within the acquired image and does not take into consideration other events or artifacts within the acquired image that also contain time-stamped information. In part to address this issue, Michael Cloppert wrote a tool called Ex-Tip, which is available on Sourceforge (http://sourceforge.net/projects/ex-tip/). In addition, Michael’s paper on the development and usage of Ex-Tip is available from SANS (https://www2.sans.org/reading_room/whitepapers/forensics/32767.php). Ex-Tip takes additional sources of time-stamped data into consideration, such as Registry keys and the contents of antivirus application scanning logs. It parses and normalizes them into a common time format (Unix epoch time) and presents them in a slightly different, albeit still text-based, format.
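To illustrate what normalizing to Unix epoch time involves, here is a minimal sketch. It is not Ex-Tip's code. It assumes the input is a 64-bit Windows FILETIME value (a count of 100-nanosecond intervals since January 1, 1601 UTC), which is how Registry key LastWrite times are stored. The function names are hypothetical.

```python
from datetime import datetime, timezone

# Seconds between the FILETIME epoch (1601-01-01) and the Unix epoch (1970-01-01).
EPOCH_DIFF_SECONDS = 11644473600


def filetime_to_epoch(filetime):
    """Convert a 64-bit FILETIME value to whole seconds since the Unix epoch."""
    return filetime // 10_000_000 - EPOCH_DIFF_SECONDS


def epoch_to_utc(epoch):
    """Render epoch seconds as a UTC string for a text-based timeline."""
    return datetime.fromtimestamp(epoch, tz=timezone.utc).strftime("%Y-%m-%d %H:%M:%S")


# Synthetic value: the FILETIME equivalent of the Unix epoch itself.
print(epoch_to_utc(filetime_to_epoch(116444736000000000)))
```

Once every source is expressed in the same unit, events from the file system, the Registry, and log files can be sorted together without regard to how each source originally stored its time stamps.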

Tools and utilities such as RegRipper can provide additional functionality for extracting time-stamped values from Registry hive files. Whereas the module provided with Ex-Tip extracts all keys and their LastWrite times from a hive file, RegRipper takes a more surgical approach and can return only the keys of interest. This provides a modicum of data reduction (so as not to overwhelm the analyst with data points) and adds context to the data that is retrieved. For example, as discussed in the next topic, the RecentDocs key and its subkeys all contain MRU lists, and the most recently accessed file is relatively easily identified in the MRUList (or MRUListEx) value. Therefore, RegRipper plugins can provide not only the LastWrite time from the key but also the name of the most recently accessed file. Furthermore, Registry values such as those within the UserAssist keys contain time-stamped data that can be extracted by the appropriate plugin and then incorporated into a body file in the appropriate format.
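The way the most recently accessed file is identified from MRUListEx can be sketched as follows. This is not a RegRipper plugin; it is a minimal illustration that assumes the value data has already been extracted from the hive by some other means. It also assumes that MRUListEx is a sequence of 4-byte little-endian integers ending with 0xFFFFFFFF, and that each numbered RecentDocs value begins with a null-terminated UTF-16LE file name. The function names and sample data are hypothetical.

```python
import struct


def parse_mrulistex(data):
    """Return the value names (as integers) in most-recent-first order."""
    order = []
    usable = len(data) - len(data) % 4  # ignore any trailing partial entry
    for (index,) in struct.iter_unpack("<I", data[:usable]):
        if index == 0xFFFFFFFF:  # terminator
            break
        order.append(index)
    return order


def leading_name(value_data):
    """Extract the null-terminated UTF-16LE string at the start of the data."""
    for i in range(0, len(value_data) - 1, 2):
        if value_data[i:i + 2] == b"\x00\x00":
            return value_data[:i].decode("utf-16-le", errors="replace")
    usable = len(value_data) - len(value_data) % 2
    return value_data[:usable].decode("utf-16-le", errors="replace")


# Synthetic data standing in for values read from a RecentDocs key.
mrulistex = struct.pack("<4I", 2, 0, 1, 0xFFFFFFFF)
values = {
    0: "notes.txt".encode("utf-16-le") + b"\x00\x00" + b"\x01\x02",
    1: "budget.xls".encode("utf-16-le") + b"\x00\x00",
    2: "report.doc".encode("utf-16-le") + b"\x00\x00" + b"\x03\x04",
}

order = parse_mrulistex(mrulistex)
print(order, leading_name(values[order[0]]))
```

Pairing the first entry in the list with the key's LastWrite time is what lets a single timeline line say which file was accessed, rather than only that the key changed.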

Other files can be parsed for time-stamped data as well. For example, as discussed in the next topic, Windows Event Logs contain time stamps for when each event record was generated and written. Rp.log files from within Windows XP System Restore Points contain information about not only when the restore point was created but also the reason (system checkpoint, installation of a driver, etc.) for the restore point creation. This information adds context to the available data and can then be correlated to information derived from the output of fls.exe. There are also antivirus log files and a number of other files available that contain information that can (and perhaps should) be included in timeline analysis. An additional source of information can include events that are manually entered, such as the creation of crash dump files, and so on.
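Correlating these sources comes down to putting every event into one structure and sorting by time. The sketch below assumes each source has already been parsed into epoch seconds. The `Event` type, the source labels, the pipe-delimited output, and all sample events are hypothetical and do not represent the output format of fls, mactime.pl, or Ex-Tip.

```python
from datetime import datetime, timezone
from typing import NamedTuple


class Event(NamedTuple):
    epoch: int        # seconds since the Unix epoch, UTC
    source: str       # e.g., FILE, REG, EVT, RP, MANUAL
    description: str


def build_timeline(*sources):
    """Merge events from any number of sources into one time-ordered list."""
    merged = [event for source in sources for event in source]
    return sorted(merged, key=lambda event: event.epoch)


def render(events):
    """Produce simple pipe-delimited text lines, one per event."""
    lines = []
    for event in events:
        stamp = datetime.fromtimestamp(event.epoch, tz=timezone.utc)
        lines.append(f"{stamp:%Y-%m-%d %H:%M:%S}|{event.source}|{event.description}")
    return lines


# Synthetic events for illustration only.
file_events = [Event(1230768300, "FILE", "example.dll created")]
restore_points = [Event(1230768000, "RP", "restore point created: driver install")]
manual = [Event(1230768600, "MANUAL", "crash dump file created")]

for line in render(build_timeline(file_events, restore_points, manual)):
    print(line)
```

Keeping the source label on every line preserves the context that each data source contributes, so that a restore point entry next to a file creation can be read as related events rather than as isolated data points.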

As discussed in Michael’s paper, a number of facilities exist for parsing the available time-stamped data (once that data has undergone some form of normalization to a common data format, data reduction, etc.) and presenting that data in an understandable visual format. For example, Zeitline (http://projects.cerias.purdue.edu/forensics/timeline.php) and EasyTimeline (http://en.wikipedia.org/wiki/Wikipedia:EasyTimeline) are two such options. Zeitline is based on Java Swing, and it has apparently not been updated or seen any significant activity since 2006. EasyTimeline presents a graphical approach to representing time-stamped data. Another such tool that presents a great deal of potential is Simile Timeline, originally available from MIT and now available as a Google widget (http://code.google.com/p/simile-widgets/). Simile Timeline provides the capability not only for representing both point (an event that occurred at a single point in time, such as file last access date) and duration (an event that occurred over a span of time, such as an antivirus scan) events but also for presenting time-based data on separate, adjacent bands so that the information can be distinctly separate but viewed in correlation with other data.

Using either a text-based output format or one of the graphical output formats mentioned previously may also require changing how analysts represent events, such as those derived from the output of the fls.exe utility.

Summary

Most analysts will find that they won’t be using one single area of an acquired image, such as the file system, to piece together their exams and their cases. They’ll end up using data from the Registry, files found in the file system, and even memory dumps and network packet captures to build a complete picture of their cases. After all, why hinge your conclusions on one piece of data when you can seal everything up airtight with multiple pieces of data to support your findings? Regardless of how much data you use, however, the key to everything is going to be your documentation.

Solutions Fast Track

Case Studies

- Case studies have always been a great way of illustrating how seemingly disparate bits of information and analysis techniques can be brought together into a cohesive framework to obtain far greater insight into analysis. Many people like to see what others have done, and in many cases this will get readers to consider what they’ve seen, try their own techniques, and even extend the technique.

Getting Started

- Key items to keep in mind throughout your analysis are to stick to your analysis goals (getting off track or off focus can easily stall or delay your analysis) and to concisely and completely document your analysis.

Extending Timeline Analysis

- Representing available data in a timeline format can be an extremely powerful tool for an analyst.

- Multiple sources of data within an acquired image can contain valuable time-based information, including the contents of log files, Registry keys and values, and the contents of the Recycle Bin.

Frequently Asked Questions

Q: I have an examination in which I need to determine when a user was logged into the system; however, my initial work shows that auditing of logon events was not enabled, and I do not see any indications of those events in the Security Event Logs. How do I determine when the user was logged in?

A: There are several sources of information within the Windows operating system to determine when a user account was used to log into a system. Parsing the SAM hive file will give you the last logon date for the user, and that can be correlated to the last modification time on the NTUSER.DAT file within the user’s profile to get the last time the user logged off of the system. Parsing the user’s NTUSER.DAT file will provide a considerable amount of information about the user’s activity, particularly from the UserAssist and RecentDocs keys. Also, the LastWrite times on keys used to maintain MRU lists can be very helpful. Because the NTUSER.DAT file maintains a great deal of information about the user’s interaction with the Windows shell, a considerable amount of time-based information can be found in that file. In addition, parsing the same information from corresponding hive files in the System Restore Points in Windows XP will show you a historical progression of the data as well. This analysis technique uses a combination of file system and Registry analysis to develop a more comprehensive picture of the user’s activity on the system.
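As one illustration of the time-based information held in NTUSER.DAT, the sketch below decodes a single UserAssist entry. It rests on stated assumptions rather than on anything shown above: that the value name is ROT13-encoded, and that on Windows XP the value data is 16 bytes holding a session identifier, a run count, and a 64-bit FILETIME. The function name and sample entry are hypothetical, and the raw value is assumed to have been extracted from the hive already.

```python
import codecs
import struct

EPOCH_DIFF_SECONDS = 11644473600  # 1601-01-01 to 1970-01-01, in seconds


def decode_userassist(value_name, value_data):
    """Return (decoded name, raw count, last-run epoch) for one entry.

    Assumes the 16-byte Windows XP layout; other sizes yield name only.
    """
    name = codecs.decode(value_name, "rot_13")
    if len(value_data) != 16:
        return name, None, None
    _session, count, filetime = struct.unpack("<IIQ", value_data)
    epoch = filetime // 10_000_000 - EPOCH_DIFF_SECONDS if filetime else None
    return name, count, epoch


# Synthetic entry built for illustration.
sample_name = codecs.encode("UEME_RUNPATH:C:\\WINDOWS\\notepad.exe", "rot_13")
sample_data = struct.pack("<IIQ", 1, 6, (1230768000 + EPOCH_DIFF_SECONDS) * 10_000_000)

print(decode_userassist(sample_name, sample_data))
```

Time stamps recovered this way show when the account was actively in use, which helps bound logon sessions when the Security Event Log contains no logon events.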

Q: During incident response activities, I collected network traffic captures as well as volatile data from the system I thought was infected. How do I correlate these two pieces of data and tie them together?

A: Network traffic captures contain two important pieces of information that can be used to tie that data to a particular system—the source or destination IP address and port. The IP address will allow you to tie the traffic to a particular system (the MAC address in the Ethernet frames can also be used).