Introduction

Throughout the book so far, we’ve covered a great deal of very technical information, but in each case that information has been specific to one particular area: Windows memory, the Registry, files, and so on. However, most of the incident response that a responder is required to do, and most of the computer forensic analysis that an examiner is required to do, involves more than one of these areas. For example, suspicious network traffic or a suspicious process may lead to a file on the system, which in turn may lead to the malware’s persistence mechanism, which may be a Registry key. Understanding the relationships among these components, and recognizing when you need to move from one to another, may very well mean the difference between understanding how an incident occurred and never finding out.

Forensic examinations should not rely on analysis of the file system alone, particularly when the analyst is examining an image acquired from a Windows system. There is simply too much information available from the Registry, as well as from within various other files such as Windows Event Logs, for an analyst to rely solely on the most basic examination procedures and techniques.

In this chapter, I present scenarios and past examinations (let’s call them "case studies") that have used several of the techniques presented thus far in the book to achieve their goals. In each case, I’ll try to be as technically complete as the situation allows, understanding that many specific details need to be either sanitized or omitted. Some case studies may draw on a number of incidents or examinations, but the overall point remains the same: to demonstrate how information from different chapters of this book can lead to, and be correlated with, other information to build as complete a picture of an incident as possible.

Case Studies

Case Study 1: The Document Trail

I was asked to perform an examination that involved multiple hard drives, each with a single primary user and each running the Windows XP SP2 operating system. The incident background was simply that fraudulent activity had been occurring against accounts maintained by the customer’s organization, and the customer’s own investigation had led them to suspect that the fraud was the result of the actions of a malicious employee.

The first step was to determine what I was looking for; to do that, I got in touch with the customer and walked through the particulars of their own investigation into the relevant activity. It appeared that, from their perspective, the issue centered on the accounts themselves, and in particular on the numbers used to track the accounts.

Getting information about these account numbers quickly led me to realize that searching for any number matching the structure of the account numbers (using a Perl regular expression) would be difficult and would likely produce a great many false positives. I needed some way to reduce the amount of data I was going to have to review.

The customer agreed to send me a list of the account numbers they had determined were affected by the fraud. Using those numbers as a keyword list, I ran a search across each of the acquired images and found hits in only one image, within the profile of one specific user. In fact, the hits were concentrated primarily in one file, located in the directory that (according to an article I located in the Microsoft Knowledge Base) Outlook uses to store files opened from e-mail attachments. My next step was to extract a copy of that file (a spreadsheet) from the image for analysis. Opening the spreadsheet on my analysis system, I could see its content, but I had no idea what it represented beyond the fact that it contained account information and, given the search hits, that the accounts correlated to those against which the suspicious activity had been committed.
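
The keyword search described above can be sketched as follows. This is a minimal illustration, not the tool used in the examination; it assumes a raw (dd-style) image file and ASCII keywords, and it also searches for each keyword in UTF-16LE form, on the assumption that some Windows applications store text that way. The function name keyword_hits is hypothetical.

```python
from typing import Dict, Iterable, List, Tuple


def keyword_hits(path: str, keywords: Iterable[str],
                 chunk_size: int = 1 << 20) -> Dict[str, List[Tuple[int, str]]]:
    """Return {keyword: [(absolute_offset, encoding), ...]} for a raw image."""
    # Search each keyword both as ASCII and as UTF-16LE ("wide") text.
    patterns = {}
    for kw in keywords:
        patterns[(kw, "ascii")] = kw.encode("ascii")
        patterns[(kw, "utf-16-le")] = kw.encode("utf-16-le")
    # Carry this many bytes from the end of each buffer into the next one,
    # so a hit straddling two reads is still found.
    keep = max(len(p) for p in patterns.values()) - 1
    hits: Dict[str, List[Tuple[int, str]]] = {}
    tail = b""
    buf_start = 0  # absolute image offset of buf[0]
    with open(path, "rb") as f:
        while chunk := f.read(chunk_size):
            buf = tail + chunk
            for (kw, enc), pat in patterns.items():
                i = buf.find(pat)
                while i != -1:
                    # A hit lying entirely inside the carried-over tail was
                    # already reported while scanning the previous buffer.
                    if i + len(pat) > len(tail):
                        hits.setdefault(kw, []).append((buf_start + i, enc))
                    i = buf.find(pat, i + 1)
            tail = buf[-keep:] if keep else b""
            buf_start += len(buf) - len(tail)
    return hits
```

Searching for literal values from a known list, rather than for anything matching the account-number structure, is what keeps false positives low; the offsets can then be mapped back to files with whatever file system tool you already use.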

Because I had already extracted the Registry hive files from their locations within the image, I parsed information from the user’s RecentDocs Registry key, as well as from the key that listed the Excel spreadsheets the user had opened. I found a filename reference to the spreadsheet in the Outlook temporary storage directory (the user’s Outlook.pst file was not located on the system), as well as references to other spreadsheets, one of which appeared to be located on a file server, possibly in the user’s document directory (many organizations have their employees store documents on a file server so that they are included in a regular backup process). I wasn’t able to locate the other referenced spreadsheets that appeared to be on the user’s system, and none of the search hits were found in unallocated space, indicating that files containing the search terms hadn’t been deleted recently.
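
To illustrate how the RecentDocs entries can be put in order, here is a minimal sketch. It assumes the key’s values have already been exported by a hive parser of your choice into a Python dict mapping value names to raw bytes. It relies on the MRUListEx value being a list of little-endian 32-bit value indices terminated by 0xFFFFFFFF, and on each numbered value beginning with a NUL-terminated UTF-16LE file name (followed by shell item data that this sketch ignores). The function names are hypothetical.

```python
import struct
from typing import Dict, List


def parse_mrulistex(data: bytes) -> List[int]:
    """Return value-name indices in most-recently-used order."""
    order = []
    usable = data[: len(data) - len(data) % 4]  # iter_unpack needs whole DWORDs
    for (idx,) in struct.iter_unpack("<I", usable):
        if idx == 0xFFFFFFFF:  # end-of-list marker
            break
        order.append(idx)
    return order


def recentdocs_name(data: bytes) -> str:
    """Extract the leading NUL-terminated UTF-16LE file name from a value."""
    for i in range(0, len(data) - 1, 2):  # UTF-16 code units are 2-byte aligned
        if data[i:i + 2] == b"\x00\x00":
            return data[:i].decode("utf-16-le")
    return data.decode("utf-16-le", errors="replace")


def ordered_recentdocs(values: Dict[str, bytes]) -> List[str]:
    """File names from a RecentDocs (sub)key, most recent first."""
    order = parse_mrulistex(values["MRUListEx"])
    return [recentdocs_name(values[str(i)]) for i in order if str(i) in values]
```

Only the first entry in the MRU order can be tied to the key’s LastWrite time; the remaining entries establish ordering, not exact times.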

My next step was to extract metadata from the Excel spreadsheet. Microsoft Office documents (Word documents, Excel spreadsheets, and even PowerPoint presentations) use a compound storage ("file system within a file") structure for storing data. As such, a great deal of metadata can be (and is) stored within the document structure and can be extracted for analysis. Using the oledmp.pl Perl script found on the media that accompanies this book, I was able to extract the metadata and see that the user in question had opened, edited, and printed the spreadsheet. These metadata fields included the dates and times at which these actions took place, and those dates and times correlated with file system and Registry time stamps as well.
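
A first step in this kind of metadata work is confirming that a file really is a compound ("OLE") document. The sketch below parses only the fixed 512-byte header, using the field offsets published in Microsoft’s Compound File Binary Format (MS-CFB) specification. It does not walk the directory to reach the \x05SummaryInformation stream where the author, print, and save times live; a full parser (oledmp.pl, or a third-party library such as olefile) is needed for that. Note that Office 2007 and later formats (.xlsx, .docx) are ZIP containers and will fail this check.

```python
import struct

OLE_MAGIC = bytes.fromhex("D0CF11E0A1B11AE1")


def ole_header(data: bytes) -> dict:
    """Parse the fixed compound file header (field offsets per MS-CFB)."""
    if len(data) < 512 or data[:8] != OLE_MAGIC:
        raise ValueError("not an OLE compound file")
    # 0x18..0x21: minor version, major version, byte order, sector shifts
    minor, major, byte_order, sector_shift, mini_shift = struct.unpack_from("<5H", data, 0x18)
    if byte_order != 0xFFFE:
        raise ValueError("unexpected byte-order mark")
    # 0x28..0x3B: directory/FAT sector counts, first directory sector,
    # transaction signature, mini stream cutoff size
    num_dir, num_fat, first_dir, _txn, mini_cutoff = struct.unpack_from("<5I", data, 0x28)
    return {
        "major_version": major,
        "minor_version": minor,
        "sector_size": 1 << sector_shift,     # 512 for version 3 files
        "mini_sector_size": 1 << mini_shift,  # normally 64
        "num_fat_sectors": num_fat,
        "first_dir_sector": first_dir,
        "mini_stream_cutoff": mini_cutoff,    # normally 4096
    }
```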

Once I had pulled all of this information together into a comprehensible timeline, I provided it in a report to the customer. Like many analysts, I don’t often have visibility into how an engagement progresses beyond my final report, and this was yet another example of that situation. However, this examination did illustrate how multiple analysis techniques can be combined to drill down and extract a great deal of information about an incident. In this case, a keyword search provided significant data reduction and led to a specific document, whose location within the file system revealed its likely source (an Outlook e-mail attachment). Registry analysis then showed that the user had accessed the document, as well as other documents with similar titles, and that at least one of those documents was located on a file server. Finally, analysis of metadata extracted from the document revealed that whoever had access to the user account had modified and printed the file, and when those actions occurred. This gave the customer a great deal of information to assist them in identifying the source of the fraudulent activity.
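
Pulling events from different sources into one timeline mostly comes down to normalizing time stamps and sorting. The sketch below assumes each source has already been reduced to (time, source, description) events; the Event type and build_timeline name are hypothetical. Registry LastWrite times and Office metadata times are stored as Windows FILETIME values (100-nanosecond intervals since January 1, 1601 UTC), so a conversion helper is included.

```python
from datetime import datetime, timedelta, timezone
from typing import List, NamedTuple

FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)


def filetime_to_dt(ft: int) -> datetime:
    """Convert a 64-bit FILETIME (100 ns ticks since 1601) to UTC datetime."""
    return FILETIME_EPOCH + timedelta(microseconds=ft // 10)


class Event(NamedTuple):
    when: datetime    # always timezone-aware UTC
    source: str       # e.g., "filesystem", "registry", "metadata"
    description: str


def build_timeline(*sources: List[Event]) -> List[Event]:
    """Merge events from several sources into one chronological list."""
    merged = [event for source in sources for event in source]
    return sorted(merged, key=lambda e: e.when)
```

Keeping every time stamp in UTC until the final report avoids the most common correlation error, which is mixing local and UTC times from different sources.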

Case Study 2: Intrusion

This case study involved an intrusion into a corporate infrastructure that started with the compromise of an employee’s home system. This type of incident is probably more prevalent than one would think. Home user systems, in addition to systems used by regular users (students using laptops, corporate employee desktop and laptop systems, etc.), are very often subject to compromise because they are seen as easy targets; there are a lot of them out there (i.e., a "target-rich environment") and, for the most part, they are poorly managed. Many home users don’t realize what data of value is actually on their systems. Home users do online banking and file their tax returns each year from those computer systems. Gamers access online games, and believe it or not, there is actually an economy for selling online gaming characters. So, besides hard drive space, RAM, and processing power being added to a botnet, home computer systems can offer quite a bit of treasure to an intruder.

Tools & Traps…

The Value of a System

One of the things that few people seem able to accept or understand is the value that their system (a desktop they use at home, a laptop a student uses for schoolwork, and so on) can represent to a "bad guy." Several years ago, I was teaching a Windows 2000 incident response course at the University of Texas at Austin, and one of the young ladies in the class got this strange look on her face. I asked her to share her thoughts with the class, and she blurted out, "Why would anyone want my computer?!"

Think about what your computer, or any computer, can offer to someone. First, what do you use your computer for? Do you do your taxes each year on your computer? Do you do online banking or make online purchases with your computer? Simply loading a keystroke logger on your system will provide the intruder with all of that information. Besides getting access to your personal information, your computer offers resources to the intruder, such as a bot host that can be added to an overall botnet and rented out to others for spam or denial-of-service (DoS) attacks.

That being said, in this case, an intruder accessed an employee’s home system and installed a keystroke logger (this was later confirmed via separate analysis of the employee’s home system). From there, the intruder discovered that the employee logged into the corporate infrastructure via the Windows Remote Desktop Client, and because he had captured the employee’s keystrokes, he had the employee’s login username and password. From that point, it was a simple matter for him to fire up his own Remote Desktop Client, launch it against the right IP address, and provide his newly discovered credentials … and he was on the corporate network, appearing for all intents and purposes to be the employee.

It turned out that the intruder was easy to track. By accessing the infrastructure via the Remote Desktop Client, the intruder had shell-level access, meaning that his actions caused him to interact with the Windows Explorer shell just like a normal user sitting at the desktop. Due to this, many of the intruder’s actions were recorded via the Registry. Also, the intruder had a fairly high level of access due to the fact that the stolen credentials were for a user who managed user accounts. Even so, the intruder activated a dormant domain administrator account—one that had been set up but simply never used. This meant that each time the intruder accessed another system within the corporate infrastructure, a profile for the domain administrator account was created on that system. This made the intruder’s movements throughout the infrastructure fairly easy to track, at least initially (i.e., we did not want to make the mistake of assuming that this was all the intruder had done).

Working closely with the on-site IT staff, we created a script that would search all systems within the domain for indications of the user profile in question. We first identified systems on which the profile existed, which gave us an initial count of the systems the intruder had accessed. Acquiring each one, we then began the process of developing a timeline of activity, using the creation date of the profile directory as an indicator of when the intruder first accessed each system and the last modification time of the profile’s NTUSER.DAT Registry hive file as an indication of when the intruder last accessed the system. These time windows were later confirmed as we examined the contents of the UserAssist keys.
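
The profile sweep described above might look something like the following sketch. It is not the script used in the engagement. It assumes each system is reachable through a root path (for example, a hypothetical administrative share such as \\HOST01\C$, or a mounted image) and that profiles live under the Windows XP/2003 "Documents and Settings" directory. The profile directory’s creation time is best-effort: Python exposes st_birthtime on macOS/BSD and on Windows from Python 3.12; otherwise the code falls back to st_ctime, which is creation time on Windows but metadata-change time on Unix-like systems.

```python
from datetime import datetime, timezone
from pathlib import Path
from typing import Dict, Iterable


def profile_evidence(roots: Iterable[str], account: str,
                     profiles_dir: str = "Documents and Settings") -> Dict[str, dict]:
    """Report, per root, when the account's profile appeared and was last used."""
    results = {}
    for root in roots:
        profile = Path(root) / profiles_dir / account
        hive = profile / "NTUSER.DAT"
        if not hive.is_file():
            continue  # no profile for this account on this system
        pst = profile.stat()
        # st_ctime fallback is creation time only on Windows (see lead-in).
        created = getattr(pst, "st_birthtime", pst.st_ctime)
        results[root] = {
            "profile_created": datetime.fromtimestamp(created, timezone.utc),
            "hive_modified": datetime.fromtimestamp(hive.stat().st_mtime, timezone.utc),
        }
    return results
```

The first value approximates when the account first logged on to that system and the second when it was last used, which is the same pair of indicators described in the text.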

Tip::

This is an excellent example of an engagement in which, had the customer maintained Event Logs in a central log repository, a great deal of corroborating information would have been available. Although it wasn’t absolutely necessary (the creation date on the NTUSER.DAT files within the user profile gave us the date that the intruder first logged onto each system, and Registry artifacts gave us indications of the intruder’s periods of activity), had the audit configuration been set appropriately and the Event Log records collected and archived in a central location, we would have been able to narrow down a complete list of affected systems much more quickly.
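
If centrally collected Security Event Log records had been available, listing the systems an account logged on to would be a simple filtering job. This sketch assumes the records have already been exported into a hypothetical Record structure; it uses event IDs 528 (successful logon) and 540 (successful network logon), which apply to Windows 2000/XP/2003, and would need different IDs (such as 4624) for Vista and later.

```python
from datetime import datetime
from typing import Dict, Iterable, NamedTuple, Tuple

# Windows 2000/XP/2003 Security log: 528 = logon, 540 = network logon
LOGON_EVENT_IDS = {528, 540}


class Record(NamedTuple):
    event_id: int
    when: datetime
    computer: str
    user: str


def logon_windows(records: Iterable[Record],
                  account: str) -> Dict[str, Tuple[datetime, datetime]]:
    """Map each computer to the (first, last) logon time seen for `account`."""
    spans: Dict[str, Tuple[datetime, datetime]] = {}
    for r in records:
        if r.event_id in LOGON_EVENT_IDS and r.user.lower() == account.lower():
            first, last = spans.get(r.computer, (r.when, r.when))
            spans[r.computer] = (min(first, r.when), max(last, r.when))
    return spans
```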

Once we had mapped the intruder’s travels through the network, the next step was to determine what the intruder had done or tried to do on each system. Again, the fact that the intruder was accessing each system through Windows Explorer provided us with a great deal of very valuable information. This particular customer had already spent considerable time and effort mapping sensitive data within their infrastructure and had a list of where this sensitive data (as defined by state notification laws such as California’s SB-1386, as well as the Visa Payment Card Industry [PCI] Data Security Standard) existed. Again turning to Registry analysis, we focused on the user profile’s NTUSER.DAT file, checking the RecentDocs key, lists of recently accessed documents such as Excel spreadsheets and MS Word documents, and any other indications we could find. We were able to focus our efforts by checking the RecentDocs keys to see what file types had been accessed (i.e., .xls, .doc, .jpg, etc.) and then checking the most recently used (MRU) lists for the applications normally used to access those files. Interestingly, not many files had been accessed, perhaps due in part to what we found in the ACMru Registry key: the intruder had conducted searches by clicking Start | Search | For Files and Folders and had attempted to identify files with certain keywords. These searches had likely gone unnoticed by employees because some of the systems were housed in the data center; in any case, the information the intruder was searching for wasn’t something the organization actually maintained. However, it was clear from indications on a few systems that the intruder had looked for and found a spreadsheet containing passwords, and the appropriate steps were taken to address this compromised information.
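
The comparison between Registry MRU entries and the customer’s sensitive-data inventory can be sketched as below. Because RecentDocs stores only file names (not full paths), the sketch matches on file name, case-insensitively; a name match is therefore a candidate to verify, not proof of access. The function names are hypothetical.

```python
from collections import defaultdict
from pathlib import PureWindowsPath
from typing import Dict, Iterable, List


def group_by_extension(mru_entries: Iterable[str]) -> Dict[str, List[str]]:
    """Group MRU entries by file type, mirroring RecentDocs' per-extension subkeys."""
    groups: Dict[str, List[str]] = defaultdict(list)
    for entry in mru_entries:
        groups[PureWindowsPath(entry).suffix.lower()].append(entry)
    return dict(groups)


def sensitive_hits(mru_entries: Iterable[str],
                   sensitive_paths: Iterable[str]) -> List[str]:
    """MRU entries whose file name matches a file in the sensitive inventory."""
    inventory = {PureWindowsPath(p).name.lower() for p in sensitive_paths}
    return sorted(e for e in mru_entries
                  if PureWindowsPath(e).name.lower() in inventory)
```

An empty result across all of the intruder’s MRU lists is what supports the kind of argument made in the next paragraph: that the sensitive files were not accessed through the shell.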

Again, multiple sources of data were used pursuant to this response and examination. VPN logs were used to confirm access and identify the intruder’s IP address, and then file system and MAC time analysis was used to confirm the intruder’s movements throughout the network. Finally, Registry analysis provided a clear picture of the intruder’s actions, including searches and file accesses. This last bit of analysis proved to be extremely valuable in determining whether or not the intruder had accessed sensitive data; thorough Registry analysis provided us with a strong argument that the intruder had not accessed files that had been previously determined to contain sensitive data.

Case Study 3: DFRWS 2008 Forensic Rodeo

In August 2008, Cory Altheide and I attended the DFRWS 2008 conference. We had a great time there, and on Tuesday night, Cory participated in the Forensic Rodeo. I didn’t so much want to participate as I wanted to observe, to look over other people’s shoulders and see how they approached the task at hand. Sitting in my office, usually performing analysis of some kind by myself, I don’t often get the opportunity to engage with others about the work we do and the burdens we share, let alone actually see them in action. Looking back, it’s kind of funny to hear myself saying "...in action," because truth be told, there’s about as much action in forensic analysis as there is in watching hair grow. Overall, however, this was a very enlightening experience, and others can try their hand at it as well: the Forensic Rodeo scenario and files can be found at www.dfrws.org/2008/rodeo.shtml.

The Forensic Rodeo challenge involved a memory dump and an image acquired from a thumb drive. The goal of the challenge was to analyze the two pieces of data and answer some of the questions provided by the referees, Dan Kalil. Dr. Michael Cohen won the rodeo, having been judged to have completed more of the provided questions than anyone else. I don’t want to provide any tips or inside information with respect to the rodeo data, but I will say that pursuing the rodeo involved memory analysis and data carving (no Registry analysis this time!).
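
Without giving anything away about the rodeo data, the basic idea behind data carving is easy to show. The sketch below is a naive header/footer carver for JPEG images, using the JPEG start-of-image (FF D8 FF) and end-of-image (FF D9) markers; the max_size cap is an arbitrary assumption. Real carvers are more careful: an embedded EXIF thumbnail, for example, has its own end-of-image marker and will cause this sketch to truncate the outer image.

```python
from typing import List, Tuple

SOI = b"\xff\xd8\xff"  # JPEG start-of-image marker plus the next marker's prefix
EOI = b"\xff\xd9"      # JPEG end-of-image marker


def carve_jpegs(data: bytes, max_size: int = 10 * 1024 * 1024) -> List[Tuple[int, bytes]]:
    """Return (offset, candidate_bytes) for each SOI...EOI run in `data`."""
    results = []
    start = data.find(SOI)
    while start != -1:
        # Look for the footer, but only within max_size bytes of the header.
        end = data.find(EOI, start + len(SOI), start + max_size)
        if end == -1:
            start = data.find(SOI, start + 1)  # orphan header: keep scanning
            continue
        results.append((start, data[start:end + len(EOI)]))
        start = data.find(SOI, end + len(EOI))
    return results
```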

Case Study 4: Copying Files

A question I see (and get asked) very often is whether it is possible to determine which files had been copied to or from a thumb drive or external storage device. I see this question many times in public listservs, and when I attended the first SANS Forensic Summit in October 2008, I was asked this question by two attendees as well as by one of my own team members who was fielding the question for a customer. Given how ubiquitous USB thumb drives are these days, as well as other removable storage media such as digital cameras, iPods, and so on, this is a very real concern for many organizations with respect to data exfiltration (i.e., theft of data such as intellectual property, etc.). Unfortunately, far too often it is a concern after the fact rather than something that is addressed proactively.

Using analysis techniques from both topics, we can determine not only when a device was first plugged into a system but also when it was last disconnected from a system. This can be very useful information when it comes to mapping the connection of removable storage devices to a system or across a number of systems. This also gives us something with which to start a timeline.

Now, one of the issues with respect to the original question is that most modern operating systems (and I say "most" simply because I haven’t seen them all) do not audit or log the copy or move operations within the file system. However, many folks seem to think that because forensic analysis can recover deleted files, other kinds of magic can be performed as well—magic such as determining who copied a file from one location to another and when they did this. Contrary to popular TV shows such as CSI, this simply isn’t the case in most instances. If the analyst were to have both pieces of media—the source and the destination drives or volumes for the copy—then he or she could determine by analyzing the files on both pieces of media (and their time stamps) which piece of media was the source and which was the destination. However, in most instances, the analyst does not have both pieces of media.
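
When both pieces of media are available, the comparison described above can be reduced to matching file content and comparing creation times. This sketch assumes each volume has been summarized as a hypothetical mapping of path to (content hash, creation time). It applies the rule from the Knowledge Base article discussed next (a copy gets a new creation time at the destination), so the later creation time marks the likely destination. A move, which preserves the creation time, comes out as "undetermined," and skewed clocks between systems can mislead it.

```python
from typing import Dict, List, Tuple

Volume = Dict[str, Tuple[str, float]]  # path -> (content hash, creation epoch)


def likely_direction(vol_a: Volume, vol_b: Volume) -> List[Tuple[str, str, str]]:
    """For files with identical content on both volumes, guess the copy direction."""
    by_hash_b: Dict[str, List[Tuple[str, float]]] = {}
    for path, (digest, created) in vol_b.items():
        by_hash_b.setdefault(digest, []).append((path, created))
    findings = []
    for path_a, (digest, created_a) in vol_a.items():
        for path_b, created_b in by_hash_b.get(digest, []):
            # A copy receives a fresh creation time, so later = destination.
            if created_a < created_b:
                verdict = "A -> B"
            elif created_b < created_a:
                verdict = "B -> A"
            else:
                verdict = "undetermined"
            findings.append((path_a, path_b, verdict))
    return findings
```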

Having only one piece of media to examine may not allow the analyst to definitively determine files that were copied from that media, but the analyst may be able to find indications of files that may have been copied to the media, using information provided in Microsoft Knowledge Base article 299648, titled "Description of NTFS date and time stamps for files and folders" (http://support.microsoft.com/?kbid=299648). This Knowledge Base article gives a clear description of how file times are affected by copy or move operations from one media to another. For example, if a file is copied from a FAT partition (most thumb drives are formatted with the FAT file system by default) to an NTFS partition, the last modification date of the file remains unchanged, but the creation date of the file is updated to the current time on the system. The same holds true if the file is copied from an NTFS directory to an NTFS subdirectory. However, if the file is moved (rather than copied), the file retains the creation date of the file in the original location. According to the article, "In all examples, the modified date and time of a file does not change unless a property of the file has changed. The created date and time of the file changes depending on whether the file was copied or moved."

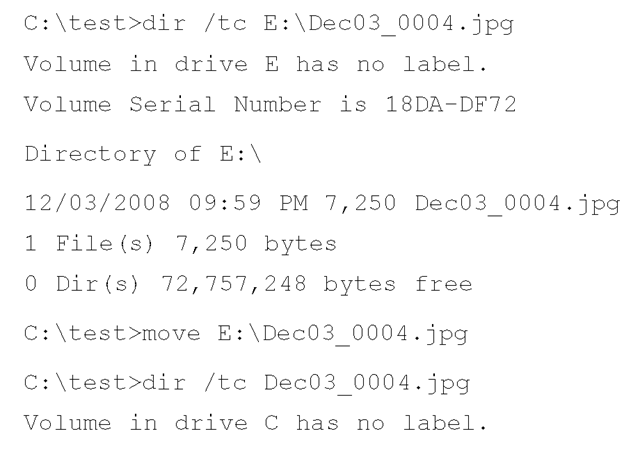

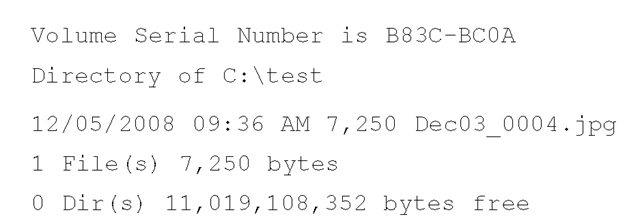

As a caveat, however, the Knowledge Base article does not describe the method used to move the file. For example, consider the following command-line "move" commands, in which a file is moved from a FAT-formatted removable storage device (E:\) to an NTFS directory:

As you can see from this example, the creation date of the file did not remain the same after the move operation took place, which is contrary to what is stated in Microsoft Knowledge Base article 299648. This clearly illustrates the need for testing and examination of tools and techniques.
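
In the spirit of testing rather than assuming, a small harness can record a file’s time stamps before and after a move. This is a sketch: it moves the file with Python’s shutil.move, which is neither the CLI move command nor Explorer’s Cut and Paste, so its results say nothing about those methods; the point is to run the same kind of test with the exact method you care about. Creation time is best-effort (st_birthtime on macOS/BSD and on Windows from Python 3.12, st_ctime on older Windows Pythons, and unavailable on Linux). The function names and probe file name are hypothetical.

```python
import os
import shutil
import tempfile
from pathlib import Path
from typing import Optional


def creation_time(st: os.stat_result) -> Optional[float]:
    if hasattr(st, "st_birthtime"):   # macOS/BSD, and Windows on Python 3.12+
        return st.st_birthtime
    if os.name == "nt":               # older Windows Pythons: st_ctime is creation
        return st.st_ctime
    return None                       # e.g., Linux does not expose it via os.stat


def snapshot(path: Path) -> dict:
    st = os.stat(path)
    return {"modified": st.st_mtime, "created": creation_time(st)}


def compare_move(src_dir: str, dst_dir: str, name: str = "probe.txt"):
    """Create a probe file, move it with shutil.move, return (before, after) times."""
    src = Path(src_dir) / name
    src.write_text("test data\n")
    os.utime(src, (1_000_000_000, 1_000_000_000))  # fixed, recognizable mtime
    before = snapshot(src)
    dst = Path(shutil.move(str(src), str(Path(dst_dir) / name)))
    return before, snapshot(dst)


if __name__ == "__main__":
    print(compare_move(tempfile.mkdtemp(), tempfile.mkdtemp()))
```

To test a FAT-to-NTFS move, point src_dir at a directory on the thumb drive and dst_dir at an NTFS directory, and compare the two "created" values.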

This points out several important factors regarding our analysis. The first is that if the user copies a file and then modifies it in some way, we’ve lost information that may indicate that the file was copied from one location to another; specifically, a file with a modification date older than its creation date might indicate that the file was copied. Consider that statement for a moment: shouldn’t a file have to be created first and then, at some point after it was created, modified? By default, MS Word will automatically save a copy of a file you are editing approximately every 10 minutes, so after the first 10 minutes, you would expect the creation date to be 10 minutes older than the modification date (in ideal circumstances, of course). Another factor to consider is that with only a single piece of media to analyze, you may not be able to definitively determine files that had been moved from one location to another, because a moved file retains the time stamps of the original file (assuming the Cut and Paste menu options are used rather than the move command at the CLI).
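
The "modified before created" indicator can be checked across a volume with a short sketch like the one below. The pure function flag_copies does the actual test on (path, created, modified) records, so it can be fed times from any source (for example, a file system timeline); scan is a helper for live or mounted volumes that uses best-effort creation times as described in the comments. The 2-second slack is an assumption meant to absorb FAT’s 2-second modification-time granularity. A flagged file is an indication of a possible copy, not proof.

```python
import os
from typing import Iterable, List, Optional, Tuple

FileRecord = Tuple[str, Optional[float], float]  # (path, created, modified)


def creation_time(st: os.stat_result) -> Optional[float]:
    """Best-effort creation time from os.stat (None where unavailable)."""
    if hasattr(st, "st_birthtime"):   # macOS/BSD, and Windows on Python 3.12+
        return st.st_birthtime
    if os.name == "nt":               # older Windows Pythons: st_ctime is creation
        return st.st_ctime
    return None                       # e.g., Linux: st_ctime is metadata-change time


def scan(root: str) -> List[FileRecord]:
    """Collect (path, created, modified) for every file under root."""
    records = []
    for dirpath, _dirs, files in os.walk(root):
        for name in files:
            path = os.path.join(dirpath, name)
            st = os.stat(path)
            records.append((path, creation_time(st), st.st_mtime))
    return records


def flag_copies(records: Iterable[FileRecord], slack: float = 2.0) -> List[str]:
    """Paths whose last-modified time precedes their creation time."""
    return [path for path, created, modified in records
            if created is not None and modified + slack < created]
```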

Finally, although the file times associated with the file system are affected by a copy or move operation, times embedded within the contents of the file as metadata will not be modified and can therefore still support analysis to some degree. Depending on the type of document and the extent of metadata maintained within it, you may be able to clearly determine that the document originated somewhere other than the media being analyzed.

Analyzing an acquired image in an attempt to determine files that may have been copied to the system can involve Registry analysis as well as file system and MAC time analysis. In some instances, depending on the type of document that was copied, file metadata analysis may shed some light on the situation.

Case Study 5: Network Information

There are times during incident detection or response activities when network operations personnel may have access to firewall logs from egress filtering, or to network traffic captures that show traffic (and possibly data) leaving the internal infrastructure. Regardless of the source (logs or traffic captures), someone will have access to data that clearly shows the source IP address of the traffic as it originates from inside the network infrastructure. That address can be traced to an active system on the internal network, either directly (if the system has a static IP address) or through DHCP logs. Another piece of information, the source port of the traffic (part of the TCP or UDP header), will help you tie that outbound traffic to a process running on the system.
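
Tracing a dynamically assigned address through DHCP logs amounts to finding the lease that was in effect for that address at the time of the traffic. The sketch below assumes the DHCP log entries have already been parsed into a hypothetical Lease structure, and it simplifies by treating the most recent grant or renewal at or before the traffic time as authoritative; it does not model lease expiry or release, which a real lookup should check.

```python
from bisect import bisect_right
from datetime import datetime
from typing import Iterable, NamedTuple, Optional


class Lease(NamedTuple):
    when: datetime  # time the lease was granted or renewed
    ip: str
    host: str
    mac: str


def host_for(leases: Iterable[Lease], ip: str, at: datetime) -> Optional[Lease]:
    """Most recent lease for `ip` granted at or before `at` (None if none)."""
    candidates = sorted((l for l in leases if l.ip == ip), key=lambda l: l.when)
    times = [l.when for l in candidates]
    i = bisect_right(times, at)  # number of leases granted at or before `at`
    return candidates[i - 1] if i else None
```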

Before proceeding with this description, an important fact needs to be pointed out and understood: Nothing happens on a computer system without a process executing. More precisely, threads are the unit of execution on Windows systems, but the point is that for a system to generate network traffic, some code has to be executing on it. That being the case, discovering the network traffic details (i.e., source IP address and port) calls for an immediate response, reflecting a term I heard Aaron Walters use: "temporal proximity." Although it sounds very "Star Trek-y," this term refers to responding immediately, relatively close to the time at which an incident is detected, as opposed to waiting hours or even days to respond. By observing temporal proximity with respect to the incident, a responder is more likely to collect fresher (and perhaps more complete) data; the output of netstat.exe (or the network connections visible in a memory dump) might still show indications of that outbound connection and point the responder to the process from which the traffic originated. For example, on my test system, the command line netstat -ano returns the following entry, an excerpt of the output of the command:

This excerpt of the output of the netstat command illustrates the source IP address and port used by the process with the PID of 3536, which in this case is firefox.exe. This same information would be clearly visible in a network traffic capture (as described previously), and it may also be visible in firewall logs or logs maintained by other network devices. Knowing how to smoothly transition from network-based to host-based data collection and analysis can significantly reduce the time it takes to identify and respond to an incident. In such a situation, device logs can be correlated with network traffic captures and host-based data (e.g., a memory dump) to determine the volume and type of data that was leaving the network.
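
Going from a source port seen on the network to a PID on the host can be scripted against saved netstat -ano output. This is a sketch that assumes the usual column layout (Proto, Local Address, Foreign Address, State, PID), with UDP lines lacking a State column; the addresses in the test data are documentation examples, not data from the case. The resulting PID can then be resolved to a process name with tasklist /fi "PID eq <pid>" or from a memory dump.

```python
import re
from typing import Optional

# Proto, local addr:port, foreign addr, optional state (absent for UDP), PID.
# The greedy (\S+) before ':' backtracks to the last colon, so IPv6 works too.
NETSTAT_LINE = re.compile(
    r"^\s*(TCP|UDP)\s+(\S+):(\d+)\s+(\S+)\s+(?:(\S+)\s+)?(\d+)\s*$")


def pid_for_port(netstat_output: str, local_port: int,
                 proto: str = "TCP") -> Optional[int]:
    """Return the PID owning `local_port` in saved `netstat -ano` output."""
    for line in netstat_output.splitlines():
        m = NETSTAT_LINE.match(line)
        if m and m.group(1) == proto and int(m.group(3)) == local_port:
            return int(m.group(6))
    return None
```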

Case Study 6: SQL Injection

In the latter half of 2007, a number of SQL injection attacks occurred and as the weeks and months passed, they seemed to increase not only in frequency but also in sophistication. SQL injection is a technique that takes advantage of weaknesses in the application layer between a Web server and a database system. An intruder will submit specially crafted queries to the Web server, which will pass those queries on to the database with no validation of the user input, no bounds checking, and so on. In turn, the database will process those commands for the intruder.
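
The core weakness is easy to demonstrate. The sketch below uses Python’s built-in sqlite3 module as a stand-in for MS SQL Server (the SQL dialects differ, but the flaw is the same): when user input is pasted into the query text, the input can change the query itself, whereas parameter binding keeps the input as a value. The table and payload are hypothetical.

```python
import sqlite3


def setup() -> sqlite3.Connection:
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE accounts (name TEXT, balance INTEGER)")
    db.executemany("INSERT INTO accounts VALUES (?, ?)", [("alice", 10), ("bob", 20)])
    return db


def lookup_unsafe(db: sqlite3.Connection, name: str):
    # Vulnerable: the input becomes part of the SQL statement itself.
    return db.execute(f"SELECT name, balance FROM accounts WHERE name = '{name}'").fetchall()


def lookup_safe(db: sqlite3.Connection, name: str):
    # Parameter binding: the input is only ever treated as a value.
    return db.execute("SELECT name, balance FROM accounts WHERE name = ?", (name,)).fetchall()
```

With the input x' OR '1'='1, the unsafe query becomes WHERE name = 'x' OR '1'='1' and returns every row; the safe version looks for an account literally named x' OR '1'='1 and returns nothing.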

Tools & Traps…

SQL Injection

A quick Google search for "SQL injection" reveals a number of links explaining the technique in detail, providing links to presentations and "cheat sheets" for how to perform these attacks, as well as videos demonstrating SQL injection attacks. The fact that there is no end of extremely detailed resources available for perpetrating these attacks should be more than enough to convince CIOs and CISOs to commit resources to protecting their organizations. This can be accomplished through a thorough infrastructure assessment that takes the storage and processing of sensitive data into account and results in a prioritized approach to protecting the data and the infrastructure.

In early spring 2008, there was a great deal of media attention toward a certain type of SQL injection attack, in which apparently automated software would inject specially crafted links to JavaScript into the database, and those links would then be processed by a user’s Web browser as the database provided those links back to the Web server as dynamic Web content. This type of attack received media attention because it was very visible, whereas the attacks that weren’t being talked about publicly were those in which the intruder used SQL injection to get deep within the target’s infrastructure and, in many cases, remain on the network with extremely high privileges for a considerable period of time.

The basis of the SQL injection attack takes advantage of sound infrastructure design, in that the publicly available Web server is positioned in the "demilitarized zone" (DMZ) portion of the infrastructure and the database resides on the internal infrastructure. The intruder’s commands would be received by the Web server and passed on to the database server, completely bypassing the firewall (because communication between the Web and database servers is a business requirement). From an incident response perspective, the intruder’s commands were clearly visible in the Web server logs in ASCII format, initially with no special encoding. Extracting the logs, a responder could clearly see initial contact, testing of the vulnerability, reconnaissance into the network (intruder-issued commands such as ipconfig /all and net view), and even branching out to other systems. Invariably, at some point the intruder would establish a foothold on the systems they had access to by downloading software to those systems. Initially, this was accomplished by using the TFTP client (which uses the UDP protocol) to download files to the system. Later came the creation and execution of FTP scripts (i.e., creating the scripts using the "echo" command and then launching them using ftp -s:filename) to download archives to the platform. It appeared that in several cases the downloaded archives were self-extracting executables, because the Web server logs showed the intruder launching the downloaded .exe file and then either checking for the resulting files (via dir) or simply running the commands.
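
While the commands were still unencoded, finding them in the Web server logs was largely a matter of URL-decoding each entry and looking for telltale strings. The sketch below does that for W3C-style log lines (comment lines start with #). The indicator list includes commands named above; xp_cmdshell, the MS SQL Server extended stored procedure commonly used to run operating system commands, is my addition. Broad indicators such as "echo " will produce false positives and are meant to narrow the review, not replace it.

```python
from typing import Iterable, Iterator, List, Tuple
from urllib.parse import unquote_plus

INDICATORS = ("xp_cmdshell", "ipconfig", "net view", "tftp", "ftp -s:", "echo ")


def suspicious_lines(lines: Iterable[str],
                     indicators: Tuple[str, ...] = INDICATORS) -> Iterator[Tuple[int, List[str]]]:
    """Yield (line_number, matched_indicators) for log lines worth reviewing."""
    for lineno, line in enumerate(lines, 1):
        if line.startswith("#"):  # W3C header/comment lines
            continue
        decoded = unquote_plus(line).lower()  # '+' and %XX -> plain text
        found = [i for i in indicators if i in decoded]
        if found:
            yield lineno, found
```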

During the course of the incident response, several samples of executable files that had been downloaded to systems were collected. At first, these samples were not detected by antivirus scanners or identified when submitted to sites such as VirusTotal.com. As winter passed, incident response activities continued to include SQL injection attacks of increasing sophistication. Within a few short months, the search terms used to locate SQL injection attacks in Web server logs had become useless, because the attackers were using new techniques to encode their commands (hexadecimal encoding or, in some cases, character set encoding). Therefore, search criteria needed to be updated in order to detect the attacks. One method for doing this is to identify the page being requested and then look for abnormally long requests being submitted to that page. Adding newly discovered keywords to the search criteria helped narrow down false positives, and custom Perl scripts helped quickly decode the queries into human-readable format.

Another technique the attacker used to get executable code onto the system was to break an executable file into 512-byte chunks, submit each chunk in a numbered sequence into database fields (remember, through SQL injection, the attacker is executing commands on the database at the same privilege level as the database, which for MS SQL Server is usually System), and then reassemble and execute the code within the file system of the database server. In instances in which this technique was used, we were able to extract and reassemble the executable code from the Web server logs and then validate that we had a correctly formatted executable file by parsing the PE header. If we had similarly named executables in our archive from previous SQL injection attacks, we used Jesse Kornblum’s ssdeep.exe (http://ssdeep.sourceforge.net/) to perform fuzzy hash comparison and, in most cases, determined that the files were 98 percent or 99 percent similar. Parsing the PE header to break the executable file down into sections allowed us to identify sections that had changed (through MD5 hash comparison) from previous versions of the files.
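
The two log-analysis techniques described above (looking for abnormally long requests to a given page, and decoding hex-encoded commands) can be sketched in Python rather than Perl. The decoder targets 0x... literals of the kind seen in attacks such as CAST(0x... AS VARCHAR(...)); it guesses UTF-16LE when every other byte is zero (as with NVARCHAR casts) and otherwise decodes byte-for-byte as Latin-1. The minimum literal length of 8 bytes and the 5x-median threshold are arbitrary assumptions to tune against your own logs, and log entries are assumed to be already split into (URI stem, URI query) pairs.

```python
import re
from statistics import median
from typing import Iterable, List, Tuple
from urllib.parse import unquote_plus

HEX_LITERAL = re.compile(r"0x((?:[0-9a-fA-F]{2}){8,})")  # at least 8 bytes


def decode_hex_literals(query: str) -> str:
    """URL-decode a query and replace long 0x... literals with [decoded text]."""
    def _decode(m: re.Match) -> str:
        raw = bytes.fromhex(m.group(1))
        if len(raw) % 2 == 0 and raw[1::2].count(0) == len(raw) // 2:
            text = raw.decode("utf-16-le", errors="replace")  # looks like NVARCHAR
        else:
            text = raw.decode("latin-1")  # byte-for-byte, never fails
        return "[" + text + "]"
    return HEX_LITERAL.sub(_decode, unquote_plus(query))


def long_requests(entries: Iterable[Tuple[str, str]], page: str,
                  factor: float = 5.0) -> List[str]:
    """Queries to `page` longer than `factor` times that page's median length."""
    entries = list(entries)
    lengths = [len(q) for stem, q in entries if stem.lower() == page.lower()]
    if not lengths:
        return []
    cutoff = factor * max(median(lengths), 1)
    return [q for stem, q in entries
            if stem.lower() == page.lower() and len(q) > cutoff]
```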
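
Reassembling the chunked executable and checking it can be sketched as follows. This assumes the numbered chunks have already been extracted and decoded from the Web server logs (that step depends on the attacker’s encoding and is not shown), and the function names are hypothetical. pe_sections checks the MZ and PE signatures and returns an MD5 hash of each section’s raw data, using the header layout from Microsoft’s PE/COFF specification: e_lfanew at offset 0x3C, a 20-byte COFF header after the PE signature, and 40-byte section headers following the optional header. Comparing these per-section hashes against an earlier sample shows which sections changed.

```python
import hashlib
import struct
from typing import Iterable, List, Tuple


def reassemble(chunks: Iterable[Tuple[int, bytes]]) -> bytes:
    """Join (sequence_number, data) chunks in order, refusing gaps/duplicates."""
    ordered = sorted(chunks, key=lambda c: c[0])
    if not ordered:
        return b""
    seqs = [s for s, _ in ordered]
    if seqs != list(range(seqs[0], seqs[0] + len(seqs))):
        raise ValueError("gap or duplicate in chunk sequence")
    return b"".join(data for _, data in ordered)


def pe_sections(image: bytes) -> List[Tuple[str, str]]:
    """Validate MZ/PE signatures; return [(section_name, md5_of_raw_data)]."""
    if image[:2] != b"MZ":
        raise ValueError("missing MZ signature")
    (e_lfanew,) = struct.unpack_from("<I", image, 0x3C)
    if image[e_lfanew:e_lfanew + 4] != b"PE\x00\x00":
        raise ValueError("missing PE signature")
    coff = e_lfanew + 4
    (num_sections,) = struct.unpack_from("<H", image, coff + 2)   # NumberOfSections
    (opt_size,) = struct.unpack_from("<H", image, coff + 16)      # SizeOfOptionalHeader
    table = coff + 20 + opt_size
    sections = []
    for i in range(num_sections):
        entry = table + 40 * i
        name = image[entry:entry + 8].rstrip(b"\x00").decode("ascii", "replace")
        raw_size, raw_ptr = struct.unpack_from("<II", image, entry + 16)  # SizeOfRawData, PointerToRawData
        sections.append((name, hashlib.md5(image[raw_ptr:raw_ptr + raw_size]).hexdigest()))
    return sections
```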

When responding to SQL injection attacks, techniques for tracking the attacker within the infrastructure included Registry analysis, because at some point the attacker was able to interact with the Windows Explorer shell of the compromised systems. In a few cases, collective (or communal) administrative accounts were compromised (e.g., through easily guessed passwords), but in most cases the attacker would create domain administrator-level user accounts (in some cases, the existence of the accounts was corroborated by Event Log entries showing the account creation) and then use those accounts to access other systems within the infrastructure. File system analysis showed the creation of user profiles on the systems and provided an initial timeline for the use of those accounts, whereas Registry analysis provided indications of the attacker’s activities on those systems, as well as the persistence mechanisms the attacker employed for malware added to compromised systems. Because the Web server is not compromised during a SQL injection attack, the Web server logs provided a clear picture of the attacker’s initial activities in gaining access to the infrastructure (in some cases, reconnaissance probes reached back weeks or months). File or malware analysis indicated that similar (more advanced) tools were being used as time went by.