Dynamic Analysis

Dynamic analysis involves launching an executable file in a controlled and monitored environment so that you can observe and document its effects on a system. This is an extremely useful analysis mechanism, in that it gives you a more detailed view of what the malware does on and to a system, and especially in what order. This is most useful in cases in which the malware is packed or encrypted, as the executable image must be unpacked or decrypted (or both) in memory prior to being run. So, not only will you see the tracks in the snow and the broken tree limbs, as it were, but using techniques for capturing and parsing the contents of memory you can actually see the Abominable Snowman, live and in action.

Testing Environment

If you intend to perform dynamic analysis of malware, one of your considerations will be the testing or host environment. After all, it isn’t a good idea to see what a piece of malware does by dropping it onto a production network and letting it run amok. It’s bad enough that these things happen by accident; you don’t want to actually do this on purpose.

One way to set up your testing environment is to have a system on a separate network, with no electrical connectivity (notice here that I don’t say "logical connection" or "VLAN on a switch") to the rest of your network. There has to be that "air gap" there; I strongly recommend that you don’t even mess with having a knife switch to separate your malware "cage" from your infrastructure, because we all know that one day, when you’re testing something really nasty, someone’s going to look up and realize that he forgot to throw the switch and separate the networks. Also, if you’re undergoing an audit required by any sort of regulatory body, the last thing you want to have is a way for malware that will potentially steal sensitive personal data to get into a network where sensitive personal data lives. If your lab is accredited or certified by an appropriate agency, you can seriously jeopardize that status by running untrusted programs on a live network. Losing that accreditation will make you very unpopular with what is left of your organization. This applies not only to labs accredited for forensic analysis work, but also to other regulatory agencies, as well.

One of the drawbacks of having a "throwaway" system or two is that you have to reinstall the operating system after each test; how else are you going to ensure that you’re collecting clean data and that your results aren’t being tainted by another piece of malware? One way to accomplish this is with virtualization.

Virtualization

If you don’t have a throwaway system that you can constantly reinstall and return to a pristine state (who really wants to do that?), virtualization is another option available to you. A number of freeware and commercial virtualization tools are available to you, such as:

■ Bochs Runs on Windows, Linux, and even Xbox, and is free and open source (http://bochs.sourceforge.net/).

■ Parallels Runs on the Mac platform as well as Windows and Linux (www.parallels.com).

■ Microsoft Virtual PC Runs on Windows as the host operating system; can run DOS, Windows, and OS/2 guest operating systems, and is freely available (www.microsoft.com/windows/products/winfamily/virtualpc/default.mspx).

■ Virtual Iron "Bare metal install" (meaning it is not installed on a host operating system) and can reportedly run Windows and Linux at near-native speeds (www.virtualiron.com/).

■ Win4Lin Runs on Linux; allows you to run Windows applications (http://win4lin.net/content/).

■ VMware Runs on Windows and Linux, and allows you to host a number of guest operating systems. The VMware Server and VMware Player products are freely available. VMware is considered by many to be the de facto standard for virtualization products, and is discussed in greater detail in the following sections (www.vmware.com).

Tip::

In January 2009, as I was preparing the manuscript for this topic to go to the developer, an interesting tool called Zero Wine (http://zerowine.sourceforge.net/) caught my eye. Zero Wine is a QEMU-based virtual environment with a Debian Linux guest operating system installed. The guest operating system has Wine installed as well, and when run it starts a Web-based dynamic malware analysis platform. Essentially, you can upload a malware executable image file much as you would to the VirusTotal.com Web site, only with Zero Wine, the malware is executed within the virtual environment and all system activity (access to API functions, Registry activity), as well as static analysis parsing is recorded and made available to the analyst.

This is by no means a complete list, of course. The virtualization option you choose depends largely on your needs, environment (i.e., available systems, budget, etc.), and comfort level in working with various host and guest operating systems. If you’re unsure as to which option is best for you, take a look at the "Comparison of virtual machines" page (http://en.wikipedia.org/wiki/Comparison_of_virtual_machines) on Wikipedia. This may help you narrow down your choices based on your environment, your budget, and the level of effort required to get a virtualization platform up and running.

The benefit of using a virtual system when analyzing malware is that you can create a "snapshot" of that system and then "infect" it, and perform all of your testing and analysis. Once you’ve collected all of your data, you can revert back to the snapshot, returning the system to a pristine, prior-to-infection state. In this way, not only can systems be more easily recovered, but multiple versions of similar malware can be tested against the same platform for a more even comparison.

Perhaps the most commonly known virtualization platform is VMware. VMware provides several virtualization products for free, such as VMware Player, which allows you to play virtual machines (although not create them), and VMware Server. In addition, a number of prebuilt virtual machines or appliances are available for download and use. As of this writing, I saw ISA Server and Microsoft SQL Server virtual appliances available for download.

There is a caveat to using VMware, and it applies to other virtualization environments, as well. Not long ago, there were discussions about how software could be used to detect the existence of a virtualization environment. Soon afterward, analysts began seeing malware that would not only detect the presence of a virtualization environment, but also actually behave differently or simply not function at all. On November 19, 2006, Lenny Zeltser posted an ISC handler’s diary entry (http://isc.sans.org/diary.php?storyid=1871) that discussed virtual machine detection in malware through the use of commercial tools. This is something you should keep in mind, and consider when performing dynamic malware analysis. Be sure to thoroughly interview any users who witnessed the issue, and determine as many of the potential artifacts as you can before taking your malware sample back to the lab. That way, if you are seeing radically different behavior in the malware when running in a virtual environment, you may have found an example of malware that includes this code.

Throwaway Systems

If virtualization is simply not an option (due to price, experience, comfort level, etc.) you may opt to go with throwaway systems that can quickly be imaged and rebuilt. Some corporate organizations use tools such as Symantec’s Norton Ghost to create images for systems that all have the same hardware. That way, a standard build can be used to set up the systems, making them easier to manage. Other organizations have used a similar approach with training environments, allowing the IT staff to quickly return all systems to a known state. For example, when I was performing vulnerability assessments, I performed an assessment for an organization that had a training environment. They proudly told me that using Norton Ghost, they could completely reload the operating systems on all 68 training workstations with a single diskette.

If this is something you opt to do, you need to make sure the systems are not attached to a corporate or production network in any way. You might think that this goes without saying, but quality assurance and testing networks have been taken down due to a rushed administrator or an improperly configured virtual local area network (VLAN) on a switch. You should ensure that you have more than just a logical gap between your testing platform and any other networks. An actual air gap is best.

Once you’ve decided on the platform you will use, you can follow the same data collection and analysis processes that you would use in a virtual environment on the throwaway systems; the process really does not differ. On a throwaway system, however, you will need to include some method for capturing the contents of memory on your platform (remember, VMware sessions can simply be suspended), particularly if you are analyzing obfuscated malware.

Tools

You can use a variety of tools to monitor systems when testing malware. For the most part, you want to have all of your tools in place before you launch your malware sample. Also, you want to be familiar with what your tools are capable of as well as how to use them.

One of the big differences between malware analysis and incident response is that as the person analyzing the malware, you have the opportunity to set up and configure the test system prior to being infected. Although it’s true, in theory, that system administrators have this same opportunity, it’s fairly rare that you’ll find major server systems that have been heavily configured with security and especially incident response in mind.

When testing malware, there are some challenges that you have to be aware of. For example, you do not know what the malware is going to do when launched. I know it sounds simple, but more than once I’ve talked to people who’ve not taken this into account. What I mean is that you don’t know whether the malware is going to open up and sit there, waiting to be analyzed, or whether it’s going to do its job quickly and disappear. I’ve seen some malware that would open a port awaiting connections (backdoor), other malware that has attempted to connect to systems on the Internet (IRCbots), and malware that has taken only a fraction of a second to inject its code into another running process and then disappear. When doing dynamic analysis, you have the opportunity to repeat the "crime" over and over again to try to see the details. When we perform incident response activities, we’re essentially taking snapshots of the scene, using tools to capture state information from the system at discrete moments in time. This is akin to trying to perform surveillance with a Polaroid camera. During dynamic analysis, we want to monitor the scene with live video, where we can capture information over a continual span of time rather than at discrete moments. That way, hopefully we’ll be able to capture and analyze what goes on over the entire lifespan of the malware.

So, what tools do we want to use? To start, we want to log any and all network connectivity information, as malware may either attempt to communicate out to a remote system or open a port to listen for connections, or both. One way we can do this is to run a network sniffer such as Wireshark (formerly known as Ethereal, found at www.wireshark.org) on the network. If you’re using a stand-alone system you’ll want to have the sniffer on another system, and if you’re using VMware you’ll want to have Wireshark running on the host operating system, while the malware is being executed in one of the guest operating systems. The reason we do this will be apparent in a moment.

Another tool you’ll want to install on your system is Port Reporter (http://support.microsoft.com/kb/837243), which is freely available from Microsoft. Port Reporter runs as a service on Windows systems and records Transmission Control Protocol (TCP) and User Datagram Protocol (UDP) port activity. On Windows XP and Windows 2003 systems, Port Reporter will record the network ports that are used, the process or service that uses those ports, the modules loaded by the process, and the user account that runs the process.

Less information is recorded on Windows 2000 systems. Port Reporter has a variety of configuration options, such as where within the file system the log files are created, whether the service starts automatically on system boot or manually (which is the default), and so forth. You can control these options through command-line parameters added to the service launch after installing Port Reporter. Before installing Port Reporter, be sure to read through the Knowledge Base article so that you understand how it works and what information it can provide.

Tip::

Some malware may stop functioning and simply shut down if it is unable to connect to a system on the Internet, such as a command and control server. One way around this is to take a look at the network traffic being generated by the process and see whether it does a domain name system (DNS) lookup for a specific host name. You can then modify your hosts file (located in the %WinDir%\system32\drivers\etc directory) to point your system to a specific system on your network, rather than one on the Internet. See Microsoft Knowledge Base article 172218 (http://support.microsoft.com/kb/172218) for specific information on how Windows systems resolve TCP/Internet Protocol (IP) host names.
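The hosts file change described in this tip can be scripted so that it is applied the same way on every test run. The following is only a minimal sketch: the function name `redirect_host`, the host name, and the lab IP address are hypothetical, and the hosts file format is assumed to be the usual "address, whitespace, name" layout with `#` comments.

```python
from pathlib import Path


def redirect_host(hosts_path, hostname, lab_ip):
    """Append 'lab_ip<TAB>hostname' to a hosts file unless the name is already mapped.

    Returns True if an entry was added, False if the host name was already present.
    """
    path = Path(hosts_path)
    text = path.read_text() if path.exists() else ""

    for line in text.splitlines():
        # Drop trailing comments, then split into address + names.
        fields = line.split("#", 1)[0].split()
        if hostname.lower() in (name.lower() for name in fields[1:]):
            return False  # already mapped; leave the file untouched

    # Make sure the new entry starts on its own line.
    prefix = "" if text == "" or text.endswith("\n") else "\n"
    with path.open("a") as fh:
        fh.write(f"{prefix}{lab_ip}\t{hostname}\n")
    return True
```

On the analysis platform this might be called as `redirect_host(r"C:\Windows\system32\drivers\etc\hosts", "c2.example.test", "192.168.56.10")`, with the address pointing at a system in the isolated lab that is listening for the connection.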

Port Reporter creates three types of log files: an initialization log (i.e., PR-INITIAL-*.log, with the asterisk replacing the date and time in 24-hour format for when the log was created) that records state information about the system when the service starts; a ports log (i.e., PR-PORTS-*.log) that maintains information about network connections and port usage, similar to netstat.exe; and a process ID log (i.e., PR-PIDS-*.log) that maintains process information.
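When you are collecting logs from several test runs, it can help to sort the Port Reporter files by type before you start reading them. The sketch below relies only on the three file name prefixes given above; the function name `classify_log` is hypothetical, and the date/time portion of the name is treated as an opaque string because its exact layout is not spelled out here.

```python
import re

# Map the prefix in the file name to the kind of log it holds.
LOG_TYPES = {
    "INITIAL": "initialization",
    "PORTS": "ports",
    "PIDS": "process IDs",
}

# PR-<TYPE>-<date and time>.log; the stamp is captured but not parsed.
LOG_NAME = re.compile(r"^PR-(INITIAL|PORTS|PIDS)-(.+)\.log$", re.IGNORECASE)


def classify_log(filename):
    """Return (log type, stamp) for a Port Reporter log name, or None if it is not one."""
    match = LOG_NAME.match(filename)
    if match is None:
        return None
    return LOG_TYPES[match.group(1).upper()], match.group(2)
```

Grouping the files this way makes it easier to pair each ports log with the process ID log from the same run.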

Microsoft also provides a WebCast (http://support.microsoft.com/kb/840832) that introduces the Port Reporter tool and describes its functionality. Microsoft also has the Port Reporter Parser (http://support.microsoft.com/kb/884289) tool available to make parsing the potentially voluminous Port Reporter logs easier and much more practical.

With these monitoring tools in place, you may be wondering, why do I need to run a network sniffer on another system? Why can’t I run it on the same dynamic analysis platform with all of my other monitoring tools? The short answer is that rootkits allow malware to hide its presence on a system by preventing the operating system from "seeing" the process, network connections, and so on. As of this writing, thorough testing has not been performed using various rootkits, so we want to be sure we collect as much information as possible. By running the network sniffer on another platform, separate from the testing platform, we ensure that part of our monitoring process is unaffected by the malware once it has been launched and is active.

Tip::

It may also be useful during dynamic malware analysis to run a scan of the "infected" system from another system. This scan may show a backdoor that is opened on the system but hidden through some means, such as a rootkit. You can use tools such as Nmap (http://nmap.org/) and PortQry (http://support.microsoft.com/kb/832919) to quickly scan the "infected" system and even attempt to determine the nature of the service listening on a specific port. Although issues of TCP/IP connectivity and "port knocking" are beyond the scope of this topic, there is always the possibility that certain queries (or combinations of queries) sent to an open port on the "infected" system may cause the process bound to that port to react in some way.
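If Nmap or PortQry is not at hand on the scanning system, a basic TCP connect scan can be approximated in a few lines. This is a rough sketch, not a replacement for either tool: the function name `scan_ports` and the timeout value are assumptions, it checks TCP only, and it makes no attempt to identify the service behind an open port.

```python
import socket


def scan_ports(host, ports, timeout=0.5):
    """Return the subset of `ports` on `host` that accept a TCP connection."""
    open_ports = []
    for port in ports:
        with socket.socket(socket.AF_INET, socket.SOCK_STREAM) as sock:
            sock.settimeout(timeout)
            # connect_ex returns 0 on success instead of raising an exception.
            if sock.connect_ex((host, port)) == 0:
                open_ports.append(port)
    return open_ports
```

Run from a second system in the lab, for example `scan_ports("192.168.56.20", range(1, 1025))` with a hypothetical address for the "infected" system, the result can be set alongside what the tools on that system report as listening.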

Remember, one of the things we need to understand as forensic examiners is that the absence of an artifact is in itself an artifact. In the context of dynamic malware analysis, this means that if we see network traffic emanating from the testing platform and going out to the Internet (or looking for other systems on the local subnet), but we do not observe any indications of the process or the network traffic being generated via the monitoring tools on the testing platform, we may have a rootkit on our hands.
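The comparison described here amounts to a set difference between what the external sniffer saw and what the tools on the testing platform reported. A minimal sketch follows, assuming you have already reduced both data sources to simple tuples of (local port, remote address, remote port); the function name and tuple layout are hypothetical. A mismatch is a lead to investigate, not proof of any particular hiding technique.

```python
def hidden_connections(seen_on_wire, reported_by_host):
    """Return connections observed by the external sniffer but absent from host-side logs.

    Each connection is a (local_port, remote_ip, remote_port) tuple.
    """
    return sorted(set(seen_on_wire) - set(reported_by_host))


# Example with made-up values: the sniffer saw two connections, the host logged one.
wire = [(1042, "203.0.113.5", 6667), (1043, "198.51.100.9", 80)]
host = [(1043, "198.51.100.9", 80)]
unexplained = hidden_connections(wire, host)
```

Here `unexplained` holds the single connection to port 6667 that the host-side tools never recorded, which is the traffic to examine more closely.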

As a caveat and warning, this is a good opportunity for me to express the need for a thorough and documented dynamic malware analysis process. I have seen malware that does not have rootkit capabilities, but instead injects code into another process’s memory space and runs from there. This is something you need to understand, as making the assumption that a rootkit is involved will lead to incorrect reporting, as well as incorrect actions in response to the issue. If you document the process and tools you use, the idea is that someone else will be able to verify your results. After all, using the same tools and the same process and the same malware, someone else should be able to see the same outcome, right? Or that person will be able to look at your process and inquire as to the absence or use of a particular tool, which will allow for a more thorough examination and analysis of the malware.

Tip::

When performing dynamic malware analysis, you have to plan for as much as you possibly can, but at the same time you should not overburden yourself or load your system down with so many tools that you’re spending so much time managing the tools that you’ve lost track of what you’re analyzing. I was working on a customer engagement once when we found an unusual file. The initial indication of the file was in the Registry; when launched, it added a value to the user’s Run key, as well as to the RunOnce key. Interestingly enough, it added the value to the RunOnce key by prefacing the name of the file with "*"; this tells the operating system to parse and launch the contents of the key even if the system is started in Safe Mode (pretty tricky!). We had to resort to dynamic analysis, as static analysis quickly revealed that the malware was encrypted, and PEiD was unable to determine the encryption method used. After launching the malware on our platform and analyzing the captured data, we could see where the malware would launch the Web browser invisibly (the browser process was running, but the GUI was not visible on the desktop) and then inject itself into the browser’s process space. From this we were able to determine that once the malware had been launched, we should be looking for the browser process for additional information. It also explained why, during volatile data analysis, we were seeing that the browser process was responsible for the unusual network connections, and there was no evidence of the malware process running.

It’s also a good idea to enable auditing for Process Tracking events in the Event Log, for both success and failure events. The Event Log can help you keep track of a number of different activities on the system, including the use of user privileges, logons, object access (this setting requires that you also configure access control lists [ACLs] on the objects— files, directories, Registry keys, etc.—that you specifically want monitored), and so forth. Because we’re interested in processes during dynamic malware analysis, enabling auditing for Process Tracking for both success and failure events will provide us with some useful data. Using auditpol.exe from the Resource Kit, we can configure the audit policy of the dynamic analysis platform, as well as confirm that it is set properly prior to testing. For example, use the following command line to ensure that the proper auditing is enabled:

To confirm that the proper auditing is still enabled prior to testing, simply launch auditpol.exe from the command line with no arguments.
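If you want the audit configuration to be part of a scripted setup, the command line can be built and run from a small wrapper. This is a sketch under stated assumptions: it assumes the Resource Kit version of auditpol.exe, which takes `/enable` followed by `/category:option` switches (the auditpol.exe that ships with later versions of Windows uses a different syntax), and the function names are hypothetical.

```python
import subprocess

VALID_OPTIONS = {"success", "failure", "all", "none"}


def auditpol_command(categories=("process",), option="all", exe="auditpol.exe"):
    """Build a Resource Kit style auditpol command line as an argument list."""
    if option not in VALID_OPTIONS:
        raise ValueError(f"unknown audit option: {option!r}")
    # Assumed syntax: auditpol.exe /enable /<category>:<option> ...
    return [exe, "/enable"] + [f"/{category}:{option}" for category in categories]


def apply_audit_policy(categories=("process",), option="all"):
    """Run auditpol on the local system and return its output (Windows only)."""
    result = subprocess.run(
        auditpol_command(categories, option),
        capture_output=True, text=True, check=True,
    )
    return result.stdout
```

With the defaults, `auditpol_command()` yields the arguments for enabling success and failure auditing of Process Tracking; passing `("process", "system")` would add System events as well.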

Tip::

You may also want to enable auditing of System events, but be sure to not enable too much auditing. There is such a thing as having too much data, and this can really slow down your analysis, particularly if the data isn’t of much use to you. Some people may feel they want to monitor everything so that they ensure that they don’t miss anything, but there’s a limit to how much data you can effectively use and analyze. Thoroughly assess what you’re planning to do, and set up a standard configuration for your testing platform and stick with it, unless there is a compelling reason to change it. Too much data can be as hazardous to an investigation as too little data.

As mentioned earlier, one way to monitor access to files and Registry keys is to enable object access auditing, set ACLs on all of the objects you’re interested in, and once you’ve executed the malware, attempt to make sense of the contents of the Event Log. Or you could look at two ways to monitor access to files and Registry keys: One is to take before and after snapshots and compare the two, and the other is to use real-time monitoring. When performing dynamic malware analysis, your best bet is to do both, and to do that you’ll need some tools. You can go to the Microsoft Web site and download the FileMon and RegMon tools (which let you monitor file system and Registry activity in real time), or you can download Process Monitor. The benefit of using real-time monitoring tools instead of snapshot tools is that not only do you see files and Registry keys that were created or modified, but also you get to see files and Registry keys that may have been searched for but were not located. Further, you get to see a timeline of activity, seeing the order in which the files or Registry keys were accessed. This can be an important part of your analysis of the malware.
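To make the before-and-after snapshot idea concrete, here is a minimal sketch that covers files only (not the Registry). It is not how any particular snapshot tool works internally; the function names are hypothetical, and hashing every file with SHA-256 is simply one reasonable way to detect modification.

```python
import hashlib
import os


def snapshot(root):
    """Map each file's path (relative to root) to the SHA-256 hash of its contents."""
    result = {}
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            full_path = os.path.join(dirpath, name)
            try:
                with open(full_path, "rb") as fh:
                    digest = hashlib.sha256(fh.read()).hexdigest()
            except OSError:
                continue  # locked or unreadable file; skip it
            result[os.path.relpath(full_path, root)] = digest
    return result


def diff_snapshots(before, after):
    """Report files added, deleted, or modified between two snapshots."""
    return {
        "added": sorted(set(after) - set(before)),
        "deleted": sorted(set(before) - set(after)),
        "modified": sorted(
            path for path in before.keys() & after.keys()
            if before[path] != after[path]
        ),
    }
```

A comparison like this shows only the end state. It says nothing about the order of events or about files that were searched for and not found, which is the gap real-time monitoring fills.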

Tip::

FileMon and RegMon are excellent monitoring tools available from Microsoft’s Sysinternals Web site (http://technet.microsoft.com/en-us/sysinternals/bb795535.aspx). Although each of these tools is still provided separately, both of them have had their functionality added to the Process Monitor tool, also available from the same site.

We will discuss some of the snapshot-based tools that are available in the next section.

Process

The process for setting up your testing platform for dynamic analysis of malware is pretty straightforward and simple, and the key is to actually have a process or a checklist. As with volatile data collection or forensic analysis, you don’t want to try to perform dynamic analysis from memory every time, as sometimes you’re going to be rushed or you’re simply going to forget an important step in the process. We’re all capable and guilty of this; I’ve had my share of analysis scenarios where I had to start all over because I forgot to enable one of my tools. I had to go back and completely clean and refresh the now-infected system, and then ensure that my tools were installed and that my system configuration was correct. I’m sure that I don’t have to describe how frustrating this can be.

The first thing you want to do is ensure that you’ve identified, downloaded, and installed all of the tools you’re going to need. I’ve addressed a good number of tools in this topic, but in the future, there may be other tools that you’ll be interested in using. Keep a list of the tools you’re using for dynamic analysis and keep it updated. Every now and then, share it with others, and add new tools, remove old ones, and so on.

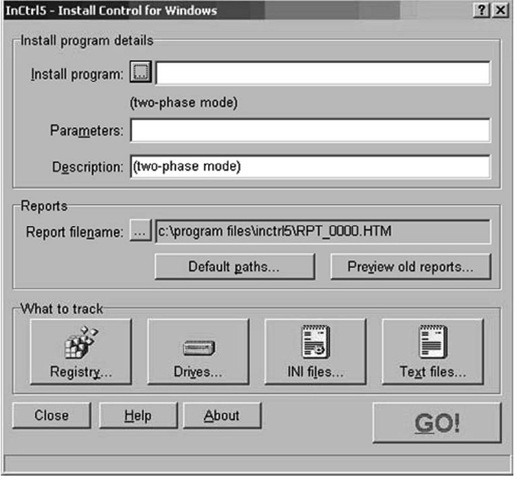

Once you have all of your tools in place, be sure that you understand how they are used, and ensure that you know and understand the necessary configuration options. Most of the tools will be started manually, and you need to have a checklist of the order in which you’re going to start your tools. For example, tools such as Regshot (http://sourceforge.net/projects/regshot/), illustrated in Figure 6.15, and InControl5, illustrated in Figure 6.16, take snapshots of the system for comparison, so you want to launch the first phase (collect the baseline snapshot) first, and then start the real-time monitoring tools.

Figure 6.15 Regshot GUI

Regshot saves its output in plain text or HTML format. When using snapshot and monitoring tools such as Regshot, you should keep in mind that most tools will be able to monitor changes only within their own user context or below. This means running the tools within an Administrator account will allow you to monitor changes made at that user context and below, but not changes made by SYSTEM-level accounts.

Figure 6.16 InControl5 GUI

InControl5 provides you with a nice report (HTML, spreadsheet, or text) of files and Registry keys that were added, modified, or deleted. InControl5 will also monitor specific files for changes, as well, although the list of files monitored is fairly limited. You can also select an install program, such as an MSI file, for InControl5 to monitor. However, I haven’t seen many Trojans or worms propagate as Microsoft installer files.

Once you’ve launched your malware and collected the data you need, you want to halt the real-time monitoring tools and then run the second phase of the snapshot tools for comparison. At this point, it’s your decision as to whether you want to save the logs from the real-time monitoring tools before or after you run the second phase of the snapshot tools. Your testing platform is for your use, and it’s not going to be used as evidence, so it’s your decision as to the order of these final steps. Personally, I save the data collected by the real-time monitoring tools first, and then complete the snapshot tools processes. I know I’m going to see the newly created files from the real-time monitoring tools in the output of the snapshot tools, and I know when and how those files were created. Therefore, I can easily separate that data from data generated by the malware.

To take things a step further, it’s a good idea to create a separate directory for all of your log files. This makes separating the data during analysis easier, as well as making it easier to collect the data off the system when you’ve completed the monitoring. In fact, you may even consider adding a USB removable storage device to the system and sending all of your log files to that device.

In short, the process looks something like this:

■ Ensure that all monitoring tools are updated/installed; refer to the tool list.

■ Ensure that all monitoring tools are configured properly.

■ Create a log storage location (local hard drive, USB removable storage, etc.).

■ Prepare the malware to be analyzed (copy the malware file to the analysis system, document the location within the file system).

■ Launch the baseline phase of the snapshot tools.

■ Enable the real-time monitoring tools.

■ Launch the malware (document the method of launch; scheduled task, double-click via shell, launch from command prompt, etc.).

■ Stop the real-time monitoring tools, and save their data to the specified location.

■ Launch the second phase of the snapshot tools; save their data to the specified location.
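One way to keep yourself honest about the order of these steps is to record each one as you complete it. The sketch below is only an illustration: the step wording paraphrases the list above, and the `Checklist` class is hypothetical. It refuses to record a step out of order and timestamps each completed step in UTC for your notes.

```python
from datetime import datetime, timezone

STEPS = [
    "Confirm monitoring tools are installed and up to date",
    "Confirm monitoring tools are configured properly",
    "Create the log storage location",
    "Copy the malware to the analysis system and document its location",
    "Launch the baseline phase of the snapshot tools",
    "Enable the real-time monitoring tools",
    "Launch the malware and document the method of launch",
    "Stop the real-time monitoring tools and save their data",
    "Launch the second phase of the snapshot tools and save their data",
]


class Checklist:
    """Track completion of ordered steps, with a timestamp and note for each."""

    def __init__(self, steps):
        self.steps = list(steps)
        self.completed = []  # list of (step, UTC timestamp, note)

    def next_step(self):
        """Return the next step to perform, or None when all are done."""
        index = len(self.completed)
        return self.steps[index] if index < len(self.steps) else None

    def complete(self, step, note=""):
        """Record a step as done; raise ValueError if it is not the next one."""
        expected = self.next_step()
        if step != expected:
            raise ValueError(f"out of order: expected {expected!r}, got {step!r}")
        stamp = datetime.now(timezone.utc).isoformat()
        self.completed.append((step, stamp, note))
```

The `note` argument is a natural place to record details such as the method of launch or the path where the logs were saved.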

I know this is pretty simple, but you’d be surprised how much important and useful data gets missed when a process like this isn’t followed. Hopefully, by starting from a general perspective, you have a process that you can follow, and from there you can drill down and provide the names of the tools you’re going to use. These tools may change over time. For example, for quite a while, RegMon and FileMon from Sysinternals.com were the tools of choice for monitoring Registry and file system accesses, respectively, by processes.

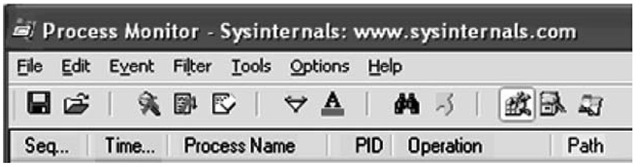

Figure 6.17 illustrates the Process Monitor toolbar.

Figure 6.17 The Process Monitor Toolbar, Showing the RegMon and FileMon Icons

If you’ve used RegMon or FileMon in the past, the Process Monitor toolbar illustrated in Figure 6.17 should seem familiar; most of the icons are the same ones and in the same order as the two legacy applications.

When using Process Monitor to capture Registry and file system access information, you need to be aware that all accesses are captured and that this can make for quite a bit of data to filter through. For example, click the magnifying glass with the red X through it and just sit and watch, without touching the keyboard or mouse. Events will immediately start appearing in the Process Monitor window, even though you haven’t done a thing! Obviously, quite a lot happens on a Windows system every second that you never see. When viewing information collected in Process Monitor, you can click an entry and choose Exclude | Process Name to filter out unnecessary processes, and remove extraneous data.
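The same kind of exclusion can be applied after the fact to a capture that has been saved as a CSV file. The following sketch assumes the export includes a "Process Name" column, as Process Monitor's default CSV output does in the versions I am aware of; check the header row of your own export. The function name `filter_events` is hypothetical.

```python
import csv
import io


def filter_events(csv_text, exclude_processes):
    """Return the rows of a CSV capture whose process is not in the exclusion list.

    Process names are compared without regard to case.
    """
    excluded = {name.lower() for name in exclude_processes}
    reader = csv.DictReader(io.StringIO(csv_text))
    return [row for row in reader if row["Process Name"].lower() not in excluded]
```

For a capture on disk, read the file into a string first (an exported file may begin with a byte order mark, so opening it with `encoding="utf-8-sig"` is a reasonable precaution) and pass in the names of the processes you have already ruled out.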

Tip::

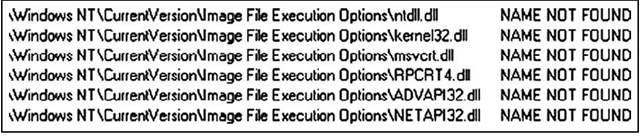

Remember the Image File Execution Options Registry key that we discussed in next topic? Process Monitor is great for showing how the Windows system accesses this key. As a test, open a command prompt and type the command net use, but do not press Enter. Open Process Monitor and begin capturing Registry access information. Go back to the command prompt and press Enter, and once you see the command complete, halt the Process Monitor capture by clicking the magnifying glass so that the red X appears. Figure 6.18 illustrates a portion of the information captured, showing how the net.exe process attempts to determine whether there are any Image File Execution Options for the listed DLLs.

Figure 6.18 Excerpt of Process Monitor Capture Showing Access to the Image File Execution Options Registry Key

You might consider using some other tools, as well. For example, the July 2007 edition of toolsmith (written by Russ McRee and available from http://holisticinfosec.org/toolsmith/docs/july2007.pdf), which was titled "Malware Analysis Software Tools," demonstrates SysAnalyzer from iDefense (http://labs.idefense.com/software/malcode.php). SysAnalyzer allows you to monitor the live system runtime state while executing malware during dynamic analysis. SysAnalyzer will monitor various aspects of the system while the malware is executing, so it goes without saying that the system will be infected; however, using a virtual system makes reverting back to a previous, pristine state extremely simple.

One final step to keep in mind is that you may want to dump the contents of physical memory (RAM) using one of the methods discussed in next topic. Not only will you have all of the data from dynamic analysis that will tell you what changes the malware made on the system, but in the case of obfuscated malware, you will also have the option of extracting the executable image from the RAM dump, giving you a view of what the malware really looks like, enhancing your analysis.

Tip::

This topic presented a great deal of very useful information to help you understand Windows PE files, including information about their structure. However, in summer 2008, Malware Forensics: Investigating and Analyzing Malicious Code was published, and the authors should be credited with producing perhaps the most useful and comprehensive guide on this subject available today, addressing both Windows and Linux malware from a number of perspectives. One of the many valuable aspects of that book is the number of freely available tools listed that can be used in a wide range of analysis scenarios.

Summary

In this topic, we looked at two methods you can use to gather information about executable files. By understanding the specific structures of an executable file, you know what to look for as well as what looks odd, particularly when specific actions have been taken to attempt to protect the file from analysis. The analysis methods we discussed in this topic allow you to determine what effects a piece of software (or malware) has on a system, as well as the artifacts it leaves behind that would indicate its presence. Sometimes this is useful to an investigator, as antivirus software may not detect it, or the antivirus vendor’s write-up and description do not provide sufficient detail. As a first responder, these artifacts will help you locate other systems within your network infrastructure that may have been compromised. As an investigator, these artifacts will provide you with a more comprehensive view of the infection, as well as what the malware did on the system. In the case of Trojan backdoors and remote access/control software, the artifacts will help you establish a timeline of activities on the system.

Each analysis technique presented has its benefits and drawbacks, and like any tool, each should be thoroughly justified and documented. Static analysis lets you see what kinds of things may be possible with the malware, and it will give you clues as to what you may expect when you perform dynamic analysis. However, static analysis often provides only a limited view into the malware. Dynamic analysis can also be called "behavioral" analysis: when you execute the malware in a controlled, monitored environment, you get to see what effects the malware has on the "victim" system, and in what order. However, dynamic analysis has to be used with great care, as you’re actually running the malware, and if you’re not careful you can end up infecting an entire infrastructure.

Even if you’re not going to actually perform any analysis of the malware, be sure to fully document it: where you found it within the file system, any other files that are associated with it, its cryptographic hashes, and so forth. Malware authors don’t always name their applications with something that stands out as bad, such as "syskiller.exe". Many times, the name of the malware is innocuous, or even intended to mislead the investigator, so fully documenting the malware will be extremely important.
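
As a rough illustration of that kind of documentation, the following Python sketch records where a file lives, its size, its last-modified time, and two cryptographic hashes. The function name document_file and the layout of the returned record are assumptions made for this example, not part of any particular tool. The file is only read, never executed.

```python
import hashlib
import os
from datetime import datetime, timezone


def document_file(path, chunk_size=65536):
    """Return a small record describing a suspicious file.

    The record layout is hypothetical; adapt it to your own case notes.
    """
    md5 = hashlib.md5()
    sha256 = hashlib.sha256()
    # Read in chunks so that large files do not have to fit in memory.
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            md5.update(chunk)
            sha256.update(chunk)
    info = os.stat(path)
    modified = datetime.fromtimestamp(info.st_mtime, tz=timezone.utc)
    return {
        "path": os.path.abspath(path),
        "size": info.st_size,
        "modified_utc": modified.isoformat(),
        "md5": md5.hexdigest(),
        "sha256": sha256.hexdigest(),
    }
```

Recording more than one hash is a convenience: different reference sets and vendor write-ups may list different algorithms, and having both on hand makes later comparison easier.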

Solutions Fast Track

Static Analysis

- Documenting any suspicious application or file you find during an investigation is the first step in determining what it does to a system and its purpose.

- The contents of a suspicious executable may be incomprehensible to most folks, but if you understand the structures used to create executable files, you will begin to see how the binary information within the file can be used during an investigation.

- Do not rely on filenames alone when investigating a suspicious file. Even seasoned malware analysts have been known to fall prey to an intruder who takes even a few minutes to attempt to "hide" his malware by giving it an innocuous name.

Dynamic Analysis

- A dynamic analysis process will let you see what effects malware has on a system.

- Using a combination of snapshot-based and real-time monitoring tools will show you not only the artifacts left by a malware infection, but also the order (based on time) in which they occur.

- When performing dynamic analysis, it is a good idea to use monitoring tools that do not reside on the testing platform so that information can be collected in a manner unaffected by the malware.

- Once dynamic malware analysis has been completed, the testing platform can be subject to incident response as well as postmortem computer forensic analysis. This not only allows an analyst to hone her skills, but it will also provide additional verification of malware artifacts.
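
The snapshot-based half of that approach can be sketched in a few lines of Python. This is a minimal illustration, assuming each snapshot has already been reduced to a dictionary that maps a path (or Registry key) to a hash of its contents. The function name diff_snapshots and the sample paths are hypothetical.

```python
def diff_snapshots(before, after):
    """Compare two {path: hash} snapshots taken before and after execution."""
    before_paths = set(before)
    after_paths = set(after)
    return {
        # Present only in the second snapshot: created during the test run.
        "added": sorted(after_paths - before_paths),
        # Present only in the first snapshot: deleted during the test run.
        "removed": sorted(before_paths - after_paths),
        # Present in both, but the recorded hash differs: modified.
        "changed": sorted(
            path for path in before_paths & after_paths
            if before[path] != after[path]
        ),
    }


# Hypothetical snapshots, for illustration only.
baseline = {"C:/Windows/a.dll": "h1", "C:/Temp/b.txt": "h2"}
post_run = {"C:/Windows/a.dll": "h9", "C:/Temp/dropper.exe": "h3"}
changes = diff_snapshots(baseline, post_run)
```

A comparison like this shows which artifacts appeared, disappeared, or changed, but it says nothing about the order in which that happened. That is why the snapshot tools are paired with real-time monitoring.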

Frequently Asked Questions

Q: When performing incident response, I found that a file called svchost.exe was responsible for several connections on the system. Is this system infected with malware?

A: Well, the question isn’t really whether the system is infected, but rather whether svchost.exe is a malicious piece of software. Reasoning through this, the first question I would ask is, what did you do to view the network connections? Specifically, what is the status of the connections? Are they listening, awaiting connections, or have the connections been established to other systems? Second, what ports are involved in the network connections? Are they normally seen in association with svchost.exe? Finally, where within the file system did you find the file? The svchost.exe file is normally found in the system32 directory, and is protected by Windows File Protection (WFP), which runs automatically in the background. If there are no indications that WFP has been compromised, have you computed a cryptographic hash for svchost.exe and compared it to a known-good exemplar? Many times during incident response, a lack of familiarity with the operating system leads the responder down the wrong road to the wrong conclusions.

Q: I found a file during an investigation, and when I open it in a hex editor, I can clearly see the "MZ" signature and the PE header. However, I don’t see the usual section names, such as ".text", ".idata", and ".rsrc". Why is that?

A: PE file section header names are not used by the PE file itself for anything in particular, and can be modified without affecting the rest of the PE file. Although "normal" PE files and some compression tools have signatures of "normal" section header names, these can be easily changed. Section header names act as one small piece of information that you can use to build a "picture" of the file.
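
To see the section header names for yourself, the following Python sketch walks from the "MZ" signature to the PE header and reads the name field of each section header. It is a minimal illustration that uses only the standard library and does no validation beyond the two signatures. The function name section_names is an assumption made for this example.

```python
import struct


def section_names(data):
    """Return the section header names found in the bytes of a PE file."""
    if data[:2] != b"MZ":
        raise ValueError("missing MZ signature")
    # The 4-byte field at offset 0x3C holds the offset of the PE signature.
    (pe_offset,) = struct.unpack_from("<I", data, 0x3C)
    if data[pe_offset:pe_offset + 4] != b"PE\x00\x00":
        raise ValueError("missing PE signature")
    # The 20-byte file header follows the 4-byte PE signature.
    (section_count,) = struct.unpack_from("<H", data, pe_offset + 6)
    (optional_size,) = struct.unpack_from("<H", data, pe_offset + 20)
    # The section table starts right after the optional header.
    table = pe_offset + 24 + optional_size
    names = []
    for index in range(section_count):
        start = table + index * 40  # each section header is 40 bytes
        raw = data[start:start + 8]  # the name is the first 8 bytes
        names.append(raw.rstrip(b"\x00").decode("ascii", errors="replace"))
    return names
```

Calling section_names on the bytes read from a file (opened in binary mode) returns a list of strings. Anything other than the usual names is one more data point for your "picture" of the file, not a verdict on its own.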

Q: I’ve completed both static and dynamic analysis of a suspicious executable file, and I have a pretty good idea of what it does and what artifacts it leaves on a system. Is there any way I can verify this?

A: Once you’ve completed your own analysis, it may be a good idea to use an available antivirus software package to scan the malware. In most cases, an investigator will do this first, but this does not always guarantee a result. Many an incident responder has shown up on-scene to find a worm clearly running amok on a network, even though there are up-to-date antivirus utilities on all affected systems. If you do not get any results from the available utilities, try uploading the file to a site such as www.virustotal.com, which will scan the file with more than two dozen antivirus engines and return a result. If your results are still limited, submit the file for analysis, including all of your documentation, to your antivirus vendor.

Q: I am interested in reading more about executable file and malware analysis. Can you recommend any resources?

A: The best possible resource at this time is Malware Forensics: Investigating and Analyzing Malicious Code, written by James Aquilina, Eoghan Casey, and Cameron Malin. This book covers analysis of malicious binaries on both Windows and Linux systems, and is perhaps the most complete and comprehensive book available on the subject to date. Depending on the amount of time you have to invest in going further with this subject, a number of additional resources are available on "reverse engineering" executable code. Many of the techniques discussed pertain equally well to malware analysis. Some such sites include REblog (http://malwareanalysis.com/communityserver/blogs/geffner/default.aspx) and OpenRCE (www.openrce.org/articles/).