Abstract

We report on work towards an architecture that incorporates accessible design methods, guidelines and support tools for building and testing adaptive and accessible user interfaces (UIs) for users with mild impairments. We especially address interaction constraints for elderly people, both at application design time and at runtime, targeting hybrid TV platforms. The functional principle of our architecture is twofold: At runtime, it lets users interact with hybrid TV applications through an ensemble of accessible UIs that cover different input and output modalities and are jointly adapted via user profiles and real-time feedback. At design time, it allows developers to re-use UIs and representative user personas to simulate the effect of the UI modalities on different impairments.

Keywords: Inclusive Design, Accessibility Guidelines, Development Methods, Access to the Web, Hybrid TV, Connected TV, Interaction Techniques, Usability, and User Experience, Virtual User, User Simulation, Context-awareness, Architectures and Tools for Universal Access, Adaptive and augmented Interaction.

Introduction

In this paper we address key research questions on accessible user interface and application design as well as multimodal interaction for elderly people through a hybrid TV platform in the living room. Aging and accessibility are highly correlated topics. It is hardly possible to refer to one without thinking of the other. According to [1], aging is a demographic trend in the age distribution of a population towards older ages. It is also known that approximately 50% of elderly people (persons aged 65 years or older) have some kind of (typically mild) disability, such as a visual, cognitive or motor impairment. This poses several problems and challenges for their social interaction (cf. [2], [3]) and their interaction with information technology (cf. [4]).

While extensive research on web accessibility [5], [6] has yielded significant results (such as the development of accessibility APIs, tools, recommendations and guidelines for delivering broadband services on the web), little research has so far addressed accessibility problems in other environments such as digital broadcast and hybrid TV platforms. Considerable research effort is therefore still required to bridge the accessibility gap between broadband and broadcast service platforms. In real life, this accessibility gap has far-reaching consequences for the aging population (elderly people), who spend more time in their homes and are used to the TV in the living room, rather than the desktop computer, as an interaction device. According to recent reports [7], this segment of the world’s population is increasing steadily at an estimated rate of 870,000 per month.

It is also worth noting, however, that information and communication technology (ICT) services are currently experiencing a paradigm shift. Novel standards and platforms, such as the recently released hybrid TV standard HbbTV [8] or the MeeGo and GoogleTV platforms, will become commonplace in the homes of the audience. Hybrid TV platforms unify broadcast services and broadband applications (delivered through managed IP solutions or via access to the web).

This innovation has two main advantages: (1) developers of ICT applications (such as video-conferencing, e-learning or home automation) will soon be able to deploy their applications as services on the hybrid TV infrastructure; (2) knowledge gained through research on web accessibility can become the basis for understanding and addressing accessibility challenges on digital TV and hybrid TV platforms.

We view these current developments as motivation to craft engineering tools and frameworks that can, on the one hand, assist ICT application and user interface (UI) developers in adopting inclusive design principles in their implementations and, on the other hand, foster support for accessible features in mainstream products within the industry. Currently, a significant percentage of the potential beneficiaries of new ICT applications are left out, because accessible design patterns are not yet an integral part of the development cycle of mainstream products and services.

Overview of Challenge: Develop for the Elderly

In practice, inclusive design is often an expensive process. It requires specific knowledge of the target user group and, related to this, may require specific UI designs to be implemented and tested with these users. In the case of UIs for elderly people, a spectrum of common impairments needs to be considered.

Design of inclusive ICT applications thus poses some obstacles to application developers: (1) There is often a significant knowledge gap with regard to the special needs of this particular group of users. (2) The development cost of specific UI components needed for personalized interaction and adapted to the user’s impairments may be too high. Although a number of accessibility APIs [10], [11], [12], [13] are available for various platforms and allow developers to provide accessibility features within their applications, none of them currently provides features for the automatic adaptation of multimodal interfaces to fit the individual requirements of users with different kinds of impairments. (3) Performing user trials to validate designs requires substantial effort and is often expensive and time consuming. Furthermore, it is hard to recruit a large number of trial participants who are representative of this target group of users.

Another challenge in developing for the elderly is the design of runtime frameworks that support multiple user interfaces for accessible applications, like i2home [14], TSeniority [15] and PERSONA [16], and that also provide design time support tools. Such tools should allow developers to perceive, at design time, the effects of a visual, cognitive or motor impairment on a user’s experience of application content at runtime, and to perform the necessary adaptation of the user interface at design time.

Our Approach

Our goal is to reduce these obstacles for developers of ICT applications, especially those targeting hybrid TV platforms such as the emerging connected TV standard HbbTV [8]. According to [9], hybrid or connected TV is expected to create a huge market in Europe by 2015. For this purpose, we are developing an architecture that is tailored to meet the requirements of accessible UI design and that supports developers even without major expertise in inclusive design. From previous research work, especially in the area of ambient assisted living (cf. [14], [15] and [16]), we know that runtime platforms have succeeded in supporting a multitude of user interfaces in a multimodal interaction setting with accessible applications. Thus, adding development support to such runtime platforms (architectures and tools for universal access) seems a worthwhile approach. Collecting detailed requirements from the different application stakeholders, including end users, developers and platform providers, resulted in a set of features for our architecture and proposed development method.

Requirements

At user level, the major design criterion is inclusive access to the ICT application. To cope with the scope of impairments in the target group, we have identified a need for multiple UI modalities and adaptation parameters to be provided as standard UI components, including adaptable speech I/O, gesture and haptic interfaces as well as adaptive visual rendering [17]. In addition, we identify significant accessibility challenges posed by rich internet applications and advanced multimedia applications developed using Ajax and related web technologies for broadband services. According to [6], while applications using these technologies provide rich multimedia and dynamic content, this content cannot be perceived by people with some visual or cognitive impairments or used by people with some motor impairments. Considering the substantial research efforts and achievements in addressing problems of web accessibility [5], the idea is to explore the extent to which concepts of web accessibility can be applied to hybrid TV platforms in a digital TV environment, and to identify accessibility gaps between deploying broadband services in an internet-based infrastructure and broadcast services in a digital TV environment. Further, we identify the requirement that inclusive design for the broad spectrum of user capabilities in the target group is best supported by actually adapting parameters across modalities, making use of relevant recent research results in inclusive multimodal interaction [18], [19].

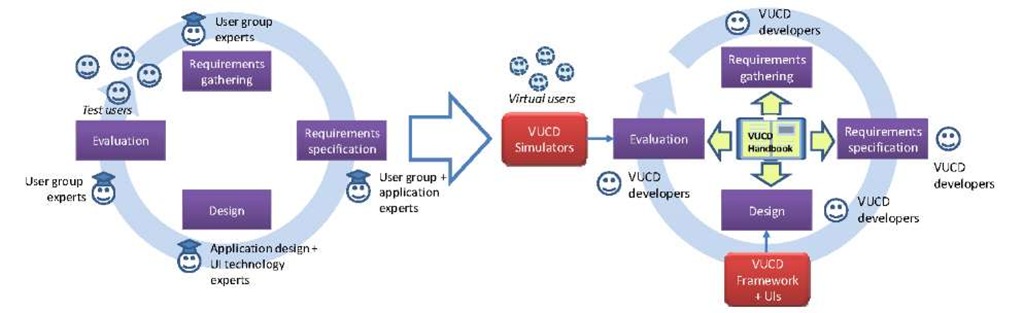

At developer level, the foremost requirement is to streamline the inclusive development process using the envisioned runtime framework with design time support tools. Taking into account a user-centered cyclical development process as depicted in Fig. 1, the idea is to bypass the major obstacles noted above: (1) the lack of knowledge about elderly users and their specific requirements is addressed by a knowledge repository (accessibility design guidelines or a developer handbook) that supports developers throughout the UCD cycle, incorporating guidelines such as [20] together with target-group specific knowledge in the context of hybrid TV, which we elicit during user studies; (2) the development cost is addressed by providing re-usable, adaptable UI components, whose benefit is clear; and (3) simplifying the testing of these UIs within application contexts has been identified as an equally important issue. We have therefore based our design on virtual users [21] and simulation components [22] as central facilitators, leading to a "virtual user centered design" (VUCD) cycle as shown in Fig. 1. In this way, a large portion of the tests with real end users may be substituted with virtual user simulations at design time.

Fig. 1. Traditional user-centered design cycle vs. virtual-user centered design cycle

At the level of set-top box manufacturers and connected TV (hybrid TV) platform providers, a major criterion is to incorporate the envisioned runtime UI framework, which supports accessibility design patterns, into the underlying software framework of their middleware stack. In this way, accessible design can become an integral part of mainstream products and thus ultimately break down product market boundaries and accessibility barriers. A further criterion is the open software framework requirement for digital TV and other connected TV platforms as identified in [23]. Such a framework should at least support seamless connectivity of the accessible input and assistive technologies that are required to manage user interaction, and should also allow for service interoperability.

Development Strategy

Based on the conditions and requirements presented in our approach, we designed a high-level architecture, shown with all components and their roles in Fig. 2. A runtime framework supports ICT applications by providing interfaces for seamless communication between these applications and adaptable UI components through an abstract UI representation standard [24], [25]. The UI components and modal transformations themselves may be reused by developers. For each UI modality, a simulator component that is part of a design time toolbox will be provided to evaluate user-application interaction for given impairment profiles [22]. The user model underlying these profiles is derived from the results of quantitative user trials. During the trials, the interaction behavior of a group of elderly users was monitored to identify requirements and variables [26] for multimodal adaptation under impairment conditions, and to provide important inputs to the design knowledge base as well as training data for deployment at runtime.
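The role of the abstract UI representation in this architecture can be illustrated with a minimal sketch. It is not the representation standard of [24], [25] nor the interface of any existing framework: the names `AbstractPrompt`, `RuntimeFramework`, `render_visual` and `render_speech` are hypothetical and chosen only for illustration. The sketch assumes that an application describes an interaction step once, in a modality-independent form, and that the runtime framework hands it to whichever UI components are registered.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple


@dataclass(frozen=True)
class AbstractPrompt:
    """Modality-independent description of one interaction step (hypothetical)."""
    text: str
    options: Tuple[str, ...]


# A UI component turns an abstract prompt into modality-specific output.
Renderer = Callable[[AbstractPrompt], str]


def render_visual(prompt: AbstractPrompt) -> str:
    # Numbered on-screen menu for a graphical UI component.
    items = " ".join(f"[{i}] {opt}" for i, opt in enumerate(prompt.options, start=1))
    return f"SCREEN: {prompt.text} {items}"


def render_speech(prompt: AbstractPrompt) -> str:
    # Spoken form of the same prompt for a speech output component.
    return f"SPEAK: {prompt.text} Say one of: {', '.join(prompt.options)}."


@dataclass
class RuntimeFramework:
    """Keeps a registry of UI components keyed by modality name (illustrative)."""
    components: Dict[str, Renderer] = field(default_factory=dict)

    def register(self, modality: str, renderer: Renderer) -> None:
        self.components[modality] = renderer

    def present(self, prompt: AbstractPrompt, modalities: List[str]) -> List[str]:
        # Only modalities with a registered component produce output.
        return [self.components[m](prompt) for m in modalities if m in self.components]


framework = RuntimeFramework()
framework.register("visual", render_visual)
framework.register("speech", render_speech)

prompt = AbstractPrompt("Start video call?", ("yes", "no"))
outputs = framework.present(prompt, ["visual", "speech", "haptic"])
for line in outputs:
    print(line)
```

Because the application only produces the abstract prompt, a UI component can be added or replaced in this sketch without touching application logic, which is the property that makes the components reusable across applications.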

Fig. 2. An architecture for multimodal adaptive design and run-time support

It is worth noting that the research challenge in this paper is not the creation of a multimodal software framework that enables an ensemble of UI components (speech input and output, remote controls, visual gestures, multi-touch control, avatars, haptic feedback, video and audio rendering as well as graphical user interfaces) to interact seamlessly with application content in a multimodal setting. This has been widely explored and addressed in previous work (cf. runtime frameworks like [14], [15] and [16]). The challenge is rather to develop methods and tools that enable existing multimodal UI runtime frameworks to address fundamental questions of accessible and inclusive design. Specifically, this paper builds on a promising UI runtime framework [16], which provides the underlying software framework for seamless connectivity of accessible user interface technologies and service interoperability between ICT applications. We focus on adding engineering tools for runtime multimodal UI adaptation and for user simulation [22] at design time, which allows developers to perceive the effects of a visual, cognitive or motor impairment on a user’s experience of application content at runtime. Having this information at design time is crucial to ensuring an inclusive design practice during the development phase of mainstream products. In addition, we claim that our approach will greatly simplify the UCD process for developers with little expertise in this area.

In the remaining sections of this topic, we present the concepts of our development methodology for realizing design time support for developers through a user simulation tool, as well as multimodal adaptation at runtime. In topic 5 we present related work, which also reintroduces the baseline runtime UI framework on which this paper is based and explores the criteria for choosing it for development on the target hybrid TV platforms. In topic 6, we look at initial evaluation results from developer focus group sessions organized to assess the concepts of our proposed accessible UI design development framework and support tools. Topic 7 presents a summary, conclusions and an outlook on future work.

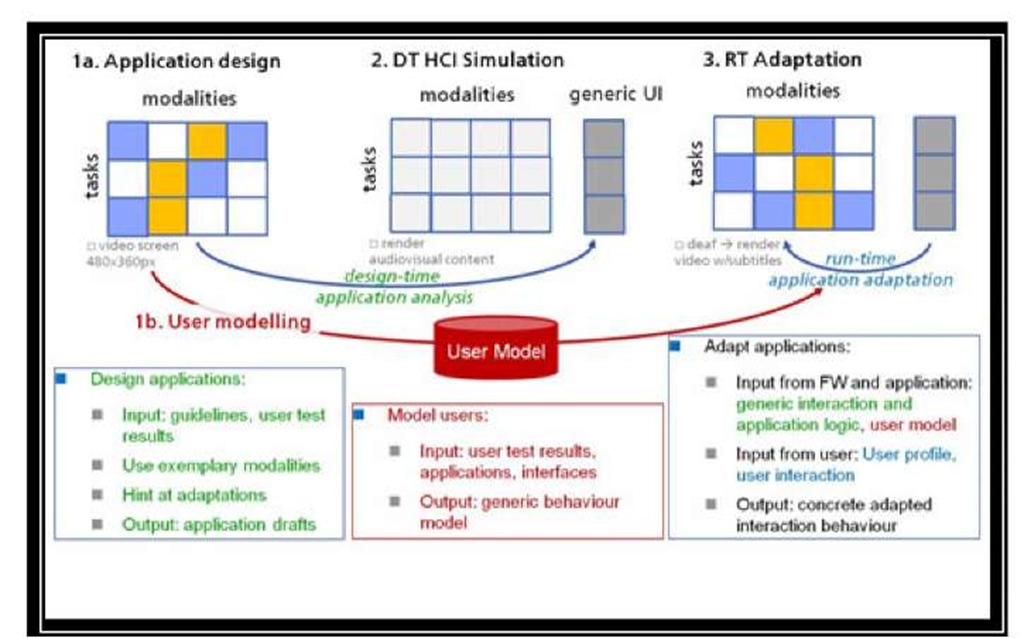

Our approach consists of three main steps for ensuring accessible design practice in ICT application development at design time (DT) and multimodal adaptation at runtime (RT). Fig. 3 shows a schematic representation of these three steps, through which we aim to overcome the challenges discussed in topic 2 that developers of accessible ICT applications face: a knowledge gap, the cost of user trials and the development effort of accessible UIs. In this process the driving "facilitators" are a user model and a simulation engine. In section 4.1 we explain in detail how our development process achieves a simplified UCD approach for the developer of an accessible ICT application, while in section 4.2 we present methods for realizing runtime multimodal adaptation.

Fig. 3. A development process for design time support and runtime adaption

UCD, with the Expensive Steps Simplified

Our strategy for ensuring a simplified UCD approach for ICT application developers is based on the idea of adopting a "virtual" user centered design methodology. This is achieved by adding extensions to an existing runtime UI framework such as [16] that allow for the integration of design time support tools such as a virtual user simulation tool (comprising a simulation engine and user personas). The simulation realizes different algorithms [22] that predict the performance of accessible UI components. These UIs render a graphical representation of the visual, motor and cognitive abilities of virtual users (personas). The personas are derived in a pre-design phase of user modeling, in which data acquired in comprehensive user trials is quantitatively analyzed to elicit parameters for classifying and categorizing users' body structural and functional impairments. As shown in Fig. 3, the result of this pre-design phase is a user model, which characterizes the generic behavior of a set of user personas and drives both the application design process and its runtime adaptation.

At design time, the ICT developer defines the application logic by specifying a set of interaction tasks that the application can accomplish in some known modalities. Because of the knowledge gap, this initially does not take into consideration the special accessibility needs of users with impairments. However, based on our development approach, the application developer can in a second step load profiles of user personas from our generic user model that represent real-world users with some visual, cognitive or motor impairment. In addition, our runtime UI framework with extensions for design time support tools comes with a set of generic UI components, with which the application developer can run simulations to test their performance against the visual, cognitive and motor capabilities of different personas. This process occurs as follows:

1. After an initial step at application design time, in which content to be viewed by an end user performing an interaction task is prepared, the developer can query the user model for the profile of a user persona with, for example, a given level of visual impairment.

2. Using this profile information, the runtime UI framework selects the set of UI components that can render application content in the modalities of that user persona.

3. The runtime UI framework then uses its simulation tool extension to predict the performance of the selected UI components on application content for that user persona’s profile.

4. Finally, the application developer is able to decide whether some form of adaptation is needed at the level of content preparation in order to accommodate the special accessibility needs of users with such impairments.

It is worth noting that this simulation process occurs in a closed loop in which the developer can set breakpoints, analogous to operating in debug mode.
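The four steps above, together with the closed loop, can be sketched in a few lines of code. This is a minimal illustration under stated assumptions, not the simulation algorithms of [22]: the persona abilities, the `demand` value attached to each UI component, the scoring formula in `predict_performance` and the acceptance threshold of 0.8 are all hypothetical placeholders.

```python
from dataclasses import dataclass, replace
from typing import Dict, List, Tuple


@dataclass(frozen=True)
class Persona:
    name: str
    # Ability scores in [0, 1]; 1.0 means no impairment (illustrative scale).
    abilities: Dict[str, float]


@dataclass(frozen=True)
class UIComponent:
    name: str
    ability: str   # ability the component's modality relies on
    demand: float  # demand the rendered content places on that ability


# Hypothetical excerpt of a generic user model.
USER_MODEL = {
    "mild_visual": Persona("mild_visual", {"vision": 0.5, "hearing": 0.9, "motor": 0.8}),
    "severe_visual": Persona("severe_visual", {"vision": 0.0, "hearing": 0.9, "motor": 0.8}),
}


def query_persona(key: str) -> Persona:
    # Step 1: the developer queries the user model for a persona profile.
    return USER_MODEL[key]


def select_components(persona: Persona, components: List[UIComponent]) -> List[UIComponent]:
    # Step 2: keep components whose modality the persona can use at all.
    return [c for c in components if persona.abilities.get(c.ability, 0.0) > 0.0]


def predict_performance(persona: Persona, component: UIComponent) -> float:
    # Step 3: toy predictor; the score drops by the amount the demand exceeds the ability.
    shortfall = max(0.0, component.demand - persona.abilities[component.ability])
    return round(1.0 - shortfall, 2)


def simulate(persona_key: str, components: List[UIComponent],
             threshold: float = 0.8) -> Tuple[Dict[str, float], List[str]]:
    persona = query_persona(persona_key)
    selected = select_components(persona, components)
    report = {c.name: predict_performance(persona, c) for c in selected}
    # Step 4: components below the threshold are flagged for the developer.
    flagged = [name for name, score in report.items() if score < threshold]
    return report, flagged


components = [
    UIComponent("gui_small_text", "vision", 0.8),
    UIComponent("speech_output", "hearing", 0.4),
]
report, flagged = simulate("mild_visual", components)
print(report, flagged)

# Closed loop: the developer adapts the flagged content (e.g. larger text) and re-runs.
adapted = [replace(c, demand=0.5) if c.name in flagged else c for c in components]
report_after, flagged_after = simulate("mild_visual", adapted)
print(report_after, flagged_after)
```

In this sketch the first run flags the small-text GUI for the mildly visually impaired persona, and the second run, after the content has been adapted, no longer does. A real simulation engine would replace the toy predictor while keeping the same query, select, predict and decide cycle.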

By using the proposed "virtual user centered design" approach, we ensure that developers of ICT applications are able to overcome the challenges discussed in topic 2. Specifically, this approach enables developers to bypass time consuming and costly end user tests and bridges the knowledge gap regarding the accessibility needs of elderly people or users with impairments.

Realizing Runtime Multimodal Adaptation

In the previous section we showed that a virtual user centered design approach can make ICT application developers aware of the special accessibility needs of elderly people. To address these needs in a real-life setting, where the elderly user is interacting with an application running on a set-top box or hybrid TV platform in the living room, our proposed development method and framework must provide mechanisms for runtime multimodal adaptation.

We make one underlying assumption: our baseline runtime UI framework provides generic interfaces for seamless connectivity and integration of multiple UI components and ICT applications on a set-top box or hybrid TV platform.

The transition from design time performance tests of UIs rendering application content to runtime multimodal adaptation occurs through (1) the replacement of virtual user profiles (personas) with the profiles of real-world elderly users, (2) additional adaptation measures taken by the developer on application content as a result of knowledge gained through user simulations at design time and (3) support by the runtime framework for generic interactions and application logic, both of which are driven by the same user model employed at design time. Following these three points, a user profile of the elderly user is instantiated from the generic user model at runtime with initial parameters for adaptation. In addition, the application provides semantic annotations, using accessibility standard guidelines such as [6], that describe the adaptation measures identified as necessary during user simulations at design time. Finally, the runtime UI framework must provide mechanisms for integrating the different levels of adaptation in a coherent way during user interaction with an ICT application.
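How these levels of adaptation might be integrated coherently can be sketched as follows. The sketch is an assumption-laden illustration rather than the mechanism of the runtime framework: the parameter set `AdaptationParams`, the annotation keys `min_font_scale` and `require_audio_prompts`, the error-count rule in `apply_feedback` and all numeric values are hypothetical. It assumes that initial parameters come from the generic user model, that application annotations can only raise them to a required minimum, and that runtime feedback adjusts them last.

```python
from dataclasses import dataclass, replace
from typing import Dict, Union


@dataclass(frozen=True)
class AdaptationParams:
    font_scale: float = 1.0
    speech_rate: float = 1.0
    audio_prompts: bool = False


# Level 1: initial parameters per impairment class from a generic user model (hypothetical values).
GENERIC_USER_MODEL = {
    "mild_visual": AdaptationParams(font_scale=1.5, audio_prompts=True),
    "mild_hearing": AdaptationParams(speech_rate=0.8),
}


def instantiate_profile(impairment_class: str) -> AdaptationParams:
    # Unknown classes fall back to unadapted defaults.
    return GENERIC_USER_MODEL.get(impairment_class, AdaptationParams())


def apply_annotations(params: AdaptationParams,
                      annotations: Dict[str, Union[float, bool]]) -> AdaptationParams:
    # Level 2: annotations state minimum requirements found during design time simulation.
    return replace(
        params,
        font_scale=max(params.font_scale, float(annotations.get("min_font_scale", 1.0))),
        audio_prompts=params.audio_prompts or bool(annotations.get("require_audio_prompts", False)),
    )


def apply_feedback(params: AdaptationParams, selection_errors: int) -> AdaptationParams:
    # Level 3: after repeated selection errors, enlarge text in small steps up to a cap.
    if selection_errors >= 3:
        return replace(params, font_scale=min(params.font_scale + 0.25, 3.0))
    return params


profile = instantiate_profile("mild_visual")
annotated = apply_annotations(profile, {"min_font_scale": 1.75})
final = apply_feedback(annotated, selection_errors=3)
print(final)
```

Applying the levels in a fixed order, with each level only tightening or refining the previous result, is one simple way to keep the combined adaptation predictable. Other integration strategies are equally conceivable.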

Related Work

Our development method and the concepts towards a virtual-user centered design framework presented in this paper follow an important research principle: re-use and extend the state of the art with new features rather than re-invent it. In particular, this work takes into consideration promising state-of-the-art runtime multimodal interaction UI frameworks, such as the one presented in [16], which was developed in the context of a reference architecture for Ambient Assisted Living (AAL) environments. The PERSONA UI framework, as presented in [16], is an open distributed runtime framework designed for adaptive user interaction in AAL environments. It provides the groundwork for adding engineering tools that extend the capabilities of such a runtime framework to accommodate design time development support and promote concepts of accessible UI design. Other UI frameworks, such as those in [14], [15], provide the basic functions of a runtime multimodal UI framework, but are still based on device-based interoperability, where requests are tightly coupled to syntactic bindings, as with service interfaces in web services. This makes it difficult to realize runtime multimodal adaptation.

Initial Evaluation Results of Proposed Development Method

To verify the concepts presented in this paper on accessible UI design and a "virtual user centered" development approach, we carried out two empirical investigations based on qualitative studies: (1) an online survey and (2) developer focus group sessions.

The goal of the online survey was to gain feedback on current development practice in the industry, especially among set-top box and hybrid TV platform providers, as well as on accessibility demands for future accessible TV platforms. The online survey received 79 responses from 16 countries and 30 companies or institutions.

Results from the survey clearly show that the concept of virtual user centered design is new. Only 10 of the 79 respondents reported having used simulation tools that realize such a virtual user centered approach. These respondents thought that the simulation process must accompany the various design and validation cycles, and considered graphical user interfaces and input devices most important for simulation. They also observed that user testing is very time consuming and that using those tools would save much time during application development. Nevertheless, attention must be paid to the reliability of such simulation tools.

Regarding questions on runtime multimodal adaptation and the integration of accessibility features into ICT applications, the following results were obtained:

• There is a significant difference between the user interface technology developers (61.1% preferring automatic runtime multimodal adaptation) and the STB / connected TV manufacturers (83.3% preferring manual adaptation).

• An accessibility API managed by a user interface mark-up language is the preferred option (rating 41%). This ratio is even higher among the STB / connected TV manufacturers (66.7%) and the developers of software frameworks, middleware or tools (58.8%).

The focus group sessions offered the opportunity to discuss in more depth some points that needed clarification and to complete the analysis of the results.

Conclusions and Future Work

In this paper we propose a development method for accessible UI design and multimodal interaction through a set-top box or hybrid TV platform. We identify common challenges facing ICT application developers in designing accessible UIs and applications. We propose a solution to this problem based on a virtual user centered design methodology, which allows developers to bypass time consuming and costly end user tests and bridges the knowledge gap regarding the accessibility needs of elderly people or users with impairments. Our approach is based on adding extensions to an existing runtime UI framework such as [16] that allow for the integration of design time support tools such as a virtual user simulation tool. Finally, we present a detailed development strategy that simplifies the UCD process and ensures runtime multimodal adaptation. Initial evaluation results for this development method and architecture show that the concept of virtual user centered design is new and not well known in the industry, but respondents in a survey observed that user testing is very time consuming and that using our approach would save much time during accessible UI and application development. Nevertheless, attention must be paid to the reliability of such a simulation tool. With regard to future work, we expect to realize a first reference implementation of this approach and to report on the results in a subsequent publication.