The iPhone and iPad use a multitouch-capable capacitive touchscreen. Users operate the device by tapping it with a finger. But a finger isn’t a mouse: it is generally larger and less precise than a traditional pointing device. This rules out certain traditional UI elements that depend on precise selection. For example, the iPhone and iPad don’t have draggable scrollbars. Grabbing a scrollbar with a fat finger would either be an exercise in frustration or require a huge scrollbar that would eat up much of the iPhone’s precious screen real estate. Apple solved this problem by letting users touch anywhere on the screen and then flick in a direction to scroll.
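To make the flick idea concrete, here is a minimal sketch of how a program might decide whether a finger movement was fast enough to count as a flick, and in which direction. The `TouchSample` type, the `FlickDirection` enum, and the 300-points-per-second threshold are all hypothetical assumptions for illustration; real UIKit code would read touch positions from `UITouch` objects, and standard scroll views already do this work for you.

```swift
// A hypothetical sample of a single finger's position over time.
// Real UIKit code would read positions from UITouch objects instead.
struct TouchSample {
    let y: Double    // vertical position, in points
    let time: Double // timestamp, in seconds
}

enum FlickDirection {
    case tooSlow // movement too slow to count as a flick
    case up      // finger moved toward the top of the screen
    case down    // finger moved toward the bottom of the screen
}

// Assumed threshold in points per second; not a value documented by Apple.
let flickVelocityThreshold = 300.0

// Classifies the motion between the first and last samples of a touch.
func flickDirection(from start: TouchSample, to end: TouchSample) -> FlickDirection {
    let elapsed = end.time - start.time
    guard elapsed > 0 else { return .tooSlow }
    // Screen y grows downward, so a negative velocity means an upward flick.
    let velocity = (end.y - start.y) / elapsed
    guard abs(velocity) >= flickVelocityThreshold else { return .tooSlow }
    return velocity < 0 ? .up : .down
}

let fast = flickDirection(from: TouchSample(y: 400, time: 0.0),
                          to: TouchSample(y: 100, time: 0.2))
print(fast) // prints "up"
```

Using velocity rather than raw distance is what separates a deliberate flick from a slow drag that happens to cover the same ground.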

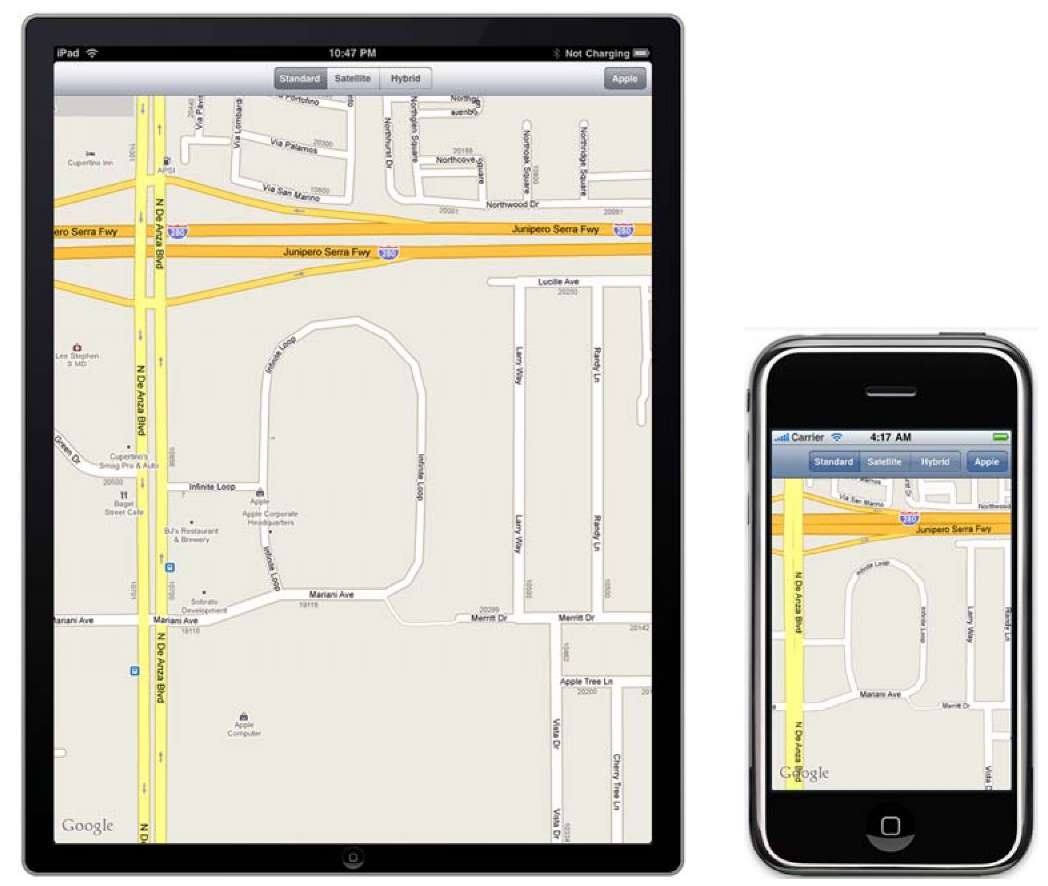

Figure 1.1 The iPad and iPhone side by side. The primary difference between the two—the available screen real estate—is readily apparent.

The touchscreen has another notable property: a finger isn’t necessarily singular. Because the iPhone and iPad touchscreens are multitouch, users can manipulate the device with multifinger gestures. Pinch-zooming is one example: to zoom in on a page, you place two fingers on it and spread them apart; to zoom out, you pinch them together.
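Pinch-zooming boils down to comparing how far apart the two fingers are now with how far apart they were when the gesture began. The sketch below computes that ratio. The `Point` type and the `pinchScale` function are hypothetical; UIKit’s `UIPinchGestureRecognizer` exposes a comparable `scale` value, but this sketch does not claim to reproduce its exact behavior.

```swift
// Hypothetical point type; UIKit code would use CGPoint from UITouch locations.
struct Point {
    let x: Double
    let y: Double
}

// Euclidean distance between two touch locations.
func distance(_ a: Point, _ b: Point) -> Double {
    let dx = b.x - a.x
    let dy = b.y - a.y
    return (dx * dx + dy * dy).squareRoot()
}

// Ratio of the current finger spread to the spread when the pinch began.
// A result above 1 means the fingers moved apart (zoom in); below 1 means
// they moved together (zoom out). Returns nil if the fingers started on the same point.
func pinchScale(start: (Point, Point), current: (Point, Point)) -> Double? {
    let startSpread = distance(start.0, start.1)
    guard startSpread > 0 else { return nil }
    return distance(current.0, current.1) / startSpread
}

let origin = Point(x: 0, y: 0)
let scale = pinchScale(start: (origin, Point(x: 3, y: 4)),
                       current: (origin, Point(x: 6, y: 8)))
print(scale ?? 1.0) // prints "2.0"
```

Multiplying the page’s zoom level at the start of the gesture by this ratio gives a zoom that tracks the fingers smoothly.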

Finally, a finger isn’t persistent. A mouse pointer is always on the display, but a finger can tap here and there without passing through the points in between. As you’ll see, this causes problems for some traditional web techniques that depend on a mouse pointer moving across the screen, such as hover effects. It also imposes limitations that show up throughout SDK programs. For example, cut and paste, a ubiquitous feature on computers for decades, was missing from the original iPhone OS and arrived only in iPhone OS 3.0.

In addition to some changes to existing interfaces, the input interface introduces a number of new touches (one-fingered input) and gestures (two-fingered input), as described in table 1.1.

Table 1.1 iPhone and iPad touches and gestures allow you to accept user input in new ways.

| Input | Type | Summary |
|---|---|---|
| Bubble | Touch | Touch and hold. Pops up an info bubble on clickable elements. |
| Flick | Touch | Touch and flick. Scrolls the page. |
| Flick, two-finger | Gesture | Touch and flick with two fingers. Scrolls the scrollable element. |
| Pinch | Gesture | Move fingers in relation to each other. Zooms in or out. |
| Tap | Touch | A single tap. Selects an item or engages an action such as a button or link. |
| Tap, double | Touch | A double tap. Zooms a column. |
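One way to see how the inputs in Table 1.1 differ is to write a rough classifier that maps a summary of a finished touch sequence onto them. Everything here is a hypothetical illustration: the `TouchSummary` fields, the `InputKind` cases, and both thresholds are assumptions, not UIKit types or Apple-documented values. In SDK programs, standard controls and gesture recognizers handle this for you.

```swift
// A hypothetical summary of one completed touch sequence. The field names
// and units are illustrative assumptions, not a UIKit type.
struct TouchSummary {
    var fingerCount = 1
    var tapCount = 1
    var holdDuration = 0.1  // seconds the finger stayed down
    var distanceMoved = 0.0 // points the touch traveled
    var spreadChange = 0.0  // change in distance between two fingers, in points
}

enum InputKind {
    case bubble, flick, twoFingerFlick, pinch, tap, doubleTap, unrecognized
}

// Assumed thresholds; Apple does not document these exact values.
let holdThreshold = 0.5  // seconds
let moveThreshold = 10.0 // points

// Maps a touch summary onto the inputs listed in Table 1.1.
func classify(_ t: TouchSummary) -> InputKind {
    switch t.fingerCount {
    case 2:
        // Fingers moving relative to each other is a pinch;
        // fingers moving together in one direction is a two-finger flick.
        if abs(t.spreadChange) > moveThreshold { return .pinch }
        if t.distanceMoved > moveThreshold { return .twoFingerFlick }
        return .unrecognized
    case 1:
        if t.distanceMoved > moveThreshold { return .flick }
        if t.tapCount == 2 { return .doubleTap }
        if t.holdDuration >= holdThreshold { return .bubble }
        if t.tapCount == 1 { return .tap }
        return .unrecognized
    default:
        return .unrecognized
    }
}

print(classify(TouchSummary(holdDuration: 0.8))) // prints "bubble"
```

The order of the checks matters: movement is tested before tap count and hold time, so a finger that drags is treated as a flick even if it stayed down long enough to count as a hold.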

When you’re designing with the SDK, many of the nuances of finger mousing are taken care of for you. Standard controls are optimized for finger use, and you have access only to the events that work on the iPhone or iPad. Topic 6 explains how to use touches, events, and actions in iOS; as an iOS developer, you’ll need to change your way of thinking about input to better support the new devices.