Representation of Media Objects

MPEG-4 standardizes a number of primitive types of media objects, which can represent both natural and synthetic content types. These media objects can be either two- or three-dimensional. Primitive media objects types include:

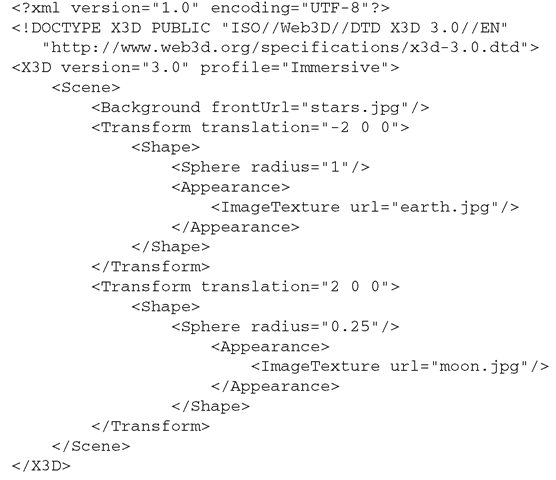

List. 2.2 Code of the VRML scene from List. 2.1 encoded in X3D format

• still images,

• video objects,

• audio objects,

• text and graphics,

• synthetic objects,

• synthetic sound.

Each coded media object consists of descriptive information that enables proper handling of the object in an audiovisual scene and, if necessary, streaming data associated with the object. Each media object can be represented independently of its surroundings or background.

Composition of Audiovisual Scenes

Audiovisual scenes in MPEG-4 are organized in the form of trees of media objects (as in VRML and X3D). Leaves of the trees correspond to primitive media objects, while sub-trees correspond to compound media objects. For example, a visual object can be tied together with a corresponding sound to form a new compound media object. Hierarchical grouping allows authors to construct complex audiovisual scenes and enables users to manipulate meaningful sets of objects in the receiver.
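The tree organization described above can be illustrated with a minimal Python sketch. The class `MediaObject`, its fields, and the object names are hypothetical and chosen only for illustration; they are not part of MPEG-4 or of any MPEG-4 API. The sketch treats every node without children as a primitive media object and every node with children as a compound one.

```python
from dataclasses import dataclass, field
from typing import Iterator, List


@dataclass
class MediaObject:
    """A node in a hypothetical scene tree (illustrative, not an MPEG-4 API)."""
    name: str
    kind: str = "group"  # e.g. "video", "audio", "still image", "group"
    children: List["MediaObject"] = field(default_factory=list)

    def is_primitive(self) -> bool:
        # In this sketch, a node without children is a leaf of the tree,
        # i.e. a primitive media object.
        return not self.children

    def leaves(self) -> Iterator["MediaObject"]:
        # Depth-first walk that yields only the primitive (leaf) objects.
        if self.is_primitive():
            yield self
        else:
            for child in self.children:
                yield from child.leaves()


# A visual object tied to its sound forms a compound media object.
speaker = MediaObject("speaker", children=[
    MediaObject("speaker_video", "video"),
    MediaObject("speaker_voice", "audio"),
])
scene = MediaObject("scene", children=[
    MediaObject("background", "still image"),
    speaker,
])

leaf_names = [leaf.name for leaf in scene.leaves()]
print(leaf_names)
```

Because `speaker` is a sub-tree, it can be moved, removed, or replaced as a single unit, which is the practical benefit of hierarchical grouping.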

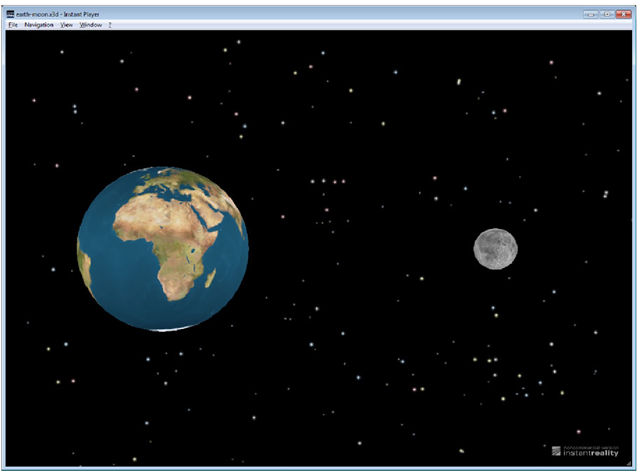

Fig. 2.4 The X3D scene from List. 2.2 displayed in the instant reality browser

The MPEG-4 standard enables positioning media objects in the coordinate system, applying transformations to media objects to change their geometrical or aural appearance, grouping primitive media objects in order to form complex media objects, applying streaming data to media objects in order to modify their visual or aural appearance, and changing the user’s viewing and listening points in the scene.
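As a rough illustration of positioning and transforming a grouped object, the following Python sketch applies a uniform scale and a translation to every primitive in a group. It is a simplified 2D example under stated assumptions: the function `transform`, the object names, and the numeric values are hypothetical, and the sketch does not reproduce the transformation nodes actually defined by the standard.

```python
from typing import Dict, Tuple

Vec2 = Tuple[float, float]


def transform(point: Vec2, scale: float, offset: Vec2) -> Vec2:
    """Apply a uniform scale followed by a translation to a 2D point."""
    return (point[0] * scale + offset[0], point[1] * scale + offset[1])


# Hypothetical compound object: two primitives positioned in the
# local coordinate system of their group.
logo_local: Dict[str, Vec2] = {"icon": (0.0, 0.0), "caption": (0.0, -1.0)}

# Placing the whole group in the scene: double its size, then move it to (10, 5).
logo_in_scene = {
    name: transform(position, scale=2.0, offset=(10.0, 5.0))
    for name, position in logo_local.items()
}
print(logo_in_scene)
```

The same transformation is applied to all members of the group, so their relative layout is preserved while the compound object as a whole changes position and size.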

The scene representation in the MPEG-4 standard is based on that of VRML/X3D and extended to cover the full MPEG-4 functionality. The representation uses two encodings: the binary BIFS (Binary Format for Scenes) and the textual BIFS-Text. Although BIFS-Text is not part of the standard, it is commonly used.

An example of an MPEG-4 scene displayed in the Osmo4 player [22] is presented in Fig. 2.5 (3D model courtesy of [56]).

Fig. 2.5 Example of a 3D MPEG-4 scene displayed in the Osmo4 player

Interaction with Audiovisual Scenes

A user observes an MPEG-4 scene in the form designed by its author. However, the author can enable the user to interact with the scene. Depending on the degree of freedom allowed by the author, the user may have different possibilities of interaction with the audiovisual scene. As in VRML/X3D, the following operations may be allowed:

• change the viewing/listening point in the scene (navigate),

• drag/rotate/resize objects in the scene (manipulate),

• trigger events by clicking, pointing at, or approaching a specific object.
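The last kind of interaction, triggering an event by approaching an object, can be sketched in Python as follows. The class `ProximityTrigger` and the "door opens" event are hypothetical helpers invented for this illustration; they are not an MPEG-4 or VRML/X3D API, and a real player would evaluate such conditions inside its own event model.

```python
import math
from typing import Callable, List, Tuple

Vec3 = Tuple[float, float, float]


class ProximityTrigger:
    """Calls `on_enter` each time the viewer enters a sphere around `center`.

    Hypothetical helper for illustration only.
    """

    def __init__(self, center: Vec3, radius: float, on_enter: Callable[[], None]):
        self.center = center
        self.radius = radius
        self.on_enter = on_enter
        self.inside = False

    def update(self, viewer: Vec3) -> None:
        now_inside = math.dist(viewer, self.center) <= self.radius
        if now_inside and not self.inside:
            self.on_enter()  # the viewer has just crossed into the region
        self.inside = now_inside


events: List[str] = []
door = ProximityTrigger((0.0, 0.0, 0.0), 2.0, lambda: events.append("door opens"))

# Simulated navigation: the viewing point approaches the object and leaves again.
path = [(5.0, 0.0, 0.0), (3.0, 0.0, 0.0), (1.5, 0.0, 0.0), (1.0, 0.0, 0.0), (4.0, 0.0, 0.0)]
for position in path:
    door.update(position)

print(events)
```

The trigger remembers whether the viewer was already inside the region, so the event is raised on entry only and not on every update while the viewer stays close to the object.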

More complex kinds of behavior can be programmed using scripting mechanisms based on Java (through MPEG-J [52]) or ECMAScript.

Extensible MPEG-4 Textual Format

The Extensible MPEG-4 Textual format (XMT) is a framework for representing MPEG-4 scenes using a textual XML syntax. XMT allows content authors to exchange their content with other authors, tools, or service providers, and facilitates interoperability with both X3D and the Synchronized Multimedia Integration Language (SMIL) developed by the W3C [10, 53].

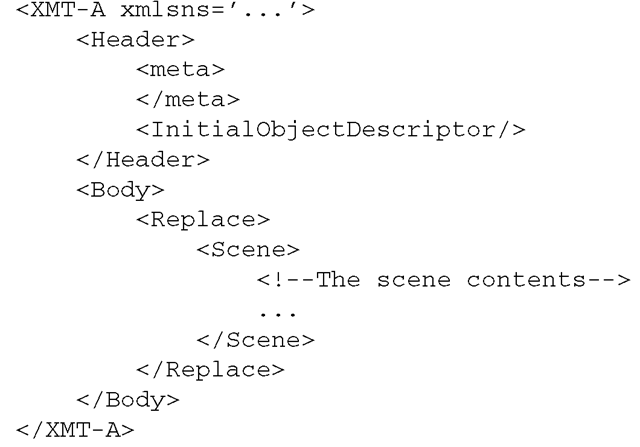

The XMT framework consists of two levels of textual syntax and semantics: the XMT-A format and the XMT-Ω format. XMT-A is an XML-based version of MPEG-4 content which contains a subset of X3D and extends it to represent MPEG-4 specific features. XMT-A provides a one-to-one mapping between the textual and binary formats. The overall structure of the XMT-A representation of an MPEG-4 scene is presented in List. 2.3. XMT-Ω is a high-level abstraction of MPEG-4 elements. Its goal is to facilitate content interchange and provide interoperability with the W3C SMIL language.

List. 2.3 Structure of XMT-A representation of an MPEG-4 scene
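Because XMT-A is plain XML, generic XML tools can inspect such content. The Python sketch below parses a small scene fragment with the standard `xml.etree.ElementTree` module and prints its node hierarchy. The fragment is an assumption made for illustration: it uses only X3D-style element names (`Transform`, `Shape`, `Box`, `Sphere`) and does not reproduce the MPEG-4 specific structure of a complete XMT-A document.

```python
import xml.etree.ElementTree as ET
from typing import List

# Illustrative fragment only: element names follow X3D; a real XMT-A
# document adds MPEG-4 specific structure that is not shown here.
FRAGMENT = """
<Scene>
  <Transform translation="0 1 0">
    <Shape><Box size="2 2 2"/></Shape>
    <Transform translation="3 0 0">
      <Shape><Sphere radius="1"/></Shape>
    </Transform>
  </Transform>
</Scene>
"""


def outline(element: ET.Element, depth: int = 0) -> List[str]:
    """Return an indented outline of the element tree, one line per node."""
    lines = ["  " * depth + element.tag]
    for child in element:
        lines.extend(outline(child, depth + 1))
    return lines


root = ET.fromstring(FRAGMENT)
tree_lines = outline(root)
print("\n".join(tree_lines))
```

The printed outline mirrors the scene tree: nested `Transform` elements correspond to nested groups, and `Shape` elements contain the geometry nodes.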

Structure of the MPEG-4 Standard

MPEG-4 consists of separate but closely interrelated parts that can be implemented individually or combined with other parts. The basis is formed by Part 1—Systems, Part 2—Visual, and Part 3—Audio. Part 4—Conformance defines implementation testing, Part 5—Reference Software provides reference implementations, and Part 6—DMIF (Delivery Multimedia Integration Framework) defines an interface between MPEG-4 applications and network/storage. All parts of the MPEG-4 standard available or under development at the time of writing are listed in Table 2.2. Relevant parts are highlighted and briefly described below.

Part 1—Systems—specifies system-level functionalities for communication of interactive audio-visual scenes, including a terminal model, representation of metadata of elementary streams, representation of object content information, interface to IP management and protection systems as well as representation of synchronization information and representation of individual elementary streams in a single stream [29].

Part 2—Visual—provides elements related to the encoded representation of visual information, including: specification of video coding tools, mapping of still textures into visual scenes, human face and body animation based on face/body models, and animation of 2D deformable meshes [30].

Part 11—Scene description and application engine—specifies the coded representation of interactive audio-visual scenes and applications. In particular, it defines the following elements: spatio-temporal positioning of audio-visual objects as well as their behavior in response to interaction, representation of synthetic two-dimensional (2D) and three-dimensional (3D) objects, the Extensible MPEG-4 Textual format (XMT), and description of an application engine [31].

Part 16—Animation Framework eXtension (AFX)—specifies extensions for representing 3D graphics content. It provides higher-level synthetic objects for specifying geometry, texture, and animation as well as dedicated compression methods. AFX also specifies a backchannel for progressive streaming of view-dependent information. In addition, the standard defines profiles for using MPEG-4 3D graphics tools in applications [32].

Table 2.2 Parts of the MPEG-4 standard—ISO/IEC 14496 (as of July 2011)

| Part | Title |
|------|-------|
| 1 | Systems |
| 2 | Visual |
| 3 | Audio |
| 4 | Conformance testing |
| 5 | Reference software |
| 6 | Delivery Multimedia Integration Framework (DMIF) |
| 7 | Optimized reference software for coding of audio-visual objects |
| 8 | Carriage of ISO/IEC 14496 contents over IP networks |
| 9 | Reference hardware description |
| 10 | Advanced Video Coding (AVC) |
| 11 | Scene description and application engine |
| 12 | ISO base media file format |
| 13 | Intellectual Property Management and Protection (IPMP) extensions |
| 14 | MP4 file format |
| 15 | Advanced Video Coding (AVC) file format |
| 16 | Animation Framework eXtension (AFX) |
| 17 | Streaming text format |
| 18 | Font compression and streaming |
| 19 | Synthesized texture stream |
| 20 | Lightweight Application Scene Representation (LASeR) and Simple Aggregation Format (SAF) |
| 21 | MPEG-J Graphics Framework eXtensions (GFX) |
| 22 | Open Font Format |
| 23 | Symbolic Music Representation |
| 24 | Audio and systems interaction |
| 25 | 3D Graphics Compression Model |
| 26 | Audio conformance |
| 27 | 3D Graphics conformance |
| 28 | Composite font representation |

Part 20—Lightweight Application Scene Representation (LASeR) and Simple Aggregation Format (SAF)—defines a scene representation format (LASeR) and an aggregation format (SAF) suitable for representing and delivering rich-media services to resource-constrained devices such as mobile phones. A rich media service is a dynamic, interactive collection of multimedia data such as audio, video, graphics, and text. Example services range from simple movies enriched with graphic overlays and interactivity to complex services with fluid interaction and a variety of media types [33].

Part 21—MPEG-J Graphics Framework eXtensions (GFX)—describes a lightweight programmatic environment for interactive multimedia applications on devices with limited resources, e.g., mobile phones. GFX offers a framework that combines a subset of the MPEG Java application environment (MPEG-J) with a Java API for accessing 3D renderers and other Java APIs. The framework enables creation of applications that combine audio and video streams with 3D graphics rendering and user interaction. By reusing existing mobile APIs, most Java applications designed for mobile devices may be ported to a system using GFX. A GFX implementation is a thin layer of classes and interfaces over standard Java and MPEG-J APIs [34].

Part 25—3D Graphics Compression Model—defines methods of applying 3D graphics compression tools defined in MPEG-4 to potentially any XML-based representation format for scene graphs and graphics primitives. The model has been implemented for XMT, COLLADA (Sect. 2.1.5), and X3D (Sect. 2.1.2) [35,49].

Universal 3D

Universal 3D (U3D) is a file format developed by Intel and the 3D Industry Forum (3DIF) [1], a consortium that includes companies such as Intel, Boeing, Hewlett-Packard, Adobe Systems, Bentley Systems, Right Hemisphere, and others. The format has been standardized by ECMA International as ECMA-363 [51].

The U3D file format has been designed to enable repurposing and presentation of 3D CAD models. Examples of applications of the U3D file format include:

• development of training tools based on interactive simulations created from 3D CAD models;

• electronic owner’s manuals providing interactive guides for maintaining and repairing products;

• online catalogues equipped with interactive 3D models created during product development.

The most important features of the U3D file format include run-time modification of geometry, continuous level of detail, domain-specific compression, progressive data streaming and playback, free-form surfaces, key-frame and bones-based animation, and extensibility.
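To make one of these features more concrete, the Python sketch below shows the general idea of key-frame animation: a value is stored only at selected points in time and interpolated in between. This is a generic linear interpolation written under stated assumptions; the function `sample`, the `hinge` track, and its values are hypothetical, and the sketch does not reproduce the animation data structures or interpolation rules defined in ECMA-363.

```python
from bisect import bisect_right
from typing import List, Tuple

Keyframe = Tuple[float, float]  # (time in seconds, value)


def sample(keyframes: List[Keyframe], t: float) -> float:
    """Linearly interpolate a value at time t from keyframes sorted by time."""
    times = [key[0] for key in keyframes]
    if t <= times[0]:
        return keyframes[0][1]   # before the first key: hold the first value
    if t >= times[-1]:
        return keyframes[-1][1]  # after the last key: hold the last value
    i = bisect_right(times, t)   # index of the first keyframe later than t
    (t0, v0), (t1, v1) = keyframes[i - 1], keyframes[i]
    alpha = (t - t0) / (t1 - t0)
    return v0 + alpha * (v1 - v0)


# Hypothetical animation track: a hinge angle in degrees.
hinge = [(0.0, 0.0), (1.0, 90.0), (3.0, 90.0), (4.0, 0.0)]
samples = [sample(hinge, t) for t in (0.5, 2.0, 3.5)]
print(samples)
```

Only four key frames are stored, yet the angle can be evaluated at any time during playback, which keeps animation data compact.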

The U3D format is supported by PDF; 3D objects in U3D format can be inserted into PDF documents and interactively visualized by Adobe Reader (Fig. 2.6) [2] (3D model courtesy of [56]).