INTRODUCTION

The Internet has seen tremendous growth as a video distribution medium in recent years, with the advent of new broadband access networks and the widespread adoption of media terminals supporting video reception and storage. This growth of Internet video transmission resulted from advances in video encoding solutions and the increase in the bandwidth available to terminals. However, it has also posed new challenges for the development of video standards, due to the heterogeneous characteristics of current terminals and of the wired and wireless distribution networks.

As terminal capabilities increase in terms of display definition, processing power, and bandwidth, users tend to expect higher quality from the received video streams. Additionally, as different types of terminals will likely coexist in the same network, it will be necessary to adapt the transmitted content to the receiving terminal. Instead of re-encoding (or transcoding) the bitstream, which requires high computational power on intermediate nodes, rate adaptation would preferably be done by extracting parts of the original bitstream.

In terms of network scalability, delivering the same video sequence to many receivers in a large-scale live video distribution system is a challenge that can rely only on point-to-multipoint transmission, such as IP multicast or broadcast. The traditional solution for point-to-multipoint video transmission in heterogeneous networks, with terminals of very different capabilities, relies on a process usually called simulcast or replicated stream transmission. In this process, a discrete number of independent video streams are encoded and distributed through the multicast or broadcast path. Terminals request and decode the video stream that best fits their characteristics and communication rate, switching between video streams according to bandwidth variations. However, the major drawback of simulcast is that much of the information carried in one stream is also carried in adjacent streams, and therefore the total rate required for a video transmission is much higher than the rate of a single stream. In these scenarios it would be preferable to encode different levels of quality (one base layer and one or more enhancement layers that increase the quality of the base layer). Accordingly, terminals with lower bandwidth or computational power could request only the lower layers, and terminals with higher capabilities could request additional enhancement layers.
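The rate penalty of simulcast can be illustrated with a small calculation. The sketch below uses hypothetical target rates chosen only for illustration, and it models scalable coding with an assumed `overhead` parameter for the coding-efficiency loss; neither the numbers nor the function names come from any standard.

```python
# Hypothetical cumulative target rates (kbit/s) for three quality levels.
# These values are illustrative assumptions, not measurements.
TARGET_RATES_KBPS = [256, 768, 2048]


def simulcast_total(rates):
    """Simulcast sends one independent stream per quality level."""
    return sum(rates)


def layered_total(rates, overhead=0.0):
    """Layered coding sends a base layer plus increments up to the top rate.

    `overhead` is an assumed fractional coding-efficiency loss of the
    scalable codec relative to single-layer coding (0.0 means ideal).
    """
    return max(rates) * (1.0 + overhead)


def layer_increments(rates):
    """Rate carried by each layer: base layer first, then each enhancement."""
    ordered = sorted(rates)
    return [ordered[0]] + [b - a for a, b in zip(ordered, ordered[1:])]
```

With these assumed rates, simulcast needs the sum of all three streams on the shared path, whereas an ideal layered encoding needs only the highest rate, split into a base layer and two increments.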

In this article we analyze scalable video transmission from the perspective of video coding standards and of the protocol developments needed to support media distribution in current and future network architectures. In the next section we describe the first contributions to this topic and the subsequent developments in related video coding standards. We then describe the structure of a scalable video bitstream, taking the recent H.264/SVC standard as reference, and analyze the protocols that can be used for the description, signaling, and transport of scalable video. We describe network scenarios and examples where scalable video offers significant advantages, and then discuss future trends in this area, in particular the mechanisms that must be associated with SVC techniques to achieve an efficient and robust transmission system, before concluding the article.

BACKGROUND

The use of layered video transmission in IP multicast was originally proposed by Deering (1993), who suggested the transport of different video layers in different multicast groups. With this solution the encoder would produce a set of interdependent layers (one base layer and one or more enhancement layers), and the receiver, starting with the base layer, could adapt its quality by joining and leaving multicast trees, each one carrying a different quality layer.

Deering’s proposal was followed by several protocols, such as the receiver-driven layered multicast (RLM) protocol (McCanne, Jacobson, & Vetterli, 1996), the layered video multicast with retransmission (LVMR) protocol (Li, Paul, Pancha, & Ammar, 1997; Li, Paul, & Ammar, 1998), and ThinStreams (Wu, Sharma, & Smith, 1997).

The advantages of layered video multicast were confirmed by Kim and Ammar (2001) for scenarios where receivers are distributed in the same domain, with multiple streams sharing the same bottleneck link, as occurs in many video distribution scenarios.

In terms of video coding, layered video transmission requires a layered encoding of video, a process usually referred to as scalable video coding (SVC). Video coding standards like ITU-T Recommendation H.263 from the International Telecommunication Union-Telecommunication (ITU-T, 2000) and MPEG-2 Video from the ITU-T and the International Organization for Standardization/International Electrotechnical Commission (ITU-T & ISO/IEC, 1994) included several tools that supported the most important scalability options. However, none of these scalable extensions was broadly implemented, since they imply a loss in coding efficiency and a significant increase in decoder complexity.

In January 2005, the Joint Video Team (JVT) from the ISO/IEC Moving Picture Experts Group (MPEG) and the ITU-T Video Coding Experts Group (VCEG) started developing a scalable video coding extension for the H.264 Advanced Video Coding standard (ITU-T & ISO/IEC, 2003), known as H.264 Scalable Video Coding (ITU-T & ISO/IEC, 2007). H.264 SVC augments the original encoder’s functionality to generate several layers of quality. Enhancement layers may enhance the content represented by lower layers in terms of temporal resolution (i.e., the frame rate), spatial resolution (i.e., image size), and quality, specified as signal-to-noise ratio (SNR) resolution.

By using the H.264 SVC, different levels of quality could be transmitted efficiently over both wired and wireless networks, allowing seamless adaptation to available bandwidth and to the characteristics of the terminal. However, the transport of SVC presents many challenges which must be considered in order to take full advantage of its potential.

CODING AND TRANSMISSION OF SCALABLE VIDEO

The most adequate technique for efficiently transmitting scalable video is highly dependent on the video encoding technology itself. Hence, for this article we have used the scalable video extensions to the H.264 standard as reference since these represent the most advanced technology currently available in this area.

The H.264 Advanced Video Coding (AVC) standard is currently emerging as the preferred solution for video services in third-generation (3G) mobile networks, which include packet-switched streaming services, messaging services, conversational services, and multimedia broadcast/multicast services (MBMS) (3GPP TS 26.346, 2005). It will also be used for mobile TV distribution to handheld devices (DVB-H) (ETSI TR 102 377, 2005).

SVC Bitstream Structure

The scalable extension of H.264/AVC includes several layers of quality. For compatibility purposes, the JVT decided that the base layer of an SVC bitstream should be compatible with the H.264/AVC profile.

The SVC bitstream may be composed of multiple spatial, temporal, and SNR layers of combined scalability. Temporal scalability is a technique that allows supporting multiple frame rates. In SVC, temporal scalability is usually implemented by using hierarchical B-pictures.
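As an illustration of how hierarchical prediction maps frames to temporal layers, the following sketch assigns a temporal ID to each frame under one common arrangement: a dyadic hierarchy with a power-of-two group of pictures (GOP). The GOP size of 8 (giving four temporal layers) and the function names are assumptions made for this example; the standard allows other structures.

```python
def temporal_id(frame_index, gop_size=8):
    """Temporal layer of a frame in a dyadic hierarchical prediction structure.

    Assumes gop_size is a power of two and that key pictures sit at
    multiples of gop_size (an assumption made for illustration).
    """
    if gop_size < 1 or gop_size & (gop_size - 1):
        raise ValueError("gop_size must be a power of two")
    levels = gop_size.bit_length() - 1          # log2(gop_size)
    pos = frame_index % gop_size
    if pos == 0:
        return 0                                # key picture, lowest layer
    trailing_zeros = (pos & -pos).bit_length() - 1
    return levels - trailing_zeros


def frames_at_or_below(max_tid, num_frames, gop_size=8):
    """Indices of the frames that remain when higher temporal layers are dropped."""
    return [i for i in range(num_frames) if temporal_id(i, gop_size) <= max_tid]
```

Under these assumptions, dropping the highest temporal layer halves the frame rate, and each further layer removed halves it again.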

Quality (or SNR) scalability relies on both coarse-grain quality scalable (CGS) and medium-grain quality scalable (MGS) coding. While CGS encodes the transform coefficients in a nonscalable way, in MGS, which is a variation of CGS, the transform coefficients are split into fragments carried in several network abstraction layer (NAL) units, enabling a more graceful degradation of quality when these units are discarded for rate adaptation purposes. The JVT also considered the possibility of including another form of scalability named fine-grain scalability (FGS), which was proposed in MPEG-4 Visual. FGS arranges the transform coefficients as an embedded bitstream, enabling truncation of these NAL units at any arbitrary point. However, in the first specification of SVC (Phase I) (ITU-T & ISO/IEC, 2007), FGS layers were not supported.

Spatial scalability provides support for several display resolutions (e.g., 4CIF, CIF, or QCIF) and is implemented by decomposing the original video into a spatial pyramid.

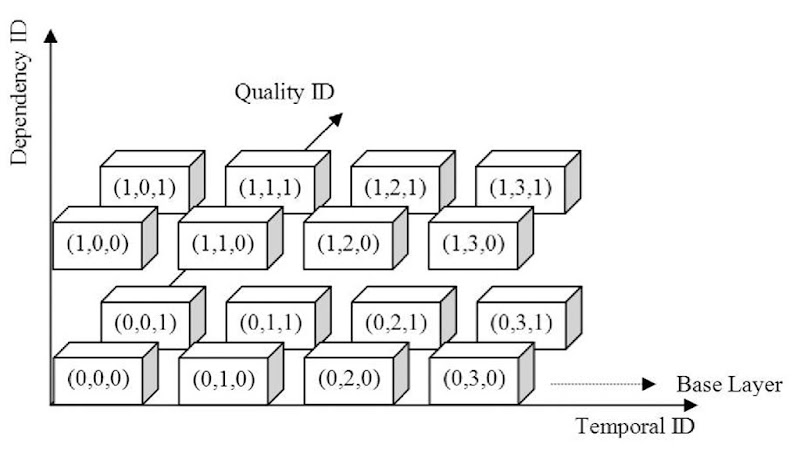

These spatial, temporal, and SNR layers are identified by a triple of identifiers (IDs): the dependency ID, which identifies the spatial definition, the temporal ID, and the quality (i.e., SNR) ID. Together they are referred to as the tuple (D,T,Q). For instance, a base layer NAL unit of the lowest temporal resolution and lowest SNR quality is identified as (D,T,Q)=(0,0,0). Accordingly, the network abstraction layer (NAL) unit structure of H.264/AVC has been extended to include these three IDs. The three scalability dimensions may be represented using a three-dimensional graph, such as the one in Figure 1.

SVC layers can be highly interdependent, which means that the loss of a NAL unit of a certain layer may cause a severe reduction of quality or even prevent the correct decoding of other layers. This implies that lower layers should be protected from bit errors or packet losses.
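Rate adaptation by extraction can be sketched as a filter over the (D,T,Q) identifiers. The `NalUnit` class below is a hypothetical, simplified stand-in for a parsed NAL unit and does not reproduce the real header syntax; the filter also assumes that every unit at or below the target operation point is needed, whereas actual inter-layer dependencies may be sparser or differ.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NalUnit:
    """Hypothetical, simplified view of an SVC NAL unit (not the real syntax)."""
    d: int      # dependency ID (spatial layer)
    t: int      # temporal ID
    q: int      # quality (SNR) ID
    size: int   # payload size in bytes


def extract(nal_units, max_d, max_t, max_q):
    """Keep only the NAL units at or below the target operation point.

    Simplifying assumption: a unit is needed exactly when each of its
    three IDs is no greater than the corresponding target ID.
    """
    return [n for n in nal_units
            if n.d <= max_d and n.t <= max_t and n.q <= max_q]


def total_bytes(nal_units):
    """Sum of payload sizes, a rough proxy for the extracted bit rate."""
    return sum(n.size for n in nal_units)
```

Extracting with the target (0,0,0) keeps only the base layer, which is why its loss affects every receiver and why it deserves the strongest protection.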

Figure 1. Example of a coded video sequence with four temporal layers, two image definitions, and two quality layers per image definition

Protocols for Scalable Video Description, Signaling, and Transport

Scalable transmission of H.264 SVC is based on the architecture and suite of protocols defined by the Internet Engineering Task Force (IETF). SVC NAL units are encapsulated in real-time transport protocol (RTP) (Schulzrinne, Casner, Frederick, & Jacobson, 2003) packets, which are usually carried over user datagram protocol (UDP) (Postel, 1980) and Internet protocol (IP) (Postel, 1981) datagrams.
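To make the encapsulation concrete, the sketch below prefixes a payload with the 12-byte fixed RTP header (version 2, no padding, no header extension, no CSRC entries). The payload is treated as opaque bytes; the SVC payload format itself is not modeled, and the dynamic payload type 96 and the function name are assumptions for this example.

```python
import struct

RTP_VERSION = 2


def build_rtp_packet(payload, seq, timestamp, ssrc, payload_type=96, marker=False):
    """Prefix `payload` with the 12-byte fixed RTP header.

    Assumptions: no padding, no header extension, no CSRC list, and a
    dynamic payload type (96) chosen arbitrarily for illustration.
    """
    byte0 = RTP_VERSION << 6                             # V=2, P=0, X=0, CC=0
    byte1 = (int(marker) << 7) | (payload_type & 0x7F)   # M bit and payload type
    header = struct.pack(
        "!BBHII",                                        # network byte order
        byte0,
        byte1,
        seq & 0xFFFF,                                    # 16-bit sequence number
        timestamp & 0xFFFFFFFF,                          # 32-bit media timestamp
        ssrc & 0xFFFFFFFF,                               # 32-bit source identifier
    )
    return header + payload
```

The resulting bytes would then be handed to a UDP socket; the sequence number lets a receiver detect loss and reordering, and the timestamp drives playout.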

Concerning the RTP payload format definition, the IETF is currently working on a draft (Wenger, Wang, & Schierl, 2007). Particular care is being taken to make the SVC payload format an extension of the original H.264 payload format defined in RFC 3984 (Wenger, Hannuksela, Stockhammer, Westerlund, & Singer, 2005), so that SVC servers can distribute data to legacy receiver equipment. The main mechanisms for fragmentation and aggregation defined in RFC 3984 are being maintained in Wenger et al. (2007).

In terms of session signaling, two main protocols are used to initiate and control streaming sessions, namely the session initiation protocol (SIP) (Rosenberg et al., 2002) and the real-time streaming protocol (RTSP) (Schulzrinne, Rao, & Lanphier, 1998). Both of these protocols use the session description protocol (SDP) (Handley, Jacobson, & Perkins, 2006) to describe terminals’ capabilities. Thus SVC information must be conveyed using SDP, and this protocol must be updated to include the description of interlayer dependencies.

The communication topology defined for SVC transmission considers the possibility of including one or more intermediate nodes, named Media Aware Network Element (MANE) (Wenger et al., 2005), which could adapt unicast and multicast transmission modes, aggregate several streams, or perform rate-adaptation tasks adapting a bitstream according to the capabilities of a particular receiver. These elements also constitute session signaling endpoints between servers and clients.

Scenarios Where Scalable Video Offers Significant Advantages

Layered video transmission was initially intended for IP multicast networks. However, IP multicast is not widely deployed on the Internet. Instead, it is currently considered an important solution for edge IP networks, like those of service providers. In these networks, IP multicast constitutes a good solution for scalable transmission of video, with the advantage that it is easier to associate it with quality of service (QoS) solutions that guarantee a higher priority to video or other sensitive data.

Two basic video distribution scenarios for SVC can be considered. In the first scenario, IP multicast is used between a server and clients. As previously explained, this may be the case for a large private IP network of a service provider, for example MBMS (3GPP TS 26.346, 2005) in mobile 3G networks. In this scenario receivers request the SVC layers according to their capacity, dynamically joining or leaving multicast groups. The signaling for session establishment is also performed on an end-to-end basis. In this architecture, it is important to specify a protocol or mechanism that guarantees fairness among receivers, since a greedy or naive user could request a rate far beyond the network capacity, thus leading the network to a persistent congested state.
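The receiver-side decision in this scenario can be sketched as follows. The layer rates, the capacity estimate, and the group addresses passed to these functions are all hypothetical inputs; the sketch only decides which groups to join or leave and deliberately leaves out the fairness and congestion-control mechanism discussed above.

```python
def layers_to_join(layer_rates_kbps, capacity_kbps):
    """Number of layers (base layer first) whose cumulative rate fits the capacity."""
    total = 0
    count = 0
    for rate in layer_rates_kbps:
        if total + rate > capacity_kbps:
            break                     # higher layers depend on this one, so stop
        total += rate
        count += 1
    return count


def membership_changes(current, target, groups):
    """Multicast groups to join or leave when moving from `current` to `target` layers.

    `groups[i]` is the (hypothetical) group address carrying layer i.
    Layers are left from the highest one down.
    """
    if target > current:
        return {"join": groups[current:target], "leave": []}
    return {"join": [], "leave": groups[target:current][::-1]}
```

A real receiver would issue the corresponding group membership requests through its socket API and would re-evaluate the decision as its capacity estimate changes.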

One problem that has been pointed out with this solution is the number of firewall ports that must be opened in receivers, which may constitute a security problem.

In a second scenario, one or more MANEs could be placed between a server and clients. MANEs constitute a signaling and RTP endpoint. They can perform rate adaptation tasks, removing packets from an incoming RTP stream and rewriting RTP headers, or even aggregate several video layers into a single RTP flow in case an endpoint does not have the processing power or display size to decode all layers. Using the example of a mobile 3G network, the MANE should be placed close to a base station, with access to both signaling and media traffic. This element can also be associated with other modules or functions which, for instance, perform adaptation between unicast and multicast transmission modes, QoS brokering, adaptation between QoS-capable and best-effort networks, or even admission control tasks. This scenario could constitute part of an overlay network that manages the distribution of media at the application layer.
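The packet removal and header rewriting performed by a MANE can be illustrated with a minimal sketch. Packets are modeled as hypothetical `(seq, layer, payload)` tuples rather than parsed RTP packets, and the single `layer` number is an assumed simplification of the (D,T,Q) identifiers.

```python
def adapt_stream(packets, max_layer):
    """Drop packets above `max_layer` and renumber the remaining ones.

    `packets` is a list of (seq, layer, payload) tuples, a hypothetical
    stand-in for parsed RTP packets. Sequence numbers are rewritten so
    that the receiver sees a gap-free 16-bit sequence.
    """
    out = []
    next_seq = None
    for seq, layer, payload in packets:
        if next_seq is None:
            next_seq = seq            # output numbering starts at the first input seq
        if layer > max_layer:
            continue                  # discarded for rate adaptation
        out.append((next_seq, layer, payload))
        next_seq = (next_seq + 1) & 0xFFFF   # wrap around like RTP sequence numbers
    return out
```

Note that this simple renumbering would also hide genuine upstream losses from the receiver; a practical element would need to preserve gaps caused by loss on its incoming side.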

FUTURE TRENDS

The integration of scalable video transmission techniques in current and emerging network architectures still presents some challenges that must be addressed before its full potential can be realized.

QoS solutions should be considered for giving higher priority to video data when compared with less sensitive data. QoS solutions for wireless networks, such as IEEE Standard 802.11e (2005), usually include mechanisms like queue management and automatic repeat request (ARQ). Link layer ARQ can be associated with QoS provisioning to recover quickly from packet losses in the more important layers.

Concerning large-scale multicast and broadcast networks, the retransmission of lost packets could constitute a scalability problem, and therefore the protection of lower layers may be performed by applying forward error correction (FEC) codes. In such scenarios the use of Raptor codes (Shokrollahi, 2006) is gaining popularity due to their high flexibility and effectiveness. As an example, the use of Raptor codes was proposed for H.264/AVC video transmission in mobile MBMS networks (3GPP TS 26.346, 2005).
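The principle of repairing losses without retransmission can be shown with a far simpler code than the Raptor codes cited above: a single XOR parity packet computed over a block of packets. This is only a sketch of the FEC idea under that substitution; it can repair exactly one loss per block, and the function names are hypothetical.

```python
def xor_parity(packets):
    """Return one parity packet: the bytewise XOR of all (zero-padded) packets."""
    length = max(len(p) for p in packets)
    parity = bytearray(length)
    for p in packets:
        for i, b in enumerate(p):
            parity[i] ^= b
    return bytes(parity)


def recover(received, parity):
    """Rebuild the single missing packet (marked None) from the others.

    The result is zero-padded to the parity length; a real scheme would
    also carry the original packet lengths.
    """
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) != 1:
        raise ValueError("XOR parity can repair exactly one lost packet")
    present = [p for p in received if p is not None]
    # XOR of the parity with all surviving packets leaves the missing one.
    return xor_parity(present + [parity])
```

Applying stronger protection of this kind to the base layer than to the enhancement layers is one way to reflect the layer dependencies described earlier.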

Finally, in terms of coding, current H.264/SVC encoding and decoding processes present additional complexity when compared with the AVC standard. In particular, decoding time must be minimized in order to encourage the widespread adoption of this novel technology.

CONCLUSION

In this article we describe the main issues associated with the transmission of scalable video. Although the basic mechanisms of scalable video transmission were defined more than a decade ago, there is still a lack of encoding and transmission mechanisms to support it. In terms of video coding, the flexibility offered by the scalable extension of H.264/AVC provides considerable opportunities to extend current video distribution networks without the penalty of having to change or upgrade H.264/AVC terminals: its base layer and signaling mechanisms are designed to guarantee compatibility with legacy equipment.

In terms of network support, several challenges remain that must be considered. However, the advent of mobile and wireless networks with very different terminal capabilities, combined with wired access networks capable of delivering high-quality videos, will require a flexible technology that supports easier rate-adaptation mechanisms with graceful degradation when facing bandwidth reduction.

KEY TERMS

Automatic Repeat Request (ARQ): A method for error control in packets or frames that uses redundant bits, acknowledgment packets, and timeouts to detect and retransmit data affected by errors.

Broadcast: Process of transmitting a flow of data from a source to all receivers of the broadcast domain or of the network where the terminal operates.

Forward Error Correction (FEC): A method for error correction that uses redundant bits to detect and correct errors in data without the need for retransmission.

IP Multicast: The transmission of Internet protocol (IP) datagrams from a source to all the receivers that belong to a certain IP multicast group. In IP multicast, terminals that wish to receive data must register with that multicast group. IP multicast routing protocols are used to create distribution trees.

Network Abstraction Layer (NAL): An H.264 syntax structure that represents a frame or part of it, integrating information about its encoding parameters. NAL structures are appropriate for the transport of H.264 over several types of networks.

Quality of Service (QoS): Process of providing different priorities to different flows of data or to guarantee a certain level of quality to a data flow. Examples of quality parameters are transmission rate, delay, delay variation (jitter), and packet loss.

Simulcast: Also referred to as replicated streaming, the process of simultaneously encoding and transmitting the same video sequence as a discrete number of video streams of different quality to several receivers.

Transcoding: The process of partially or totally decoding and re-encoding a certain video stream in order to change its characteristics in terms of definition, frame rate, or transmission rate.

Unicast: Process of transmitting a flow of data from a source to a particular receiver.