This topic shows you how to make data manipulations more efficient. We optimize and reduce the amount of code that is necessary to store objects and discuss the most efficient processing options. You should be familiar with the basic object states and the persistence interfaces; the previous topics are required reading to understand this topic.

First we’ll show you how transitive persistence can make your work with complex object networks easier. The cascading options you can enable in Hibernate and Java Persistence applications significantly reduce the amount of code that’s otherwise needed to insert, update, or delete several objects at the same time.

We then discuss how large datasets are best handled, with batch operations in your application or with bulk operations that execute directly in the database.

Finally, we show you data filtering and interception, both of which offer transparent hooks into the loading and storing process inside Hibernate’s engine. These features let you influence or participate in the lifecycle of your objects without writing complex application code and without binding your domain model to the persistence mechanism.

Let’s start with transitive persistence and store more than one object at a time.

Transitive persistence

Real, nontrivial applications work not only with single objects, but rather with networks of objects. When the application manipulates a network of persistent objects, the result may be an object graph consisting of persistent, detached, and transient instances. Transitive persistence is a technique that allows you to propagate persistence to transient and detached subgraphs automatically.

For example, if you add a newly instantiated Category to the already persistent hierarchy of categories, it should become automatically persistent without a call to save() or persist(). You already relied on this in “Mapping a parent/children relationship,” when you mapped a parent/child relationship between Bid and Item. In that case, bids were not only automatically made persistent when they were added to an item, but they were also automatically deleted when the owning item was deleted. You effectively made Bid an entity that was completely dependent on another entity, Item (the Bid entity isn’t a value type, though; it still supports shared references).

There is more than one model for transitive persistence. The best known is persistence by reachability; we discuss it first. Although some basic principles are the same, Hibernate uses its own, more powerful model, as you’ll see later. The same is true for Java Persistence, which also has the concept of transitive persistence and almost all the options Hibernate natively provides.

Persistence by reachability

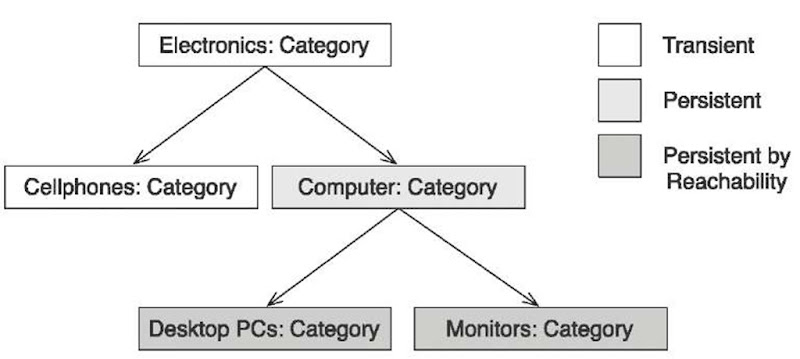

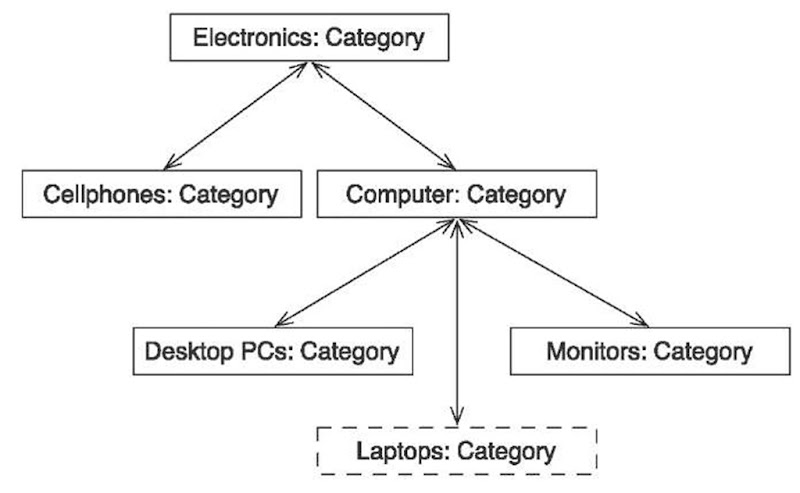

An object persistence layer is said to implement persistence by reachability if any instance becomes persistent whenever the application creates an object reference to the instance from another instance that is already persistent. This behavior is illustrated by the object diagram (note that this isn’t a class diagram) in figure 12.1.

In this example, Computer is a persistent object. The objects Desktop PCs and Monitors are also persistent: They’re reachable from the Computer Category instance. Electronics and Cellphones are transient. Note that we assume navigation is possible only to child categories, but not to the parent—for example, you can call computer.getChildCategories(). Persistence by reachability is a recursive algorithm. All objects reachable from a persistent instance become persistent either when the original instance is made persistent or just before in-memory state is synchronized with the datastore.
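The recursive algorithm can be sketched in a few lines of plain Java. This is a toy model under stated assumptions, not how any ORM implements it. The Category class, the makePersistent() method, and the in-memory set that stands in for the persistence layer are all hypothetical. As in figure 12.1, only links to child categories are navigable.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Toy model of persistence by reachability; none of these names are Hibernate APIs.
class ReachabilitySketch {

    static class Category {
        final String name;
        final List<Category> childCategories = new ArrayList<>();

        Category(String name) { this.name = name; }

        // Adds a child and returns this category, so calls can be chained.
        Category addChild(Category child) {
            childCategories.add(child);
            return this;
        }
    }

    // Instances the hypothetical persistence layer treats as persistent.
    // Category doesn't override equals()/hashCode(), so this set compares by identity.
    private final Set<Category> persistent = new HashSet<>();

    // Makes the instance persistent, then recursively everything reachable from it.
    void makePersistent(Category category) {
        if (!persistent.add(category)) {
            return; // already persistent; this also stops the recursion on cycles
        }
        for (Category child : category.childCategories) {
            makePersistent(child);
        }
    }

    boolean isPersistent(Category category) {
        return persistent.contains(category);
    }

    public static void main(String[] args) {
        Category electronics = new Category("Electronics");
        Category computer = new Category("Computer");
        Category desktops = new Category("Desktop PCs");
        Category monitors = new Category("Monitors");
        electronics.addChild(computer).addChild(new Category("Cellphones"));
        computer.addChild(desktops).addChild(monitors);

        ReachabilitySketch layer = new ReachabilitySketch();
        layer.makePersistent(computer);

        System.out.println(layer.isPersistent(monitors));    // true: reachable from Computer
        System.out.println(layer.isPersistent(electronics)); // false: only child links are navigable
    }
}
```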

Persistence by reachability guarantees referential integrity; any object graph can be completely re-created by loading the persistent root object. An application may walk the object network from association to association without ever having to worry about the persistent state of the instances. (SQL databases have a different approach to referential integrity, relying on declarative and procedural constraints to detect a misbehaving application.)

In the purest form of persistence by reachability, the database has some top-level or root object, from which all persistent objects are reachable. Ideally, an instance should become transient and be deleted from the database if it isn’t reachable via references from the root persistent object.

Figure 12.1 Persistence by reachability with a root persistent object

Neither Hibernate nor other ORM solutions implement this—in fact, there is no analog of the root persistent object in an SQL database and no persistent garbage collector that can detect unreferenced instances. Object-oriented data stores may implement a garbage-collection algorithm, similar to the one implemented for in-memory objects by the JVM. But this option is not available in the ORM world; scanning all tables for unreferenced rows won’t perform acceptably.

So, persistence by reachability is at best a halfway solution. It helps you make transient objects persistent and propagate their state to the database without many calls to the persistence manager. However, at least in the context of SQL databases and ORM, it isn’t a full solution to the problem of making persistent objects transient (removing their state from the database). This turns out to be a much more difficult problem. You can’t remove all reachable instances when you remove an object—other persistent instances may still hold references to them (remember that entities can be shared). You can’t even safely remove instances that aren’t referenced by any persistent object in memory; the instances in memory are only a small subset of all objects represented in the database.

Let’s look at Hibernate’s more flexible transitive persistence model.

Applying cascading to associations

Hibernate’s transitive persistence model uses the same basic concept as persistence by reachability: Object associations are examined to determine transitive state. Furthermore, Hibernate allows you to specify a cascade style for each association mapping, which offers much more flexibility and fine-grained control for all state transitions. Hibernate reads the declared style and cascades operations to associated objects automatically.

By default, Hibernate doesn’t navigate an association when searching for transient or detached objects, so saving, deleting, reattaching, or merging a Category (or applying any other operation to it) has no effect on any child category referenced by the childCategories collection of the parent. This is the opposite of the default behavior of persistence by reachability. If you wish to enable transitive persistence for a particular association, you must override this default in the mapping metadata.

These settings are called cascading options. They’re available for every entity association mapping (one-to-one, one-to-many, many-to-many), in XML and annotation syntax. See table 12.1 for a list of all settings and a description of each option.

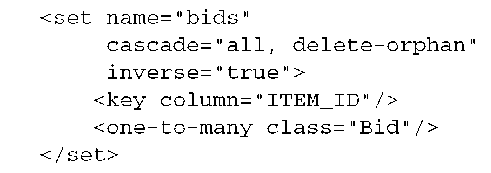

In XML mapping metadata, you put the cascade="…" attribute on the <one-to-one> or <many-to-one> mapping element to enable transitive state changes. All collection mappings (<set>, <bag>, <list>, and <map>) support the cascade attribute. The delete-orphan setting, however, is applicable only to collections. You never have to enable transitive persistence for a collection that references value-typed classes; there, the lifecycle of the associated objects is dependent and implicit. Fine-grained control of dependent lifecycle is relevant and available only for associations between entities.

Table 12.1 Hibernate and Java Persistence entity association cascading options


FAQ What is the relationship between cascade and inverse? There is no relationship; both are different notions. The noninverse end of an association is used to generate the SQL statements that manage the association in the database (insertion and update of the foreign key column(s)). Cascading enables transitive object state changes across entity class associations.

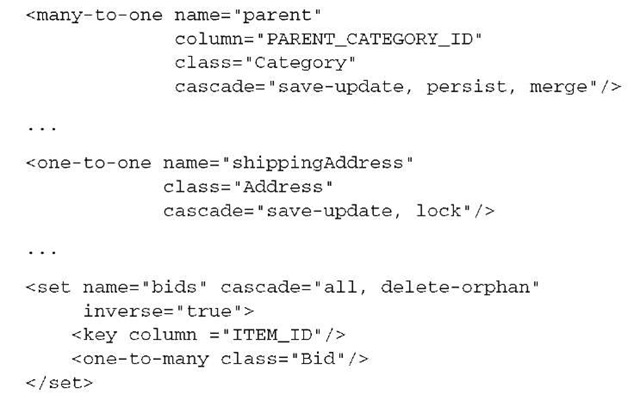

Here are a few examples of cascading options in XML mapping files. Note that this code isn’t from a single entity mapping or a single class, but only illustrative:

As you can see, several cascading options can be combined and applied to a particular association as a comma-separated list. Further note that delete-orphan isn’t included in all.

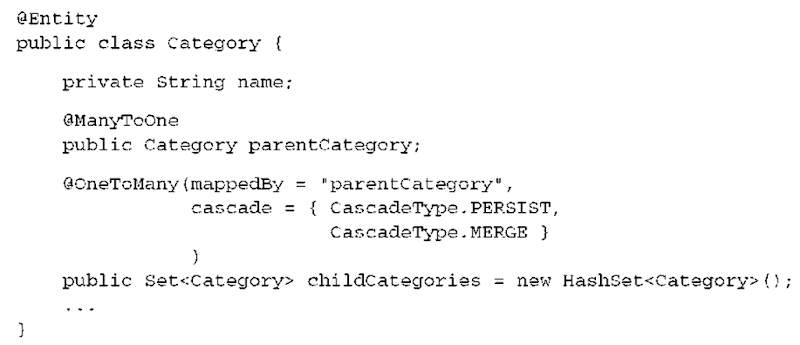

Cascading options are declared with annotations in two possible ways. First, all the association mapping annotations, @ManyToOne, @OneToOne, @OneToMany, and @ManyToMany, support a cascade attribute. Its value is either a single javax.persistence.CascadeType value or an array of them. For example, the illustrative XML mapping looks like this when done with annotations:

Obviously, not all cascading types are available in the standard javax.persistence package. Only cascading options relevant for EntityManager operations, such as persist() and merge(), are standardized. You have to use a Hibernate extension annotation to apply any Hibernate-only cascading option:

A Hibernate extension cascading option can be used either as an addition to the options already set on the association annotation (first and last example) or as a stand-alone setting if no standardized option applies (second example).

Hibernate’s association-level cascade style model is both richer and less safe than persistence by reachability. Hibernate doesn’t make the same strong guarantees of referential integrity that persistence by reachability provides. Instead, Hibernate partially delegates referential integrity concerns to the foreign key constraints of the underlying SQL database.

There is a good reason for this design decision: It allows Hibernate applications to use detached objects efficiently, because you can control reattachment and merging of a detached object graph at the association level. But cascading options aren’t available only to avoid unnecessary reattachment or merging: They’re useful whenever you need to handle more than one object at a time.

Let’s elaborate on the transitive state concept with some example association mappings. We recommend that you read the next section in one sitting, because each example builds on the previous one.

Figure 12.2 Category class with associations to itself

Working with transitive state

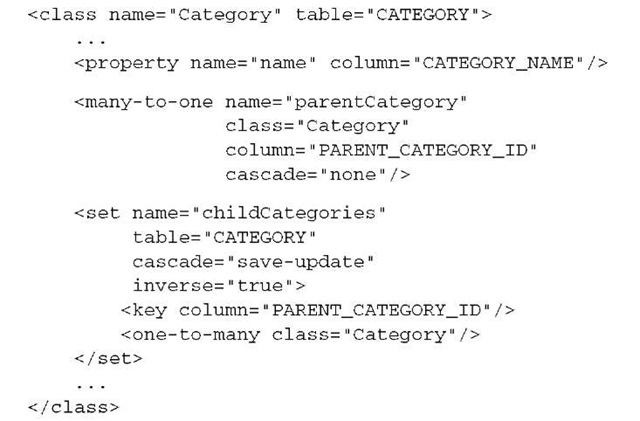

CaveatEmptor administrators are able to create new categories, rename categories, and move subcategories around in the category hierarchy. This structure can be seen in figure 12.2. Now, you map this class and the association, using XML:

This is a recursive bidirectional one-to-many association. The one-valued end is mapped with the <many-to-one> element and the Set-typed property with the <set> element. Both refer to the same foreign key column, PARENT_CATEGORY_ID. All columns are in the same table, CATEGORY.

Creating a new category

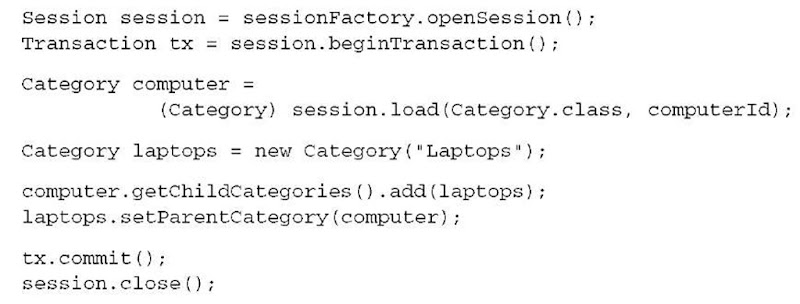

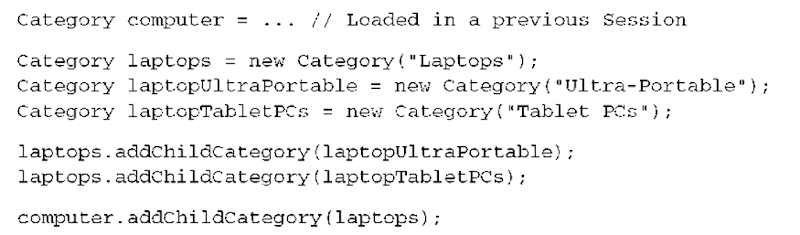

Suppose you create a new Category, as a child category of Computer; see figure 12.3.

You have several ways to create this new Laptops object and save it in the database. You can go back to the database and retrieve the Computer category to which the new Laptops category will belong, add the new category, and commit the transaction:

The computer instance is persistent (note how you use load() to work with a proxy and avoid the database hit), and the childCategories association has save-update cascading enabled. Hence, this code results in the new laptops category becoming persistent when tx.commit() is called, because Hibernate cascades the persistent state to the elements of the childCategories collection of computer. Hibernate examines the state of the objects and their relationships when the persistence context is flushed and queues an INSERT statement.

Figure 12.3 Adding a new Category to the object graph

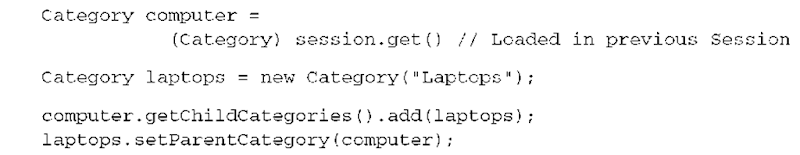

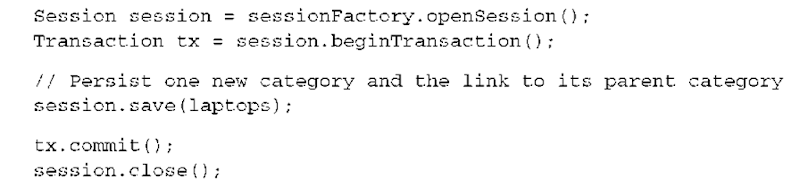

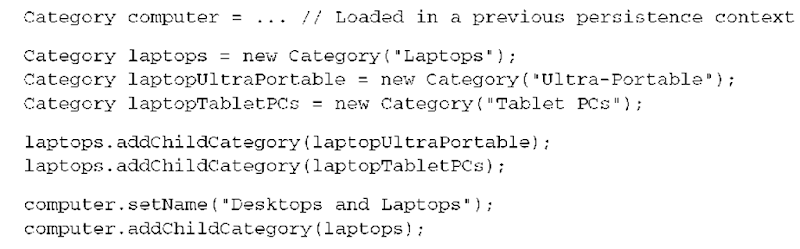

Creating a new category in a detached fashion

Let’s do the same thing again, but this time create the link between Computer and Laptops outside of the persistence context scope:

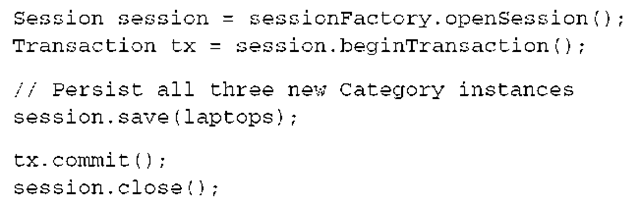

You now have the detached, fully initialized (not a proxy) computer object, loaded in a previous Session, associated with the new transient laptops object (and vice versa). You make this change persistent by saving the new object in a second Hibernate Session, a new persistence context:

Hibernate inspects the database identifier property of the laptops.parentCategory object and correctly creates the reference to the Computer category in the database. Hibernate inserts the identifier value of the parent into the foreign key field of the new Laptops row in CATEGORY.

You can’t obtain a detached proxy for computer in this example, because computer.getChildCategories() would trigger initialization of the proxy and you’d see a LazyInitializationException: The Session is already closed. You can’t walk the object graph across uninitialized boundaries in detached state.

Because you have cascade="none" defined for the parentCategory association, Hibernate ignores changes to any of the other categories in the hierarchy (Computer, Electronics)! It doesn’t cascade the call to save() to entities referred to by this association. If you enabled cascade="save-update" on the <many-to-one> mapping of parentCategory, Hibernate would navigate the whole graph of objects in memory, synchronizing all instances with the database. This is obvious overhead you’d prefer to avoid.

In this case, you neither need nor want transitive persistence for the parentCategory association.

Saving several new instances with transitive persistence

Why do we have cascading operations? You could save the laptops object, as shown in the previous example, without using any cascade mapping. Well, consider the following case:

(Notice that the convenience method addChildCategory() sets both ends of the association link in one call, as described earlier in the topic.)
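A convenience method of this kind might look like the following plain-Java sketch. The names parentCategory, childCategories, and addChildCategory() come from the text; the method body (including detaching the child from a previous parent) is an assumption, not the CaveatEmptor source. The main() method builds the three new categories of the case above, with a freshly created Computer standing in for the detached instance.

```java
import java.util.HashSet;
import java.util.Set;

class CategoryLinkSketch {

    static class Category {
        private final String name;
        private Category parentCategory;
        private final Set<Category> childCategories = new HashSet<>();

        Category(String name) { this.name = name; }

        String getName() { return name; }
        Category getParentCategory() { return parentCategory; }
        Set<Category> getChildCategories() { return childCategories; }

        // Sets both ends of the bidirectional link in one call.
        void addChildCategory(Category child) {
            if (child == null) {
                throw new IllegalArgumentException("Null child category");
            }
            // Remove the child from its previous parent, so it never sits in two collections.
            if (child.parentCategory != null) {
                child.parentCategory.childCategories.remove(child);
            }
            child.parentCategory = this;
            childCategories.add(child);
        }
    }

    public static void main(String[] args) {
        Category computer = new Category("Computer"); // stands in for the detached instance
        Category laptops = new Category("Laptops");
        Category laptopUltraPortable = new Category("Ultra-Portable");
        Category laptopTablePCs = new Category("Tablet PCs");

        laptops.addChildCategory(laptopUltraPortable);
        laptops.addChildCategory(laptopTablePCs);
        computer.addChildCategory(laptops);

        System.out.println(laptopTablePCs.getParentCategory().getName()); // Laptops
        System.out.println(computer.getChildCategories().size());        // 1
    }
}
```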

It would be undesirable to have to save each of the three new categories individually in a new Session. Fortunately, because you mapped the childCategories association (the collection) with cascade="save-update", you don’t need to. The same code shown earlier, which saved the single Laptops category, will save all three new categories in a new Session:

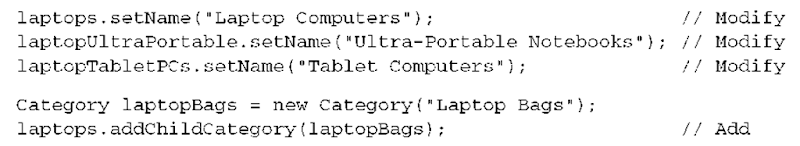

You’re probably wondering why the cascade style is called cascade="save-update" rather than merely cascade="save". Having just made all three categories persistent, suppose you make the following changes to the category hierarchy in a subsequent event, outside of a Session (you’re working on detached objects again):

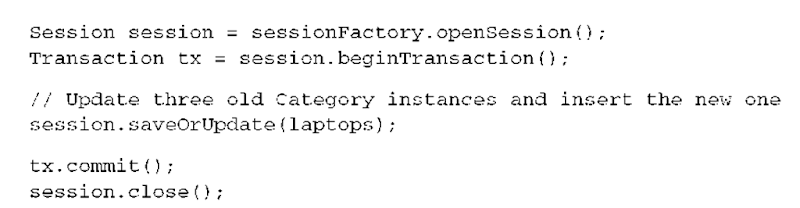

You add a new category (laptopBags) as a child of the Laptops category, and modify all three existing categories. The following code propagates all these changes to the database:

Because you specify cascade="save-update" on the childCategories collection, Hibernate determines what is needed to persist the objects to the database. In this case, it queues three SQL UPDATE statements (for laptops, laptopUltraPortable, and laptopTablePCs) and one INSERT (for laptopBags). The saveOrUpdate() method tells Hibernate to propagate the state of an instance to the database by creating a new database row if the instance is a new transient instance, or by updating the existing row if the instance is a detached instance.
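The decision the cascade makes for each instance can be modeled roughly as follows. This is a simplified sketch, not Hibernate's implementation. It assumes that a null identifier means "new" (the CaveatEmptor convention discussed below), follows only childCategories (the collection mapped with save-update), never follows parentCategory, and returns strings instead of executing SQL. The saveOrUpdate() method here is a hypothetical stand-in for Session.saveOrUpdate().

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

// Simplified model of a cascading saveOrUpdate(); not Hibernate's implementation.
class CascadeSaveOrUpdateSketch {

    static class Category {
        final Long id;            // null: never saved (transient); non-null: detached
        final String name;
        Category parentCategory;  // mapped with cascade="none": never followed
        final Set<Category> childCategories = new HashSet<>(); // cascade="save-update"

        Category(Long id, String name) {
            this.id = id;
            this.name = name;
        }

        void addChildCategory(Category child) {
            child.parentCategory = this;
            childCategories.add(child);
        }
    }

    // Returns the statements that would be queued for the instance and its cascaded children.
    static List<String> saveOrUpdate(Category root) {
        List<String> queued = new ArrayList<>();
        cascade(root, queued, new HashSet<>());
        return queued;
    }

    private static void cascade(Category category, List<String> queued, Set<Category> visited) {
        if (!visited.add(category)) {
            return; // each instance is processed once per call
        }
        // Null identifier means new: INSERT; otherwise a detached instance: UPDATE.
        queued.add((category.id == null ? "INSERT " : "UPDATE ") + category.name);
        for (Category child : category.childCategories) {
            cascade(child, queued, visited); // parentCategory is deliberately not navigated
        }
    }

    public static void main(String[] args) {
        Category computer = new Category(1L, "Computer");
        Category laptops = new Category(2L, "Laptops");
        computer.addChildCategory(laptops);
        laptops.addChildCategory(new Category(3L, "Ultra-Portable"));
        laptops.addChildCategory(new Category(4L, "Tablet PCs"));
        laptops.addChildCategory(new Category(null, "Laptop Bags")); // new, transient

        // Three UPDATEs and one INSERT; Computer is untouched. Child order may vary.
        System.out.println(saveOrUpdate(laptops));
    }
}
```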

More experienced Hibernate users use saveOrUpdate() exclusively; it’s much easier to let Hibernate decide what is new and what is old, especially in a more complex network of objects with mixed state. The only (not really serious) disadvantage of exclusive saveOrUpdate() is that it sometimes can’t guess whether an instance is old or new without firing a SELECT at the database, for example, when a class is mapped with a natural composite key and no version or timestamp property.

How does Hibernate detect which instances are old and which are new? A range of options is available. Hibernate assumes that an instance is an unsaved transient instance if:

■ The identifier property is null.

■ The version or timestamp property (if it exists) is null.

■ A new instance of the same persistent class, created by Hibernate internally, has the same database identifier value as the given instance.

■ You supply an unsaved-value in the mapping document for the class, and the value of the identifier property matches. The unsaved-value attribute is also available for version and timestamp mapping elements.

■ Entity data with the same identifier value isn’t in the second-level cache.

■ You supply an implementation of org.hibernate.Interceptor and return Boolean.TRUE from Interceptor.isTransient() after checking the instance in your own code.
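Several of these rules can be condensed into a small decision function. This is a simplified model with hypothetical parameter names, not Hibernate's code path, which also consults the second-level cache and, when no rule gives an answer, may fall back to a SELECT. The interceptor vote is modeled as a tri-state Boolean: TRUE for transient, FALSE for detached, and null for "no opinion".

```java
import java.util.Objects;

// Simplified model of the "is this instance new?" decision; not Hibernate's code.
class UnsavedDetectionSketch {

    /**
     * @param id              current identifier value of the instance
     * @param versioned       whether the class maps a version or timestamp property
     * @param version         current version value (ignored if not versioned)
     * @param unsavedValue    identifier value that marks an unsaved instance (like unsaved-value)
     * @param interceptorVote TRUE = transient, FALSE = detached, null = no opinion
     * @return true to treat the instance as new (INSERT), false as detached (UPDATE)
     */
    static boolean isTransient(Object id, boolean versioned, Object version,
                               Object unsavedValue, Boolean interceptorVote) {
        if (interceptorVote != null) {
            return interceptorVote;              // in this model, the interceptor overrides everything
        }
        if (id == null) {
            return true;                         // null identifier: never saved
        }
        if (versioned) {
            return version == null;              // null version: never saved
        }
        return Objects.equals(id, unsavedValue); // identifier matches the unsaved-value
    }

    public static void main(String[] args) {
        System.out.println(isTransient(null, false, null, null, null)); // true
        System.out.println(isTransient(42L, false, null, null, null));  // false
        System.out.println(isTransient(0L, false, null, 0L, null));     // true
    }
}
```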

In the CaveatEmptor domain model, you use the nullable type java.lang.Long as your identifier property type everywhere. Because you’re using generated, synthetic identifiers, this solves the problem. New instances have a null identifier property value, so Hibernate treats them as transient. Detached instances have a nonnull identifier value, so Hibernate treats them accordingly.

It’s rarely necessary to customize the automatic detection routines built into Hibernate. The saveOrUpdate() method always knows what to do with the given object (or any reachable objects, if cascading of save-update is enabled for an association). However, if you use a natural composite key and there is no version or timestamp property on your entity, Hibernate has to hit the database with a SELECT to find out if a row with the same composite identifier already exists. Overall, we recommend that you almost always use saveOrUpdate() instead of the individual save() or update() methods. Hibernate is smart enough to do the right thing, and it makes transitive “all of this should be in persistent state, no matter if new or old” much easier to handle.

We’ve now discussed the basic transitive persistence options in Hibernate, for saving new instances and reattaching detached instances with as few lines of code as possible. Most of the other cascading options are equally easy to understand: persist, lock, replicate, and evict do what you would expect—they make a particular Session operation transitive. The merge cascading option has effectively the same consequences as save-update.

It turns out that object deletion is a more difficult thing to grasp; the delete-orphan setting in particular causes confusion for new Hibernate users. This isn’t because it’s complex, but because many Java developers tend to forget that they’re working with a network of pointers.

Considering transitive deletion

Imagine that you want to delete a Category object. You have to pass this object to the delete() method on a Session; it’s now in removed state and will be gone from the database when the persistence context is flushed and committed. However, you’ll get a foreign key constraint violation if any other Category holds a reference to the deleted row at that time (maybe because it was still referenced as the parent of others).

It’s your responsibility to delete all links to a Category before you delete the instance. This is the normal behavior of entities that support shared references. Any value-typed property (or component) value of an entity instance is deleted automatically when the owning entity instance is deleted. Value-typed collection elements (for example, the collection of Image objects for an Item) are deleted if you remove the references from the owning collection.

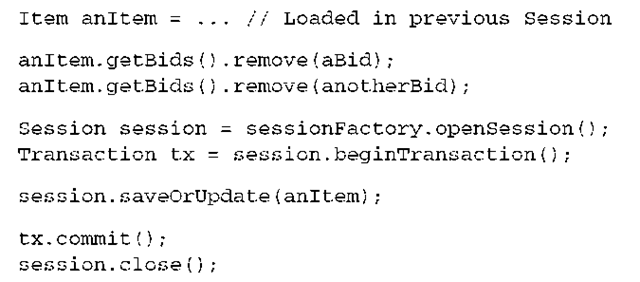

In certain situations, you want to delete an entity instance by removing a reference from a collection. In other words, you can guarantee that once you remove the reference to this entity from the collection, no other reference will exist. Therefore, Hibernate can delete the entity safely after you’ve removed that single last reference. Hibernate assumes that an orphaned entity with no references should be deleted. In the example domain model, you enable this special cascading style (available only for collections) for the bids collection in the mapping of Item:

You can now delete Bid objects by removing them from this collection—for example, in detached state:

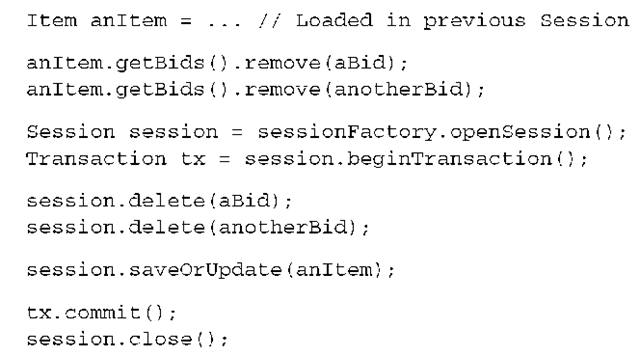

If you don’t enable the delete-orphan option, you have to explicitly delete the Bid instances after removing the last reference to them from the collection:

Automatic deletion of orphans saves you two lines of code—two lines of code that are inconvenient. Without orphan deletion, you’d have to remember all the Bid objects you wish to delete—the code that removes an element from the collection is often in a different layer than the code that executes the delete() operation. With orphan deletion enabled, you can remove orphans from the collection, and Hibernate will assume that they’re no longer referenced by any other entity. Note again that orphan deletion is implicit if you map a collection of components; the extra option is relevant only for a collection of entity references (almost always a <one-to-many>).
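The bookkeeping that makes orphan deletion work can be illustrated with a collection that remembers removed elements until flush time. Hibernate tracks this inside its own persistent collection wrappers; the OrphanTrackingSet class and its flushOrphans() method below are hypothetical and only model the idea that removing the last reference schedules a DELETE.

```java
import java.util.ArrayList;
import java.util.HashSet;
import java.util.List;
import java.util.Set;

class OrphanRemovalSketch {

    static class Bid {
        final int amount;
        Bid(int amount) { this.amount = amount; }
    }

    // Hypothetical collection that remembers every element removed since the last flush.
    static class OrphanTrackingSet<E> {
        private final Set<E> elements = new HashSet<>();
        private final List<E> removed = new ArrayList<>();

        void add(E element) {
            elements.add(element);
            removed.remove(element); // re-adding an element cancels its pending deletion
        }

        boolean remove(E element) {
            boolean wasPresent = elements.remove(element);
            if (wasPresent) {
                removed.add(element);
            }
            return wasPresent;
        }

        int size() { return elements.size(); }

        // At flush time, every removed element is an orphan that gets a DELETE.
        List<E> flushOrphans() {
            List<E> orphans = new ArrayList<>(removed);
            removed.clear();
            return orphans;
        }
    }

    public static void main(String[] args) {
        OrphanTrackingSet<Bid> bids = new OrphanTrackingSet<>();
        Bid low = new Bid(10);
        Bid high = new Bid(20);
        bids.add(low);
        bids.add(high);
        bids.remove(low); // the last reference to this Bid is gone
        System.out.println(bids.flushOrphans().size() + " orphan(s) scheduled for DELETE");
        // 1 orphan(s) scheduled for DELETE
    }
}
```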

Java Persistence and EJB 3.0 also support transitive state changes across entity associations. The standardized cascading options are similar to Hibernate’s, so you can learn them easily.

Transitive associations with JPA

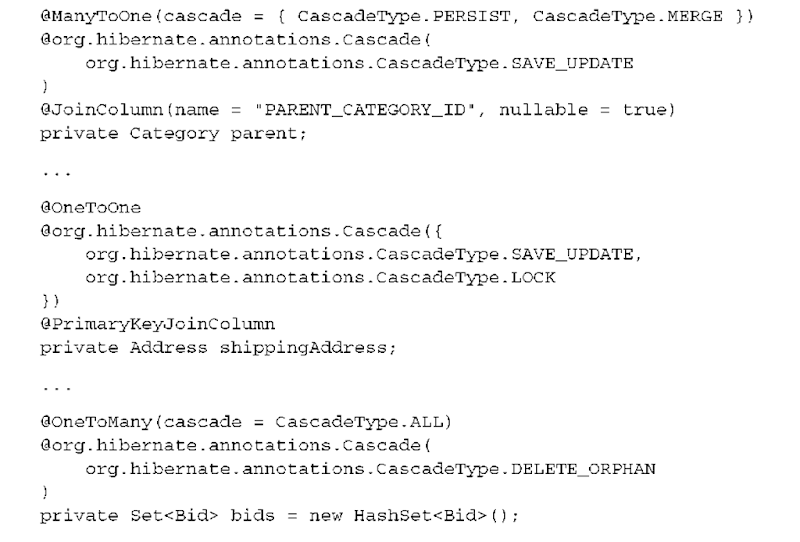

The Java Persistence specification supports annotations for entity associations that enable cascading object manipulation. Just as in native Hibernate, each EntityManager operation has an equivalent cascading style. For example, consider the Category tree (parent and children associations) mapped with annotations:

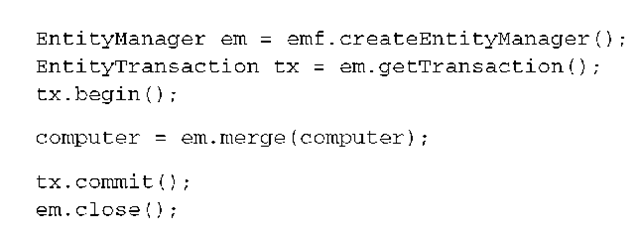

You enable standard cascading options for the persist() and merge() operations. You can now create and modify Category instances in persistent or detached state, just as you did earlier with native Hibernate:

A single call to merge() makes any modification and addition persistent. Remember that merge() isn’t the same as reattachment: It returns a new instance that you should bind to the current variable as a handle to the current state after merging.
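Because the returned handle is easy to ignore, here is a minimal in-memory model of the merge() contract described above: the state of the detached instance is copied onto the managed instance with the same identifier, and the managed instance is returned. The PersistenceContext class is hypothetical; it does not model loading from the database, cascading, or identifier generation.

```java
import java.util.HashMap;
import java.util.Map;

class MergeSketch {

    static class Category {
        final Long id;
        String name;
        Category(Long id, String name) { this.id = id; this.name = name; }
    }

    // Hypothetical stand-in for a persistence context, keyed by identifier.
    static class PersistenceContext {
        private final Map<Long, Category> managed = new HashMap<>();

        // Copies the state of 'detached' onto the managed instance and returns the managed one.
        Category merge(Category detached) {
            Category target = managed.get(detached.id);
            if (target == null) {
                // A real engine would load the row or create a new instance; here we just copy.
                target = new Category(detached.id, detached.name);
                managed.put(detached.id, target);
            } else {
                target.name = detached.name;
            }
            return target; // never the argument itself
        }
    }

    public static void main(String[] args) {
        PersistenceContext pc = new PersistenceContext();
        Category category = new Category(1L, "Computer");
        Category merged = pc.merge(category);
        System.out.println(merged == category); // false: continue working with 'merged'
    }
}
```

In application code this usually means reassigning, for example category = em.merge(category), so that later changes are made on the managed instance.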

Some cascading options aren’t standardized but Hibernate-specific. You map these annotations (they’re all in the org.hibernate.annotations package) if you’re working with the Session API or if you want an extra setting for EntityManager (for example, org.hibernate.annotations.CascadeType.DELETE_ORPHAN). Be careful, though: custom cascading options in an otherwise pure JPA application introduce implicit object state changes that may be difficult to communicate to someone who doesn’t expect them.

In the previous sections, we’ve explored the entity association cascading options with Hibernate XML mapping files and Java Persistence annotations. With transitive state changes, you save lines of code by letting Hibernate navigate and cascade modifications to associated objects. We recommend that you consider which associations in your domain model are candidates for transitive state changes and then implement this with cascading options. In practice, it’s extremely helpful if you also write down the cascading option in the UML diagram of your domain model (with stereotypes) or any other similar documentation that is shared between developers. Doing so improves communication in your development team, because everybody knows which operations and associations imply cascading state changes.

Transitive persistence isn’t the only way you can manipulate many objects with a single operation. Many applications have to modify large object sets: For example, imagine that you have to set a flag on 50,000 Item objects. This is a bulk operation that is best executed directly in the database.

Bulk and batch operations

You use object/relational mapping to move data into the application tier so that you can use an object-oriented programming language to process that data. This is a good strategy if you’re implementing a multiuser online transaction processing application, with small to medium-sized datasets involved in each unit of work.

On the other hand, operations that require massive amounts of data are best not executed in the application tier. You should move the operation closer to the location of the data, rather than the other way round. In an SQL system, the DML statements UPDATE and DELETE execute directly in the database and are often sufficient if you have to implement an operation that involves thousands of rows. More complex operations may require more complex procedures to run inside the database; hence, you should consider stored procedures as one possible strategy.

You can fall back to JDBC and SQL at all times in Hibernate or Java Persistence applications. In this section, we’ll show you how to avoid this and how to execute bulk and batch operations with Hibernate and JPA.

Bulk statements with HQL and JPA QL

The Hibernate Query Language (HQL) is similar to SQL. The main difference between the two is that HQL uses class names instead of table names, and property names instead of column names. It also understands inheritance—that is, whether you’re querying with a superclass or an interface.

The JPA query language, as defined by JPA and EJB 3.0, is a subset of HQL. Hence, all queries and statements that are valid JPA QL are also valid HQL. The statements we’ll show you now, for bulk operations that execute directly in the database, are available in JPA QL and HQL. (Hibernate adopted the standardized bulk operations from JPA.)

The available statements support updating and deleting objects directly in the database without the need to retrieve the objects into memory. A statement that can select data and insert it as new entity objects is also provided.

Updating objects directly in the database

In the previous topics, we’ve repeated that you should think about state management of objects, not how SQL statements are managed. This strategy assumes that the objects you’re referring to are available in memory. If you execute an SQL statement that operates directly on the rows in the database, any change you make doesn’t affect the in-memory objects (in whatever state they may be). In other words, any direct DML statement bypasses the Hibernate persistence context (and all caches).

A pragmatic solution that avoids this issue is a simple convention: Execute any direct DML operations first in a fresh persistence context. Then, use the Hibernate Session or EntityManager to load and store objects. This convention guarantees that the persistence context is unaffected by any statements executed earlier. Alternatively, you can selectively use the refresh() operation to reload the state of a persistent object from the database, if you know it’s been modified behind the back of the persistence context.
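The staleness problem, and why refresh() fixes it, can be shown with a toy model: one map stands in for the ITEM table and another for the persistence context. The bulkUpdateActive(), find(), and refresh() methods and the single ACTIVE column are hypothetical; no Hibernate API is involved.

```java
import java.util.HashMap;
import java.util.Map;

class BulkDmlStalenessSketch {

    static class Item {
        final long id;
        boolean active;
        Item(long id, boolean active) { this.id = id; this.active = active; }
    }

    // "Database": rows keyed by primary key (only the ACTIVE column is modeled).
    final Map<Long, Boolean> itemTable = new HashMap<>();
    // "Persistence context": instances already loaded in this unit of work.
    final Map<Long, Item> context = new HashMap<>();

    // Returns the cached instance if present; reads the "database" only on a miss.
    Item find(long id) {
        return context.computeIfAbsent(id, key -> new Item(key, itemTable.get(key)));
    }

    // Like a direct UPDATE: changes rows only, never the in-memory instances.
    int bulkUpdateActive(boolean value) {
        itemTable.replaceAll((key, old) -> value);
        return itemTable.size();
    }

    // Reloads the state of one instance from the "database".
    void refresh(Item item) {
        item.active = itemTable.get(item.id);
    }

    public static void main(String[] args) {
        BulkDmlStalenessSketch s = new BulkDmlStalenessSketch();
        s.itemTable.put(1L, false);
        Item item = s.find(1L);                // loaded into the context
        s.bulkUpdateActive(true);              // bypasses the context
        System.out.println(s.find(1L).active); // false: the cached instance is stale
        s.refresh(item);
        System.out.println(item.active);       // true
    }
}
```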

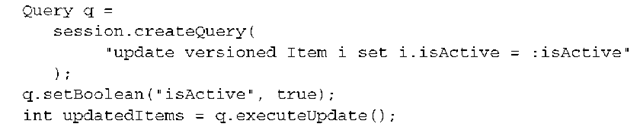

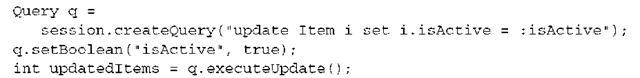

Hibernate and JPA offer DML operations that are a little more powerful than plain SQL. Let’s look at the first operation in HQL and JPA QL, an UPDATE:

(The versioned keyword is not allowed if your version or timestamp property relies on a custom org.hibernate.usertype.UserVersionType.)

This HQL statement (or JPA QL statement, if executed with the EntityManager) looks like an SQL statement. However, it uses an entity name (class name) and a property name. It’s also integrated into Hibernate’s parameter binding API. The number of updated entity objects is returned—not the number of updated rows. Another benefit is that the HQL (JPA QL) UPDATE statement works for inheritance hierarchies:

The persistence engine knows how to execute this update, even if several SQL statements have to be generated; it updates several base tables (because Credit-Card is mapped to several superclass and subclass tables). This example also doesn’t contain an alias for the entity class—it’s optional. However, if you use an alias, all properties must be prefixed with an alias. Also note that HQL (and JPA QL) UPDATE statements can reference only a single entity class; you can’t write a single statement to update Item and CreditCard objects simultaneously, for example. Subqueries are allowed in the WHERE clause; any joins are allowed only in these subqueries.

Direct DML operations, by default, don’t affect any version or timestamp values of the affected entities (this is standardized in Java Persistence). With HQL, however, you can increment the version number of directly modified entity instances:

The same rules as for UPDATE statements apply: no joins, single entity class only, optional aliases, subqueries allowed in the WHERE clause.

Just like SQL bulk operations, HQL (and JPA QL) bulk operations don’t affect the persistence context, they bypass any cache. Credit cards or items in memory aren’t updated if you execute one of these examples.

The last HQL bulk operation can create objects directly in the database.

Creating new objects directly in the database

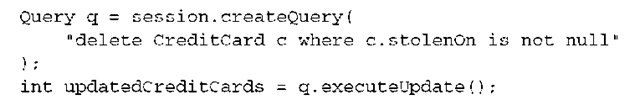

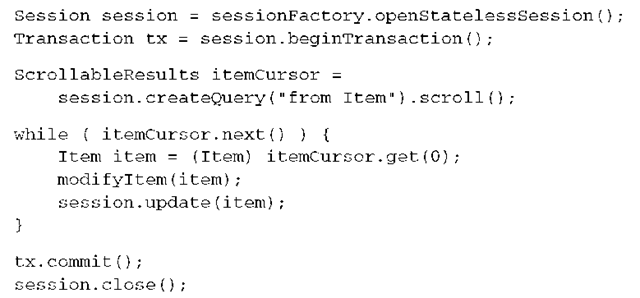

The second HQL (JPA QL) bulk operation we introduce is the DELETE:

Let’s assume that all your customers’ Visa cards have been stolen. You write two bulk operations to mark the day they were stolen (well, the day you discovered the theft) and to remove the compromised credit-card data from your records. Because you work for a responsible company, you have to report the stolen credit cards to the authorities and affected customers. So, before you delete the records, you extract everything that was stolen and create a few hundred (or thousand) StolenCreditCard objects. This is a new class you write just for that purpose:

You now map this class to its own STOLEN_CREDIT_CARD table, either with an XML file or JPA annotations (you shouldn’t have any problem doing this on your own). Next, you need a statement that executes directly in the database, retrieves all compromised credit cards, and creates new StolenCreditCard objects:

This operation does two things: First, the details of CreditCard records and the respective owner (a User) are selected. The result is then directly inserted into the table to which the StolenCreditCard class is mapped.
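The statement itself isn't reproduced here, but the shape of the operation can be mirrored in memory: filter CreditCard rows, pull in owner details, and build one StolenCreditCard per row, each property receiving a selected value of the same type. This is only an analogy under assumptions. The simplified classes and their few properties (type, number, owner login) are hypothetical, and the real INSERT … SELECT never loads objects into the application.

```java
import java.util.Arrays;
import java.util.List;
import java.util.stream.Collectors;

// In-memory analogy of INSERT ... SELECT; all classes are simplified, hypothetical versions.
class InsertSelectSketch {

    static class User {
        final String username;
        User(String username) { this.username = username; }
    }

    static class CreditCard {
        final String type;
        final String number;
        final User owner;
        CreditCard(String type, String number, User owner) {
            this.type = type;
            this.number = number;
            this.owner = owner;
        }
    }

    // Target class: each property receives a selected value of the same type.
    static class StolenCreditCard {
        Long id; // left null here; a database-side generator would assign it
        final String type;
        final String number;
        final String ownerLogin;
        StolenCreditCard(String type, String number, String ownerLogin) {
            this.type = type;
            this.number = number;
            this.ownerLogin = ownerLogin;
        }
    }

    // "Select" card and owner details for one card type, then "insert" one object per row.
    static List<StolenCreditCard> insertSelect(List<CreditCard> cards, String type) {
        return cards.stream()
                .filter(card -> card.type.equals(type))
                .map(card -> new StolenCreditCard(card.type, card.number, card.owner.username))
                .collect(Collectors.toList());
    }

    public static void main(String[] args) {
        User alice = new User("alice");
        User bob = new User("bob");
        List<CreditCard> cards = Arrays.asList(
                new CreditCard("VISA", "1111", alice),
                new CreditCard("MASTERCARD", "2222", alice),
                new CreditCard("VISA", "3333", bob));
        for (StolenCreditCard stolen : insertSelect(cards, "VISA")) {
            System.out.println(stolen.number + " owned by " + stolen.ownerLogin);
        }
    }
}
```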

Note the following:

■ The properties that are the target of an INSERT … SELECT (in this case, the StolenCreditCard properties you list) have to be for a particular subclass, not an (abstract) superclass. Because StolenCreditCard isn’t part of an inheritance hierarchy, this isn’t an issue.

■ The types returned by the SELECT must match the types required for the INSERT—in this case, lots of string types and a component (the same type of component for selection and insertion).

■ The database identifier for each StolenCreditCard object will be generated automatically by the identifier generator you map it with. Alternatively, you can add the identifier property to the list of inserted properties and supply a value through selection. Note that automatic generation of identifier values works only for identifier generators that operate directly inside the database, such as sequences or identity fields.

■ If the generated objects are of a versioned class (with a version or timestamp property), a fresh version (zero, or the current timestamp) will also be generated. Alternatively, you can select a version (or timestamp) value and add the version (or timestamp) property to the list of inserted properties.

Finally, note that INSERT … SELECT is available only with HQL; JPA QL doesn’t standardize this kind of statement—hence, your statement may not be portable.

HQL and JPA QL bulk operations cover many situations in which you’d usually resort to plain SQL. On the other hand, sometimes you can’t exclude the application tier in a mass data operation.

Processing with batches

Imagine that you have to manipulate all Item objects, and that the changes you have to make aren’t as trivial as setting a flag (which you’ve done with a single statement previously). Let’s also assume that you can’t create an SQL stored procedure, for whatever reason (maybe because your application has to work on database-management systems that don’t support stored procedures). Your only choice is to write the procedure in Java and to retrieve a massive amount of data into memory to run it through the procedure.

You should execute this procedure by batching the work. That means you create many smaller datasets instead of a single dataset that wouldn’t even fit into memory.

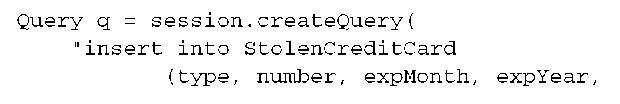

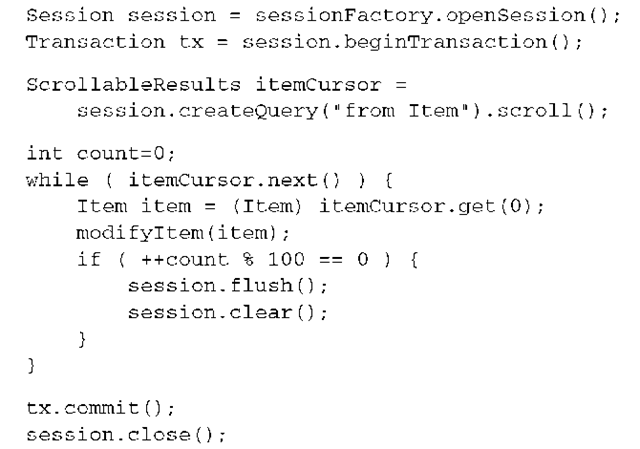

Writing a procedure with batch updates

The following code loads 100 Item objects at a time for processing:

You use an HQL query (a simple one) to load all Item objects from the database. But instead of retrieving the result of the query completely into memory, you open an online cursor. A cursor is a pointer to a result set that stays in the database. You can control the cursor with the ScrollableResults object and move it along the result. The get(int i) call retrieves a single object into memory, the object the cursor is currently pointing to. Each call to next() forwards the cursor to the next object. To avoid memory exhaustion, you flush() and clear() the persistence context before loading the next 100 objects into it.

A flush of the persistence context writes the changes you made to the last 100 Item objects to the database. For best performance, you should set the Hibernate configuration property hibernate.jdbc.batch_size, which controls JDBC statement batching, to the same size as your procedure batch: 100. All UPDATE statements that are executed during flushing are then also batched at the JDBC level.
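The loop just described can be modeled in plain Java to show the flush-and-clear rhythm and how it bounds memory. This is a sketch under stated assumptions, not Hibernate code: `FakePersistenceContext` and `BatchProcessor` are hypothetical classes, a `java.util.Iterator` plays the role of the `ScrollableResults` cursor, and `flush()` only counts how often pending changes would be written.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.function.Consumer;

/** Hypothetical stand-in for a persistence context; it only tracks managed objects. */
class FakePersistenceContext<T> {
    private final List<T> managed = new ArrayList<>();
    int flushes = 0;   // number of flushes that had pending work
    int peakSize = 0;  // largest number of objects held at any time

    void manage(T entity) {
        managed.add(entity);
        peakSize = Math.max(peakSize, managed.size());
    }

    /** Models writing pending changes; a real flush would send batched UPDATEs. */
    void flush() {
        if (!managed.isEmpty()) {
            flushes++;
        }
    }

    /** Models session.clear(): every managed object becomes detached. */
    void clear() {
        managed.clear();
    }
}

class BatchProcessor {
    /** Walks the cursor, flushing and clearing after every batchSize objects. */
    static <T> void process(Iterator<T> cursor, int batchSize,
                            Consumer<T> work, FakePersistenceContext<T> ctx) {
        int count = 0;
        while (cursor.hasNext()) {
            T entity = cursor.next();   // plays the role of next() plus get(0)
            ctx.manage(entity);
            work.accept(entity);        // the nontrivial modification
            if (++count % batchSize == 0) {
                ctx.flush();
                ctx.clear();
            }
        }
        ctx.flush();                    // the remainder, as committing would do
        ctx.clear();
    }

    public static void main(String[] args) {
        List<StringBuilder> items = new ArrayList<>();
        for (int i = 0; i < 250; i++) {
            items.add(new StringBuilder("item" + i));
        }
        FakePersistenceContext<StringBuilder> ctx = new FakePersistenceContext<>();
        process(items.iterator(), 100, sb -> sb.append("!"), ctx);
        System.out.println(ctx.flushes + " flushes, peak " + ctx.peakSize);
        // prints: 3 flushes, peak 100
    }
}
```

With 250 items and a batch size of 100, the model flushes three times (after 100, after 200, and once more for the remaining 50 when the unit of work ends) and never holds more than 100 objects. In real code, session.flush() and session.clear() sit at the same two points in the loop.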

(Note that you should disable the second-level cache for any batch operations; otherwise, each modification of an object during the batch procedure must be propagated to the second-level cache for that persistent class. This is an unnecessary overhead. You’ll learn how to control the second-level cache in the next topic.)

The Java Persistence API unfortunately doesn’t support cursor-based query results. You have to use org.hibernate.Session and org.hibernate.Query to access this feature.

The same technique can be used to create and persist a large number of objects.

Inserting many objects in batches

If you have to create a few hundred or thousand objects in a unit of work, you may run into memory exhaustion. Every object that is passed to save() or persist() is added to the persistence context cache.

A straightforward solution is to flush and clear the persistence context after a certain number of objects. You effectively batch the inserts:

Here you create and persist 100,000 objects, 100 at a time. Again, remember to set the hibernate.jdbc.batch_size configuration property to an equivalent value and disable the second-level cache for the persistent class. Caveat: Hibernate silently disables JDBC batch inserts if your entity is mapped with an identity identifier generator; many JDBC drivers don’t support batching in that case.

Another option that completely avoids memory consumption of the persistence context (by effectively disabling it) is the StatelessSession interface.

Using a stateless Session

The persistence context is an essential feature of the Hibernate and Java Persistence engine. Without a persistence context, you wouldn’t be able to manipulate object state and have Hibernate detect your changes automatically. Many other things also wouldn’t be possible.

However, Hibernate offers you an alternative interface if you prefer to work with your database by executing statements. This statement-oriented interface, org.hibernate.StatelessSession, feels and works like plain JDBC, except that you get the benefit of mapped persistent classes and Hibernate’s database portability.

Imagine that you want to execute the same “update all Item objects” procedure you wrote in an earlier example with this interface:

The batching is gone in this example—you open a StatelessSession. You no longer work with objects in persistent state; everything that is returned from the database is in detached state. Hence, after modifying an Item object, you need to call update() to make your changes permanent. Note that this call no longer reattaches the detached and modified item. It executes an immediate SQL UPDATE; the item is again in detached state after the command.
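To make the difference in execution style concrete, here is a plain-Java model. Neither class is Hibernate API: `WriteBehindModel` is a hypothetical stand-in for a regular Session with dirty checking and write-behind, and `ImmediateModel` for a StatelessSession whose update() runs a statement at once. Both only record the SQL they would issue; the statement text is illustrative.

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

/** Hypothetical model of a regular Session: modifications are queued until flush. */
class WriteBehindModel {
    private final Set<Long> dirty = new LinkedHashSet<>();
    final List<String> executedSql = new ArrayList<>();

    /** Dirty checking: changing a managed object only marks it as dirty. */
    void modify(long itemId) {
        dirty.add(itemId);
    }

    /** Write-behind: one UPDATE per dirty object, however often it changed. */
    void flush() {
        for (Long id : dirty) {
            executedSql.add("update ITEM set ... where ITEM_ID = " + id);
        }
        dirty.clear();
    }
}

/** Hypothetical model of a StatelessSession: each update() call executes at once. */
class ImmediateModel {
    final List<String> executedSql = new ArrayList<>();

    void update(long itemId) {
        executedSql.add("update ITEM set ... where ITEM_ID = " + itemId);
    }
}
```

Modifying item 1 three times and item 2 once yields two UPDATE statements at flush time in the first model, but three update() calls produce three immediate statements in the second. With the stateless style, a modification without an update() call is never written.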

Disabling the persistence context and working with the StatelessSession interface has some other serious consequences and conceptual limitations (at least, if you compare it to a regular Session):

■ A StatelessSession doesn’t have a persistence context cache and doesn’t interact with the second-level cache or the query cache. Everything you do results in immediate SQL operations.

■ Modifications to objects aren’t automatically detected (no dirty checking), and SQL operations aren’t executed as late as possible (no write-behind).

■ No modification of an object and no operation you call are cascaded to any associated instance. You’re working with instances of a single entity class.

■ Any modifications to a collection that is mapped as an entity association (one-to-many, many-to-many) are ignored. Only collections of value types are considered. You therefore shouldn’t map entity associations as collections; map only the noninverse side, the many-to-one with the foreign key, and handle the relationship through that side only. Write a query to obtain data you’d otherwise retrieve by iterating through a mapped collection.

■ The StatelessSession bypasses any enabled org.hibernate.Interceptor and can’t be intercepted through the event system (both features are discussed later in this topic).

■ You have no guaranteed scope of object identity. Executing the same query twice produces two different in-memory detached instances for the same database row. This can lead to data-aliasing effects if you don’t carefully implement the equals() method of your persistent classes.
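The aliasing problem in the last point is usually handled by implementing equals() and hashCode() on a business key rather than on object identity. The sketch below is illustrative only: `DetachedItem` is a hypothetical class, and treating the seller identifier plus a creation timestamp as the business key is an assumption made for the example.

```java
import java.util.HashSet;
import java.util.Objects;
import java.util.Set;

/** Hypothetical detached item; (sellerId, created) is ASSUMED to be its business key. */
final class DetachedItem {
    private final long sellerId;
    private final String created;   // e.g. an ISO-8601 timestamp, never changed
    String description;             // ordinary mutable state, not part of the key

    DetachedItem(long sellerId, String created, String description) {
        this.sellerId = sellerId;
        this.created = Objects.requireNonNull(created);
        this.description = description;
    }

    @Override
    public boolean equals(Object o) {
        if (this == o) return true;
        if (!(o instanceof DetachedItem)) return false;
        DetachedItem other = (DetachedItem) o;
        return sellerId == other.sellerId && created.equals(other.created);
    }

    @Override
    public int hashCode() {
        return Objects.hash(sellerId, created);
    }

    public static void main(String[] args) {
        // Two executions of the same query return two instances for one row.
        DetachedItem first = new DetachedItem(7L, "2024-01-15T10:15:00", "Old lamp");
        DetachedItem second = new DetachedItem(7L, "2024-01-15T10:15:00", "Old lamp");
        Set<DetachedItem> items = new HashSet<>();
        items.add(first);
        items.add(second);
        System.out.println((first == second) + " " + first.equals(second) + " " + items.size());
        // prints: false true 1
    }
}
```

Without the two overridden methods, the set would hold two copies of the same item.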

Good use cases for a StatelessSession are rare; you may prefer it if manual batching with a regular Session becomes cumbersome. Remember that the insert(), update(), and delete() operations have naturally different semantics than the equivalent save(), update(), and delete() operations on a regular Session. (They probably should have different names, too; the StatelessSession API was added to Hibernate ad hoc, without much planning. The Hibernate developer team discussed renaming this interface in a future version of Hibernate; you may find it under a different name in the Hibernate version you’re using.)

So far in this topic, we’ve shown how you can store and manipulate many objects with the most efficient strategy through cascading, bulk, and batch operations. We’ll now consider interception and data filtering, and how you can hook into Hibernate’s processing in a transparent fashion.

Data filtering and interception

Imagine that you don’t want to see all the data in your database. For example, the currently logged-in application user may not have the rights to see everything. Usually, you add a condition to your queries and restrict the result dynamically. This becomes difficult if you have to handle a concern such as security or temporal data (“Show me only data from last week,” for example). Even more difficult is a restriction on collections; if you iterate through the Item objects in a Category, you’ll see all of them.

One possible solution for this problem uses database views. SQL doesn’t standardize dynamic views—views that can be restricted and moved at runtime with some parameter (the currently logged-in user, a time period, and so on). Few databases offer more flexible view options, and if they’re available, they’re pricey and/or complex (Oracle offers a Virtual Private Database addition, for example).

Hibernate provides an alternative to dynamic database views: data filters with dynamic parameterization at runtime. We’ll look at the use cases and application of data filters in the following sections.

Another common issue in database applications is the handling of crosscutting concerns that require knowledge of the data that is stored or loaded. For example, imagine that you have to write an audit log of every data modification in your application. Hibernate offers an org.hibernate.Interceptor interface that allows you to hook into the internal processing of Hibernate and execute side effects such as audit logging. You can do much more with interception, and we’ll show you a few tricks after we’ve completed our discussion of data filters.

The Hibernate core is based on an event/listener model, a result of the last refactoring of the internals. If an object must be loaded, for example, a LoadEvent is fired. The Hibernate core is implemented as default listeners for such events, and this system has public interfaces that let you plug in your own listeners if you like. The event system offers complete customization of any imaginable operation that happens inside Hibernate, and should be considered a more powerful alternative to interception; we’ll show you how to write a custom listener and handle events yourself.

Let’s first apply dynamic data filtering in a unit of work.

Dynamic data filters

The first use case for dynamic data filtering is related to data security. A User in CaveatEmptor has a ranking property. Now assume that users can only bid on items that are offered by other users with an equal or lower rank. In business terms, you have several groups of users that are defined by an arbitrary rank (a number), and users can trade only within their group.

You can implement this with complex queries. For example, let’s say you want to show all the Item objects in a Category, but only those items that are sold by users in the same group (with an equal or lower rank than the logged-in user). You’d write an HQL or Criteria query to retrieve these items. However, if you use aCategory.getItems() and navigate to these objects, all Item instances would be visible.

You solve this problem with a dynamic filter.

Defining a data filter

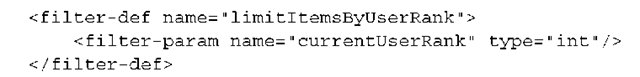

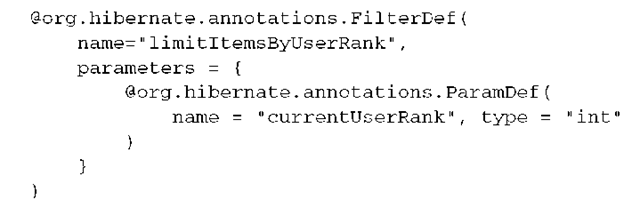

A dynamic data filter is defined in mapping metadata with a globally unique name. You can add this global filter definition in any XML mapping file you like, as long as it’s inside a <hibernate-mapping> element:

This filter is named limitItemsByUserRank and accepts one runtime argument of type int. You can put the equivalent @org.hibernate.annotations.FilterDef annotation on any class you like (or into package metadata); it has no effect on the behavior of that class:

The filter is inactive now; nothing (except maybe the name) indicates that it’s supposed to apply to Item objects. You have to apply and implement the filter on the classes or collections you want to filter.

Applying and implementing the filter

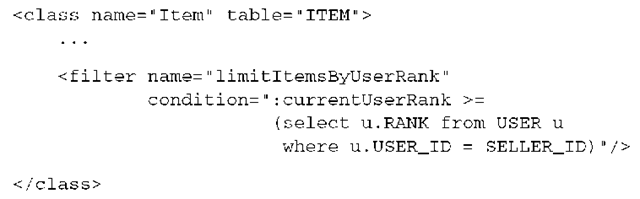

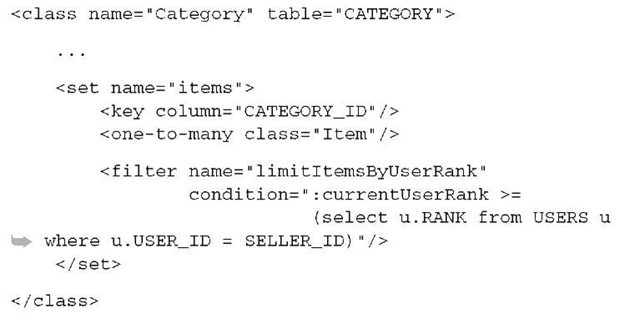

You want to apply the defined filter on the Item class so that no items are visible if the logged-in user doesn’t have the necessary rank:

The <filter> element can be set for a class mapping. It applies a named filter to instances of that class. The condition is an SQL expression that’s passed through directly to the database system, so you can use any SQL operator or function. It must evaluate to true if a record should pass the filter. In this example, you use a subquery to obtain the rank of the seller of the item. Unqualified columns, such as SELLER_ID, refer to the table to which the entity class is mapped. If the currently logged-in user’s rank isn’t greater than or equal to the rank returned by the subquery, the Item instance is filtered out.

Here is the same in annotations on the Item entity:

You can apply several filters by grouping them within a @org.hibernate.annotations.Filters annotation. A defined and applied filter, if enabled for a particular unit of work, filters out any Item instance that doesn’t pass the condition. Let’s enable it.

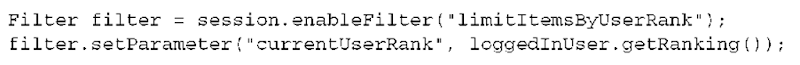

Enabling the filter

You’ve defined a data filter and applied it to a persistent class. It’s still not filtering anything; it must be enabled and parameterized in the application for a particular Session (the EntityManager doesn’t support this API—you have to fall back to Hibernate interfaces for this functionality):

You enable the filter by name; this method returns a Filter instance. This object accepts the runtime arguments. You must set the parameters you have defined. Other useful methods of the Filter are getFilterDefinition() (which allows you to iterate through the parameter names and types) and validate() (which throws a HibernateException if you forgot to set a parameter). You can also set a list of arguments with setParameterList(); this is mostly useful if your SQL condition contains an expression with a quantifier operator (the IN operator, for example).

Now every HQL or Criteria query that is executed on the filtered Session restricts the returned Item instances:

Two object-retrieval methods are not filtered: retrieval by identifier and navigational access to Item instances (such as from a Category with aCategory.getItems()).

Retrieval by identifier can’t be restricted with a dynamic data filter. It’s also conceptually wrong: If you know the identifier of an Item, why shouldn’t you be allowed to see it? The solution is to filter the identifiers—that is, not expose identifiers that are restricted in the first place. Similar reasoning applies to filtering of many-to-one or one-to-one associations. If a many-to-one association was filtered (for example, by returning null if you call anItem.getSeller()), the multiplicity of the association would change! This is also conceptually wrong and not the intent of filters.
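The filtering rules discussed so far can be summarized in a small plain-Java model. It is not Hibernate's Filter API: `RankedItem`, `RankFilterModel`, and `FilteredItemStore` are hypothetical classes, `passes()` only mirrors the SQL condition (the logged-in user's rank must be greater than or equal to the seller's rank), and validating before every query is the model's own choice. Queries honor an enabled filter, while lookup by identifier ignores it.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/** Hypothetical row: an item together with its seller's rank. */
final class RankedItem {
    final long id;
    final int sellerRank;

    RankedItem(long id, int sellerRank) {
        this.id = id;
        this.sellerRank = sellerRank;
    }
}

/** Hypothetical model of an enabled "limitItemsByUserRank" filter. */
final class RankFilterModel {
    private final Map<String, Object> params = new HashMap<>();

    RankFilterModel setParameter(String name, Object value) {
        params.put(name, value);
        return this;
    }

    /** Like the described validate(): complain if the argument was never set. */
    void validate() {
        if (!params.containsKey("currentUserRank")) {
            throw new IllegalStateException("Parameter currentUserRank not set");
        }
    }

    /** Mirrors the SQL condition: currentUserRank >= rank of the item's seller. */
    boolean passes(RankedItem item) {
        int currentUserRank = (Integer) params.get("currentUserRank");
        return currentUserRank >= item.sellerRank;
    }
}

/** Hypothetical unit of work in which the filter may be enabled. */
final class FilteredItemStore {
    private final List<RankedItem> rows;   // stands in for the ITEM table
    private RankFilterModel enabled;       // null while no filter is enabled

    FilteredItemStore(List<RankedItem> rows) {
        this.rows = rows;
    }

    RankFilterModel enableFilter() {
        enabled = new RankFilterModel();
        return enabled;
    }

    /** Queries are restricted by an enabled filter. */
    List<RankedItem> queryAll() {
        if (enabled != null) {
            enabled.validate();
        }
        List<RankedItem> result = new ArrayList<>();
        for (RankedItem row : rows) {
            if (enabled == null || enabled.passes(row)) {
                result.add(row);
            }
        }
        return result;
    }

    /** Retrieval by identifier is never filtered. */
    RankedItem get(long id) {
        for (RankedItem row : rows) {
            if (row.id == id) {
                return row;
            }
        }
        return null;
    }
}
```

Enabling the filter with a rank of 3 hides the item whose seller has rank 5 from queryAll(), but get(3) still returns it, which is why restricted identifiers shouldn't be exposed in the first place.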

You can solve the second issue, navigational access, by applying the same filter on a collection.

Filtering collections

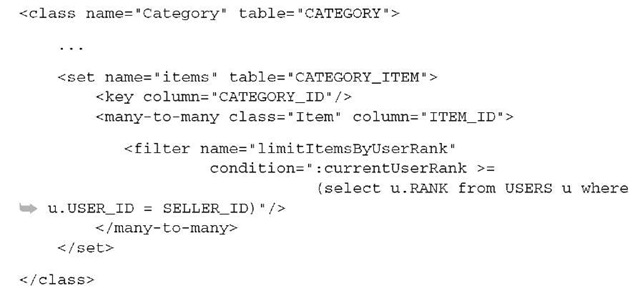

So far, calling aCategory.getItems() returns all Item instances that are referenced by that Category. This can be restricted with a filter applied to a collection:

In this example, you don’t apply the filter to the collection element but to the <many-to-many>. Now the unqualified SELLER_ID column in the subquery references the target of the association, the ITEM table, not the CATEGORY_ITEM join table of the association. With annotations, you can apply a filter on a many-to-many association with @org.hibernate.annotations.FilterJoin-Table(s) on the @ManyToMany field or getter method.

With annotations, you just place the @org.hibernate.annotations.Filter(s) on the right field or getter method, next to the @OneToMany or @ManyToMany annotation.

If you now enable the filter in a Session, all iteration through a collection of items of a Category is filtered.

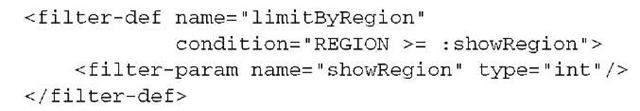

If you have a default filter condition that applies to many entities, declare it with your filter definition:

If applied to an entity or collection with or without an additional condition and enabled in a Session, this filter always compares the REGION column of the entity table with the runtime showRegion argument.

There are many other excellent use cases for dynamic data filters.

Use cases for dynamic data filters

Hibernate’s dynamic filters are useful in many situations. The only limitation is your imagination and your skill with SQL expressions. Typical use cases are as follows:

■ Security limits—A common problem is the restriction of data access given some arbitrary security-related condition. This can be the rank of a user, a particular group the user must belong to, or a role the user has been assigned.

If the association between Category and Item was one-to-many, you’d created the following mapping:

■ Regional data—Often, data is stored with a regional code (for example, all business contacts of a sales team). Each salesperson works only on a dataset that covers their region.

■ Temporal data—Many enterprise applications need to apply time-based views on data (for example, to see a dataset as it was last week). Hibernate’s data filters can provide basic temporal restrictions that help you implement this kind of functionality.

Another useful concept is the interception of Hibernate internals, to implement orthogonal concerns.

Intercepting Hibernate events

Let’s assume that you want to write an audit log of all object modifications. This audit log is kept in a database table that contains information about changes made to other data—specifically, about the event that results in the change. For example, you may record information about creation and update events for auction Items. The information that is recorded usually includes the user, the date and time of the event, what type of event occurred, and the item that was changed.

Audit logs are often handled using database triggers. On the other hand, it’s sometimes better for the application to take responsibility, especially if portability between different databases is required.

You need several elements to implement audit logging. First, you have to mark the persistent classes for which you want to enable audit logging. Next, you define what information should be logged, such as the user, date, time, and type of modification. Finally, you tie it all together with an org.hibernate.Interceptor that automatically creates the audit trail.

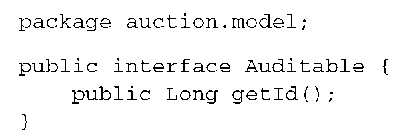

Creating the marker interface

First, create a marker interface, Auditable. You use this interface to mark all persistent classes that should be automatically audited:

This interface requires that a persistent entity class exposes its identifier with a getter method; you need this property to log the audit trail. Enabling audit logging for a particular persistent class is then trivial. You add it to the class declaration, for example, for Item:

Of course, if the Item class didn’t expose a public getId() method, you’d need to add it.
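In Java, the marker interface and its use on Item could look like the following sketch. Only the getId() requirement comes from the text; the remaining Item members are simplified assumptions for illustration.

```java
/** Marks entities whose creation and modification should be audited. */
interface Auditable {
    Long getId();
}

/** Simplified Item; the real class has many more properties (assumed here). */
class Item implements Auditable {
    private Long id;       // set by Hibernate, at the latest during flush
    private String name;

    Item() {}              // no-argument constructor for the persistence engine

    Item(String name) {
        this.name = name;
    }

    @Override
    public Long getId() {
        return id;
    }

    public String getName() {
        return name;
    }
}
```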

Creating and mapping the log record

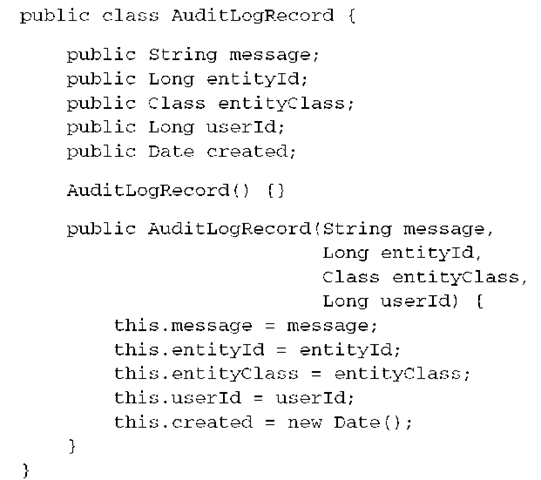

Now create a new persistent class, AuditLogRecord. This class represents the information you want to log in your audit database table:

You shouldn’t consider this class part of your domain model! Hence you expose all attributes as public; it’s unlikely you’ll have to refactor that part of the application. The AuditLogRecord is part of your persistence layer and possibly shares the same package with other persistence-related classes, such as HibernateUtil or your custom UserType extensions.
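A minimal version of the class might look like this. The field list is an assumption based on the information the text says an audit log usually records (the user, the date and time, the type of event, and the changed entity); adapt it to your own AUDIT_LOG table. Public fields without getters match the field-access mapping described next.

```java
import java.util.Date;

/** One row of the AUDIT_LOG table; a persistence-layer class, not domain model. */
class AuditLogRecord {
    public String message;          // type of event, e.g. "create" or "update"
    public Long entityId;           // identifier of the changed entity
    public Class<?> entityClass;    // class of the changed entity
    public Long userId;             // who caused the change
    public Date created;            // when the event was logged

    AuditLogRecord() {}             // no-argument constructor for Hibernate

    public AuditLogRecord(String message, Long entityId,
                          Class<?> entityClass, Long userId) {
        this.message = message;
        this.entityId = entityId;
        this.entityClass = entityClass;
        this.userId = userId;
        this.created = new Date();
    }
}
```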

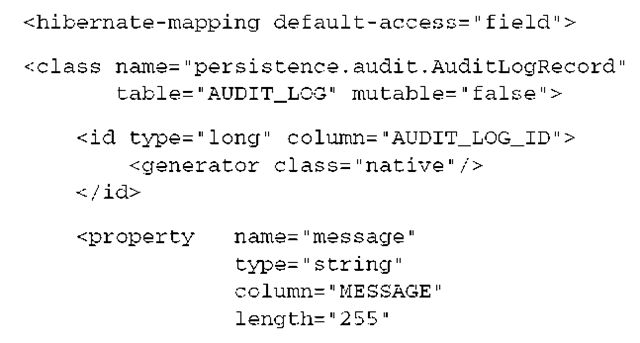

Next, map this class to the AUDIT_LOG database table:

You set the default access strategy to field access (there are no getter methods in the class) and, because AuditLogRecord objects are never updated, map the class as mutable="false". Note that you don’t declare an identifier property name (the class has no such property); Hibernate therefore manages the surrogate key of an AuditLogRecord internally. You aren’t planning to use the AuditLogRecord in a detached fashion, so it doesn’t need to contain an identifier property. However, if you mapped this class with annotations as a Java Persistence entity, an identifier property would be required. We think that you won’t have any problems creating this entity mapping on your own.

Audit logging is a somewhat orthogonal concern to the business logic that causes the loggable event. It’s possible to mix logic for audit logging with the business logic, but in many applications it’s preferable that audit logging be handled in a central piece of code, transparently to the business logic (and especially when you rely on cascading options). Creating a new AuditLogRecord and saving it whenever an Item is modified is certainly something you wouldn’t do manually. Hibernate offers an Interceptor extension interface.

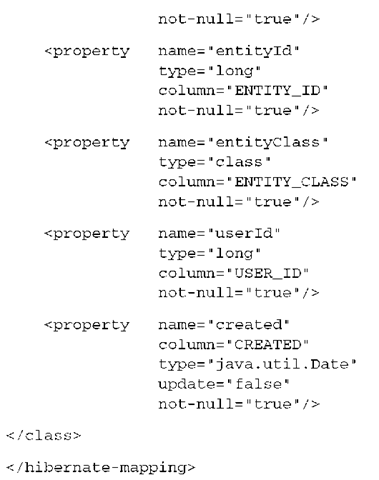

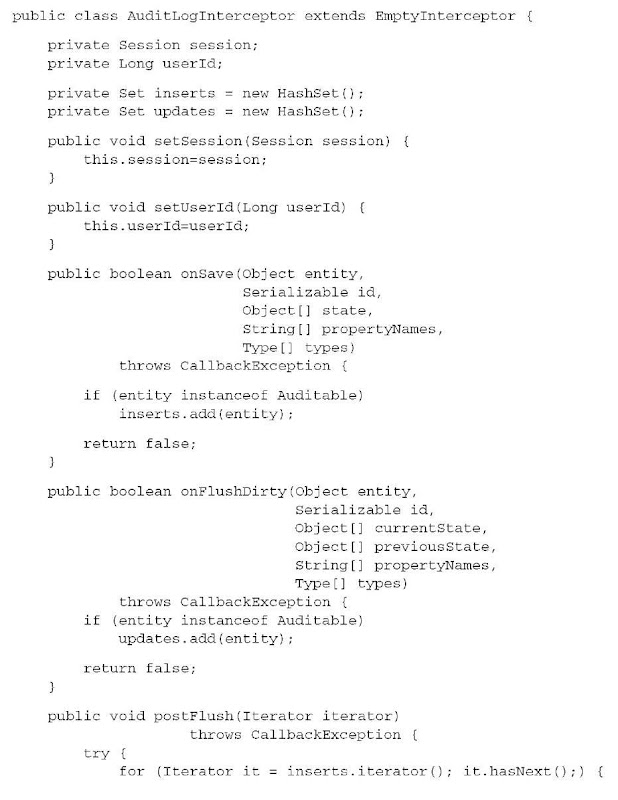

Writing an interceptor

A logEvent() method should be called automatically when you call save(). The best way to do this with Hibernate is to implement the Interceptor interface. Listing 12.1 shows an interceptor for audit logging.

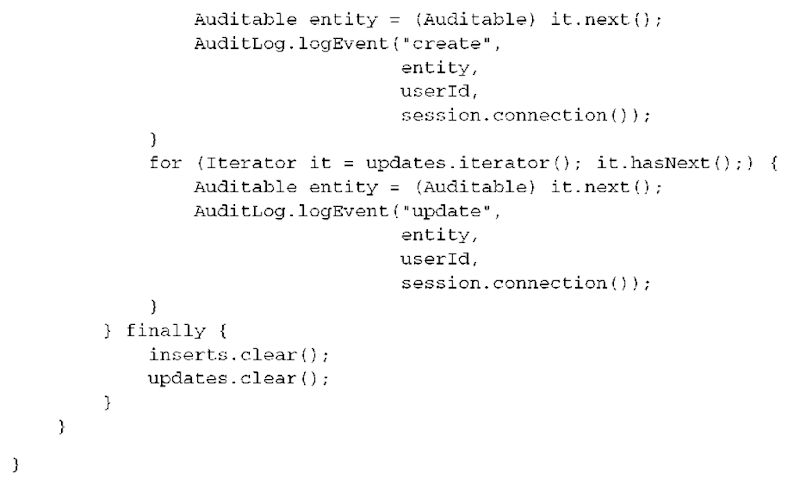

Listing 12.1 Implementation of an interceptor for audit logging

The Hibernate Interceptor API has many more methods than shown in this example. Because you’re extending the EmptyInterceptor, instead of implementing the interface directly, you can rely on default semantics of all methods you don’t override. The interceptor has two interesting aspects.

This interceptor needs the session and userId attributes to do its work; a client using this interceptor must set both properties. The other interesting aspect is the audit-log routine in onSave() and onFlushDirty(): You add new and updated entities to the inserts and updates collections. The onSave() interceptor method is called whenever an entity is saved by Hibernate; the onFlushDirty() method is called whenever Hibernate detects a dirty object.

The actual logging of the audit trail is done in the postFlush() method, which Hibernate calls after executing the SQL that synchronizes the persistence context with the database. You use the static call AuditLog.logEvent() (a class and method we discuss next) to log the event. Note that you can’t log events in onSave(), because the identifier value of a transient entity may not be known at this point. Hibernate guarantees to set entity identifiers during flush, so postFlush() is the correct place to log this information.
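The queue-then-log pattern can be modeled without Hibernate to show why logging is deferred to postFlush(). In this sketch, `AuditQueueModel` is a hypothetical class whose methods are named after the Interceptor callbacks but take simplified parameters, `SimpleEntity` is an illustrative Auditable entity, and a list of strings replaces AuditLog.logEvent().

```java
import java.util.ArrayList;
import java.util.LinkedHashSet;
import java.util.List;
import java.util.Set;

interface Auditable {
    Long getId();
}

/** Illustrative entity whose id is assigned later, as happens during a flush. */
class SimpleEntity implements Auditable {
    Long id;

    @Override
    public Long getId() {
        return id;
    }
}

/** Hypothetical model of the audit interceptor's bookkeeping (not Hibernate's type). */
class AuditQueueModel {
    private final Set<Auditable> inserts = new LinkedHashSet<>();
    private final Set<Auditable> updates = new LinkedHashSet<>();
    final List<String> log = new ArrayList<>();   // stands in for AuditLog.logEvent()

    /** Like onSave(): only remember the entity; its id may still be null. */
    boolean onSave(Object entity) {
        if (entity instanceof Auditable) {
            inserts.add((Auditable) entity);
        }
        return false; // false signals that the entity state wasn't modified
    }

    /** Like onFlushDirty(): remember a modified entity. */
    boolean onFlushDirty(Object entity) {
        if (entity instanceof Auditable) {
            updates.add((Auditable) entity);
        }
        return false;
    }

    /** Like postFlush(): identifiers are known now, so write the trail. */
    void postFlush() {
        try {
            for (Auditable e : inserts) {
                log.add("create " + e.getClass().getSimpleName() + "#" + e.getId());
            }
            for (Auditable e : updates) {
                log.add("update " + e.getClass().getSimpleName() + "#" + e.getId());
            }
        } finally {
            inserts.clear();   // queues belong to the current flush only
            updates.clear();
        }
    }
}
```

Because inserts and updates are instance fields, a single AuditQueueModel, like a single AuditLogInterceptor, must not be shared by concurrently running units of work.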

Also note how you use the session: You pass the JDBC connection of a given Session to the static call to AuditLog.logEvent(). There is a good reason for this, as we’ll discuss in more detail.

Let’s first tie it all together and see how you enable the new interceptor.

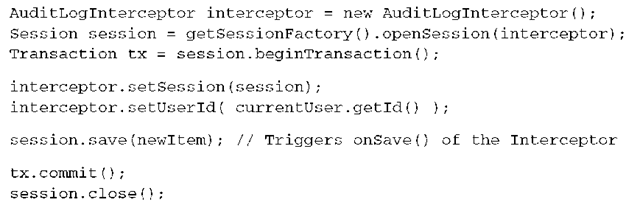

Enabling the interceptor

You need to assign the Interceptor to a Hibernate Session when you first open the session:

The interceptor is active for the Session you open it with.

If you work with sessionFactory.getCurrentSession(), you don’t control the opening of a Session; it’s handled transparently by one of Hibernate’s built-in implementations of CurrentSessionContext. You can write your own (or extend an existing) CurrentSessionContext implementation and supply your own routine for opening the current Session and assigning an interceptor to it.

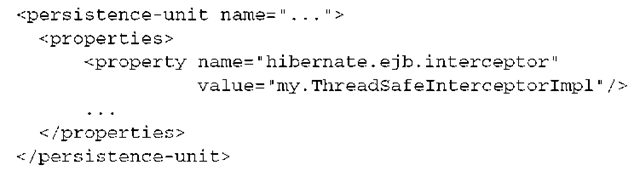

Another way to enable an interceptor is to set it globally on the Configuration with setInterceptor() before building the SessionFactory. However, any interceptor that is set on a Configuration and active for all Sessions must be thread-safe! The single Interceptor instance is shared by concurrently running Sessions. The AuditLogInterceptor implementation isn’t thread-safe: It uses member variables (the inserts and updates queues).

You can also set a shared thread-safe interceptor that has a no-argument constructor for all EntityManager instances in JPA with the following configuration option in persistence.xml:

Let’s get back to that interesting Session-handling code in the interceptor and find out why you pass the connection() of the current Session to AuditLog.logEvent().

Using a temporary Session

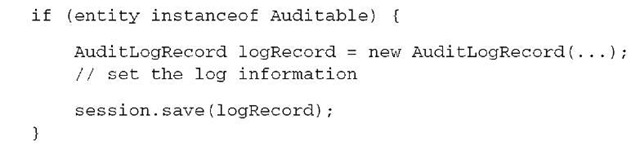

It should be clear why you require a Session inside the AuditLogInterceptor. The interceptor has to create and persist AuditLogRecord objects, so a first attempt for the onSave() method could be the following routine:

This seems straightforward: Create a new AuditLogRecord instance and save it, using the currently running Session. This doesn’t work.

It’s illegal to invoke the original Hibernate Session from an Interceptor callback. The Session is in a fragile state during interceptor calls. You can’t save() a new object during the saving of other objects! A nice trick that avoids this issue is opening a new Session only for the purpose of saving a single AuditLogRecord object. You reuse the JDBC connection from the original Session.

This temporary Session handling is encapsulated in the AuditLog class, shown in listing 12.2.

Listing 12.2 The AuditLog helper class uses a temporary Session

The logEvent() method uses a new Session on the same JDBC connection, but it never starts or commits any database transaction. All it does is execute a single SQL statement during flushing.

This trick with a temporary Session for some operations on the same JDBC connection and transaction is sometimes useful in other situations. All you have to remember is that a Session is nothing more than a cache of persistent objects (the persistence context) and a queue of SQL operations that synchronize this cache with the database.

We encourage you to experiment and try different interceptor design patterns. For example, you could redesign the auditing mechanism to log any entity, not only Auditable ones. The Hibernate website also has examples of nested interceptors and even of logging a complete history (including updated property and collection information) for an entity.

The org.hibernate.Interceptor interface also has many more methods that you can use to hook into Hibernate’s processing. Most of them let you influence the outcome of the intercepted operation; for example, you can veto the saving of an object. We think that interception is almost always sufficient to implement any orthogonal concern.

Having said that, Hibernate allows you to hook deeper into its core with the extendable event system it’s based on.

The core event system

Hibernate 3.x was a major redesign of the implementation of the core persistence engine compared to Hibernate 2.x. The new core engine is based on a model of events and listeners. For example, if Hibernate needs to save an object, an event is triggered. Whoever listens to this kind of event can catch it and handle the saving of the object. All Hibernate core functionalities are therefore implemented as a set of default listeners, which can handle all Hibernate events.

This has been designed as an open system: You can write and enable your own listeners for Hibernate events. You can either replace the existing default listeners or extend them and execute a side effect or additional procedure. Replacing the event listeners is rare; doing so implies that your own listener implementation can take care of a piece of Hibernate core functionality.

Essentially, all the methods of the Session interface correlate to an event. The load() method triggers a LoadEvent, and by default this event is processed with the DefaultLoadEventListener.

A custom listener should implement the appropriate interface for the event it wants to process and/or extend one of the convenience base classes provided by Hibernate, or any of the default event listeners. Here’s an example of a custom load event listener:

This listener calls the static method isAuthorized() with the entity name of the instance that has to be loaded and the database identifier of that instance. A custom runtime exception is thrown if access to that instance is denied. If no exception is thrown, the processing is passed on to the default implementation in the superclass.
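The structure of such a listener (check first, then delegate to the default behavior) can be sketched in plain Java. `LoadRequest`, `DefaultLoaderModel`, `SecurityLoaderModel`, and `SecurityViolation` are hypothetical stand-ins for LoadEvent, DefaultLoadEventListener, your custom listener, and the custom runtime exception; the authorization rule inside `isAuthorized()` is invented for the example.

```java
import java.util.HashMap;
import java.util.Map;

/** Hypothetical stand-in for a LoadEvent: which instance should be loaded. */
final class LoadRequest {
    final String entityName;
    final long id;

    LoadRequest(String entityName, long id) {
        this.entityName = entityName;
        this.id = id;
    }
}

/** Plays the role of the default listener: actually "loads" the instance. */
class DefaultLoaderModel {
    private final Map<String, Object> rows = new HashMap<>(); // stands in for the database

    void put(String entityName, long id, Object instance) {
        rows.put(entityName + "#" + id, instance);
    }

    Object onLoad(LoadRequest request) {
        return rows.get(request.entityName + "#" + request.id);
    }
}

/** Custom unchecked exception thrown when access is denied. */
class SecurityViolation extends RuntimeException {
    SecurityViolation(String message) {
        super(message);
    }
}

/** Extends the default behavior with an authorization check. */
class SecurityLoaderModel extends DefaultLoaderModel {
    /** Invented rule for illustration: User rows with id < 100 are off-limits. */
    static boolean isAuthorized(String entityName, long id) {
        return !(entityName.equals("User") && id < 100);
    }

    @Override
    Object onLoad(LoadRequest request) {
        if (!isAuthorized(request.entityName, request.id)) {
            throw new SecurityViolation("Unauthorized access to "
                    + request.entityName + "#" + request.id);
        }
        return super.onLoad(request);   // continue with the default loading
    }
}
```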

Listeners should be considered effectively singletons, meaning they’re shared between requests and thus shouldn’t save any transaction-related state as instance variables. For a list of all events and listener interfaces in native Hibernate, see the API Javadoc of the org.hibernate.event package. A listener implementation can also implement multiple event-listener interfaces.

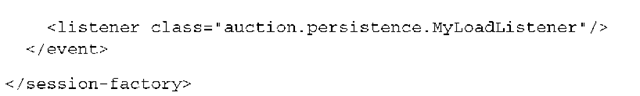

Custom listeners can either be registered programmatically through a Hibernate Configuration object or specified in the Hibernate configuration XML (declarative configuration through the properties file isn’t supported). You also need a configuration entry telling Hibernate to use the listener in addition to the default listener:

Listeners are registered in the same order they’re listed in your configuration file. You can create a stack of listeners. In this example, because you’re extending the built-in DefaultLoadEventListener, there is only one. If you didn’t extend the DefaultLoadEventListener, you’d have to name the built-in DefaultLoadEventListener as the first listener in your stack; otherwise you’d disable loading in Hibernate!
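A plain-Java model of a listener stack shows why the order and the presence of the default listener matter. `LoadEventModel`, `LoadListenerModel`, and `ListenerStack` are hypothetical; every listener in the stack sees the same event object, and only the listener playing the default role fills in the result. This illustrates the idea and is not how Hibernate implements its listener arrays.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical event object shared by all listeners in the stack. */
final class LoadEventModel {
    final String key;
    Object result;   // filled in by whichever listener performs the load

    LoadEventModel(String key) {
        this.key = key;
    }
}

interface LoadListenerModel {
    void onLoad(LoadEventModel event);
}

/** Hypothetical stack that runs listeners in registration order. */
final class ListenerStack {
    private final List<LoadListenerModel> listeners = new ArrayList<>();
    final List<String> calls = new ArrayList<>();   // records the invocation order

    ListenerStack register(String name, LoadListenerModel listener) {
        listeners.add(event -> {
            calls.add(name);
            listener.onLoad(event);
        });
        return this;
    }

    Object load(String key) {
        LoadEventModel event = new LoadEventModel(key);
        for (LoadListenerModel l : listeners) {
            l.onLoad(event);
        }
        return event.result;
    }
}
```

Registering a stack without the default-style listener leaves every load returning null, the model's equivalent of disabling loading.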

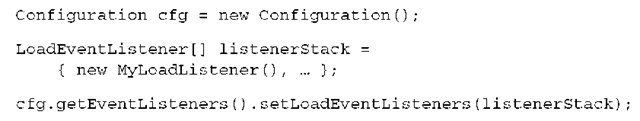

Alternatively you may register your listener stack programmatically:

Listeners registered declaratively can’t share instances. If the same class name is used in multiple <listener/> elements, each reference results in a separate instance of that class. If you need the capability to share listener instances between listener types, you must use the programmatic registration approach.

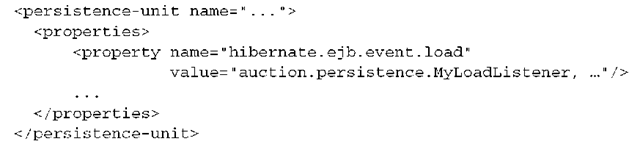

Hibernate EntityManager also supports customization of listeners. You can configure shared event listeners in your persistence.xml configuration as follows:

The property name of the configuration option changes for each event type you want to listen to (load in the previous example).

If you replace the built-in listeners, as MyLoadListener does, you need to extend the correct default listeners. At the time of writing, Hibernate EntityManager doesn’t bundle its own LoadEventListener, so the listener that extends org.hibernate.event.DefaultLoadEventListener still works fine. You can find a complete and up-to-date list of Hibernate EntityManager default listeners in the reference documentation and the Javadoc of the org.hibernate.ejb.event package. Extend any of these listeners if you want to keep the basic behavior of the Hibernate EntityManager engine.

You rarely have to extend the Hibernate core event system with your own functionality. Most of the time, an org.hibernate.Interceptor is flexible enough. Still, it helps to have more options and to be able to replace any piece of the Hibernate core engine in a modular fashion.

The EJB 3.0 standard includes several interception options, for session beans and entities. You can wrap any custom interceptor around a session bean method call, intercept any modification to an entity instance, or let the Java Persistence service call methods on your bean on particular lifecycle events.

Entity listeners and callbacks

EJB 3.0 entity listeners are classes that intercept entity callback events, such as the loading and storing of an entity instance. This is similar to native Hibernate interceptors. You can write custom listeners, and attach them to entities through annotations or a binding in your XML deployment descriptor.

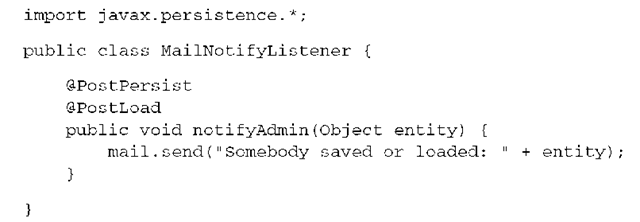

Look at the following trivial entity listener:

An entity listener doesn’t implement any particular interface; it needs a no-argument constructor (in the previous example, this is the default constructor). You apply callback annotations to any methods that need to be notified of a particular event; you can combine several callbacks on a single method. You aren’t allowed to duplicate the same callback on several methods.

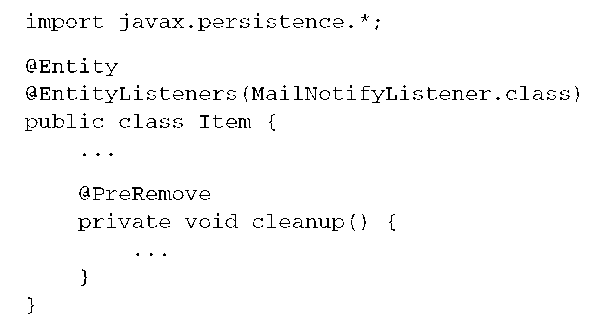

The listener class is bound to a particular entity class through an annotation:

The @EntityListeners annotation takes an array of classes, if you need to bind several listeners. You can also place callback annotations on the entity class itself, but again, you can’t duplicate callbacks on methods in a single class. However, you can implement the same callback in several listener classes or in the listener and entity class.

You can also apply listeners to superclasses for the whole hierarchy and define default listeners in your persistence.xml configuration file. Finally, you can exclude superclass listeners or default listeners for a particular entity with the @ExcludeSuperclassListeners and @ExcludeDefaultListeners annotations.

All callback methods can have any visibility, must return void, and aren’t allowed to throw any checked exceptions. If an unchecked exception is thrown, and a JTA transaction is in progress, this transaction is rolled back.
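To see what a provider does with these annotations, here is a plain-Java model of callback dispatch through reflection. `@PrePersistModel` and `@PostLoadModel` are hypothetical stand-ins for `@PrePersist` and `@PostLoad` from the javax.persistence package (which isn't part of the JDK), and `CallbackDispatcher` is a simplified illustration, not a real provider's mechanism. The listener methods return void, take the entity as their only argument, and are package-private.

```java
import java.lang.annotation.Annotation;
import java.lang.annotation.ElementType;
import java.lang.annotation.Retention;
import java.lang.annotation.RetentionPolicy;
import java.lang.annotation.Target;
import java.lang.reflect.InvocationTargetException;
import java.lang.reflect.Method;

/** Hypothetical stand-in for @PrePersist. */
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface PrePersistModel {}

/** Hypothetical stand-in for @PostLoad. */
@Retention(RetentionPolicy.RUNTIME)
@Target(ElementType.METHOD)
@interface PostLoadModel {}

/** Illustrative entity with properties the listener maintains. */
class Note {
    String text;
    boolean stamped;
    int loads;
}

/** Stateless listener: no-argument constructor, one method per callback. */
class NoteListener {
    @PrePersistModel
    void stamp(Object entity) {
        ((Note) entity).stamped = true;
    }

    @PostLoadModel
    void countLoad(Object entity) {
        ((Note) entity).loads++;
    }
}

/** Hypothetical dispatcher: invokes the listener method carrying the annotation. */
final class CallbackDispatcher {
    static void fire(Class<? extends Annotation> callback,
                     Class<?> listenerClass, Object entity) {
        try {
            Object listener = listenerClass.getDeclaredConstructor().newInstance();
            for (Method m : listenerClass.getDeclaredMethods()) {
                if (m.isAnnotationPresent(callback)) {
                    m.setAccessible(true);      // callbacks may have any visibility
                    m.invoke(listener, entity);
                }
            }
        } catch (InvocationTargetException e) {
            // unchecked exceptions thrown by a callback reach the caller unchanged
            Throwable cause = e.getCause();
            if (cause instanceof RuntimeException) throw (RuntimeException) cause;
            if (cause instanceof Error) throw (Error) cause;
            throw new IllegalStateException(cause);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException(e);
        }
    }
}
```

Creating a fresh listener instance for each callback is harmless here because entity listeners hold no state.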

A list of available JPA callbacks is shown in Table 12.2.

Table 12.2 JPA event callbacks and annotations

| Callback annotation | Description |
|---|---|
| @PostLoad | Triggered after an entity instance has been loaded with find() or getReference(), or by executing a Java Persistence query. Also called after the refresh() method is invoked. |
| @PrePersist, @PostPersist | @PrePersist occurs immediately when persist() is called on an entity; @PostPersist occurs after the database insert. |
| @PreUpdate, @PostUpdate | Executed before and after the persistence context is synchronized with the database (that is, before and after flushing). Triggered only when the state of the entity requires synchronization (for example, because it’s considered dirty). |
| @PreRemove, @PostRemove | @PreRemove is triggered when remove() is called or the entity instance is removed by cascading; @PostRemove occurs after the database delete. |
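To see which of these callbacks fire, and when, in your provider, one option is a tracing listener that implements all seven. A sketch, assuming JPA 1.0 annotations; the class name and log format are hypothetical.

```java
import java.util.logging.Logger;

import javax.persistence.PostLoad;
import javax.persistence.PostPersist;
import javax.persistence.PostRemove;
import javax.persistence.PostUpdate;
import javax.persistence.PrePersist;
import javax.persistence.PreRemove;
import javax.persistence.PreUpdate;

// Hypothetical debugging listener: one method per callback in the table.
// Package-private so the sketch compiles as one file; normally public, in its own file.
class LifecycleTraceListener {

    private static final Logger LOG = Logger.getLogger(LifecycleTraceListener.class.getName());

    public LifecycleTraceListener() {
    }

    @PostLoad
    public void postLoad(Object entity) { trace("PostLoad", entity); }

    @PrePersist
    public void prePersist(Object entity) { trace("PrePersist", entity); }

    @PostPersist
    public void postPersist(Object entity) { trace("PostPersist", entity); }

    @PreUpdate
    public void preUpdate(Object entity) { trace("PreUpdate", entity); }

    @PostUpdate
    public void postUpdate(Object entity) { trace("PostUpdate", entity); }

    @PreRemove
    public void preRemove(Object entity) { trace("PreRemove", entity); }

    @PostRemove
    public void postRemove(Object entity) { trace("PostRemove", entity); }

    // Not annotated, so the provider never calls it as a callback.
    private void trace(String event, Object entity) {
        LOG.info(event + ": " + entity);
    }
}
```

Attach it with @EntityListeners(LifecycleTraceListener.class) while debugging, then persist, load, modify, and remove an instance and read the log.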

Unlike Hibernate interceptors, entity listeners are stateless classes. You therefore can’t rewrite the previous Hibernate audit-logging example with entity listeners, because you’d need to hold the state of modified objects in local queues. Another problem is that a portable entity listener shouldn’t invoke EntityManager or query operations. Some JPA implementations, including Hibernate, again let you apply the trick of a temporary second persistence context, but the better option is usually to look at EJB 3.0 interceptors for session beans and implement audit logging at a higher layer of your application stack.
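For orientation, a sketch of what such a session bean interceptor might look like, assuming the EJB 3.0 `javax.interceptor` API. The class name `AuditInterceptor` and the log message are hypothetical; a real audit trail would record the modified data, not just the method name.

```java
import java.util.logging.Logger;

import javax.interceptor.AroundInvoke;
import javax.interceptor.InvocationContext;

// Hypothetical interceptor; a session bean opts in with @Interceptors(AuditInterceptor.class)
// on its class or on individual business methods.
// Package-private so the sketch compiles as one file; normally public, in its own file.
class AuditInterceptor {

    private static final Logger LOG = Logger.getLogger(AuditInterceptor.class.getName());

    // Interceptor classes need a public no-arg constructor.
    public AuditInterceptor() {
    }

    @AroundInvoke
    public Object audit(InvocationContext ctx) throws Exception {
        String call = ctx.getTarget().getClass().getSimpleName() + "." + ctx.getMethod().getName();
        Object result = ctx.proceed(); // runs the intercepted business method
        // Only reached if the business method returned normally.
        LOG.info("Audit: " + call + " completed");
        return result;
    }
}
```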

Summary

In this topic, you learned how to work with large and complex datasets efficiently. We first looked at Hibernate’s cascading options and how transitive persistence can be enabled with Java Persistence and annotations. Then we covered the bulk operations in HQL and JPA QL and how to write batch procedures that work on a subset of data to avoid memory exhaustion.

In the last section, you learned how to enable Hibernate data filtering and how you can create dynamic data views at the application level. Finally, we introduced the Hibernate Interceptor extension point, the Hibernate core event system, and the standard Java Persistence entity callback mechanism.

Table 12.3 shows a summary you can use to compare native Hibernate features and Java Persistence.

In the next topic, we switch perspective and discuss how you retrieve objects from the database with the best-performing fetching and caching strategy.

Table 12.3 Hibernate and JPA comparison chart

| Hibernate Core | Java Persistence and EJB 3.0 |
|---|---|
| Hibernate supports transitive persistence with cascading options for all operations. | Transitive persistence model with cascading options equivalent to Hibernate. Use Hibernate annotations for special cases. |
| Hibernate supports bulk UPDATE, DELETE, and INSERT … SELECT operations in polymorphic HQL, which are executed directly in the database. | JPA QL supports direct bulk UPDATE and DELETE. |
| Hibernate supports query result cursors for batch updates. | Java Persistence doesn’t standardize querying with cursors; fall back to the Hibernate API. |
| Powerful data filtering is available for the creation of dynamic data views. | Use Hibernate extension annotations for the mapping of data filters. |
| Extension points are available for interception and event listeners. | Provides standardized entity lifecycle callback handlers. |