Regularized Morphable Model

The correspondence estimation, detailed in Sect. 6.2.2, may, for some scans, be wrong in some regions. In this section, we present a scheme aiming to improve the correspondence by regularizing it using statistics derived from scans that do not present correspondence errors. This is achieved by modifying the model construction: probabilistic PCA [42] is used instead of PCA, which regularizes the model by allowing the exemplars to be noisy.

Probabilistic PCA

Instead of assuming a linear model for the shape, as in the previous section, we assume a linear Gaussian model in which a shape is generated by the linear term Cs α plus an additive noise term ε. Here Cs, whose columns are the regularized shape principal components, has dimensions 3Nv × Ns, and the shape coefficients α and the noise ε have a Gaussian distribution with zero mean and covariance I and σ²I, respectively.

Tipping and Bishop [42] use the EM algorithm [18] to iteratively estimate Cs and the projection of the example vectors to the model, K. The algorithm starts with Cs = A, and then at each iteration it computes a new estimate of the shape coefficients K (expectation step, or e-step) and of the regularized principal components Cs (maximization step, or m-step). The coefficients of the example shapes, the unobserved variables, are estimated at the e-step.

This is the maximum a posteriori estimator of K; that is, the expected value of K given the posterior distribution p(α | Cs). At the m-step, the model is estimated by computing the Cs that maximizes the likelihood of the data, given the current estimate of K and B.

These two steps are iterated in sequence until the algorithm is judged to have converged. In the original algorithm, the value of σ² is also estimated at the m-step (6.9), but in our case this would yield an estimated value of zero. Therefore, we prefer to estimate σ² by replacing A and K in (6.9) with a data matrix of test vectors (vectors not used in estimating Cs) and its corresponding coefficients matrix obtained via (6.7). If a test set is not available, we can still get an estimate of σ² by cross validation.
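The alternation of e-step and m-step can be illustrated with a minimal sketch. It assumes a single principal component, so that the matrix inverted in each step reduces to a scalar, and it keeps σ² fixed as discussed above. The update rules are the standard probabilistic PCA updates as commonly stated for [42], written for this special case; the function name, the initialization from the first example, and the list-based data layout are hypothetical choices for illustration only.

```python
def ppca_em_one_component(A, sigma2, n_iter=50):
    """EM for a probabilistic PCA model with one component (illustrative).

    A is a list of M mean-free example vectors, each of length d.
    sigma2 is the fixed noise variance. Returns the component c (length d)
    and the coefficients k (one per example).
    """
    M = len(A)
    d = len(A[0])
    c = list(A[0])  # assumption: start from one of the examples
    k = [0.0] * M
    for _ in range(n_iter):
        # e-step: with one component, B = c.c + sigma2 is a scalar and the
        # posterior mean coefficient of example a is (c.a) / B.
        B = sum(ci * ci for ci in c) + sigma2
        k = [sum(ci * ai for ci, ai in zip(c, a)) / B for a in A]
        # m-step: c = (sum_j a_j k_j) / (M * sigma2 / B + sum_j k_j^2)
        denom = M * sigma2 / B + sum(kj * kj for kj in k)
        c = [sum(A[j][i] * k[j] for j in range(M)) / denom for i in range(d)]
    return c, k
```

For examples that all lie on one line through the origin, the estimated component stays parallel to that line, while a larger `sigma2` shrinks its length.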

Segmented Morphable Model

Our Morphable Model is derived from statistics computed on 200 example faces. As a result, the dimensions of the shape and texture spaces, Ns and Nt, are limited to 199. This might not be enough to account for the rich variations of individualities present in humankind. Naturally, one way to augment the dimension of the face subspace would be to use 3D scans of more persons, but they are not available. Hence we resort to another scheme: we segment the face into four regions (nose, eyes, mouth, and the rest) and use a separate set of shape and texture coefficients to code them [6]. This method multiplies by four the dimensionality of the Morphable Model and results in an increased flexibility. However, this process must be applied with care, since a segmented model loses the correlation between the segments. More formally, segmenting is the same as assuming zero covariance between the segments. The fitting results in Sect. 6.4 and the identification results in Sect. 6.5 are based on a segmented Morphable Model with Ns = Nt = 100 for all segments. In the rest of the chapter, we denote the shape and texture parameters by α and β when they can be used interchangeably for the global and the segmented parts of the model. When we want to distinguish them, we use separate symbols for the shape parameters of the global model (full face) and of the segmented parts (the same notation is used for the texture parameters).

Identity/Expression Separated 3D Morphable Model

The 3D Morphable Model separates shape and albedo parameters from pose and lighting, which makes pose and lighting-invariant recognition possible. The same idea can be used for expression-invariant face recognition from 3D shape [2] (see Sect. 6.5.5).

For these experiments, an identity/expression separated 3D Morphable Model [10] built from 270 subjects was used. It was built from one neutral expression face scan per identity and 135 expression scans of a subset of the subjects. The identity model was built from the 270 neutral expression scans as in Sect. 6.2.3.

Additionally, for each of the 135 expression scans, we calculated an expression vector as the difference between the expression scan and the corresponding neutral scan of that subject. This data is already mode-centered, if we regard the neutral expression as the natural mode of expression data. PCA was again applied to these offset vectors to get Ne expression components SE and the corresponding expression coefficients, such that the complete model (6.10) adds a linear combination of the expression components to the identity model.

This model assumes that it is possible to transfer the expression deformation from one face to another. Even if this is not strictly true, which is what authors using, for example, tensor-based models [46] assume, it is a good enough assumption to make the method invariant to expressions. A big advantage of this independence assumption is that we can train on far less data than in a tensor framework, because we do not need the full Cartesian product of expressions and identities. In fact, we did not have any expression scan available for most of the training subjects, but were still able to learn useful statistics from the available scans.
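The offset construction and the transfer assumption can be sketched as follows. This is a minimal illustration, assuming shapes are stored as flat coordinate lists in dense correspondence; the function names and the `strength` parameter are hypothetical, and the PCA step on the offsets is omitted.

```python
def expression_offsets(expression_scans, neutral_scans):
    """Offset vector = expression scan minus the neutral scan of that subject.

    expression_scans: list of (subject_id, shape) pairs.
    neutral_scans: dict mapping subject_id to the neutral shape.
    """
    return [
        [e - n for e, n in zip(shape, neutral_scans[subject])]
        for subject, shape in expression_scans
    ]


def add_expression(neutral_shape, offset, strength=1.0):
    """Transfer an expression offset onto another (neutral) face."""
    return [n + strength * o for n, o in zip(neutral_shape, offset)]
```

An offset learned from one subject can then be added to the neutral shape of a subject for whom no expression scan exists, which is exactly what the independence assumption permits.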

We perform identification by fitting the model from (6.10) to new scans, taking the Gaussian prior over the identity and expression coefficients into account. The maximum likelihood coefficients given a new observation are unique, even if the shape and expression bases are not linearly independent.

We use the registered scans and a mirrored version of each registered scan to increase the variability of the model. This allows us to calculate a model with more than 175 neutral coefficients.

Morphable Model to Synthesize Images

One part of the analysis by synthesis loop is the synthesis (i.e., the generation of accurate face images viewed from any pose and illuminated under any condition). This process is explained in this section.

Shape Projection

To render the image of a face, the 3D shape must be projected to the 2D image frame. This is performed in two steps. First, a 3D rotation and translation (i.e., a rigid transformation) maps the object-centered coordinates, S, to a position relative to the camera.

The angles φ and θ control in-depth rotations around the vertical and horizontal axes, and γ defines a rotation around the camera axis; tw is a 3D translation. A projection then maps a vertex j to the image plane in (xj, yj). We typically use one of two projections, either the perspective or the weak perspective projection.

In both projections, f is the focal length of the camera, which is located at the origin, and (tx, ty) defines the image-plane position of the optical axis.
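The two steps can be sketched for a single vertex as follows. The axis conventions (vertical axis y, horizontal axis x, camera axis z), the order in which the three rotations are composed, and the form of the weak perspective projection (a pure scaling by f) are assumptions of this sketch; all function names are hypothetical.

```python
import math


def mat_vec(m, v):
    """Multiply a 3x3 matrix (nested lists) with a 3-vector."""
    return [sum(m[r][c] * v[c] for c in range(3)) for r in range(3)]


def rigid_transform(vertex, phi, theta, gamma, t_w):
    """Rotate by phi (vertical axis), theta (horizontal axis), gamma
    (camera axis), then translate by t_w."""
    c, s = math.cos(phi), math.sin(phi)
    r_phi = [[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]]
    c, s = math.cos(theta), math.sin(theta)
    r_theta = [[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]]
    c, s = math.cos(gamma), math.sin(gamma)
    r_gamma = [[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]]
    w = mat_vec(r_gamma, mat_vec(r_theta, mat_vec(r_phi, vertex)))
    return [wi + ti for wi, ti in zip(w, t_w)]


def perspective(w, f, t_x, t_y):
    """Perspective projection: divide by the depth of the vertex."""
    return (t_x + f * w[0] / w[2], t_y + f * w[1] / w[2])


def weak_perspective(w, f, t_x, t_y):
    """Weak perspective projection: depth is ignored, f acts as a scale."""
    return (t_x + f * w[0], t_y + f * w[1])
```

With zero rotation, a vertex at (1, 2, 0) translated by (0, 0, 10) and projected with f = 5 lands at (0.5, 1.0) under perspective and at (5, 10) under weak perspective.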

Illumination and Color Transformation

Ambient and Directed Light

We simulate the illumination of a face using an ambient light and a directed light. The effects of the illumination are obtained using the standard Phong model, which approximately describes the diffuse and specular reflection on a surface [21]; see [7] for further details. The parameters of this model are the intensity of the ambient light, the intensity of the directed light, its direction, the specular reflectance of human skin (ks), and the angular distribution of the specular reflections of human skin (ν).
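As an illustration, the Phong model can be evaluated for one vertex as in the sketch below. It follows the textbook form of the model (ambient term, diffuse term proportional to the cosine between normal and light direction, specular term raised to the power ν); the exact parameterization used in [7] may differ, and the function and argument names are hypothetical.

```python
def dot(a, b):
    return sum(x * y for x, y in zip(a, b))


def phong_vertex_color(albedo, normal, light_dir, view_dir,
                       amb, direct, k_s, nu):
    """Phong shading of one vertex; all direction vectors have unit length.

    albedo, amb and direct are (r, g, b) triples. light_dir points from the
    vertex towards the light, view_dir from the vertex towards the camera.
    """
    n_dot_l = dot(normal, light_dir)
    diffuse = max(0.0, n_dot_l)
    specular = 0.0
    if n_dot_l > 0.0:
        # Mirror reflection of the light direction about the normal.
        reflected = [2.0 * n_dot_l * n - l for n, l in zip(normal, light_dir)]
        specular = max(0.0, dot(reflected, view_dir)) ** nu
    return tuple(
        a * la + a * ld * diffuse + k_s * ld * specular
        for a, la, ld in zip(albedo, amb, direct)
    )
```

A vertex facing away from the directed light receives only the ambient contribution, which is why the separation of albedo and light strength relies on the prior and on additional constraints.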

Color Transformation

Input images may vary a lot with respect to the overall tone of color. To be able to handle a variety of color images as well as gray level images and even paintings, we

For brevity, the illumination and color transformation parameters are regrouped in the vector ι. Hence, the illuminated texture depends on the coefficients of the linear combination regrouped in β, on the light parameters ι, and on a and ρ used to compute the normals and the viewing direction of the vertices required for the Phong illumination model. Similarly to the shape, we denote the color of a vertex i by the vector ti (θ), where θ denotes the ensemble of model parameters.

Using the Morphable Model framework, the image of the face of any individual seen from any angle and illuminated from any direction can be obtained from the shape parameters ai, the texture parameters βi, the shape projection parameters, and the illumination parameters by

where Xi and yi are computed by (6.12).

In a nutshell, the prior models accounting for the variations of the face image are devised as follows: Gaussian probability models for the registered 3D shape and albedo, a Phong reflectance model, a single directed light source for the illumination model, and rigid pose variations.

Image Analysis with a 3D Morphable Model

In the analysis by synthesis framework, an algorithm seeks the parameters of the model that render a face as close to the input image as possible. These parameters explain the image and can be used for high-level tasks such as identification. This algorithm is called a fitting algorithm. It is characterized by the following four features.

• Efficient: The computational load allowed for the fitting algorithm is clearly dependent on the applications. Security applications, for instance, require fast algorithms (i.e., near real time).

• Robust (against non-Gaussian noise): The assumption of normality of the difference between the image synthesized by the model and the input image is generally violated owing to the presence of accessories or artifacts (glasses, hair, specular highlight).

• Accurate: The accuracy of the reconstruction must be sufficient to allow the subsequent use of the reconstructed parameters.

Apply gains gr, gg, gb, offsets or, og, ob, and a color contrast c to each channel [6]. ![]() This is a linear transformation that multiplies the RGB color of a vertex (after it has been illuminated) by the matrix M and adds the vector o =[or, og, ob]T, where

This is a linear transformation that multiplies the RGB color of a vertex (after it has been illuminated) by the matrix M and adds the vector o =[or, og, ob]T, where ![tmpdece502_thumb[2] tmpdece502_thumb[2]](http://what-when-how.com/wp-content/uploads/2012/06/tmpdece502_thumb2_thumb.png)

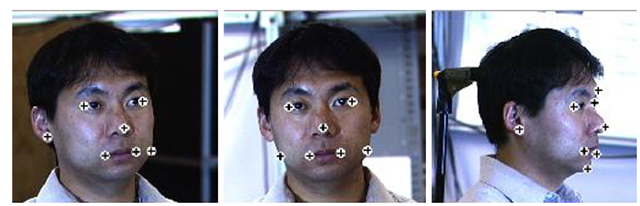

Fig. 6.5 Initialization: Seven landmarks for front and side views and eight for the profile view are manually labeled for each input image

• Automatic: The fitting should require as little human intervention as possible, optimally with no initialization. For frontal images, the process has been completely automated in [13].

An algorithm capable of any of the four aforementioned features is difficult to set up. An algorithm capable of all four features is the holy grail of model-based computer vision. In this chapter, we present two fitting algorithms. The first one, called Stochastic Newton Optimization (SNO), is accurate but computationally expensive: a fitting takes 4.5 minutes on a 2 GHz Pentium IV. SNO is detailed elsewhere [7].

Romdhani and Vetter [39] improved the fitting by using a cost function that, as well as the pixel intensity, uses various image features such as the edges or the location of the specular highlights. The overall cost function obtained is smoother and, hence, a stochastic optimization algorithm is not needed to avoid the local minima problem. This leads to the Multi-Features Fitting (MFF) algorithm that has a wider radius of convergence and a higher level of precision.

In Sect. 6.5, we compare identification results for both algorithms. Both the SNO and the MFF fitters use the MPI Morphable Model detailed in [6]. Additionally, we show results obtained with the MFF fitter and the BFM (see Sect. 6.2).

As initialization, the algorithms require the correspondences between some of the model vertices (typically eight) and the input image. In the experiments shown here, these correspondences are set manually, although we expect that these points could also be found automatically using methods such as [22, 24]. The landmark points are required to obtain a good initial condition for the iterative algorithm. The 2D positions in the image of these Nl points are set in the matrix L of size 2 × Nl. They are in correspondence with the vertex indices set in the vector v of size Nl × 1. The positions of these landmarks for three views are shown in Fig. 6.5.

Maximum a Posteriori Estimation of the Parameters

Both algorithms presented aim to find the model parameters α, ρ, β, ι that explain an input image. To increase the robustness of the algorithms, these parameters are estimated by a maximum a posteriori (MAP) estimator, which maximizes the posterior probability of the parameters given the input image and the landmarks. Applying the Bayes rule and neglecting the dependence between parameters yields a product of the likelihood terms and of a separate prior for each group of parameters.

The expression of the priors p(α) and p(β) is given by (6.5). For each shape projection and illumination parameter, we assume a Gaussian probability distribution with its own mean and variance. These values are set manually.

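Assuming that the x and y coordinates of the landmark points are independent and that they have the same Gaussian distribution with a common variance, we arrive at a landmark energy that is the sum of the squared distances between the labeled landmark positions and the projections of the corresponding model vertices, divided by that variance.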
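Up to additive constants, taking minus twice the logarithm of such Gaussian terms gives sums of squared, variance-scaled deviations. The following minimal sketch shows the landmark term and a per-parameter Gaussian prior term under these assumptions; the function names and the data layout (lists of 2D points, flat parameter lists) are hypothetical.

```python
def landmark_energy(projected, landmarks, variance):
    """Sum of squared 2D distances between projected model vertices and
    the labeled landmarks, divided by the common variance."""
    total = 0.0
    for (px, py), (lx, ly) in zip(projected, landmarks):
        total += (px - lx) ** 2 + (py - ly) ** 2
    return total / variance


def gaussian_prior_energy(params, means, variances):
    """Energy of independent Gaussian priors, one per parameter."""
    return sum((p - m) ** 2 / v for p, m, v in zip(params, means, variances))
```

Minimizing the sum of such energies is equivalent to maximizing the product of the corresponding Gaussian probabilities.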

Stochastic Newton Optimization

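The likelihood of the input image given the model parameters is expressed in the image frame. Assuming that all the pixels are independent and that they have the same Gaussian distribution with variance σI², the image energy EI is the sum of the squared differences between the input image and the image synthesized by the model, divided by σI².

The sum is carried out over the pixels that are projected from the vertices in Ψ(α, ρ).

For each pixel location, the norm is computed over the three color channels. The overall energy to be minimized then combines this image term with the landmark energy and the prior terms of the MAP estimator.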

This log-likelihood is iteratively minimized by performing a Taylor expansion up to the second order (i.e., approximating the log-likelihood by a quadratic function) and computing the update that minimizes the quadratic approximation. The update is added to the current parameter to obtain the new parameters.

We use a stochastic minimization to decrease the odds of getting trapped in a local minimum and to decrease the computational time: instead of computing EI and its derivatives on all pixels of Ψ(α, ρ), they are computed only on a subset of 40 pixels thereof. These pixels are randomly chosen at each iteration. The first derivatives are computed analytically using the chain rule. The Hessian is approximated by a diagonal matrix computed by numeric differentiation every 1000 iterations. This algorithm was further detailed by Blanz and Vetter [7]. The SNO algorithm is highly accurate (see the experiments in Sect. 6.5).
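The idea of a Newton step with a diagonal Hessian and a gradient evaluated on a random pixel subset can be illustrated on a toy problem. The sketch below is not the SNO implementation of [7]: it assumes a linear least-squares model in place of the rendering function, computes the diagonal Hessian analytically and only once, and uses hypothetical names throughout.

```python
import math
import random


def stochastic_newton(xs, ys, theta, n_iter=200, subset_size=40, seed=0):
    """Minimize the mean squared residual of a linear model x.theta - y.

    Each iteration evaluates the gradient on a random subset of samples
    ("pixels") and divides it by a diagonal Hessian approximation.
    """
    rng = random.Random(seed)
    n = len(xs)
    dim = len(theta)
    # Diagonal of the Hessian of the mean squared residual (all samples).
    hess = [2.0 * sum(x[k] * x[k] for x in xs) / n for k in range(dim)]
    theta = list(theta)
    for _ in range(n_iter):
        subset = rng.sample(range(n), subset_size)
        grad = [0.0] * dim
        for i in subset:
            r = sum(xk * tk for xk, tk in zip(xs[i], theta)) - ys[i]
            for k in range(dim):
                grad[k] += 2.0 * r * xs[i][k] / subset_size
        # Newton update with the diagonal Hessian.
        theta = [t - g / h for t, g, h in zip(theta, grad, hess)]
    return theta


# Toy data: 200 noise-free samples generated from known parameters.
xs = [(math.cos(2 * math.pi * i / 200), math.sin(2 * math.pi * i / 200))
      for i in range(200)]
true_theta = (1.5, -0.5)
ys = [x[0] * true_theta[0] + x[1] * true_theta[1] for x in xs]
estimate = stochastic_newton(xs, ys, [0.0, 0.0])
```

Each iteration touches only 40 of the 200 samples, yet `estimate` approaches `true_theta`, because every random subset pulls the parameters towards the same minimum.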

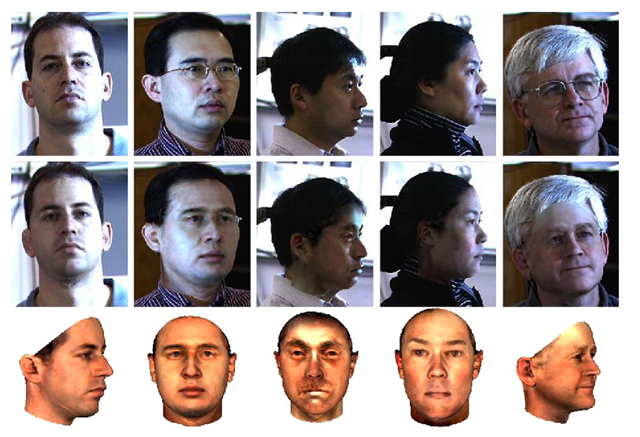

Fig. 6.6 Stochastic Newton optimization fitting results: Three-dimensional reconstruction from CMU-PIE images using the SNO fitting algorithm using the MPI model. Top : originals. Middle: reconstructions rendered into original. Bottom: novel views. The pictures shown here are difficult to fit due to harsh illumination, profile views, or eyeglasses. Illumination in the third image is not fully recovered, so part of the reflections are attributed to texture

Fitting Results

Several fitting results and reconstructions are shown in Fig. 6.6. They were obtained with the SNO algorithm and the MPI model on some of the PIE images (see Sect. 13.5.4 of Chap. 13). These images are illuminated with ambient light and one directed light source. The algorithm was initialized with seven or eight landmark points, depending on the pose of the input image (Fig. 6.5). In the third column, the separation between the albedo of the face and the illumination is not optimal: part of the specular reflections were attributed to the texture by the algorithm. This may be due to shortcomings of the Phong illumination model for reflections at grazing angles or to a prior probability inappropriate for this illumination condition. (The prior probabilities of the illumination and rigid parameters are kept constant for fitting the 4488 PIE images.)

Multiple Feature Fitting

In [39], an improvement over the SNO algorithm is proposed. It is based on the assumption that the priors provided by the texture and shape PCA models are not strong enough to obtain an accurate estimate of the 3D shape when only a few manually set anchor points are used as input. This is because the cost function to be minimized is highly nonconvex and exhibits many local minima. In fact, the shape model requires the correspondence between the input image and the reference frame to be found for every visible vertex. Using only facial color information to recover the correspondence is not optimal, and the estimate may be trapped in regions that present similar intensity variations (eyes/eyebrows, for instance).

This is why we use not only the pixel intensities but also other features of the input image to obtain a more accurate estimate of the correspondence and, as a result, of the 3-D shape. One example of such a feature is the edges. Other features that improve the shape and texture estimate are the specular highlights and the texture constraints. The specular highlight feature uses the specular highlight location, detected on the input image, to refine the normals and, thereby, the 3-D shape of the vertices affected. The texture constraint enforces that the estimated texture lies within a specific range (typically [0, 255]), which improves the illumination estimate. The overall resulting cost function is smoother and easier to minimize, making the system more robust and reliable. A question raised by this problem is how to fuse the different image cues to form the optimal parameter estimate. We chose a Bayesian framework and maximize the posterior probability of the parameters given the image and its features.

This analysis algorithm, called the Multiple Feature Fitting algorithm, is briefly outlined here; a more detailed explanation is provided in [38, 39]. It is demonstrated that, if the features (pixel intensities, edges, and specular highlights) are independent and extracted from the input image by a deterministic algorithm, then the overall cost function is a linear combination of the cost function of each feature taken separately.

In this combination, EI denotes the pixel intensity feature (6.17), a further term accounts for the prior, and Ee, Es, and Et denote, respectively, the edge, specular highlight, and texture constraint cost functions. The τ's are weighting parameters. A detailed explanation of these cost functions is provided in [39]. The overall cost function is minimized using a Levenberg-Marquardt optimization algorithm.

The image edges provide information about the 2-D shape independent of the texture and of the illumination. Hence, the cost function used to fit the edge features provides a more direct constraint on the correspondences and on the shape and pose parameters. The edge feature is useful to recover the correspondences of specific facial characteristics (eyes, eyebrows, mouth, nose). On the other hand, it does not carry much depth information. So it is beneficial to use the edge and intensity features in combination. The specular highlights are easy to detect: the pixels with a specular highlight saturate. Additionally, they give a direct relationship between the 3-D geometry of the surface at these points, the camera direction, and the light direction: a point on a specular highlight has a normal that has the direction of the bisector of the angle formed by the light source direction and the camera direction.

Hence, the specular highlight cost function is used to refine the shape estimate for the vertices that are projected onto specular highlights of the input image.
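The geometric relation used by the specular highlight feature can be written in a few lines. This sketch only computes the normal implied by a highlight (the normalized bisector of the light and camera directions); how that normal enters the cost function is described in [39] and is not reproduced here. The function names are hypothetical.

```python
import math


def normalize(v):
    length = math.sqrt(sum(x * x for x in v))
    return [x / length for x in v]


def highlight_normal(light_dir, camera_dir):
    """Normal at a specular highlight: bisector of the direction towards
    the light and the direction towards the camera."""
    l = normalize(light_dir)
    c = normalize(camera_dir)
    return normalize([a + b for a, b in zip(l, c)])
```

For a light along the x axis and a camera along the y axis, the implied normal lies halfway between them, in the x-y plane at 45 degrees to both.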

In order to accurately estimate the 3-D shape, it is necessary to recover the texture, the light direction, and its intensity. To separate the contribution of the texture from light in a pixel intensity value, a Gaussian texture prior model is used (see (6.5)). However, it appears that this prior model is not restrictive enough and is able to instantiate invalid textures (negative and overflowing color values). To constrain the texture model and to improve the separation of light source strength from albedo, we introduce a feature that constrains the range of valid albedo values.
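One simple way to express such a range constraint is a penalty that is zero inside the valid interval and grows outside it. The quadratic form below is an assumption chosen for illustration; the actual texture constraint cost function is defined in [39]. The function name and defaults are hypothetical.

```python
def range_penalty(values, low=0.0, high=255.0):
    """Quadratic penalty on color values outside the interval [low, high]."""
    penalty = 0.0
    for v in values:
        if v < low:
            penalty += (low - v) ** 2
        elif v > high:
            penalty += (v - high) ** 2
    return penalty
```

Valid textures incur no cost, so the term only influences the fit when the Gaussian prior alone would allow negative or overflowing color values.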

Experimental Evaluation

We evaluated the 3D Morphable Model and the fitting algorithms on two applications: identification and verification. In the identification task, an image of an unknown person is provided to our system. The unknown face image is then compared to a database of known people, called the gallery set. The ensemble of unknown images is called the probe set. In the identification task, it is assumed that the individual in the unknown image is in the gallery. In a verification task, the individual in the unknown image claims an identity. The system must then accept or reject the claimed identity. Verification performance is characterized by two statistics: The verification rate is the rate at which legitimate users are granted access. The false alarm rate is the rate at which impostors are granted access. See Sect. 14.1 of Chap. 14 for more detailed explanations of these two tasks.
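The two verification statistics can be computed from comparison scores as in the following sketch. It assumes similarity scores (higher means more similar) and a fixed acceptance threshold; the function name and the example scores are hypothetical.

```python
def verification_rates(genuine_scores, impostor_scores, threshold):
    """Return (verification rate, false alarm rate) at a given threshold.

    genuine_scores: scores of claims made by legitimate users.
    impostor_scores: scores of claims made by impostors.
    A claim is accepted when its score reaches the threshold.
    """
    accepted_genuine = sum(1 for s in genuine_scores if s >= threshold)
    accepted_impostor = sum(1 for s in impostor_scores if s >= threshold)
    return (accepted_genuine / len(genuine_scores),
            accepted_impostor / len(impostor_scores))
```

Sweeping the threshold and plotting the verification rate against the false alarm rate traces out the ROC curve.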

We evaluated our approach on three data sets. Set 1: a portion of the FERET data set containing images with various poses. In the FERET nomenclature, these images correspond to the series ba through bk. We omitted the images bj as the subjects present an expression that is not accounted for by our 3D Morphable Model. This data set includes 194 individuals across nine poses at constant lighting condition except for the series bk, which used a frontal view at another illumination condition than the rest of the images. Set 2: a portion of the CMU-PIE data set including images of 68 individuals at a neutral expression viewed from 13 different angles at ambient light. Set 3: a portion of the CMU-PIE data set containing images of the same 68 individuals at three poses (frontal, side, and profile) and illuminated by 21 different directions and by ambient light only. Among the 68 individuals in Set 2, a total of 28 wear glasses, which are not modeled and could decrease the accuracy of the fitting. None of the individuals present in these three sets was used to construct the 3D Morphable Model. These sets cover a large ethnic variety, not present in the set of 3D scans used to build the model. Refer to Chap. 13 for a formal description of the FERET and PIE sets of images.

Identification and verification are performed by fitting an input face image to the 3D Morphable Model, thereby extracting its identity parameters, α and β. Then recognition tasks are achieved by comparing the identity parameters of the input image with those of the gallery images. We defined the identity parameters of a face image, denoted by the vector c, by stacking the shape and texture parameters of the global and segmented models (see Sect. 6.2.5) and rescaling them by their standard deviations.

We defined two distance measures to compare two identity parameters c1 and c2. The first measure, dA, is based on the angle between the two vectors (it can also be seen as a normalized correlation), and is insensitive to the norm of both vectors. This is favorable for recognition tasks, as increasing the norm of c produces a caricature (see Sect. 6.2.4) which does not modify the perceived identity. The second distance [7], dW, is based on discriminant analysis [19] and favors directions where identity variations occur. Denoting by Cw the pooled within-class covariance matrix, these two distances are defined by:

Results on Sets 1 and 3 use the distance dW with, for Set 1, a within-class covariance matrix learned on Set 2, and vice versa.
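An angle-based measure of this kind can be sketched as a normalized correlation. The unweighted case corresponds to the description of dA. For the discriminant variant, the sketch assumes the same normalized form with an inner product weighted by a matrix W derived from the within-class statistics; that form, the function names, and the optional argument are assumptions made for illustration.

```python
import math


def weighted_inner(c1, c2, W=None):
    """Inner product of c1 and c2, optionally weighted by the matrix W."""
    if W is None:
        return sum(a * b for a, b in zip(c1, c2))
    return sum(c1[i] * W[i][j] * c2[j]
               for i in range(len(c1)) for j in range(len(c2)))


def angle_similarity(c1, c2, W=None):
    """Normalized correlation of two identity vectors (1 = same direction)."""
    norm = math.sqrt(weighted_inner(c1, c1, W) * weighted_inner(c2, c2, W))
    return weighted_inner(c1, c2, W) / norm
```

Scaling either vector leaves the result unchanged, which reflects the insensitivity to the norm noted above for caricatures.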

Pose Variation

In this section, we present identification and verification results for images of faces that vary in pose. Table 6.1 compares percentages of correct rank 1 identification obtained with the SNO and MFF fitting algorithms on Set 1 (FERET). Table 6.2 shows more details for the SNO fitting. The 10 poses were used to constitute gallery sets. The results are detailed for each probe pose. The results for the front view gallery (here in bold) were first reported in [7]. The first plot of Fig. 6.7 shows the ROC for a verification task for the front view gallery and the nine other poses in the probe set. The verification rate for a false alarm rate of 1% is 87.9%.

Pose and Illumination Variations

In this section, we investigate the performance of our method in the presence of combined pose and illumination variations. The SNO and the MFF algorithms were applied to the images of Set 3, CMU-PIE images of 68 individuals varying with respect to three poses, 21 directed lights, and ambient light conditions. Table 6.3 presents the rank 1 identification performance averaged over all lighting conditions for front, side, and profile view galleries. Illumination 13 was selected for the galleries. The second plot of Fig. 6.7 shows the ROC for a verification using as gallery a side view illuminated by light 13 and using all other images of the set as probes. The verification rate for a 1% false alarm rate was 77.5%. These results were first reported by Blanz and Vetter [7].

Table 6.1 Rank 1 identification rates obtained on Set 1 (subset of the FERET database) for the SNO and MFF fitting algorithms with the MPI and BFM models

| Probe view | Pose φ | SNO, MPI [7] | MFF, MPI [38] | MFF, BFM [34] |
|---|---|---|---|---|
| bb | 38.9° | 96.4% | 92.7% | 97.4% |
| bc | 27.4° | 99.0% | 99.5% | 99.5% |
| bd | 18.9° | 99.5% | 99.5% | 100.0% |
| be | 11.2° | Gallery | | |
| ba | 1.1° | 100.0% | 96.9% | 99.0% |
| bf | -7.1° | 97.4% | 99.5% | 99.5% |
| bg | -16.3° | 96.4% | 95.8% | 97.9% |
| bh | -26.5° | 95.9% | 89.6% | 94.8% |
| bi | -37.9° | 91.2% | 77.1% | 83.0% |
| bk | 0.1° | 94.3% | 80.7% | 90.7% |
| Mean | | 96.7% | 92.4% | 95.8% |

Table 6.2 SNO identification performances on Set 1 (subset of the FERET database): performance (%) by gallery view (rows) and probe view (columns)

| Gallery view | bi | bh | bg | bf | ba | be | bd | bc | bb | bk | Mean |
|---|---|---|---|---|---|---|---|---|---|---|---|
| φ | -37.9° | -26.5° | -16.3° | -7.1° | 1.1° | 11.2° | 18.9° | 27.4° | 38.9° | 0.1° | |
| bi | - | 98.5 | 94.8 | 87.6 | 85.6 | 87.1 | 87.1 | 84.0 | 77.3 | 76.8 | 86.5 |
| bh | 99.5 | - | 97.4 | 95.9 | 91.8 | 95.9 | 94.8 | 92.3 | 83.0 | 86.1 | 93.0 |
| bg | 97.9 | 99.0 | - | 99.0 | 95.4 | 96.9 | 96.9 | 91.2 | 81.4 | 89.2 | 94.1 |
| bf | 95.9 | 99.5 | 99.5 | - | 97.9 | 96.9 | 99.0 | 94.8 | 88.1 | 95.4 | 96.3 |
| **ba** | **90.7** | **95.4** | **96.4** | **97.4** | **-** | **99.5** | **96.9** | **95.4** | **94.8** | **96.9** | **95.9** |
| be | 91.2 | 95.9 | 96.4 | 97.4 | 100.0 | - | 99.5 | 99.0 | 96.4 | 94.3 | 96.7 |
| bd | 88.7 | 97.9 | 96.9 | 99.0 | 97.9 | 99.5 | - | 99.5 | 98.5 | 92.3 | 96.7 |
| bc | 87.1 | 90.7 | 91.2 | 94.3 | 96.4 | 99.0 | 99.5 | - | 99.0 | 87.6 | 93.9 |
| bb | 78.9 | 80.4 | 77.8 | 80.9 | 87.6 | 94.3 | 94.8 | 99.0 | - | 74.7 | 85.4 |
| bk | 83.0 | 88.1 | 92.3 | 95.4 | 96.9 | 94.3 | 93.8 | 88.7 | 79.4 | - | 90.2 |

The overall mean of the table is 92.9%. φ is the average estimated azimuth pose angle of the face. Ground truth for φ is not available. Condition bk has different illumination than the others. The row in bold is the front view gallery (condition ba).

Table 6.3 Mean percentage of correct identification obtained after SNO or MFF fitting on Set 3, averaged over all lighting conditions, for front, side, and profile view galleries

SNO [7]

| Gallery view | Front probe | Side probe | Profile probe | Mean |
|---|---|---|---|---|
| Front | 99.8% (97.1-100) | 97.8% (82.4-100) | 79.5% (39.7-94.1) | 92.3% |
| Side | 99.5% (94.1-100) | 99.9% (98.5-100) | 85.7% (42.6-98.5) | 95.0% |
| Profile | 83.0% (72.1-94.1) | 86.2% (61.8-95.6) | 98.3% (83.8-100) | 89.0% |
| Mean | | | | 92.1% |

MFF (with MPI model) [38]

| Gallery view | Front probe | Side probe | Profile probe | Mean |
|---|---|---|---|---|
| Front | 99.9% (98.5-100.0) | 98.4% (91.0-100.0) | 75.6% (38.8-94.0) | 91.3% |
| Side | 96.4% (89.6-100.0) | 99.3% (97.0-100.0) | 83.7% (52.2-100.0) | 93.1% |
| Profile | 76.3% (64.2-91.0) | 86.0% (67.2-98.5) | 89.4% (64.2-98.5) | 83.9% |

MFF (with BFM) [34]

| Gallery view | Front probe | Side probe | Profile probe | Mean |
|---|---|---|---|---|
| Front | 98.9% | 96.1% | 75.7% | 90.2% |
| Side | 96.9% | 99.9% | 87.8% | 94.9% |
| Profile | 79.0% | 89.0% | 98.3% | 88.8% |
| Mean | 91.6% | 95.0% | 87.3% | 91.3% |

Numbers in parentheses are percentages for the worst and best illumination within each probe set.
