Related Works

Applying the idea of manifold learning, that is, exploring local geometry information of data distribution, into semisupervised or transductive subspace selection leads to a new framework of dimension reduction by manifold regularization. One example is the recently proposed manifold regularized sliced inverse regression (MRSIR) [4]. Sliced inverse regression (SIR) was proposed for sufficient dimension reduction. In a regression setting, with the predictors X and the response Y, the sufficient dimension reduction (SDR) subspace B is defined by the conditional independency Y±X | BTX. Under the assumption that the distribution of X is elliptic symmetric, it has been proved that the SDR subspace B is related to the inverse regression curve E(X | Y). It can be estimated at least partially by a generalized eigendecomposition between the covariance matrix of the predictors Cov(X) and the covariance matrix of the inverse regression curve Cov(E(X | Y)). If Y is discrete, this is straightforward. While Y is continuous, it is discretized by slicing its range into several slices so as to estimate E(X | Y) at each slice.

Fig. 3.11 Point p is the projection of query x onto the feature line![tmpdece-252_thumb[2][2] tmpdece-252_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/06/tmpdece252_thumb22_thumb.png)

Suppose Γ and Σ are respectively the empirical estimates of Cov(E(X | Y)) and Cov(X) based on a training data set. Then, the SDR subspace B is given by

To construct the manifold regularization, [4] uses the graph Laplacian L of the training data![tmpdece-255_thumb[2][2] tmpdece-255_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/06/tmpdece255_thumb22_thumb.png) Letting

Letting![tmpdece-256_thumb[2][2] tmpdece-256_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/06/tmpdece256_thumb22_thumb.png)

with D being the degree matrix, then MRSIR is defined by

where η is a positive weighting factor. The use of manifold regularization extends SIR in many ways, that is, it utilizes the local geometry that is ignored originally and enables SIR to deal with the tranductive/semisupervised subspace selection problems.

So far we have introduced subspace selection methods that exploit local geometry information of data distribution. Based on these methods, classification can be performed in the low dimensional embedding. However, as the final goal is classification, an alternative approach is to do classification directly using the local geometry information. This generally leads to nonparametric classifiers, for example, nearest neighbor (NN) classifier. The problem is that simple NN classifier cannot provide satisfying recognition performance when data are of very high dimensions as in face recognition. To this end, Li and Liu proposed the nearest feature line (NFL) for face recognition [25, 26]. In NFL, a query is projected onto a line segment between any two instances within each class, and the nearest distance between the query and the projected point is used to determine its class label. Figure 3.11 shows an example of projecting a query x onto the feature line spanned by instances xi and x2, where the projected point p is given by

with

One extension of NFL is the nearest linear combination (NLC) [25]. There a query is projected onto a linear subspace spanned by a set of basis vectors, where the basis vectors can be any form from a subspace analysis or a set of local features, and the distance between the query and the projection point is used as the metric for classification. Empirical studies shown that NFL and NLC produces significantly better performance than the simple nearest neighborhood (NN) when the number of prototype templates (basis vectors representing the class) is small.

Another method related to NLC and NS approach is the sparse representation classifier (SRC) [47], which treats the face recognition problem as searching for an optimal sparse combination of gallery images to represent the probe one. SRC differs from the standard NLC in the norm used to define the projection distance. Instead of using the 2-norm as in NLC [25], SRC uses the 1- or 0-norm, such that the sparsity emerges.

Transfer Subspace Learning

Conventional algorithms including subspace selection methods are built under the assumption that training and test samples are independent and identically distributed (i.i.d.). For practical applications, however, this assumption cannot be hold always. Particularly, in face recognition, the difference of expressions, postures, aging problem and lighting conditions makes the distributions of training and test face different. To this end, a transfer subspace learning (TSL) framework is proposed [38]. TSL extends conventional subspace learning methods by using a Bregman divergence based regularization, which encourages the difference between the training and test samples in the selected subspace to be minimized. Thus, we can approximately assume the samples of training and test are almost i.i.d. in the learnt subspace.

TSL Framework

The TSL framework [38] is presented by the following unified form

where F(U) is the objective function of a subspace selection method, for example, FLDA or PCA et al., and DU(Pl \\Pu) is the Bregman divergence between the training data distribution Pl and the test data distribution Pu in the low dimension subspace U, and parameter λ controls the balance between the objective function and the regularization. Note that generally the objective function F(U) only depends on the training data.

For example, when F(U) is chosen to be FLDA’s objective, (32) will give a subspace in which the training and test data distributions are close to each other and the discriminative information in the training data is partially preserved.

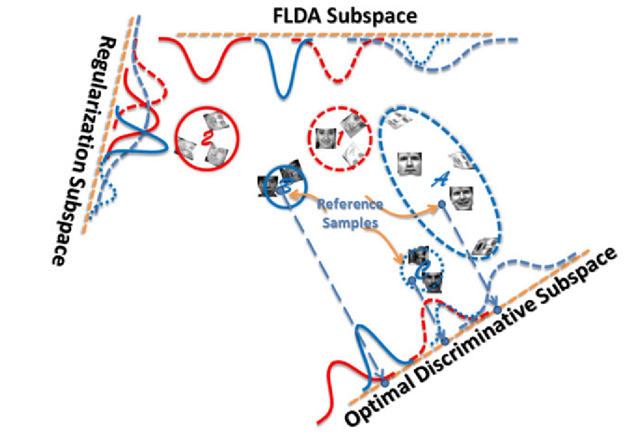

Fig. 3.12 Two classes of training samples are marked as 1 and 2, while three classes of test samples are marked as A, B and C. Blue circles A and C are merged together in the FLDA subspace, where discrimination of the training samples can be well preserved. Blue circles A and B are mixed in the regularization subspace, where there exists the smallest divergence between training domain (1, 2) and test domain (A, B and C). Blue circles A, B and C can be well separated in the discriminative subspace, which is obtained by optimizing the combination of the proposed regularization (the divergence between training sets 1, 2 and test sets A, B, C) and FLDA

In particular, suppose we have two classes of training samples, represented by two red circles (1 and 2, e.g., face images in the FERET dataset), and three classes of test samples, represented by three blue circles (A, B and C, e.g., face images in the YALE dataset), as shown in Fig. 3.12. On one hand, FLDA finds a subspace that fails to separate the test circle A from the test circle C, but the subspace is helpful to distinct different subjects in the training set. On the other hand, the minimization of the Bregman divergence between training and test distributions would give a subspace that makes the training data and test data almost i.i.d., but give little discriminative power. Apparently, neither of them individually can find a best discriminative subspace for test. However, as shown in the figure, a combination of FLDA and the Bregman regularization does find the optimal subspace for discrimination, wherein A, B and C can be well separated and samples in them can be correctly classified with given references. It is worth emphasizing that the combination works well because the training and test samples are coming from different domains but both domains share some common properties.

The authors suggest solving (3.36) by gradient descent method [38],

where τ is the learning rate, that is, step size for updating. As F(U) is usually known, so is its derivative. The problem remaining is how to estimate DU(Pl\\Pu) and its derivatives.

Definition 1 (Bregman divergence regularization) Let![tmpdece-265_thumb[2][2] tmpdece-265_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/06/tmpdece265_thumb22_thumb.png) be a convex function defined on a closed convex set

be a convex function defined on a closed convex set![]() We denote the first order derivative

We denote the first order derivative

of f as f ‘, whose inverse function as![tmpdece-267_thumb[2][2] tmpdece-267_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/06/tmpdece267_thumb22_thumb.png) The probability density for the training and test samples in the projected subspace U are pl(y) and pu(y) respectively, wherein y = UTx is the low-dimensional representation of the sample x. The difference at %(pi(y)) between the function f and the tangent line to f at

The probability density for the training and test samples in the projected subspace U are pl(y) and pu(y) respectively, wherein y = UTx is the low-dimensional representation of the sample x. The difference at %(pi(y)) between the function f and the tangent line to f at ![tmpdece-268_thumb[2][2] tmpdece-268_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/06/tmpdece268_thumb22_thumb.png) is given by:

is given by:

Based on (3.38), the Bregman divergence regularization, which measures the distance between pl(y) and pu (y), is a convex function given by

where άμ is the Lebesgue measure.

By taking a special form![tmpdece-275_thumb[2][2] tmpdece-275_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/06/tmpdece275_thumb22_thumb.png) can be expressed as [38]

can be expressed as [38]

Further, the kernel density estimation (KDE) technique is used to estimate Pl (y) and pu (y). Suppose there are nl training instances![]() i _ and nu test instances then through projection

i _ and nu test instances then through projection![tmpdece-280_thumb[2][2] tmpdece-280_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/06/tmpdece280_thumb22_thumb.png) we have the estimates [38]

we have the estimates [38]

and

where 0Σ1 (y) is a Gaussian kernel with covariance Σ1, so is 0Σ2(y). With these estimates, the quadratic divergence (3.40) is rewritten as [38]

where![]() Further, by basis matrix calculus, we have

Further, by basis matrix calculus, we have

Cross Domain Face Recognition

Based on the YALE, UMIST and a subset of FERET datasets, cross-domain face recognition is performed by applying the TSL framework. In detail, we have (1) Y2F: the training set is on YALE and the test set is on FERET; (2) F2Y: the training set is on FERET and the test set is on YALE; and (3) YU2F: the training set is on the combination of YALE and UMIST and the test set is on FERET. In the training stage, the labeling information of test images is blind to all subspace learning algorithms. However, one reference image for each test class is preserved so that the classification can be done in the test stage. The nearest neighbor classifier is adopted for classification, i.e., we calculate the distance between a test image and every reference image and predict the label of the test image as that of the nearest reference image.

We compare TSL algorithms, for example, TPCA, TFLDA, TLPP, TMFA, and TDLA, with conventional subspace learning algorithms, for example, PCA [23], FLDA [14], LPP [20], MFA [48], DLA [54] and the semi-supervised discriminant analysis (SDA) [8]. Table 3.2 shows the recognition rate of each algorithm with the corresponding optimal subspace dimension. In detail, conventional subspace learning algorithms, for example, FLDA, LPP and MFA, perform poorly because they assume training and test samples are i.i.d. variables and this assumption is unsuitable for cross-domain tasks. Although SDA learns a subspace by taking test samples into account, it assumes samples in a same class are drawn from an identical underlying manifold.

|

|

Y2F |

F2Y |

YU2F |

|

LDA |

39.71(70) |

36.36(30) |

29.57(30) |

|

LPP |

44.57(65) |

44.24(15) |

45.00(35) |

|

MFA |

40.57(65) |

34.54(60) |

27.85(70) |

|

DLA |

50.43(80) |

50.73(15) |

50.86(65) |

|

SDA |

44.42(65) |

41.81(40) |

32.00(35) |

|

MMDR |

45.60(60) |

42.00(75) |

49.75(80) |

|

TLDA |

57.28(15) |

50.51(20) |

55.57(45) |

|

TLPP |

58.28(30) |

53.93(25) |

58.42(30) |

|

TMFA |

63.14(70) |

56.96(35) |

65.42(70) |

|

TDLA |

63.12(60) |

61.82(30) |

65.57(70) |

Table 3.2 Recognition rates of different algorithms under three experimental settings. The number in the parenthesis is the corresponding subspace dimensionality

Therefore, SDA is not designed for the cross-domain tasks. Although MMDR considers the distribution bias between the training and the test samples, it ignores the discriminative information contained in the training samples. We have given an example in the synthetic data test to show that the training discriminative information is helpful to separate test classes. Example TSL algorithms perform consistently and significantly better than others, because the training discriminative information can be properly transferred to test samples by minimizing the distribution distance between the training and the test samples. In particular, TDLA performs best among all TSL examples because it inherits the merits of DLA in preserving both the discriminative information of different classes and the local geometry of samples in an identical class.

![tmpdece-254_thumb[2][2] tmpdece-254_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/06/tmpdece254_thumb22_thumb.png)

![tmpdece-261_thumb[2][2] tmpdece-261_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/06/tmpdece261_thumb22_thumb.png)

![tmpdece-262_thumb[2][2] tmpdece-262_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/06/tmpdece262_thumb22_thumb.png)

![tmpdece-264_thumb[2][2] tmpdece-264_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/06/tmpdece264_thumb22_thumb.png)

![tmpdece-273_thumb[2][2] tmpdece-273_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/06/tmpdece273_thumb22_thumb.png)

![tmpdece-274_thumb[2][2] tmpdece-274_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/06/tmpdece274_thumb22_thumb.png)

![tmpdece-277_thumb[2][2] tmpdece-277_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/06/tmpdece277_thumb22_thumb.png)

![tmpdece-284_thumb[2][2] tmpdece-284_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/06/tmpdece284_thumb22_thumb.png)

![tmpdece-285_thumb[2][2] tmpdece-285_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/06/tmpdece285_thumb22_thumb.png)

![tmpdece-286_thumb[2][2] tmpdece-286_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/06/tmpdece286_thumb22_thumb.png)

![tmpdece-289_thumb[2][2] tmpdece-289_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/06/tmpdece289_thumb22_thumb.png)