Abstract

Spam has become a major issue in computer security because it is a channel for threats such as computer viruses, worms and phishing. Many solutions feature machine-learning algorithms trained using statistical representations of the terms that usually appear in the e-mails. Still, these methods require a training step with labelled data. Dealing with the situation where the availability of labelled training instances is limited slows down the progress of filtering systems and offers advantages to spammers. Currently, many approaches direct their efforts into Semi-Supervised Learning (SSL). SSL is a halfway method between supervised and unsupervised learning, which, in addition to unlabelled data, receives some supervision information such as the association of the targets with some of the examples. Collective Classification for Text Classification poses as an interesting method for optimising the classification of partially-labelled data. In this way, we propose here, for the first time, Collective Classification algorithms for spam filtering to overcome the amount of unclassified e-mails that are sent every day.

Keywords: Spam filtering, collective classification, semi-supervised learning.

Introduction

Flooding inboxes with annoying and time-consuming messages, more than 85% of received e-mails are spam1.

Several approaches have been proposed by the academic community to solve the spam problem [1,2,3,4]. Among them, the termed as statistical approaches [5] use machine-learning techniques to classify e-mails. These approaches have proved their efficiency detecting spam and are the most extended technique to fight it. In particular, the use of the Bayes’ theorem is widely used by the anti-spam filters (e.g., SpamAssasin [6], Bogofilter [7], and Spamprobe [8]).

These statistical approaches are usually supervised, i.e., they need a training set of previously labelled samples. These techniques perform better as more training instances are available. It means that a significant amount of previous labelling work is needed to increase the accuracy of the models. This work includes a gathering phase in which as many e-mails as possible are collected. Then, each e-mail has to be classified as spam or legitimate. Finally, machine-learning models are generated based upon the labelled data.

This task is usually performed for text categorisation. Since text classification mostly uses the content of the documents and external sources to build accurate document classifiers, there is a great effort in the scientific community [9,10,11] directed towards the link structure among documents, to improve the performance of document classification.

The connections that can be found within documents vary from the most common citation graph, such as papers citing other papers or websites linking other websites, to links constructed from relationships including: co-author, co-citation, appearance at a conference venue, and others. The combination of these connections leads to the creation of an interlinked collection of text documents.

In some cases, it is interesting to determine the topic of not just a single document, but to infer it for a collection of unlabelled documents. Collective classification tries to collectively optimise the problem taking into account the connections present among the documents. This is a semi-supervised technique, i.e., uses both labelled and unlabelled data – typically a small amount of labelled data and a large amount of unlabelled data – that reduces the labelling work.

Given this background, we propose the first spam filtering system that uses collective classification to optimise the classification performance. Through this approach, we minimise the necessity of labelled e-mails without a significant penalisation of the accuracy of detection.

Summarising, our main findings are the following: (i) we describe how to adopt collective classification for spam filtering, (ii) we try to determine which is the optimal size of the labelled dataset for collective-classification-based spam filtering, and (iii) we show that this approach can reduce the efforts of labelling e-mails while maintaining a high accuracy rate.

The reminder of this paper is organised as follows. Section 2 describes the process of using collective classification applied to the spam filtering problem. Section 3 details the experiments performed and presents the results. Finally, Section 4 concludes and outlines avenues for future work.

Collective Classification for Spam Filtering

Collective classification is a combinatorial optimization problem, in which we are given a set of documents, or nodes, D ={di,…, dn} and a neighbourhood function N, where ,![]() , which describes the underlying network structure [12]. Being D a random collection of documents, it is divided into two sets X and y where X corresponds to the documents for which we know the correct values and y are the documents whose values need to be determined. Therefore, the task is to label the nodes

, which describes the underlying network structure [12]. Being D a random collection of documents, it is divided into two sets X and y where X corresponds to the documents for which we know the correct values and y are the documents whose values need to be determined. Therefore, the task is to label the nodes![]() with one of a small number of labels, L = {ll,…,lq}.

with one of a small number of labels, L = {ll,…,lq}.

Since the spam problem can be tackled as a text classification problem, we use the Waikato Environment for Knowledge Analysis (WEKA) [13] and its

Semi-Supervised Learning and Collective Classification plugin2. In the remainder of this section we review the collective algorithms used in the empirical evaluation.

CollectiveIBk

It uses internally WEKA’s classic IBk algorithm, implementation of the K-Nearest Neighbour (KNN), to determine the best k on the training set and builds then, for all instances from the test set, a neighbourhood consisting of k instances from the pool of train and test set (either a nai’ve search over the complete set of instances or a k-dimensional tree is used to determine neighbours). All neighbours in such a neighbourhood are sorted according to their distance to the test instance they belong to. The neighbourhoods are sorted according to their ‘rank’, where ‘rank’ means the different occurrences of the two classes in the neighbourhood.

For every unlabelled test instance with the highest rank, the class label is determined by majority vote or, in case of a tie, by the first class. This is performed until no further unlabelled test instances remain. The classification terminates by returning the class label of the instance that is about to be classified.

CollectiveForest

It uses WEKA’s implementation of RandomTree as base classifier to divide the test set into folds containing the same number of elements. The first iteration trains using the original training set and generates the distribution for all the instances in the test set. The best instances are then added to the original training set (being the number of instances chosen the same as in a fold).

The next iterations train with the new training set and generate then the distributions for the remaining instances in the test set.

CollectiveWoods and CollectiveTree

CollectiveWoods works like CollectiveForest using CollectiveTree instead of Ran-domTree.

Collective tree is similar to WEKA’s original RandomTree classifier, it splits the attribute at that position that divides the current subset of instances (training and test instances) into two halves. The process finishes if one of the following conditions is met:

— Only training instances would be covered (the labels for these instances are already known).

— Only test instances in the leaf, case in which distribution from the parent node is taken.

— Only training instances of one class, case in which all test instances are considered to have this class.

To calculate the class distribution of a complete set or a subset, the weights are summed up according to the weights in the training set, and then normalised. The nominal attribute distribution corresponds to the normalised sum of weights for each distinct value and, for the numeric attribute, distribution of the binary split based on median is calculated and then the weights are summed up for the two bins and finally normalised.

RandomWoods

It works like WEKA’s classic RandomForest but using CollectiveBagging (classic Bagging, a machine learning ensemble meta-algorithm to improve stability and classification accuracy, extended to make it available to collective classifiers) in combination with CollectiveTree in contrast to RandomForest, which uses Bagging and RandomTree.

Empirical Evaluation

To evaluate the collective algorithms we used the Ling Spam3 and SpamAssas-sin4 datasets. Ling Spam consists of a mixture of both spam and legitimate messages retrieved from the Linguistic list, an e-mail distribution list about linguistics. It comprises 2,893 different e-mails, of which 2,412 are legitimate e-mails obtained by downloading digests from the list and 481 are spam e-mails retrieved from one of the authors of the corpus (for a more detailed description of the corpus please refer to [14,15]). From the 4 different datasets provided in this corpus, each of one with different pre-process steps, we choose the Bare dataset, which has no pre-processing.

The SpamAssassin public mail corpus is a selection of 1,897 spam messages and 4,150 legitimate e-mails. Unfortunatelly, due to computational restrictions we were obliged to reduce the dataset to a 50%, so the final used dataset comprises 3,023 e-mails, of which 964 are spam e-mails and 2,059 are legitimate messages.

In addition, we performed for both datasets a Stop Word Removal [16] based on an external stop-word list5 and removed any non alpha-numeric character.

We then used the Vector Space Model (VSM) [17], an algebraic approach for Information Filtering (IF), Information Retrieval (IR), indexing and ranking, to create the model. This model represents natural language documents in a mathematical manner through vectors in a multidimensional space.

We extracted the top 1,000 attributes using Information Gain [18], an algorithm that evaluates the relevance of an attribute by measuring the information gain with respect to the class:![]()

P(Ci)) where Ci is the i-th class, vj is the value of the j-th interpretation, P (vj, Ci) is the probability that the j-th attribute has the value vj in the class

Ci, P(vj) is the probability that the j-th interpretation has the value Vj in the training data, and P(Ci) is the probability of the training dataset belonging to the class Ci.

We constructed an ARFF file [19] (i.e., Attribute Relation File Format) with the resultant vector representations of the e-mails to build the aforementioned WEKA’s classifiers.

To evaluate the results, we measured the most frequently used for spam: precision, recall and Area Under the ROC Curve (AUC). We measured the precision of the spam identification as the number of correctly classified spam emails divided by the number of correctly classified spam e-mails and the number of legitimate e-mails misclassified as spam,![]() , where

, where ![]() is the number of correctly classified spam and

is the number of correctly classified spam and![]() is the number of legitimate e-mails misclassified as spam.

is the number of legitimate e-mails misclassified as spam.

Additionally, we measured the recall of the spam e-mail messages, which is the number of correctly classified spam e-mails divided by the number of correctly classified spam e-mails and the number of spam e-mails misclassified as legitimate,![]() .

.

Finally, we measured the Area Under the ROC Curve (AUC), which establishes the relation between false negatives and false positives [20]. The ROC curve is represented by plotting the rate of true positives (TPR) against the rate of false positives (FPR). Where the TPR is the number of spam messages correctly detected divided by the total number of junk e-mails, TPR = TP/(TP + FN), and the FPR is the number of legitimate messages misclassified as spam divided by the total number of legitimate e-mails, FPR = FP/(FP + TN).

For our experiments we tested the different configurations of the collective algorithms with sizes for the X set of known instances, varying from a 10% to a 90% of the instances used for training (i.e., instances known during the test).

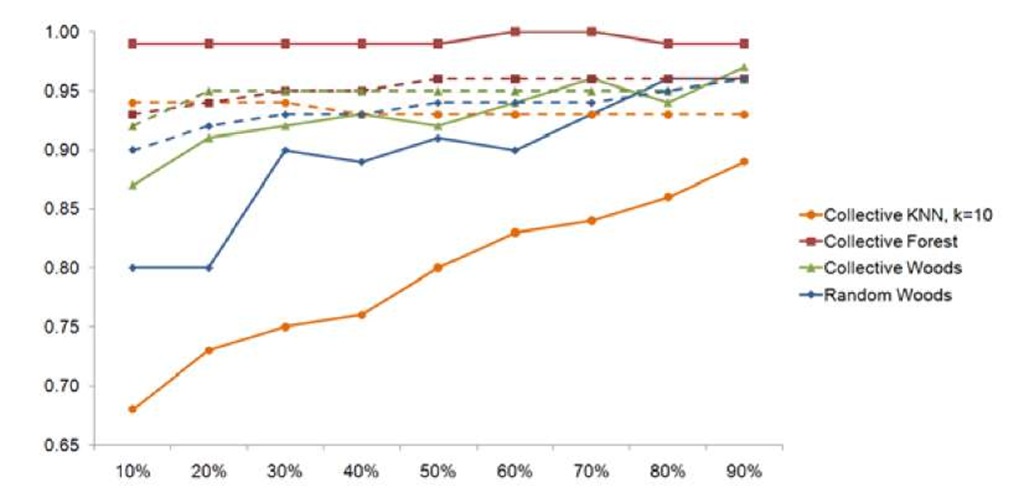

Fig. 1 shows the precision of the different algorithms. Collective KNN shows significant improvements with Ling Spam when the number of known instances increases (from 0.68 with 10% to 0.89 with 90%), but remains constant with SpamAssassin (between 0.93 and 0.94).

Fig. 1. Precision of the evaluation of collective algorithms for spam filtering with different sizes for the X set of known instances. Solid lines correspond to Ling Spam and dashed lines correspond to SpamAssassin.

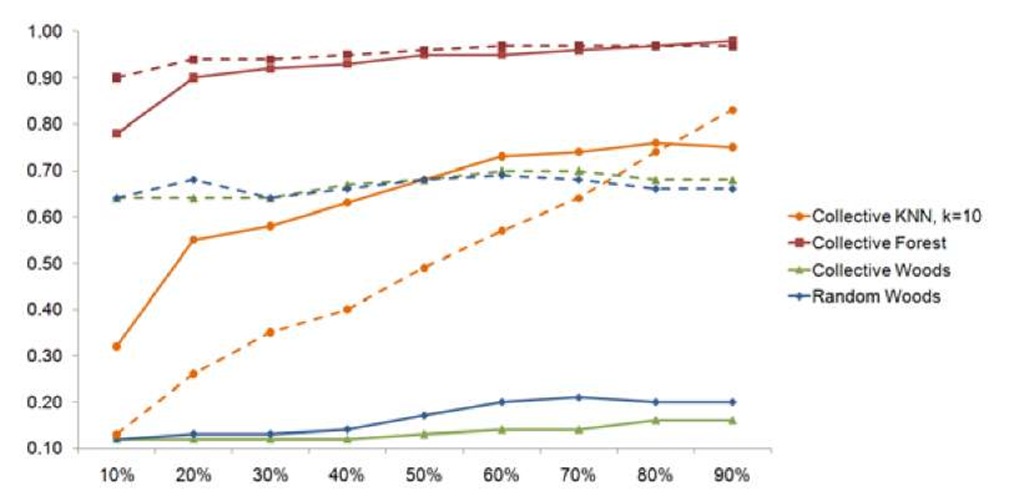

Fig. 2. Recall of the evaluation of collective algorithms for spam filtering with different sizes for the X set of known instances. Solid lines correspond to Ling Spam and dashed lines correspond to SpamAssassin.

Collective Forest was the best collective algorithm when evaluating the precision achieving between 0.99 and 1.00 for Ling Spam and no less than 0.93 for SpamAssassin. Finally, Collective Woods and Random Woods experience some improvements when increasing the number of known instances when testing with both datasets.

Fig. 2 shows the recall of the different algorithms. Again, Collective KNN shows better results, although not good enough, when the number of known instances increases: from a 0.32 with 10% to 0.75 with 90% for Ling Spam and from 0.13 with 10% to 0.83 with 90%. Collective Forest presents a poor 0.78 for 10% with Ling Spam but behaves better with the rest of configurations in both datasets: a minimum of 90% and a maximum of 0.97. Finally, Collective Woods and Random Woods behave similar, with very poor recall, achieving maximums with 90% of 0.16 and 0.20 respectively for Ling Spam and 0.68 and 0.66 for SpamAssassin.

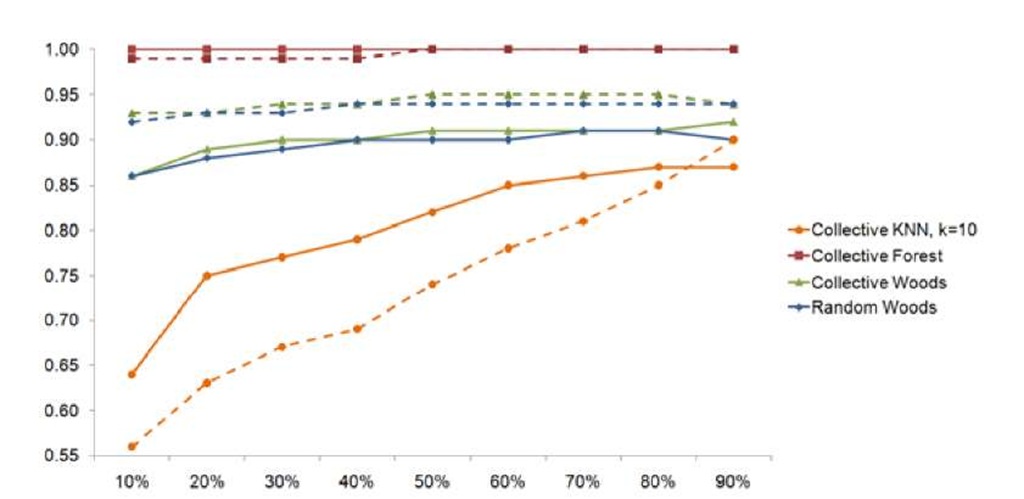

Fig. 3. Area under de ROC curve (AUC) evaluation of collective algorithms for spam filtering with different sizes for the X set of known instances. Solid lines correspond to Ling Spam and dashed lines correspond to SpamAssassin.

Finally, Fig. 3 shows the Area under de ROC curve (AUC) of the different algorithms. Once more, the performance of Collective KNN increases with more known instances: from 0.64 with 10% to 0.87 with 90% for Ling Spam and from 0.56 to 0.90 for SpamAssassin. Collective Forest offers a perfect 1.00 for every configuration with Ling Spam and a minimum of 0.99 with SpamAssassin posing as a suitable choice for collective classification. Finally, Collective Woods and Random Woods offer similar results, increasing from 0.86 both to 0.92 and 0.90 respectively with Ling Spam and from 0.93 and 0.92 to 0.94 both with SpamAssassin.

Discussion and Concluding Remarks

Collective Classification algorithms for spam filtering pose as a suitable approach for optimising the classification of partially-labelled data and, therefore, overcome the amount of unclassified spam e-mails that are created every day.

In particular, Collective Forest shows great results for every configuration of known instances (i.e., different sizes for the X set of known instances), with values above 0.93 of precision, above 0.90 of recall (only offering a poor recall of 0.78 with a 10% of X) and almost 1.00 for all configurations of AUC.

Since precision and AUC are slightly affected with the variation of known instances, values of X, to determine the optimal size of labelled data, and assuming that Collective Forest is the chosen algorithm, the recall should be the factor to take into account. For a value of X = 60%, CollectiveForest achieves its maximums, only experiencing a loss of 0.03 of recall for Ling Spam.

As the number of unsolicited bulk messages increases, the classification and labelling steps, that commonly supervised methods make use of, become more unattainable. To revert this situation, we propose the first spam filtering system that uses collective classification to optimise classification performance. Through the algorithms introduced, the necessity of labelled e-mails is minimised, by a 40%, without a significant penalisation in the detection capabilities.

Future work will be focused on three main directions. First, we plan to extend our study of collective classification by applying more algorithms to the spam problem. Second, we will select different features as data to train the models. Finally, we will perform a more complete analysis on the effects of the labelled degree of the data.