perhaps you just want to spend six months and get as much improvement as you can. Your starting point will be a specific release of your program, a specific machine to run it on, and a very well-defined set of input data. You absolutely must have an unambiguous, repeatable test case for which you know the statistics.

Things you may have to control for include other activity on the test machine, unanticipated network traffic, file layout on your disk(!), etc. Once you have all of that, you will generally find that most of the time is spent in a few small loops. Once you're convinced that these loops really are the right ones, you'll separate them out into their own little testbeds and verify that you can reproduce the same behavior there. Finally, you will apply your efforts to these testbeds, investigating them in great detail and experimenting with the different techniques below.

When you feel confident that you've done your best with them, you'll compare the before-and-after statistics in the testbeds, then integrate the changes and repeat the tests in the original system. It is vitally important that you repeat the test in both the original version and the new version. Far, far too many times people have discovered that "something changed," that the original program now completes the test faster than before, and that the extensive optimizations they performed didn't actually make any improvement at all.
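A minimal sketch of repeating the same test against both versions, assuming each version of a testbed loop can be wrapped as a Python callable. The names `measure_rate`, `compare_versions`, `original_loop`, and `modified_loop` are hypothetical, chosen for illustration. The sketch alternates between the two versions on every trial (a choice made here, not something the text prescribes) so that a change in machine conditions partway through the session affects both sets of results.

```python
import time
from typing import Callable, List, Tuple


def measure_rate(work: Callable[[], None], iterations: int) -> float:
    """Call `work` `iterations` times and return completed calls per second."""
    start = time.perf_counter()
    for _ in range(iterations):
        work()
    elapsed = time.perf_counter() - start
    return iterations / elapsed


def compare_versions(original: Callable[[], None],
                     modified: Callable[[], None],
                     iterations: int = 20,
                     trials: int = 4) -> Tuple[List[float], List[float]]:
    """Measure both versions `trials` times each, alternating between them
    so that both see roughly the same machine conditions."""
    original_rates: List[float] = []
    modified_rates: List[float] = []
    for _ in range(trials):
        original_rates.append(measure_rate(original, iterations))
        modified_rates.append(measure_rate(modified, iterations))
    return original_rates, modified_rates


# Hypothetical stand-ins for the "before" and "after" testbed loops.
def original_loop() -> None:
    total = 0
    for i in range(10_000):
        total += i * i


def modified_loop() -> None:
    sum(i * i for i in range(10_000))


if __name__ == "__main__":
    before, after = compare_versions(original_loop, modified_loop)
    for trial, (b, a) in enumerate(zip(before, after), start=1):
        print(f"trial {trial}: original {b:.1f}/s, modified {a:.1f}/s")
```

Each version yields a list of per-trial rates, which is the form of data summarized in Table 15-1.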

Is It Really Faster?

Even "simple, deterministic programs" show variation in their runtimes. External interrupts, CPU

selection, VM page placement, file layout on disks, etc., can cause wide variation in runtimes. A

difference of 20% between two runs of the same "deterministic" CPU-bound program is not

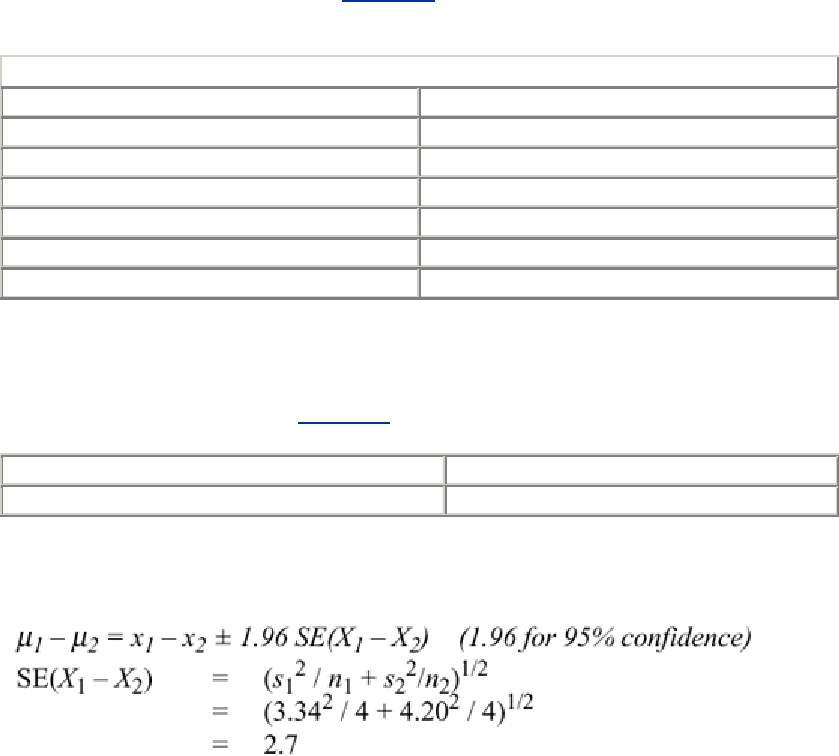

unusual. Consider the runtimes listed in Table 15-1. A program was run four times, giving the first

set of results. It was changed, recompiled, and gave the second set of results.

Table 15-1. Runtimes for Four Trials

| Trial | Run 1 (original program) | Run 2 (changed program) |
|---|---|---|
| 1 | 27.665667/s | 28.560094/s |
| 2 | 23.503779/s | 28.000473/s |
| 3 | 20.414748/s | 25.274012/s |
| 4 | 20.653608/s | 35.249477/s |
| Mean rate | 23.05/s | 28.27/s |
| Standard deviation | 3.34/s | 4.20/s |
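The variation described above can be read straight off the per-trial rates. As a small illustration, this sketch computes how much faster the fastest trial in each column is than the slowest, and whether the two columns' ranges overlap. The helper name `relative_spread` is hypothetical.

```python
# Rates (per second) for the four trials in Table 15-1.
run1 = [27.665667, 23.503779, 20.414748, 20.653608]  # original program
run2 = [28.560094, 28.000473, 25.274012, 35.249477]  # changed program


def relative_spread(rates):
    """Percentage by which the fastest trial beats the slowest one."""
    return (max(rates) / min(rates) - 1) * 100


for name, rates in (("Run 1", run1), ("Run 2", run2)):
    print(f"{name}: fastest trial is {relative_spread(rates):.1f}% faster than slowest")

# If the best original trial beats the worst changed trial, a single
# before/after pair of runs could have pointed either way.
print("ranges overlap:", max(run1) > min(run2))
```

For these data the spread is about 35.5% in Run 1 and 39.5% in Run 2, and the ranges overlap: the best original trial (27.67/s) is faster than the worst changed trial (25.27/s).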

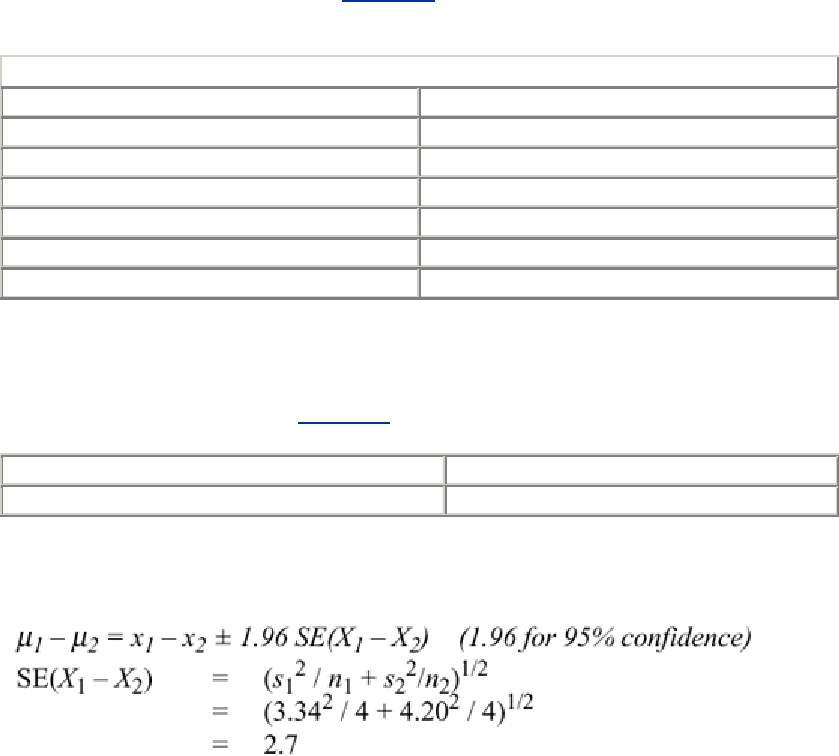

The question is: How sure can we be that the difference we measured reflects a real difference between the actual means? Answering it requires only a tiny bit of statistics, which you can take straight from a statistics book, or you can even "eyeball" the data. You just have to know what you're looking for. We want to know this:

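$$\bar{x}_1 = 23.05, \quad s_1 = 3.34, \qquad \bar{x}_2 = 28.27, \quad s_2 = 4.20$$

where $\bar{x}_1$ and $s_1$ are the mean rate and standard deviation of Run 1, and $\bar{x}_2$ and $s_2$ are those of Run 2.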
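One standard textbook way to make this comparison is Student's two-sample t-test with pooled variance, sketched here from the summary statistics of Table 15-1. It is not necessarily the exact criterion the text applies. The critical values are the usual two-sided 95% entries from a t table, and the test assumes roughly normal samples with equal variances. With four trials per version, the pooled statistic equals the Welch statistic here, although the two forms use different degrees of freedom.

```python
import math

# Two-sided 95% critical values of Student's t, by degrees of freedom,
# as listed in standard t tables.
T_CRIT_95 = {4: 2.776, 5: 2.571, 6: 2.447, 7: 2.365, 8: 2.306}


def pooled_t(n1, mean1, sd1, n2, mean2, sd2):
    """Student's two-sample t statistic with pooled variance.

    Returns (t, degrees_of_freedom). Assumes both samples are roughly
    normal with equal variances.
    """
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * sd1 ** 2 + (n2 - 1) * sd2 ** 2) / df
    std_err = math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return (mean2 - mean1) / std_err, df


# Summary statistics from Table 15-1 (four trials per version).
t, df = pooled_t(4, 23.05, 3.34, 4, 28.27, 4.20)
significant = abs(t) > T_CRIT_95[df]
print(f"t = {t:.3f}, df = {df}, significant at 95%: {significant}")
```

This prints `t = 1.946, df = 6, significant at 95%: False`: under this particular test, four trials per version are not enough to establish the measured difference at the 95% level.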

The answer is that for four measurements (which isn't very many), looking for the usual 95% confidence level:
