Preface

One day like many, on a JBoss AS Forum:

"Hi

I am running the Acme project using JBoss 5.1.0. My requirement is to allow 1000 concurrent users to access the application. But when I try to access the application with 250 users, the server slows down and finally throws an exception "Could not establish a connection with the database." Does anyone have an idea please help me to solve my problem."

In the beginning, performance was not a concern for software. Early programming languages like C or COBOL did a decent job of developing applications, and end users were just discovering the wonders of information technology that would allow them to save a lot of time.

Today we are all aware of the rapidly changing business environment in which we work and live and the impact it has on business and information technology. We recognize that an organization needs to deliver faster services to a larger set of people and companies, and that downtime or poor responses of those services will have a significant impact on the business.

To survive and thrive in such an environment, organizations must treat it as imperative to deliver applications faster than their competitors, or they risk losing potential revenue and their reputation among customers.

So tuning an application in today’s market is first of all a necessity for survival, but there are also more subtle reasons, like using your system resources more efficiently. For example, if you manage to meet your system requirements with lower fixed costs (let’s say by using an 8-CPU machine instead of a 16-CPU one), you are using your resources more efficiently and thus saving money. As an additional benefit, you can also reduce some variable costs, like the price of software licenses, which are usually calculated on the number of CPUs used.

On the basis of these premises, it’s time to reconsider the role of performance tuning in your software development cycle, and that’s what this topic aims to do.

What will you get from this topic?

This topic is an exhaustive guide to improving the performance of your Java EE applications running on JBoss AS and on the embedded web container (Jakarta Tomcat). All the guidelines and best practices contained in this topic have been patiently collected through years of experience in the trenches, from the suggestions of valuable people, and from a myriad of blogs; each source has contributed to improving the quality of this topic.

The performance of an application running on the application server is the result of a complex interaction of many aspects. Like a puzzle, each piece ultimately contributes to the performance of the final product. So our challenge will be to teach you how to write fast applications on JBoss AS, but also how to tune all the components and hardware which are part of the IT system. As we assume that our prime reader is not interested in learning the basics of the application server, nor how to get started with Java EE, we will go straight to the heart of each component and elaborate on the strategies to improve its performance.

What is performance?

The term "performance" commonly refers to how quickly an application can be executed. In terms of the user’s perspective on performance, the definition is quite easy to grasp. For example, a fast website means one that is able to load web pages very quickly. From an administrator’s point of view, the concept needs to be translated into meaningful numbers. As a matter of fact, the expert can distinguish two ways to measure the performance of an application:

• Response Time

• Throughput

The Response Time can be defined as the time it takes for one user to perform a task. For example, on a website, after the customer submits an e-commerce form, the time it takes to process the order and to render and display the result in a new page is the response time for this functionality. As you can see, the concept of performance is essentially the same as the end user’s, but it is translated into numbers.

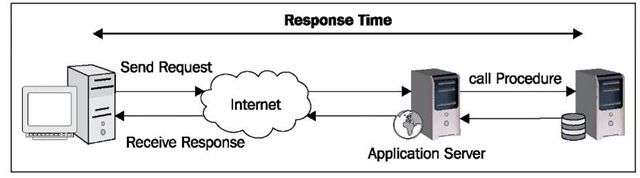

In practice, as shown in the following image, the Response Time includes the network roundtrip to the application server, the time to execute the business logic in your middleware (including the time to contact external legacy systems) and the latency to return the response to the client.

At this point the concept of Response Time should be quite clear, but you might wonder if this measurement is a constant; actually it is not. The Response Time changes with the load on the application. A single operation cannot be indicative of the overall performance: you have to consider how long the procedure takes to execute in a production environment, where a considerable number of customers are active at the same time.

Another performance-related counter is Throughput. Throughput is the number of transactions that can occur in a given amount of time. This is a fundamental parameter that is used to evaluate not only the performance of a website, but also the commercial value of a software product. Throughput is usually measured in Transactions Per Second (TPS), and an application with a higher TPS than its competitors is generally also the one with the higher commercial value, all other features being equal.
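To make the two counters concrete, here is a minimal Java sketch that derives both from raw samples. The class name `PerfMetrics`, its method names, and the sample values are hypothetical and chosen only for illustration; it assumes you have already recorded the response time of each request in milliseconds, together with the length of the observation window.

```java
public final class PerfMetrics {

    private PerfMetrics() {
    }

    /** Mean Response Time in milliseconds over all sampled requests. */
    public static double meanResponseMillis(long[] responseMillis) {
        if (responseMillis.length == 0) {
            throw new IllegalArgumentException("at least one sample is required");
        }
        long sum = 0;
        for (long ms : responseMillis) {
            sum += ms;
        }
        return (double) sum / responseMillis.length;
    }

    /** Throughput in Transactions Per Second: completed transactions per elapsed second. */
    public static double throughputTps(int completedTransactions, long windowMillis) {
        if (windowMillis <= 0) {
            throw new IllegalArgumentException("the observation window must be positive");
        }
        return completedTransactions * 1000.0 / windowMillis;
    }

    public static void main(String[] args) {
        // Hypothetical samples: five requests completed within a 2-second window.
        long[] samples = {120, 80, 100, 300, 400};
        System.out.println(meanResponseMillis(samples));          // prints 200.0
        System.out.println(throughputTps(samples.length, 2000));  // prints 2.5
    }
}
```

Note that the two numbers answer different questions: the first describes what a single user experiences, the second how much work the whole system completes, which is why both are normally reported together.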

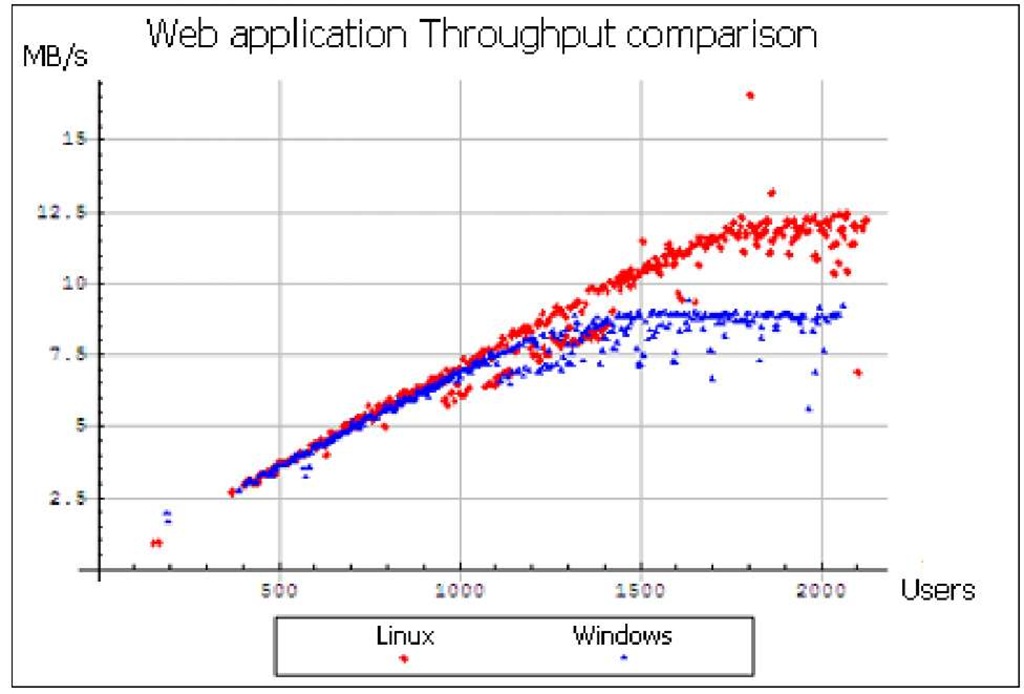

The following image, depicts a Throughput comparison between a Linux Server and a Windows Server, as part of a complete benchmark (http://www.webperformanceinc.com/library/reports/windows_vs_linux_part1/index.html):

Scalability: the other side of performance

As we have just learnt, we cannot define performance within the context of a single user who is testing the application. The performance of an application is tightly coupled with the number of users, so we need to define another variable which is known as Scalability. Scalability refers to the capability of a system to increase total Throughput under an increased load when resources are added. It can be seen from two different perspectives:

• Vertical scalability: (otherwise known as scaling up) means to add more hardware resources to the same machine, generally by adding more processors and memory.

• Horizontal scalability: (otherwise known as scaling out) means to add more machines into the mix, generally cheap commodity hardware.

The following image is a synthetic representation of the two different perspectives:

Both solutions have pros and cons: generally vertical scaling requires a greater hardware expenditure because it needs upgrading to powerful enterprise servers, but it’s easier to implement as it requires fewer changes in your configuration.

Horizontal scaling, on the other hand, requires a smaller investment in cheaper hardware (whose cost grows linearly), but it introduces a more complex programming model; it therefore needs an expert hand for configuration and might require some changes in your application too.

You should also consider that concentrating all your resources on a single machine, as happens with an extreme form of vertical scaling, introduces a single point of failure.
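Since Scalability was defined above as the increase in total Throughput when resources are added, one simple way to quantify it is to compare the Throughput measured before and after scaling. The sketch below is an assumption of ours rather than a metric taken from a specific tool: `speedup` and `efficiency` are illustrative names, and the TPS figures are made up.

```java
public final class ScalingSketch {

    private ScalingSketch() {
    }

    /** Speedup: Throughput after adding resources divided by the baseline Throughput. */
    public static double speedup(double baselineTps, double scaledTps) {
        return scaledTps / baselineTps;
    }

    /**
     * Efficiency: speedup divided by the factor by which resources grew.
     * A value of 1.0 would mean perfectly linear scaling.
     */
    public static double efficiency(double baselineTps, double scaledTps, double resourceFactor) {
        return speedup(baselineTps, scaledTps) / resourceFactor;
    }

    public static void main(String[] args) {
        // Hypothetical measurements: 200 TPS on one node, 700 TPS on four nodes.
        System.out.println(speedup(200.0, 700.0));          // prints 3.5
        System.out.println(efficiency(200.0, 700.0, 4.0));  // prints 0.875
    }
}
```

The same calculation applies to both perspectives: for vertical scaling the resource factor is the growth in processors or memory on one machine, for horizontal scaling it is the growth in the number of machines.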

The tuning process

At this point you will have grasped that performance tuning spans several components, including the application delivered and the environment where it is running. However, we haven’t addressed when is the right moment to start tuning your applications. This is one of the most underestimated issues in software development, and it is commonly solved by applying tuning at only two stages:

• While coding your classes

• At the end of software development

Tuning your applications as you code is a consolidated habit of software developers, first of all because it’s fun and satisfying to optimize your code and see an immediate improvement in the performance of a single function. However, the other side of the coin is that most of these optimizations are useless. Why? It is commonly observed that within one application only 10-15% of the code is executed frequently, so trying to optimize code blindly at this stage will produce little or no benefit to your application.
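The alternative to blind optimization is to measure first and then concentrate on the small part of the code where the time is actually spent. The following sketch assumes you already have the cumulative time per method (for example from a profiler); the class `HotSpotSketch`, the method names in the sample profile, and the 80% threshold are all hypothetical.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public final class HotSpotSketch {

    private HotSpotSketch() {
    }

    /**
     * Returns the most expensive methods, in descending order of cost, that
     * together account for at least the given share (0.0 to 1.0) of total time.
     */
    public static List<String> hotSpots(Map<String, Long> millisByMethod, double share) {
        long total = 0;
        for (long ms : millisByMethod.values()) {
            total += ms;
        }
        List<Map.Entry<String, Long>> entries = new ArrayList<>(millisByMethod.entrySet());
        entries.sort((a, b) -> Long.compare(b.getValue(), a.getValue()));

        List<String> hot = new ArrayList<>();
        long covered = 0;
        for (Map.Entry<String, Long> entry : entries) {
            if (covered >= share * total) {
                break; // the methods collected so far already cover the requested share
            }
            hot.add(entry.getKey());
            covered += entry.getValue();
        }
        return hot;
    }

    public static void main(String[] args) {
        // Hypothetical profile: cumulative milliseconds spent in each method.
        Map<String, Long> profile = new LinkedHashMap<>();
        profile.put("renderTree", 50L);
        profile.put("loadOrders", 700L);
        profile.put("formatDate", 30L);
        profile.put("validateForm", 20L);
        profile.put("buildReport", 200L);
        System.out.println(hotSpots(profile, 0.8)); // prints [loadOrders, buildReport]
    }
}
```

In this made-up profile two methods out of five account for 90% of the time, so optimizing the other three would hardly be noticed by the end user.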

The second favorite anti-pattern adopted by developers is starting the tuning process only at the end of the software development cycle. There are good reasons to consider this a bad habit. Firstly, your tuning session will be longer and more complex: you have to analyze the whole application roundtrip again while hunting for bottlenecks. Secondly, supposing you are able to isolate the cause of the bottleneck, you still might be forced to modify critical sections of your code, which, at this stage, can easily turn into a nightmare.

Think, for example, of an application which uses a set of JSF components to render trees and tables. If you discover that your JSF library slows to a crawl when dealing with production data, there is very little you can do at this stage: either you rewrite the whole frontend or you find a new job.

So the moral of the story is: you cannot think of performance as a single step in the software development process; it needs to be a part of your overall software development plan. Achieving maximum performance from your software requires continued effort throughout all phases of development, not just coding. In the next section we will try to uncover how performance tuning fits in the overall software development cycle.

Tuning in the software development cycle

Having determined that tuning needs to be a part of the software development cycle, let’s have a look at the software cycle with performance engineering integrated.

As you can see, the software process contains a set of activities (Analysis, Design, Coding, and Performance Tuning) which should be familiar to analyst programmers, but with two important additions. Firstly, there is a new phase called Performance Test, which begins at the end of the software development cycle and measures and evaluates the complete application. Secondly, every software phase contains Performance focal points, which are appropriate for that software segment.

Now let’s see in more detail how a complete software cycle is carried out with performance in mind:

• Analysis: Producing high quality, fast applications always starts with a correct analysis of your software requirements. In this phase you have to define what the software is expected to do by providing a set of scenarios that illustrate your functional domain. This translates into creating use cases, which are diagrams that describe the interactions of users with the system. These use cases are a crucial step in determining what type of benchmarks are needed by your system: for example, here we assume that your application will be accessed by 500 concurrent users, each of whom will start a database connection to retrieve data from a database as well as use a JMS connection to fire an action. Software analysis, however, extends beyond the software requirements and should consider critical information, such as the kind of hardware where the application will run or the network interfaces that will support its communication.

• Design: In this phase, the overall software structure and its nuances are defined. Critical points like the number of tiers needed for the package architecture, the database design, and the data structure design are all defined in this phase. A software development model is thus created. The role of performance in this phase is fundamental; architects should do the following:

° Quickly evaluate different algorithms, data structures, and libraries to see which are most efficient.

° Design the application so that it is possible to accommodate any changes if there are new requirements that could impact performance.

• Code: The design must now be translated into a machine-readable form. The code generation step performs this task. If the design has been carried out in a detailed manner, code generation can be accomplished without much complication. If you have completed the previous phases with an eye on tuning, you should partially know which functions are critical for the system, and code them in the most efficient way. We say "partially" because only when you have written the last line of code will you be able to test the complete application and see where it runs quickly and where it needs to be improved.

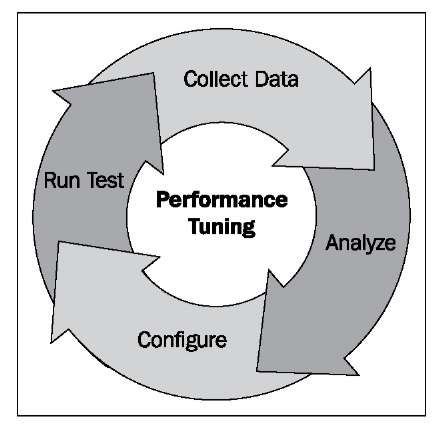

• Performance Test: This step completes the software production cycle and should be performed before releasing the application into production. Even if you have been meticulous at performing the previous steps, it is absolutely normal that your application doesn’t meet all the performance requirements on the first try. In fact, you cannot predict every aspect of performance, so it is necessary to complete your software production with a performance test. A performance test is an iterative process that you use to identify and eliminate bottlenecks until your application meets its performance objectives. You start by establishing a baseline. Then you collect data, analyze the results, and make configuration changes based on the analysis. After each set of changes, you retest and measure to verify that your application has moved closer to its performance objectives.
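The iterative nature of the Performance Test phase described above can be sketched in a few lines of Java. This is only an illustration under stated assumptions: the `Change` class, the `tune` method, the change names and the simulated response times are hypothetical, and the policy of reverting a change that does not improve the measurement is our own addition, not something prescribed by the process itself.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;
import java.util.function.DoubleSupplier;

public final class TuningLoopSketch {

    private TuningLoopSketch() {
    }

    /** One candidate configuration change, with a way to undo it (hypothetical abstraction). */
    public static final class Change {
        final String name;
        final Runnable apply;
        final Runnable revert;

        public Change(String name, Runnable apply, Runnable revert) {
            this.name = name;
            this.apply = apply;
            this.revert = revert;
        }
    }

    /** Applies changes one at a time, retesting after each, until the objective is met. */
    public static List<String> tune(DoubleSupplier measureMillis, List<Change> candidates,
                                    double objectiveMillis) {
        double best = measureMillis.getAsDouble();      // establish the baseline
        List<String> kept = new ArrayList<>();
        for (Change change : candidates) {
            if (best <= objectiveMillis) {
                break;                                  // performance objective reached
            }
            change.apply.run();                         // make one change
            double after = measureMillis.getAsDouble(); // retest and measure
            if (after < best) {
                best = after;                           // closer to the objective: keep it
                kept.add(change.name);
            } else {
                change.revert.run();                    // no improvement: undo it
            }
        }
        return kept;
    }

    public static void main(String[] args) {
        double[] simulatedMillis = {900.0}; // stand-in for a real measurement
        List<Change> candidates = Arrays.asList(
                new Change("bigger connection pool",
                        () -> simulatedMillis[0] -= 300, () -> simulatedMillis[0] += 300),
                new Change("oversized heap",
                        () -> simulatedMillis[0] += 50, () -> simulatedMillis[0] -= 50),
                new Change("query cache",
                        () -> simulatedMillis[0] -= 200, () -> simulatedMillis[0] += 200),
                new Change("extra threads",
                        () -> simulatedMillis[0] -= 100, () -> simulatedMillis[0] += 100));
        // prints [bigger connection pool, query cache]
        System.out.println(tune(() -> simulatedMillis[0], candidates, 500.0));
    }
}
```

Changing one thing at a time is the design choice that matters here: if several settings are modified between two measurements, you can no longer tell which of them moved the application closer to (or further from) its objective.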

The following image synthesizes the cyclic process of performance tuning: