Agile Analysis

Perhaps one of the most often stated reasons for not performing live response at all is an inability to locate the source of the issue in the plethora of data that has been collected. Many of the tools available for collecting volatile (and nonvolatile) data during live response collect a great deal of data, so much so that it may appear to be overwhelming to the investigator. In the example cases in this next topic, I didn’t have to collect a great deal of data to pin down the source of the issue. The data collection tools I used in the example cases take two simple facts into account: that malware needs to run to have any effect on a system, and that malware needs to be persistent to have any continuing effect on a system (i.e., malware authors ideally want their software to survive reboots and users logging in). We also use these basic precepts in our analysis to cull through the available data and locate the source of the issues. To perform rapid, agile analysis, we need to look to automation and data reduction techniques.

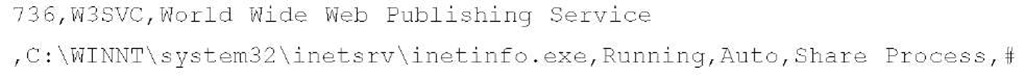

Although the example cases were simple and straightforward, they do illustrate a point. The methodology used to locate the suspicious process in each case is not too different from the methodology used to investigate the russiantopz (www.securityfocus.com/infocus/1618) bot in 2002. In fact, it’s akin to differential analysis (i.e., looking for the differences between two states). However, a big caveat to keep in mind, particularly if you’re performing live response as a law enforcement officer or consultant, is that in most cases, an original baseline of the system from prior to the incident will not be available, and you must rely on an understanding of the workings of the underlying operating system and applications for your "baseline." For instance, in the first example case, had there been only one instance of inetinfo.exe in the process information, and had I not known whether the infected system was running a Web server, I could have correlated what I knew (i.e., inetinfo.exe process running) to the output of the svc.exe tool, which in this instance appears as follows:

This correlation could be automated through the use of scripting tools, and if a legitimate service (such as shown earlier) is found to correlate to a legitimate process (inetinfo.exe with PID 736), we’ve just performed data reduction.

Note

The svc.exe tool used in the example cases collects information about services on the system, and displays the results in comma-separated value (.csv) format so that the results can be easily parsed, or opened in Excel for analysis. The column headers are the PID, service name, service display name, path to the executable image, service state, service start mode, service type, and whether (#) or not (*) the service has a description string. Many times, malware authors will fail to provide a description string for their service; a lack of this string would be a reason to investigate the service further.
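As a minimal sketch of how that missing-description check might be scripted, the following Python parses comma-separated service records in the column order described in this note and returns the rows marked with `*`. The field names, the sample rows, and the assumption that each data row begins with a numeric PID are illustrative only; they are not actual svc.exe output.

```python
import csv
import io

# Column order follows the note above; the field names are hypothetical labels.
FIELDS = ["pid", "name", "display_name", "image_path",
          "state", "start_mode", "type", "has_description"]


def services_without_description(csv_text):
    """Return service rows whose description marker is '*' (no description)."""
    reader = csv.DictReader(io.StringIO(csv_text), fieldnames=FIELDS,
                            skipinitialspace=True)
    flagged = []
    for row in reader:
        # Skip a header line or a malformed row (assumption: PIDs are numeric).
        if not (row["pid"] or "").strip().isdigit():
            continue
        if (row["has_description"] or "").strip() == "*":
            flagged.append(row)
    return flagged


# Made-up sample rows for illustration only.
SAMPLE = (
    "736,W3SVC,World Wide Web Publishing,"
    "C:\\WINNT\\system32\\inetsrv\\inetinfo.exe,Running,Auto,Share Process,#\n"
    "1204,updsvc,Update Helper,"
    "C:\\WINNT\\temp\\upd.exe,Running,Auto,Own Process,*\n"
)

for row in services_without_description(SAMPLE):
    print(row["pid"], row["name"], row["image_path"])
```

A service flagged this way is only a lead for further investigation; legitimate third-party services sometimes lack a description string as well.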

A rule of thumb that a knowledgeable investigator should keep in mind while analyzing volatile data is that the existence of an inetinfo.exe process without the corresponding presence of a running W3SVC "World Wide Web Publishing" service may indicate the presence of malware, or at least of a process that merits additional attention.

However, the investigator must also keep in mind that the inetinfo.exe process also supports the Microsoft File Transfer Protocol (FTP) service and the Simple Mail Transfer Protocol (SMTP) e-mail server, as illustrated in the output of the tlist -s command:

Simply put, a running inetinfo.exe process without the corresponding services also running could point to an issue. Again, this check can also be automated. For example, if the output of the FRUC tools were parsed and entered into a database, SQL statements could be used to extract and correlate information.
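The database idea can be sketched with Python's built-in sqlite3 module. The table layout, the "Running" state string, and the sample rows below are assumptions made for illustration; they do not reflect the actual FRUC output format. The query lists every process with the given image name that no running service accounts for.

```python
import sqlite3


def orphaned_processes(processes, services, image_name):
    """Return PIDs running image_name that no running service maps to.

    processes: iterable of (pid, image_name) tuples
    services:  iterable of (pid, service_name, state) tuples
    """
    db = sqlite3.connect(":memory:")
    db.execute("CREATE TABLE processes (pid INTEGER, image TEXT)")
    db.execute("CREATE TABLE services (pid INTEGER, name TEXT, state TEXT)")
    db.executemany("INSERT INTO processes VALUES (?, ?)", processes)
    db.executemany("INSERT INTO services VALUES (?, ?, ?)", services)
    rows = db.execute(
        """
        SELECT p.pid
        FROM processes AS p
        LEFT JOIN services AS s
               ON s.pid = p.pid AND s.state = 'Running'
        WHERE lower(p.image) = lower(?) AND s.pid IS NULL
        ORDER BY p.pid
        """,
        (image_name,),
    ).fetchall()
    db.close()
    return [pid for (pid,) in rows]


# Hypothetical data: two inetinfo.exe processes, only one backed by services.
PROCESSES = [(736, "inetinfo.exe"), (1892, "inetinfo.exe"), (1100, "svchost.exe")]
SERVICES = [(736, "W3SVC", "Running"), (736, "MSFTPSVC", "Running"),
            (0, "SMTPSVC", "Stopped")]

print(orphaned_processes(PROCESSES, SERVICES, "inetinfo.exe"))
```

Any PID returned here is an inetinfo.exe process with no corresponding Web, FTP, or SMTP service running, which is the condition the rule of thumb says deserves a closer look.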

Tip

During his presentation at the BlackHat DC 2007 conference, Kevin Mandia stated that a good number of the incidents his company responded to over the previous year illustrated a move by malware authors to maintain persistence in their software by having it install itself as a Windows service. My experience has shown this to be the case, as well. In fact, in a number of engagements, I have seen malware get on a system and create a service that then "shovels" a shell (i.e., command-line connection) off the system and off the network infrastructure to a remote system. When the "bad guy" connects on the other end, he then has System-level, albeit command-line, access to the compromised system.

This shows that with some knowledge and work, issues can be addressed in a quick and thorough manner, through the use of automation and data reduction. Automation is important, as incidents are generally characterized by stress and pressure, which just happen to be the conditions under which we’re most likely to make mistakes. Automation allows us to codify a process and be able to follow that same process over and over again. If we understand the artifacts and bits of volatile data that will provide us with a fairly complete picture of the state of the system, we can quickly collect and correlate the information, and determine the nature and scope of the incident. This leads to a more agile response, moving quickly, albeit in a thorough manner using a documented process. From here, additional volatile data can be collected, if necessary. Using this minimalist approach upfront reduces the amount of data that needs to be parsed and correlated, and leads to an overall better response.

With respect to analysis and automation, the "rules of thumb" used by an investigator to locate suspicious processes within the collected volatile data are largely based on experience and an understanding of the underlying operating system and applications.

Tip

Several years ago, a friend of mine would send me volatile data that he’d collected during various incidents. He used a series of tools and a batch file to collect his volatile data, and long after the case had been completed he would send me the raw data files, asking me to find out what was "wrong." With no access to the state and nature of the original system, I had to look for clues in the data he sent me. This is a great way to develop skills and even some of the necessary correlation tools.

Some of these rules can be codified into procedures and even scripts to make the analysis and data reduction process more efficient. One example of this is the svchost.exe process. Some malware authors make use of the fact that usually several copies of svchost.exe are running on Windows systems (my experience shows two copies running on Windows 2000, five on Windows XP SP2, and seven on Windows 2003) and use that name for their malware. We know the legitimate svchost.exe process follows a couple of simple rules, one of which is that the process always originates from an executable image located in the system32 directory. Therefore, we can write a Perl script that will run through the output of the tlist -c command and immediately flag any copies of an svchost.exe process that is not running from the system32 directory.
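The text describes a Perl script for this check; the sketch below shows the same rule in Python instead. It assumes the process listing has already been parsed into (PID, full image path) pairs, since the exact layout of the tlist -c output is not reproduced here, and the default system root and the sample paths are hypothetical.

```python
import ntpath  # Windows path rules, usable on any analysis platform


def suspicious_svchost(processes, system_root=r"C:\WINDOWS"):
    """Return (pid, path) pairs for svchost.exe images outside system32.

    processes: iterable of (pid, full_image_path) tuples
    """
    expected_dir = ntpath.normcase(ntpath.join(system_root, "system32"))
    flagged = []
    for pid, image_path in processes:
        normalized = ntpath.normcase(ntpath.normpath(image_path))
        directory, name = ntpath.split(normalized)
        if name == "svchost.exe" and directory != expected_dir:
            flagged.append((pid, image_path))
    return flagged


# Hypothetical process list for illustration.
PROCESSES = [
    (844, r"C:\WINDOWS\system32\svchost.exe"),
    (1500, r"C:\WINDOWS\System32\SVCHOST.EXE"),
    (1432, r"C:\WINDOWS\svchost.exe"),
    (600, r"C:\WINDOWS\system32\lsass.exe"),
]

for pid, path in suspicious_svchost(PROCESSES):
    print(f"PID {pid}: svchost.exe running from unexpected path {path}")
```

The comparison is case-insensitive because Windows paths are. This sketch checks only the image location; look-alike names (a digit substituted for a letter, for example) would need a separate rule.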

This is a variation on the artificial ignorance method of analysis in which you perform data reduction by removing everything you know to be "good," and what’s left is most likely the stuff you need to look at. (Artificial ignorance is a term coined by Marcus Ranum, who can be found at www.ranum.com.) I’ve used this approach quite effectively in a corporate environment, not only during incident response activities but also while performing scans of the network for spyware and other issues. What I did was create a Perl script that would reach out to the primary domain controller and get a list of all the workstations it "saw" on the network. Then, I’d connect to each workstation using domain administrator credentials, extract the contents of the Run key from each system, and save that information in a file on my system. The first time I ran this script, I had quite a few pages of data to sort through. So, I began investigating some of the things I found, and determined that many of them were legitimate applications and drivers. As such, I created a list of "known good" entries, and then when I scanned systems I would check the information I retrieved against this list, and write the information to my log file only if it wasn’t on the list. In fairly short order, I reduced my log file to about half a page.
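The "known good" filtering step described above might look like the following Python sketch. The host names, Run key entries, and allowlist contents are invented for illustration, and the collection step itself (querying the domain controller and reading each workstation's Registry) is left out.

```python
def unknown_run_entries(collected, known_good):
    """Return only the Run key entries that are not on the known-good list.

    collected:  dict mapping host name -> list of (value_name, command) pairs
    known_good: iterable of (value_name, command) pairs already vetted
    """
    known = {(name.lower(), command.lower()) for name, command in known_good}
    report = {}
    for host, entries in collected.items():
        leftovers = [(name, command) for name, command in entries
                     if (name.lower(), command.lower()) not in known]
        if leftovers:
            report[host] = leftovers
    return report


# Hypothetical allowlist and scan results.
KNOWN_GOOD = [
    ("SynTPEnh", r"C:\Program Files\Synaptics\SynTP\SynTPEnh.exe"),
]
COLLECTED = {
    "WKSTN01": [("SynTPEnh", r"C:\Program Files\Synaptics\SynTP\SynTPEnh.exe")],
    "WKSTN02": [("SynTPEnh", r"c:\program files\synaptics\syntp\syntpenh.exe"),
                ("updater", r"C:\WINDOWS\temp\upd.exe")],
}

for host, entries in unknown_run_entries(COLLECTED, KNOWN_GOOD).items():
    for name, command in entries:
        print(f"{host}: {name} -> {command}")
```

Matching on both the value name and the command, rather than the name alone, is a deliberate choice in this sketch: an entry that reuses a trusted name but points to a different executable still ends up in the log.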

This is one approach you can use to quickly analyze the volatile data you’ve collected. However, the key to agile analysis and a rapid response is to reduce the amount of data you actually need to investigate. This may mean putting the data you have collected into a more manageable form, or it may mean weeding out artifacts that you know to be "good."

Expanding the Scope

What happens when things get a little more complicated than the scenarios we’ve looked at? We see security experts in the media all the time, saying that cybercrime is becoming increasingly sophisticated (and it is). So, how do we deal with more complicated incidents? After all, not all processes involved in an incident may be as long-lived as the ones illustrated in my example scenarios. For instance, a downloader may be on a system through a Web browser vulnerability, and once it has downloaded its designated target software, it has completed its purpose and is no longer active. Therefore, information about that process, to include network connections used by the process, will no longer be available.

Not too long ago, I dealt with an incident involving an encrypted executable that was not identified by more than two dozen antivirus scanning engines. We also had considerable trouble addressing the issue, as there was no running process with the same name as the mysterious file on any of the affected systems that we looked at. Dynamic analysis of the malware showed that the malware injected itself into the Internet Explorer process space and terminated. This bit of information accounted for the fact that we were not able to find a running process using the same name as the mysterious file, and that all of our investigative efforts were leading us back to Internet Explorer (iexplore.exe) as the culprit. We corroborated our findings with the fact that on all of the systems from which we’d collected volatile data, not one had Internet Explorer running on the desktop. So, here was the iexplore.exe process, live and running, spewing traffic out onto the network and to the Internet, but there was no browser window open on the desktop.

The interesting thing about this particular engagement wasn’t so much the code injection technique used, or the fact that the mysterious executable file we’d found appeared to be unidentifiable by multiple antivirus engines. Rather, I thought the most interesting aspect of all this was that the issue was surprisingly close to a proof of concept worm called "Setiri" that was presented by a couple of SensePost (www.sensepost.com/research_conferences.html) researchers at the BlackHat conference in Las Vegas in 2002. Setiri operated by accessing Internet Explorer as a Component Object Model (COM) server, and generating traffic through Internet Explorer. Interestingly enough, Dave Roth wrote a Perl script (www.roth.net/perl/scripts) called IEEvents.pl that, with some minor modifications, will launch Internet Explorer invisibly (i.e., no visible window on the desktop) and retrieve Web pages and such.

What’s the point of all this? Well, I just wanted to point out how sophisticated some incidents can be. Getting a backdoor on a system through a downloader, which itself is first dropped on a system via a Web browser vulnerability, isn’t particularly sophisticated (although it is just as effective) when compared with having code injected into a process’s memory space.

If someone compromises a Windows system from the network, you may expect to find artifacts of a login (in the Security Event Log, or in an update of the last login time for that user), open files on the system, and even processes that have been launched by that user. If the attacker is not using the Microsoft login mechanisms (Remote Desktop, "net use" command, etc.), and is instead accessing the system via a backdoor, you can expect to see the running process, open handles, network connections, and so forth.

With some understanding of the nature of the incident, you can effectively target live-response activities to address the issue, not only from a data collection perspective but also from a data correlation and analysis perspective.

Reaction

Many times, the question that comes up immediately following the confirmation of an incident is "What do we do now?" I hate to say it, but that really depends on your infrastructure. For instance, in the example cases in this topic, you saw "incidents" in which the offending process was running under the Administrator account. Now, this was a result of the setup for the case, but it is not unusual when responding to an incident to find a process running within the Administrator or even the System user context. Much of the prevailing wisdom in cases such as this is that you can no longer trust anything the system is telling you (i.e., you cannot trust that the output of the tools you’re using to collect information is giving you an accurate view of the system) and that the only acceptable reaction is to wipe the system clean and start over, reinstalling the operating system from clean media (i.e., the original installation media) and reloading all data from backups.

To me, this seems like an awful lot of trouble to go through, particularly when it’s likely that you’re going to have to do it all over again fairly soon. You’re probably thinking to yourself, "What??" Well, let’s say you locate a suspicious process, and using tools such as pslist.exe you see that the process hasn’t been running for very long in relation to the overall uptime of the system itself. This tells you that the process started sometime after the system was booted. For example, as I’m sitting here writing this topic, my system has been running for more than eight hours. I can see this in the "Elapsed Time" column, on the far right in the output of pslist.exe, as illustrated here:

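| Name | Pid | Pri | Thd | Hnd | Priv | CPU Time | Elapsed Time |
| --- | --- | --- | --- | --- | --- | --- | --- |
| smss | 1024 | 11 | 3 | 21 | 168 | 0:00:00.062 | 8:28:38.109 |
| csrss | 1072 | 13 | 13 | 555 | 1776 | 0:00:26.203 | 8:28:36.546 |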

However, I have other processes that were started well after the system was booted:

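| Name | Pid | Pri | Thd | Hnd | Priv | CPU Time | Elapsed Time |
| --- | --- | --- | --- | --- | --- | --- | --- |
| uedit32 | 940 | 8 | 1 | 88 | 4888 | 0:00:03.703 | 4:07:25.296 |
| cmd | 3232 | 8 | 1 | 32 | 2008 | 0:00:00.046 | 3:26:46.640 |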

Although the modified, accessed, and created (MAC) times on files written to the hard drive can be modified to mislead an investigator, the amount of time a process has been running is harder to fake. With this information, the investigator can develop a timeline of when the incident may have occurred, and determine the overall extent of the incident (similar to the approach used in the example cases earlier). The goal is to determine the root cause of the incident so that whatever issue led to the compromise can be rectified, and subsequently corrected on other systems, as well. If this is not done, putting a cleanly loaded system back on the network will likely result in the system being compromised all over again. If systems need to be patched, patches can be rolled out. However, if the root cause of the incident is really a weak Administrator password, no amount of patching will correct that issue. The same is true of application configuration vulnerabilities, such as those exploited by network worms.
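The elapsed-time comparison from the pslist.exe output above can be sketched in Python as follows. The sketch assumes the "Elapsed Time" values have already been extracted as strings in hours:minutes:seconds form, and it approximates system uptime with the elapsed time of the longest-running process (smss here); the five-minute slack value is an arbitrary choice for illustration.

```python
from datetime import timedelta


def parse_elapsed(text):
    """Convert an 'H:MM:SS.mmm' elapsed-time string into a timedelta."""
    hours, minutes, seconds = text.strip().split(":")
    return timedelta(hours=int(hours), minutes=int(minutes),
                     seconds=float(seconds))


def started_after_boot(processes, uptime, slack=timedelta(minutes=5)):
    """Return (name, delay) for processes started well after boot.

    processes: iterable of (name, elapsed_time_string) pairs
    uptime:    elapsed-time string standing in for system uptime
    slack:     how long after boot a start still counts as "at boot"
    """
    boot_elapsed = parse_elapsed(uptime)
    late = []
    for name, elapsed in processes:
        delay = boot_elapsed - parse_elapsed(elapsed)
        if delay > slack:
            late.append((name, delay))
    return late


# Elapsed times taken from the pslist.exe output shown earlier.
PROCESSES = [
    ("smss", "8:28:38.109"),
    ("csrss", "8:28:36.546"),
    ("uedit32", "4:07:25.296"),
    ("cmd", "3:26:46.640"),
]

for name, delay in started_after_boot(PROCESSES, uptime="8:28:38.109"):
    print(f"{name} started about {delay} after boot")
```

Subtracting each delay from the collection time gives approximate start times that can be placed on the incident timeline alongside log entries and file times.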

Now, let’s consider another case, in which the suspicious process is found to be a service, and the output of pslist.exe shows us that the process has been running for about the same amount of time as the system itself. Well, as there do not appear to be any Windows APIs that allow an attacker to modify the LastWrite times on Registry keys (MAC times on files can be easily modified through the use of publicly documented APIs), an investigator can extract that information from a live system and determine when the service was installed on the system. A knowledgeable investigator knows that to install a Windows service, the user context must be that of an Administrator, so checking user logins and user activity on the system may lead to the root cause of the incident.
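As a rough illustration of extracting that LastWrite time on a live system, the Python sketch below uses the standard winreg module, whose `QueryInfoKey` call returns the key's last-modified time as a count of 100-nanosecond intervals since January 1, 1601 (UTC). The helper names are hypothetical, and the Registry lookup runs only on Windows; the conversion function is platform independent.

```python
from datetime import datetime, timedelta, timezone

# Windows FILETIME values count 100-nanosecond ticks from this date (UTC).
FILETIME_EPOCH = datetime(1601, 1, 1, tzinfo=timezone.utc)


def filetime_to_datetime(filetime):
    """Convert a FILETIME tick count into a timezone-aware UTC datetime."""
    return FILETIME_EPOCH + timedelta(microseconds=filetime // 10)


def service_key_last_write(service_name):
    """Return the LastWrite time of a service's Registry key (Windows only)."""
    import winreg  # imported here so the module still loads on other systems

    path = "SYSTEM\\CurrentControlSet\\Services\\" + service_name
    with winreg.OpenKey(winreg.HKEY_LOCAL_MACHINE, path) as key:
        _subkeys, _values, last_modified = winreg.QueryInfoKey(key)
    return filetime_to_datetime(last_modified)


# Conversion example with a well-known value: the Unix epoch as a FILETIME.
print(filetime_to_datetime(116444736000000000))
```

Keep in mind that the LastWrite time reflects the most recent change to the key, so it marks the installation time only if the key has not been modified since the service was created.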

Again, it is important to determine the root cause of an incident so that the situation can be fixed, not only on the compromised system but on other systems as well.

Warning

Microsoft Knowledge Base article Q328691 (http://support.microsoft.com/default.aspx?scid=kb;en-us;328691), "MIRC Trojan-related attack detection and repair," contains this statement in the Attack Vectors section: "Analysis to date indicates that the attackers appear to have gained entry to the systems by using weak or blank administrator passwords. Microsoft has no evidence to suggest that any heretofore unknown security vulnerabilities have been used in the attacks." Simply reinstalling the operating system, applications, and data on affected systems would lead to their compromise all over again, as long as the same configuration settings were used. In corporate environments, communal Administrator accounts with easy-to-remember (i.e., weak) passwords are often used, and a reinstalled system would most likely use the same account name and password as it did prior to the incident.

Determining that root cause may seem like an impossible task, but with the right knowledge and right skill sets, and a copy of this topic in your hand, that job should be much easier!

Prevention

One thing that IT departments can do to make the job of responding to incidents easier (keeping in mind that first responders are usually members of the IT staff) is to go beyond simply installing the operating system and applications, and make use of system hardening guides and configuration management procedures. For example, by limiting the running services and processes on a server to only those that are necessary for the operation of the system itself, you limit the attack surface of the system. Then, for the services you do run, configure them as securely as possible. If you have an IIS Web server running, that system may be a Web server, but is it also an FTP server? If you don’t need the FTP server running, disable it, remove it, or don’t even install it in the first place. Then, configure your Web server to use only the necessary script mappings (IIS Web servers with the .ida script mapping removed were not susceptible to the Code Red worm in 2001), and you may even want to install the UrlScan tool (http://technet.microsoft.com/en-us/security/cc242650.aspx).

Tip

The UrlScan tool, available from Microsoft, supports IIS Version 6.0, and Nazim’s IIS Security Blog (http://blogs.iis.net/nazim/default.aspx) mentions that UrlScan 3.0 is available for use in protecting the IIS Web server against some of the more recent SQL injection attacks.

This same sort of minimalist approach applies to setting up users on a system, as well. Only the necessary users should have the appropriate level of access to a system. If a user does not need access to a system, either to log in from the console or to access the system remotely from the network, he should not have an account on that system. I have responded to several instances in which old user accounts with weak passwords were left on systems and intruders were able to gain access to the systems through those accounts. In another instance, a compromised system showed logins via a user’s account during times that it was known that the person who was assigned that account was on an airplane 33,000 feet over the Midwestern United States. However, that user rarely used his account to access the system in question, and the account was left unattended.

By reducing the attack surface of a system, you can make it difficult (maybe even really difficult) for someone to gain access to that system, to either compromise data on the system or use that system as a steppingstone from which to launch further attacks. The attacker may then either generate a great deal of "noise" on a system, in the form of log entries and error messages, making the attempts more "visible" to administrators, or simply give up because compromising the system wasn’t an "easy win." Either way, I’d rather deal with a couple of megabytes’ worth of log files showing failed attempts (as when the Nimda worm was prevalent; see www.cert.org/advisories/CA-2001-26.html) than a system that was compromised repeatedly due to a lack of any sort of hardening or monitoring. At least if some steps have been taken to limit the attack surface and the level to which the system can be compromised, an investigator will have more data to work with, in log files and other forms of data.

Summary

Once you’ve collected volatile data during live response, the next step is to analyze that data and provide an effective and timely response. Many times, investigators may be overwhelmed with the sheer volume of volatile data they need to go through, and this can be more overwhelming if they’re unsure what they’re looking for. Starting with some idea of the nature of the incident, the investigator can begin to reduce the amount of data by looking for and parsing out "known good" processes, network connections, active users, and so forth. She can also automate some of the data correlation, further reducing the overall amount of data, and reducing the number of mistakes that may be made.

All of these things will lead to timely, accurate, and effective response to incidents.

Solutions Fast Track

Data Analysis

- Live response is generally characterized by stress, pressure, and confusion. Investigators can use data reduction and automation techniques to provide effective response.

- Once data has been collected and analyzed, the final response to the incident can be based upon nontechnical factors, such as the business or political infrastructure of the environment.

- Performing a root-cause analysis when faced with an incident can go a long way toward saving both time and money down the road.

- Taking a minimalist approach to system configuration can often serve to hamper or even inhibit an incident altogether. At the very least, making a system more difficult to compromise will generate "noise" and possibly even alerts during the attempts.

Frequently Asked Questions

Q: What is the difference between a "process" and a "service"?

A: From the perspective of live response, there isn’t a great deal of difference between the two, except in how each is started or launched, and the user context under which each runs. Windows services are actually processes, and can be started automatically when the system starts. When a process is started as a service, it most often runs with System-level privileges, whereas processes started automatically via a user’s Registry hive will run in that user’s context.

Q: I’m seeing some intermittent and unusual traffic in my firewall logs. The traffic seems to be originating from a system on my network and going out to an unusual system. When I see the traffic, I go to the system and collect volatile data, but I don’t see any active network connections, or any active processes using the source port I found in the traffic. I then see the traffic again six hours later. What can I do?

A: In the fruc.ini file used in the example cases in this topic, I used autorunsc.exe from Microsoft/Sysinternals to collect information about autostart locations. Be sure to check for scheduled tasks, as well as any unusual processes that may be launching a child process to generate the traffic.

Q: I have an incident that I’m trying to investigate, but I can’t seem to find any indication of the incident on the system.

A: Many times, what appears to be "unusual" or "suspicious" behavior on a Windows system is born of a lack of familiarity with the system rather than an actual incident. I have seen responders question the existence of certain files and directories (Prefetch, etc.) for no other reason than the fact that they aren’t familiar with the system. In fact, I remember one case where an administrator deleted all the files with .pf extensions that he found in the C:\Windows\Prefetch directory. A couple of days later, many of those files had mysteriously returned, and he felt the system had been compromised by a Trojan or backdoor.