Introduction

Now that you’ve collected volatile data from a system, the question becomes "How do I ‘hear’ what it has to say?" or "How do I figure out what the data is telling me?" Once you’ve collected a process listing, how do you determine which process, if any, is malware? How do you tell whether someone has compromised the system and is currently accessing it? Finally, how can you use the volatile data you’ve collected to build a better picture of activity on the system, particularly as you acquire an image and perform postmortem analysis?

The purpose of this topic is to address these sorts of questions. What you’re looking for—what artifacts you will be digging for in the volatile data you’ve collected—depends heavily on the issue you are attempting to address. How do you dig through reams of data to find what you’re looking for? In this topic, I do not think for a moment that I will be able to answer all of your questions; rather, my hope is to provide enough data and examples so that when something occurs that I have not covered, you will have a process by which you can determine the answer on your own. Perhaps by the time you reach the end of this topic, you will have a better understanding of why you collect volatile data, and what it can tell you.

Data Analysis

A number of sources of information tell you what data you should collect from a live system to troubleshoot an errant application or assess an incident. Take a look on the Web at sites such as the e-Evidence Info site (www.e-evidence.info), which is updated monthly with new links to conference presentations, papers, and articles that discuss a wide range of topics, including volatile data collection. Although many of these resources refer to data collection, few actually address the issue of data correlation and analysis. We will be addressing these issues in this topic.

To begin, you need to look to the output of the tools, to the data you’ve collected, to see what sort of snapshot of data is available to you. When you use tools such as those discussed in the previous topic, you are getting a snapshot of the state of a system at a point in time. Many times, you can quickly locate an indicator of the issue within the output from a single tool. For example, you may see something unusual in the Task Manager graphical user interface (GUI) or in the output of tlist.exe (such as an unusual executable image file path or command line). For an investigator who is familiar with Windows systems and what default or "normal" processes look like from this perspective, these indicators may be fairly obvious and may jump out immediately.

Tip::

Microsoft provides some information regarding default processes on Windows 2000 systems in Knowledge Base article Q263201 (http://support.microsoft.com/kb/263201/en-us).

However, many investigators and even system administrators are not familiar enough with Windows systems to recognize default or "normal" processes at a glance. This is especially true when you consider that the Windows version (e.g., Windows 2000, XP, 2003, or Vista) has a great deal to do with what is "normal." For example, default processes on Windows 2000 are different from those on Windows XP, and that is just for a clean, default installation, without additional applications added. Also consider that different hardware configurations often require additional drivers and applications. The list of variations can go on, but the important point to keep in mind is that what constitutes a "normal" or legitimate process can depend on a lot of different factors, so you need to have a process for examining your available data and determining the source of the issue you’re investigating. This is important, as having a process means you have steps that you can follow, and if something needs to be added or modified, you can do so easily. Without a process, how do you determine what went wrong, and what you can do to improve it? If you don’t know what you did, how do you fix it?

Perhaps the best way to get started is to dive right in. When correlating and analyzing volatile data, it helps to have an idea of what you’re looking for. One of the biggest issues that some information technology (IT) administrators and responders face when an incident occurs is tracking down the source of the incident based on the information they have available. One example is when an alert appears in the network-based intrusion detection system (NIDS) or an odd entry appears in the firewall logs. Many times, this may be the result of a malware (e.g., worm) infection. Usually, the alert or log entry will contain information such as the source Internet Protocol (IP) address and port, as well as the destination IP address and port. The source IP address identifies the system from which the traffic originated, and as you saw in the previous topic, if you have the source port of the network traffic, you can use that information to determine the application that sent the traffic and identify the malware.
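The source-port-to-process lookup described above can be sketched in Python. This is a minimal sketch, assuming you have saved the English-locale text output of `netstat -ano` from the suspect system (the `-o` switch, which adds the PID column, is not available in Windows 2000's netstat; there you would rely on a tool such as tcpvcon.exe instead). The function name `pids_for_local_port` and the sample output are hypothetical.

```python
import re

# One TCP/UDP row of Windows "netstat -ano" text output. UDP rows have no
# State column, so that group is optional.
ROW = re.compile(
    r"^\s*(?P<proto>TCP|UDP)\s+(?P<local>\S+)\s+(?P<remote>\S+)\s+"
    r"(?:(?P<state>[A-Z_]+)\s+)?(?P<pid>\d+)\s*$"
)


def pids_for_local_port(netstat_text, port, proto="TCP"):
    """Return the set of PIDs whose local endpoint uses `port`."""
    pids = set()
    for line in netstat_text.splitlines():
        m = ROW.match(line)
        if not m or m.group("proto") != proto:
            continue  # header, blank line, or other protocol
        # rsplit keeps IPv6 forms such as [::]:80 working
        local_port = m.group("local").rsplit(":", 1)[-1]
        if local_port == str(port):
            pids.add(int(m.group("pid")))
    return pids


# Hypothetical sample output; addresses and PIDs are made up.
SAMPLE = """
Active Connections

  Proto  Local Address          Foreign Address        State           PID
  TCP    0.0.0.0:80             0.0.0.0:0              LISTENING       1320
  TCP    192.168.1.10:1072      10.1.1.5:6667          ESTABLISHED     2044
  UDP    0.0.0.0:445            *:*                                    4
"""

# Source port 1072 taken from a (hypothetical) firewall log entry
print(pids_for_local_port(SAMPLE, 1072))  # {2044}
```

Keep the Warning that follows in mind: this only helps while the process that generated the traffic is still running.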

Warning::

Keep in mind that for traffic to appear on the network, some process someplace has to have generated it. However, some processes are short-lived (e.g., a downloader that grabs and installs another file, such as a Trojan, and then terminates), and attempting to locate a process based on traffic seen in firewall logs four hours ago (and not once since then) can be frustrating. If the traffic appears on a regular basis, be sure to check all possibilities. This includes examining the source IP address of the traffic to locate the system transmitting it, and even installing a network "sniffer" to capture and examine the network traffic to determine whether it is "spoofed."

Another important point is that malware authors will often attempt to hide the presence of their applications on a system by using a familiar name, or a name similar to a legitimate file that an administrator may recognize. If the investigator searches the Web for the name, the search will return information indicating that the file is innocuous or is a legitimate file used by the operating system.

Warning::

While responding to a worm outbreak on a corporate network, I determined that part of the infection was installed on the system as a Windows service that ran from an executable image file named alg.exe. Searching for information on this filename, the administrators had determined that this was a legitimate application called the "Application Layer Gateway Service." This service appears in the Registry under the CurrentControlSet\Services key, in the ALG subkey, and points to %SystemRoot%\system32\alg.exe as its executable image file. However, the service I found was located within the "Application Layer Gateway Service" subkey (first hint: the subkey name is incorrect) and pointed to %SystemRoot%\alg.exe. Be very careful when searching for filenames, as even the best of us can be tripped up by the information that is returned via such a search. I’ve seen seasoned malware analysts make the mistake of determining the nature of a file using nothing more than the filename.
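The alg.exe trap above comes down to comparing what you found against what a clean system looks like, rather than trusting a filename. The sketch below assumes you have already exported each service's subkey name and ImagePath value from the CurrentControlSet\Services key into a dictionary; the baseline dictionary, the function name, and the sample values are hypothetical, and real ImagePath values may carry quotes or arguments that you would need to strip first.

```python
import ntpath

# Hypothetical baseline taken from a known-clean system:
# service subkey name -> expected ImagePath
BASELINE = {
    "ALG": r"%SystemRoot%\system32\alg.exe",
}


def suspicious_services(observed, baseline):
    """Flag services that reuse a baseline image file name but differ from
    the baseline in subkey name or full path (case-insensitive comparison)."""
    by_file = {
        ntpath.basename(path).lower(): (key, path) for key, path in baseline.items()
    }
    hits = []
    for key, path in observed.items():
        known = by_file.get(ntpath.basename(path).lower())
        if known is None:
            continue  # file name not in the baseline; needs other checks
        good_key, good_path = known
        if key.lower() != good_key.lower() or path.lower() != good_path.lower():
            hits.append((key, path))
    return hits


# Observed values modeled on the worm case described above
observed = {
    "ALG": r"%SystemRoot%\system32\alg.exe",
    "Application Layer Gateway Service": r"%SystemRoot%\alg.exe",
}
print(suspicious_services(observed, BASELINE))
# [('Application Layer Gateway Service', '%SystemRoot%\\alg.exe')]
```

Both the subkey name and the path are checked, since either one alone was enough to give the rogue service away in this case.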

To make all of this a little clearer, let’s take a look at some examples.

Example 1

A scenario that is seen time and time again is one in which the administrator or helpdesk is informed of unusual or suspicious activity on a system. It may be unusual activity reported by a user, or a server administrator may find some unusual files on a Web server, only to be told when she attempts to delete them that they cannot be deleted because they are in use by another process.

In such incidents, the first responder will be faced with a system that cannot be taken down for a detailed postmortem investigation (due to time and/or business constraints), and a quick (albeit thorough) response is required. Very often, this can be accomplished through live response, in which information regarding the current state of the system is quickly collected and analyzed, with an understanding that enough information must be collected to provide as complete a picture of the system state as possible. When information is collected from a live system, although the process of collecting that information can be replicated, the information itself generally cannot be duplicated, as a live system is always in a state of change.

Whenever something happens on a system, it is the result of some process that is running on that system. Although this statement may appear to be "intuitively obvious to the most casual observer" (a statement one of my graduate school professors used to offer up several times during a class, most often in the presence of a sixth-order differential equation), often this fact is missed during the stress and pressure of responding to an incident. However, the simple truth is that for something to happen on a system, a process or thread of execution must be involved in some way.

Tip::

In his "Exploiting the Rootkit Paradox with Windows Memory Analysis" paper, Jesse Kornblum points out that rootkits, like most malware, need to run or execute. Understanding this is the key to live response.

So, how does a responder go about locating a suspicious process on a system? The answer is through live-response data collection and analysis. And believe me, I have been in the position where a client presents me with a hard drive from a system (or an acquired image of a hard drive) and asks me to tell them what processes were running on the system. The fact of the matter is that to show what was happening on a live system, you must have information collected from that system while it was running. Using tools discussed in the previous topic, you can collect information about the state of the system at a point in time, capturing a snapshot of that state. As the information that you’re collecting exists in volatile memory, once you shut the system down, that information no longer exists.

In this scenario, I have a Windows 2000 system that has been behaving oddly. The system is an intranet Web server running Internet Information Server (IIS) Version 5.0, and users who have attempted to access pages on the server have reported that they are unable to retrieve any information at all, and are seeing only blank pages in their browser. This is odd, as one would expect to see at least an error message.

I then pick up my First Responder CD, which contains my tools and a copy of the First Responder Utility, and search for the affected system. In such incidents, I initially take a minimalist approach; I like to minimize my impact on the system (remember Locard’s Exchange Principle) and optimize my efforts and response time. To that end, over time I have developed a minimal set of state information that I would need to extract from a live system to get a view that is comprehensive enough for me to locate potentially suspicious activity.

Tip::

The media that accompanies this topic contains desktop capture videos that demonstrate the use of the components of the Forensic Server Project (FSP).

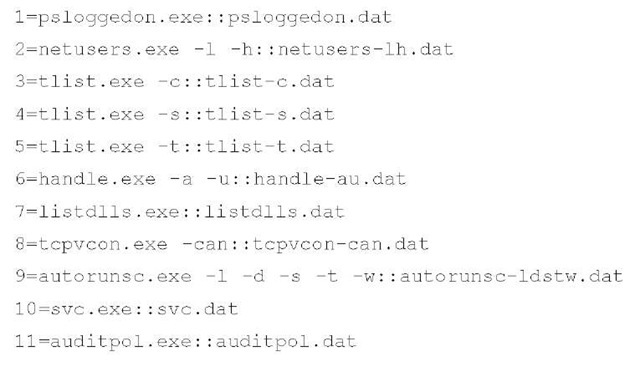

I have also identified a set of tools that I can use to extract that information (the fruc.ini file used with the First Responder Utility [FRU] in this scenario is included in the ch2\samples directory on the media that accompanies this topic). The [Commands] section of the fruc.ini file contains the following entries:

Each command is run in order, and from this list you can see commands for collecting information about logged-on users (both local and remote) as well as logon history, autostart locations, processes, network connections and open ports, services, and the audit policy on the system. This set of commands will not only provide a comprehensive view of the state of the system at a snapshot in time, but also collect data that may help direct analysis and follow-on investigative efforts.

Tip::

As you saw in the previous topic, you can run the FRU against multiple configuration (i.e., INI) files. In issues involving a potential violation of corporate acceptable use policies (employees misusing IT systems), you may want to have additional INI files that collect, for instance, the contents of the Clipboard or Protected Storage information.

Approaching the affected Windows 2000 system, I place the FRU CD into the CD-ROM drive, launch a command prompt, and type in the following command:

Note::

Whenever you’re performing live incident response, I highly recommend that you collect the complete contents of physical memory (a.k.a. RAM) before performing any other activities. This allows you to acquire the contents of RAM in as pristine a state as possible, before introducing additional changes to the system state. However, memory acquisition goes beyond the scope of this topic.

Within seconds, all of the volatile data that I want to collect from the system is extracted and safely stored on my forensic workstation for analysis.

Tip::

You can find the data I collected during this scenario in the ch2\samples directory on the media accompanying this topic, in the archive named testcase1.zip.

Once back at the forensic workstation, I see that, as expected, the testcase1 directory contains 16 files. One of the benefits of the FSP is that it is self-documenting; the fruc.ini file contains the list of tools and command lines used to launch those tools when collecting volatile data. As this file and the tools themselves are on a CD, they cannot be modified, so as long as I maintain that CD, I will have immutable information about what tools (the version of each tool, etc.) I ran on the system, and the options used to run those tools. One of the files in the testcase1 directory is the case.log file, which maintains a list of the data sent to the server by the FRU and the MD5 and SHA-1 hashes for the files to which the data was saved. I also see the case.hash file, which contains the MD5 and SHA-1 hashes of the case.log file after it was closed.
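Because case.log records MD5 and SHA-1 hashes for each saved file, you can re-verify the collected data at any point during analysis. The following is a minimal sketch using Python's hashlib module. It does not parse case.log (its exact layout is not shown here), so the expected hashes are assumed to be supplied by you, and the function names `md5_sha1` and `verify` are hypothetical.

```python
import hashlib
import os
import tempfile


def md5_sha1(path, chunk_size=65536):
    """Return (md5_hex, sha1_hex) for the file at `path`, read in chunks
    so large output files do not need to fit in memory."""
    md5, sha1 = hashlib.md5(), hashlib.sha1()
    with open(path, "rb") as f:
        for chunk in iter(lambda: f.read(chunk_size), b""):
            md5.update(chunk)
            sha1.update(chunk)
    return md5.hexdigest(), sha1.hexdigest()


def verify(path, expected_md5, expected_sha1):
    """True only if both recomputed hashes match the recorded ones."""
    md5_hex, sha1_hex = md5_sha1(path)
    return md5_hex == expected_md5.lower() and sha1_hex == expected_sha1.lower()


# Demo: hash a throwaway file, then verify it against the values just computed
with tempfile.NamedTemporaryFile(delete=False) as tmp:
    tmp.write(b"abc")
demo_md5, demo_sha1 = md5_sha1(tmp.name)
demo_ok = verify(tmp.name, demo_md5.upper(), demo_sha1)
os.remove(tmp.name)
print(demo_md5, demo_sha1, demo_ok)
```

Computing both hashes in a single pass mirrors what case.log records and avoids reading each file twice.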

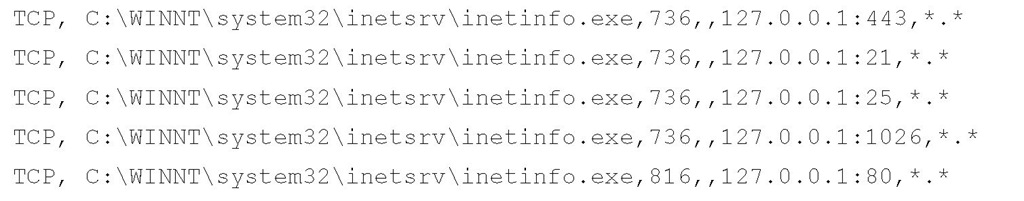

Needing more information on this, and noting that the command line for PID 816 appears to have bound the process to port 80 (which would account for the unusual behavior reported by users), I then open the file containing the output of the eighth command run from the fruc.ini file (i.e., tcpvcon.exe -can) to take a look:

Normally, I would expect to see PID 736 bound to port 80, but in this instance, PID 816 is bound to that port instead.
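Comparing the observed port owner against what the server should be running can also be scripted. This sketch assumes you have already turned the port-to-process output (from tcpvcon.exe or a similar tool) into (port, PID, image name) tuples; the baseline, the function name, and the sample entries are hypothetical. It keys on the image name rather than the PID because PIDs change every time a process starts, so an expected PID such as 736 only means something for that particular boot.

```python
# Hypothetical baseline for this server: port -> image name expected to own it.
# inetinfo.exe is the IIS 5.0 process that normally listens on port 80.
EXPECTED_OWNERS = {80: "inetinfo.exe"}


def unexpected_listeners(listeners, expected):
    """listeners: iterable of (port, pid, image_name) tuples. Return the
    entries on baseline ports whose owning image differs from the baseline."""
    return [
        (port, pid, name)
        for port, pid, name in listeners
        if port in expected and name.lower() != expected[port].lower()
    ]


# Hypothetical observations; "rogue.exe" is a placeholder name.
listeners = [(80, 816, "rogue.exe"), (443, 736, "inetinfo.exe")]
print(unexpected_listeners(listeners, EXPECTED_OWNERS))  # [(80, 816, 'rogue.exe')]
```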

As you can see, I’ve identified PID 816 as a suspicious process, and it appears that this process would account for the unusual activity that was reported. Checking the output of the other commands, I don’t see any unusual services running, or any references to the process in autostart locations. The output of the handle.exe utility shows that the process is running under the Administrator account, but no files appear to be open. Also, the output of the tcpvcon.exe -can command shows that there are no current connections to port 80 on that system. At this point, I’ve identified the issue, and now need to determine how this bit of software got on the system and how it ended up running as a process.

Example 2

Another popular scenario seen in network environments is unusual traffic that originates from a system appearing in intrusion detection system (IDS) or firewall logs. Most often, an administrator sees something unusual or suspicious, such as traffic leaving the network that is not what is normally seen. Examples of this often include IRCbot and worm infections. Generally, an IRCbot will infect a system, perhaps as the result of the user surfing to a Web page that contains some code that exploits a vulnerability in the Web browser. The first thing that generally happens is that an initial downloader application is deposited on the system, which then reaches out to another Web site to download and install the actual IRCbot code itself. From there, the IRCbot accesses a channel on an Internet Relay Chat (IRC) server and awaits commands from the botmaster.

Warning::

IRCbots have been a huge issue for quite a while, as entire armies of bots, or "botnets," have been found to be involved in a number of cybercrimes. In the February 19, 2006 issue of the Washington Post Magazine, Brian Krebs presented the story of botmaster 0x80 to the world (you can find the article at www.washingtonpost.com/wp-dyn/content/article/2006/02/14/AR2006021401342.html). His story clearly showed the ease with which botnets are developed and how they can be used. Just a few months later, Robert Lemos’s SecurityFocus article (www.securityfocus.com/news/11390) warned us that IRCbots seem to be moving from a client/server framework to a peer-to-peer framework, making them much harder to shut down.

In the case of worm infections, once a worm infects a system, it will try to reach out and infect other systems. Worms generally do this by scanning IP addresses, looking for the same vulnerability (many worms today attempt to use several different vulnerabilities to infect systems) that they used to infect the current host. Some worms are pretty virulent in their scanning; the SQL Slammer worm (www.cert.org/advisories/CA-2003-04.html) ran amok on the Internet in January 2003, generating so much traffic that servers and even ATMs across the Internet were subject to massive denial of service (DoS) attacks.
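A scanning worm like this tends to show up in firewall or IDS logs as one source address contacting many different destination addresses on the same port. This is a minimal sketch of that heuristic, assuming the log has already been reduced to (source IP, destination IP, destination port) tuples; the function name, the threshold, and the sample flows are hypothetical and would need tuning for a real network.

```python
from collections import defaultdict


def likely_scanners(flows, threshold):
    """Return {(src_ip, dst_port): distinct_host_count} for every source that
    reached at least `threshold` distinct hosts on a single destination port."""
    targets = defaultdict(set)
    for src, dst, dport in flows:
        targets[(src, dport)].add(dst)
    return {
        key: len(hosts) for key, hosts in targets.items() if len(hosts) >= threshold
    }


# Hypothetical flows: 10.0.0.5 sweeps 50 hosts on UDP 1434 (the port SQL
# Slammer targeted), while 10.0.0.7 talks to one server repeatedly.
flows = [("10.0.0.5", f"10.0.1.{i}", 1434) for i in range(1, 51)]
flows += [("10.0.0.7", "10.0.2.20", 80)] * 30

print(likely_scanners(flows, threshold=20))  # {('10.0.0.5', 1434): 50}
```

Counting distinct destinations rather than raw connections keeps a chatty but legitimate client (10.0.0.7 here) from being flagged.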

The mention of DoS attacks brings another important aspect of this scenario to mind. Sometimes IT administrators are informed by an external party that they may have infected systems. In such cases, usually the owner of a system that is being scanned by a worm or is under a DoS attack will see the originating IP address of the traffic in captures of the traffic, do some research regarding the owner of that IP address (usually it’s a range and not a single IP address that is assigned to someone), and then attempt to contact them. That’s right, even in the year 2009 it isn’t unusual for someone to knock on your door to tell you that you have infected systems.

Regardless of how the administrator is notified, the issue of response remains the same. One of the difficulties of such issues is that armed with an IP address and a port number (both of which were taken from the headers of captured network traffic) the administrator must then determine the nature of the incident. Generally, the steps to do that are to determine the physical location of the system, and then to collect and analyze information from that system.

This scenario starts and progresses in much the same manner as the previous scenario, in that I launch the FSP on my forensic workstation, go to the target system with my FRUC CD, and collect volatile data from the system.

Tip::

You can find the data I collected during this scenario in the ch2\samples directory on the accompanying media, in the archive named testcase2.zip.

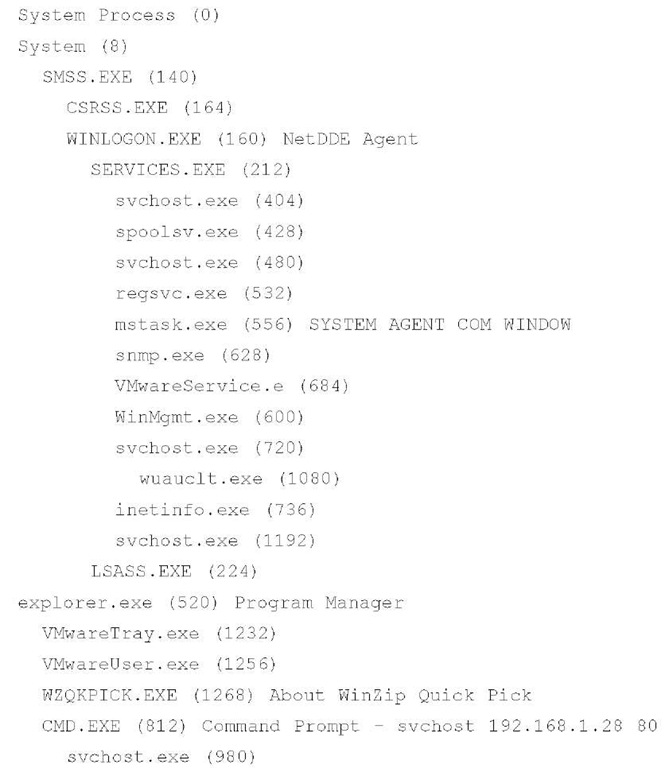

Once back at the forensic workstation, I open the output of the tlist.exe -t command (which prints the Task Tree showing each process listed, indented beneath its parent process) and PID 980 stands out as odd to me:

To see why this process appears odd, it is important to understand that on a default installation of Windows 2000, usually only two copies of svchost.exe are running.

Tip::

Microsoft Knowledge Base article Q250320 (http://support.microsoft.com/?kbid=250320) provides a description of svchost.exe on Windows 2000 (Knowledge Base article Q314056 [http://support.microsoft.com/kb/314056/EN-US/] provides a description of svchost.exe on Windows XP). The example output of the tlist -s command not only shows two copies of svchost.exe running, but also references the Registry key that lists the groupings illustrated in the article. Also see Microsoft Knowledge Base article Q263201 (http://support.microsoft.com/?kbid=263201) for a list of default processes found on Windows 2000 systems.

When you are looking at processes on a system, it helps to know a little bit about how processes are created in relation to each other. For example, as illustrated in the output of the tlist -t command earlier (taken from a Windows 2000 system), most system processes originate from the process named "System" (PID 8 on Windows 2000, PID 4 on XP), whereas most user processes originate from explorer.exe, which is the shell, or as listed by tlist.exe, the "Program Manager." Generally (and I use this word carefully, as there may be exceptions), we see that the System process is the "parent" process for the services.exe process, which in turn is the parent process for, well, many services. Services.exe is the parent process for the svchost.exe processes, for instance. On the user side, a command prompt (cmd.exe) will appear as a child process to the explorer.exe process, and any command run from within the command prompt, such as tlist -t, will appear as a child process to cmd.exe.
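The parent/child relationships described above are what tlist -t draws. If you have process records as (PID, parent PID, image name) tuples from any tool that reports the parent PID, a similar tree can be rebuilt with a short function. This is a sketch under that assumption; the function name and the sample PIDs are hypothetical. Processes whose parent is no longer in the listing are treated as roots, which is why explorer.exe typically appears at the top level once the process that launched it has exited.

```python
from collections import defaultdict


def tree_lines(procs, indent="  "):
    """Render (pid, ppid, name) records as indented lines, similar in spirit
    to tlist -t. Parents missing from the listing make their children roots."""
    pids = {pid for pid, _, _ in procs}
    children = defaultdict(list)
    roots = []
    for pid, ppid, name in procs:
        if ppid in pids and ppid != pid:
            children[ppid].append((pid, name))
        else:
            roots.append((pid, name))

    lines, seen = [], set()

    def walk(pid, name, depth):
        if pid in seen:  # guards against loops caused by PID reuse
            return
        seen.add(pid)
        lines.append(f"{indent * depth}{name} ({pid})")
        for child_pid, child_name in children[pid]:
            walk(child_pid, child_name, depth + 1)

    for pid, name in roots:
        walk(pid, name, 0)
    return lines


# Hypothetical Windows 2000-style listing (PIDs are made up)
procs = [
    (0, 0, "System Process"),
    (8, 0, "System"),
    (144, 8, "smss.exe"),
    (168, 144, "winlogon.exe"),
    (200, 168, "services.exe"),
    (380, 200, "svchost.exe"),
    (800, 780, "explorer.exe"),  # parent 780 has exited
    (900, 800, "cmd.exe"),
]
print("\n".join(tree_lines(procs)))
```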

So, how is this important to live response? Take a look at the output from the tlist -t command again. You’ll see an instance of svchost.exe (PID 980) running as a child process to cmd.exe, which is itself a child process to explorer.exe … not at all where we would expect to see svchost.exe!
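The same records make the svchost.exe check mechanical. This is a minimal sketch, assuming (PID, parent PID, image name) tuples; the rule table encodes only the one relationship discussed here (svchost.exe started by services.exe, as on Windows 2000 and XP), and the function name and every PID other than 980 are hypothetical.

```python
# Hypothetical rule table: image name -> parent image names considered normal
EXPECTED_PARENTS = {"svchost.exe": {"services.exe"}}


def parent_anomalies(procs, rules):
    """Return (pid, name, parent_name) for processes whose parent is not one
    the rules expect. parent_name is None if the parent is not listed."""
    names = {pid: name.lower() for pid, _, name in procs}
    hits = []
    for pid, ppid, name in procs:
        allowed = rules.get(name.lower())
        if allowed is not None and names.get(ppid) not in allowed:
            hits.append((pid, name, names.get(ppid)))
    return hits


procs = [
    (200, 168, "services.exe"),
    (380, 200, "svchost.exe"),
    (800, 780, "explorer.exe"),
    (900, 800, "cmd.exe"),
    (980, 900, "svchost.exe"),  # launched from a command prompt
]
print(parent_anomalies(procs, EXPECTED_PARENTS))  # [(980, 'svchost.exe', 'cmd.exe')]
```

A missing parent is reported as None rather than silently passed, since an orphaned svchost.exe is itself worth a second look.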

Now, let’s take this a step further. What if the running svchost.exe (PID 980) had been installed as a service? Although we would not have noticed this in the output of tlist -t, we would have seen something odd in the output of tlist -c, which shows us the command line used to launch each process. The rogue svchost.exe would most likely have had to run from a directory other than system32, thanks to Windows File Protection (WFP). WFP, introduced with Windows 2000, "protects" certain system (and other very important) files: an attempt to modify one of them causes WFP to "wake up" and automatically replace the modified file with a fresh copy from its cache (leaving evidence of this activity in the Event Log). Windows 2000 had some issues in which WFP could easily be subverted, but those have been fixed. So, assuming that WFP hasn’t been subverted in some manner, we would expect to see the rogue svchost.exe running from another directory, perhaps Windows\System or Temp, alerting us to the culprit.
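The tlist -c check described above, looking for a protected system file name running from somewhere other than system32, might be sketched as follows. It assumes (PID, command line) pairs, a hypothetical watch list of names, and C:\WINNT\system32 as the expected directory (the default %SystemRoot% on a clean Windows 2000 install; pass a different value for other systems). Unquoted paths containing spaces are not handled. Because svchost.exe may be launched without its extension (for example, `...\system32\svchost -k rpcss`), the sketch appends .exe when it is missing. The function names are hypothetical.

```python
import ntpath

# Hypothetical watch list: binaries expected to run only from system32
SYSTEM32_ONLY = {"svchost.exe", "services.exe", "lsass.exe", "winlogon.exe"}


def image_path(command_line):
    """Return the executable portion of a command line, honoring quotes."""
    cmd = command_line.strip()
    if cmd.startswith('"'):
        return cmd[1:].split('"', 1)[0]
    return cmd.split(None, 1)[0] if cmd else ""


def misplaced_images(commands, expected_dir=r"C:\WINNT\system32"):
    """Return (pid, path) for watched binaries running outside expected_dir."""
    want = ntpath.normcase(expected_dir)
    hits = []
    for pid, cmd in commands:
        path = image_path(cmd)
        name = ntpath.basename(path).lower()
        if not name.endswith(".exe"):
            name += ".exe"  # e.g. "svchost -k rpcss"
        if name in SYSTEM32_ONLY and ntpath.normcase(ntpath.dirname(path)) != want:
            hits.append((pid, path))
    return hits


# Hypothetical tlist -c style data
commands = [
    (380, r"C:\WINNT\system32\svchost -k rpcss"),
    (1100, r"C:\WINNT\Temp\svchost.exe"),
    (1200, r'"C:\Program Files\App\app.exe" /x'),
]
print(misplaced_images(commands))  # [(1100, 'C:\\WINNT\\Temp\\svchost.exe')]
```

Using ntpath rather than os.path keeps the Windows path handling identical when the analysis runs on a non-Windows workstation.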

Warning::

WFP can be subverted on all Windows systems, in some cases rather easily. Apparently, an undocumented application program interface (API) function is available through sfc_os.dll, exported at ordinal 5, and has been given the name SfcFileException; this is discussed at the Bitsum Technologies site (www.bitsum.com/aboutwfp.asp) and at other locations across the Internet, with the notable exception of Microsoft.com. According to descriptions of this API function, properly calling it will disable WFP for one minute, enough time for a "protected" file to be modified or replaced. As WFP does not poll the protected files, once WFP is enabled again, there is nothing to notify it that the file has been changed. Under normal circumstances, when the operating system generates a file change event, WFP "wakes up," checks to see whether the change event occurred for a protected file, and if so, replaces the file with a "known good" copy from the cache and generates an Event Log record. With WFP disabled for one minute via the SfcFileException API, there is nothing to detect or alert to the fact that the file was changed.

Example 3

Microsoft’s psexec.exe (http://technet.microsoft.com/en-us/sysinternals/bb897553.aspx) is a great tool to demonstrate how you might go about looking for "unusual" or "suspicious" processes on systems. Many times, an intruder will gain access to a system through some means and take advantage of the fact that he has Administrator-level privileges, and escalate those privileges to the System level. This is done, in part, to (a) prevent an administrator from noticing that the bad guy is on the system or has a process running, and (b) prevent the administrator from being able to simply stop the "bad" process from running.

So, the first thing we’ll do is download a copy of psexec.exe from the Microsoft/Sysinternals site, and then run it with the following command line:

At this point, we still have a command prompt, but it’s running with System-level privileges. Now, to add some data to observe, launch Solitaire by typing sol at the command prompt. You’ll notice that you won’t see the application pop up on your desktop, but you will get the command prompt back without any errors.

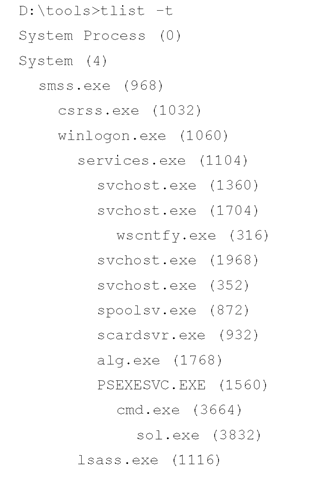

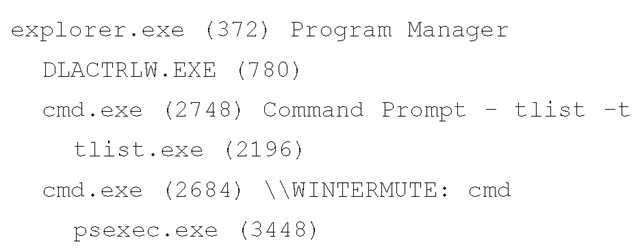

Now, open another command prompt, and run tlist.exe -t. The output should look similar to the following (output trimmed for the sake of brevity):

Notice that beneath the explorer.exe process, you see command prompts running for both tlist.exe and psexec.exe (processes with PIDs of 2748 and 2684, respectively). However, you also see PSEXESVC.EXE running above explorer.exe in the process listing tree view. This is because the process is running with System-level privileges. Beneath the PSEXESVC.EXE process, indented to indicate that it is a child process of PSEXESVC.EXE, is yet another command prompt (process with PID 3664), and beneath that process is the Solitaire child process. Processes launched by a service in this way (such as sol.exe here) do not run in interactive mode, as they would if a user had started them normally.
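Walking a process's ancestry makes this psexec.exe case easy to spot programmatically: sol.exe traces back through cmd.exe and PSEXESVC.EXE to services.exe rather than to explorer.exe. The sketch below assumes (PID, parent PID, image name) tuples; the PIDs loosely follow the listing described above with the missing ones made up, and the function names, as well as the idea of treating services.exe as the marker of a service-launched process, are illustrative assumptions.

```python
def ancestry(procs, pid):
    """Return image names from `pid` up to the oldest ancestor still listed."""
    table = {p: (pp, name) for p, pp, name in procs}
    chain, seen = [], set()
    while pid in table and pid not in seen:  # `seen` stops PID-reuse loops
        seen.add(pid)
        ppid, name = table[pid]
        chain.append(name)
        pid = ppid
    return chain


def launched_by_service(procs, pid, marker="services.exe"):
    """True if any ancestor of `pid` (not the process itself) is `marker`."""
    return any(name.lower() == marker for name in ancestry(procs, pid)[1:])


procs = [
    (200, 168, "services.exe"),
    (3600, 200, "PSEXESVC.EXE"),
    (3664, 3600, "cmd.exe"),
    (3700, 3664, "sol.exe"),
    (800, 780, "explorer.exe"),
    (2500, 800, "cmd.exe"),
    (2684, 2500, "psexec.exe"),
]
print(ancestry(procs, 3700))  # ['sol.exe', 'cmd.exe', 'PSEXESVC.EXE', 'services.exe']
print(launched_by_service(procs, 3700), launched_by_service(procs, 2684))  # True False
```

An interactive program such as sol.exe or cmd.exe whose chain ends at services.exe rather than explorer.exe is exactly the pattern the text describes.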

I used this example to illustrate what can happen when the principle of least privilege isn’t followed when creating user accounts. Too often, some sort of downloader gets on a user’s system, either through the user accessing a malicious Web site or through some other means, such as an e-mail attachment. The downloader then does its job of grabbing other malware, and because the user account is running on the system with Administrator privileges, the malware then has the ability to do everything the user can, such as create Scheduled Tasks or install Windows services. With its privileges escalated beyond the reach of the Administrator account, the malware now has unfettered access to all system resources. In addition, the malware is no longer interacting with the desktop, which means a number of artifacts will no longer be available.