Event Logs

Event Logs are essentially files within the file system, but they can change. In fact, depending on how they’re configured and what events are being audited, they can change quite rapidly.

Depending on how the audit policies are configured on the "victim" system and how you’re accessing it as the first responder, entries can be generated within the Event Logs. For example, if you decide to run commands against the system from a remote location (i.e., the system is in another building or another city, and you cannot get to it quickly but you want to preserve some modicum of data) and the proper audit configuration is in place, the Security Event Log will contain entries for each time you log in. If enough of these entries are generated, you could end up losing valuable information that pertains to your investigation. Tools such as psloglist.exe and dumpevt.exe can be used to retrieve the event records, or the .evt files themselves may be copied off the system (this depends on the level of access and permissions of the account being used).

At this point, you may be thinking "Okay, given all these tools and utilities, I have an incident on my hands. What data do I need to collect to resolve the issue?" The stock answer is "It depends." I know that’s probably not the answer you wanted to hear, but let me see if, in explaining that response, we can build an understanding of why that is the response.

The volatile data that is the most useful to your investigation depends on the type of incident you’re faced with. For example, an incident involving a remote intrusion or a Trojan backdoor will generally mean that the process, network connection, and process-to-port-mapping information (and perhaps even the contents of certain Registry keys) will be the most valuable to you. However, if an employee in a corporate environment is suspected of having stolen company-proprietary data or violating the corporate acceptable use policy (AUP), information about storage devices connected to his system, Web browsing history, contents of the Clipboard, and so on could be more valuable to your investigation.

The key to all this is to know what information is available to your investigation, how you can retrieve that information, and how you can use it. As you start to consider different types of incidents and the information you need to resolve them, you will start to see an overlap between the various tools you use and the data you’re interested in for your investigation. Although you might not develop a "one size fits all" batch file that runs all the commands you will want to use for every investigation, you could decide that having several smaller batch files (or configuration files for the Forensic Server Project, which is described later in the topic) is a better approach. That way, you can collect only the information you need for each situation.

Devices and Other Information

You could choose to collect other types of information from a system that might not be volatile in nature, but you want to record it for documentation purposes. For example, perhaps you want to know something about the hard drive installed in the system. Di.pl is a Perl script that implements WMI to list the various disk drives attached to the system as well as partition information. Ldi.pl implements WMI to collect information about logical drives (C:\, D:\, etc.), including local fixed drives, removable storage devices, and remote shares. Sr.pl lists information about System Restore Points on Windows XP systems.

DevCon, available from Microsoft, can be used to document devices that are attached to a Windows system. A CLI replacement for the Device Manager, DevCon can show available device classes as well as the status of connected devices.

A Word about Picking Your Tools

What I’m trying to do is make you aware of where you need to look and show you ways in which you can collect the data you need for your investigations. Sometimes it’s simply a matter of knowing that the information is there.

When we’re collecting data from live systems, we will most often have to interact with the operating system itself, using the available API. Different tools can use different API calls to collect the same information.

It’s always a good idea to know how your tools collect information. What API calls does the executable use? What DLLs does it access? How is the data displayed, and how does that data compare to other tools of a similar nature?

Test your tools to determine the effects they have on a live system. Do they leave any artifacts on the system? If so, what are they? Be sure to document these artifacts because this documentation allows you to identify (and document) steps that you take to mitigate the effects of using, and justify the use of, these tools. For example, Windows XP performs application prefetching, meaning that when you run an application, some information about that application (e.g., code pages) is stored in a .pf file located in the %WINDIR%\Prefetch directory. This directory has a limit of 128 .pf files. If you’re performing incident-response activities and there are fewer than 128 .pf files in this directory, one of the effects of the tools you run on the system will be that .pf files for those tools will be added to the Prefetch directory. Under most circumstances, this might not be an issue. However, let’s say your methodology includes using nc.exe (netcat). If someone had already used nc.exe on the system, your use of any file by that name would have the effect of overwriting the existing .pf file for nc.exe, potentially destroying evidence (e.g., modifying MAC times or data in the file, such as the path to the executable image).

Performing your own tool testing and validation might seem like an arduous task. After all, who wants to run through a tool-testing process for every single tool? Well, you might have to, because few sites provide this sort of information for their tools; most weren’t originally written to be used for incident response or computer forensics. However, once you have your framework (tools, process, etc.) in place, it’s really not that hard, and there are some simple things you can do to document and test the tools you use. Documenting and testing your tools is very similar to testing or analyzing a suspected malware program, a topic covered in detail in the next topic.

The basic steps of documenting your tools consist of static and dynamic testing. Static testing includes documenting unique identifying information about the tool, such as:

■ Where you got it (URL)

■ The file size

■ Cryptographic hashes for the file, using known algorithms

■ Information retrieved from the file, such as portable executable (PE) headers, file version information, import/export tables, etc.

This information is easy to retrieve using command-line tools and scripting languages such as Perl, and the entire collection process (as well as archiving the information in a database, spreadsheet, or flat file) is easy to automate.
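The static documentation steps listed above can be sketched in a few lines. The text mentions Perl; the sketch below uses Python instead, purely for illustration. The function name `document_tool`, the record layout, and the choice of MD5, SHA-1, and SHA-256 are my assumptions, and the PE header and import table details are left out because they need a dedicated parser.

```python
import hashlib
import json
import os
from datetime import datetime, timezone


def document_tool(path, source_url="unknown"):
    """Return a dict of static identifying information for one tool.

    The record layout is hypothetical; adapt it to your own database,
    spreadsheet, or flat file.
    """
    hashes = {name: hashlib.new(name) for name in ("md5", "sha1", "sha256")}
    with open(path, "rb") as handle:
        # Read in chunks so large executables do not have to fit in memory.
        for chunk in iter(lambda: handle.read(65536), b""):
            for digest in hashes.values():
                digest.update(chunk)
    return {
        "file": os.path.basename(path),
        "source_url": source_url,  # where you got the tool
        "size_bytes": os.path.getsize(path),
        "hashes": {name: digest.hexdigest() for name, digest in hashes.items()},
        "recorded_utc": datetime.now(timezone.utc).isoformat(),
    }


def to_flat_file_line(record):
    """Serialize one record as a single JSON line for a flat-file archive."""
    return json.dumps(record, sort_keys=True)
```

Calling `document_tool` on each executable in your toolkit and appending the result of `to_flat_file_line` to a text file gives you a simple, repeatable baseline to compare against later.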

Tools & Traps…

Native Tools

Most folks I talk to are averse to using native tools on Windows systems, particularly those that are resident on the systems themselves, with the idea being that if the system is compromised, and compromised deeply enough, how can you trust the output of the tools? This is an excellent point, but you can use this to your advantage in your analysis.

Incident responders tend to prefer to run their tools from a CD or DVD, which is immutable media, meaning that if there’s a virus on the system you’re responding to, the virus can’t infect your tools. Another approach taken from the Linux world is the idea of "static binaries," which are executable files that do not rely on any of the libraries (in the Windows world, DLLs) on the victim system. This is not the easiest thing to do with respect to Windows PE files, although there are techniques you can use to simulate this effect. One of these techniques involves accessing the import table of the PE file and modifying the name of the DLL accessed, changing the name of that DLL within the file system, and then copying those files to your CD. The problem with this approach is that you don’t know how deep to go within the recursive nature of the DLLs. If you look at the import table of an executable file, you’ll likely see several DLLs and their functions referenced. If you go to one of those DLLs, you will see the functions exported within the export table, but you will also very likely see that the DLL imports functions from another DLL. As you can see, keeping track of all this just so that the tool you want to use doesn’t access any libraries on the victim system can quickly become unmanageable, and you run the risk of actually damaging the system you’re trying to rescue.

Another perspective is to use the tools you’ve decided to use and rely on the native DLLs to provide the necessary functionality through their exported API. This way, you can perform a modicum of differential analysis; that is, you use two disparate techniques to look at the same information and compare the results. For example, one way to determine whether ports are open on a system is to run a port scan against the system. A technique to see what ports are being used and what network traffic is emanating from a system is to collect network traffic captures. Neither of these techniques relies on the operating system or the code on the system itself. To perform differential analysis, you need to compare the output of the command netstat -ano to either the results of the port scan or the information from the collected network traffic capture. If as a result of either of those techniques information appears that is not available or visible in the output of netstat.exe, you may have an issue with executables or the operating system itself being subverted.
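As a rough illustration of that comparison, the sketch below takes the saved text output of netstat -ano and a list of ports that an external scan found open, and reports any port the scan saw that netstat did not. The function names are hypothetical, the parsing assumes the usual five-column TCP rows with an English "LISTENING" state, and only TCP is handled.

```python
def listening_tcp_ports(netstat_text):
    """Extract the listening TCP ports from saved 'netstat -ano' output."""
    ports = set()
    for line in netstat_text.splitlines():
        fields = line.split()
        # TCP rows have five fields: protocol, local, foreign, state, PID.
        if len(fields) == 5 and fields[0] == "TCP" and fields[3] == "LISTENING":
            # rpartition also copes with IPv6 addresses such as [::]:135.
            ports.add(int(fields[1].rpartition(":")[2]))
    return ports


def hidden_ports(netstat_text, scanned_open_ports):
    """Return ports seen open from the outside that netstat does not report."""
    return sorted(set(scanned_open_ports) - listening_tcp_ports(netstat_text))
```

An empty result does not prove the system is clean; a non-empty one is simply a pointer to where you should look next.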

Dynamic testing involves running the tools while using monitoring programs to document the changes that take place on the system. Snapshot comparison tools such as InControl5 are extremely useful for this job, as are monitoring tools such as RegMon and FileMon, both of which were originally available from Sysinternals.com but are now available from Microsoft. RegMon and FileMon let you see not only the Registry keys and files that are accessed by the process, but also those that are created or modified. You might also consider using such tools as Wireshark to monitor inbound and outbound traffic from the test system while you’re testing your tools, particularly if your static analysis of a tool reveals that it imports networking functions from other DLLs.

Live-Response Methodologies

When you’re performing live response, the actual methodology or procedure you use to retrieve the data from the systems can vary, depending on a number of factors. As a consultant and an emergency responder, I’ve found that it’s best to have a complete understanding of what’s available and what can go into your toolkit (considering issues regarding purchasing software, licensing, and other fees and restrictions) and then decide what works based on the situation.

There are two basic methodologies for performing live response on a Windows system: local and remote.

Local Response Methodology

Performing live response locally means you are sitting at the console of the system, entering commands at the keyboard, and saving information locally, either directly to the hard drive or to a removable device (thumb drive, USB-connected external drive) or network resource (network share) that appears as a local resource. This is done very often in situations where the responder has immediate physical access to the system and her tools on a CD or thumb drive. Collecting information locally from several systems can often be much quicker than locating a network connection or accessing a wireless network. With the appropriate amount of external storage and the right level of access, the first responder can quickly and efficiently collect the necessary information. To further optimize her activities, the first responder might have all her tools written to a CD and managed via a batch file or some sort of script that allows for a limited range of flexibility (e.g., the USB-connected storage device is mapped to different drive letters, the Windows installation is on a D:\ drive, etc.).

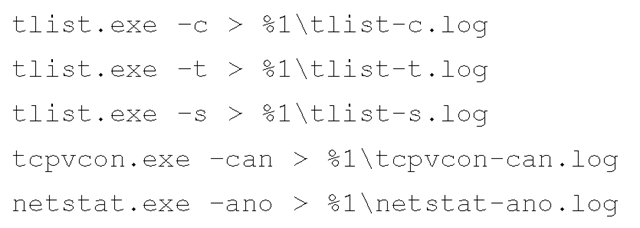

The simplest way to implement the local methodology is with a batch file. I tend to like batch files and Perl scripts because instead of typing the same commands over and over (and making mistakes over and over), I can write the commands once and have them run automatically. An example of a simple batch file that you can use during live response looks like this:

There you go; three utilities and five simple commands. Save this file as local.bat and include it on the CD, along with copies of the associated tools. You may also want to add to the CD trusted copies of the command processor (cmd.exe) for each operating system. Before you launch the batch file, take a look at the system and see what network drives are available, or insert a USB thumb drive into the system and see what drive letter it receives (say, F:\), then run the batch file like so (the D:\ drive is the CD-ROM drive):

Once the batch file completes, you’ll have five files on your thumb drive. Of course, you can add a variety of commands to the batch file, depending on the breadth of data you want to retrieve from a system.
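The same idea, running a fixed list of commands and saving each one’s output to the destination drive, can be sketched in Python. This is only an illustration of the logic, not the batch file described above: the function name `collect` and the shape of the command list are my own, and on a real response you would still want trusted, self-contained executables on the CD rather than a dependency on an interpreter installed on the victim system.

```python
import subprocess
from pathlib import Path


def collect(commands, dest_dir):
    """Run each (argv, output_name) pair and save its output under dest_dir.

    'commands' is a hypothetical list such as
    [(["netstat", "-ano"], "netstat.txt"), ...].
    """
    dest = Path(dest_dir)
    dest.mkdir(parents=True, exist_ok=True)
    written = []
    for argv, output_name in commands:
        target = dest / output_name
        with open(target, "wb") as out:
            try:
                # Send stdout and stderr to the file, like "cmd > file 2>&1".
                subprocess.run(argv, stdout=out, stderr=subprocess.STDOUT, check=False)
            except OSError as error:
                # Note a missing tool in the output rather than abort the run.
                out.write(f"failed to run {argv!r}: {error}\n".encode())
        written.append(target)
    return written
```

Passing the destination (for example, F:\) as an argument keeps the list of commands independent of whatever drive letter the thumb drive happens to receive.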

Several freely available examples of toolkits were designed to be used in a local response fashion; among them are the Incident Response Collection Report (IRCR; up to Version 2.3 at the time of this writing and available at http://tools.phantombyte.com/) and the Windows Forensic Toolchest (WFT, available from www.foolmoon.net/security/wft/index.html and created by Monty McDougal). Although they differ in their implementation and output, the base functionality of both toolkits is substantially the same: Run external executable files controlled by a Windows batch file, and save the output locally. WFT does a great job of saving the raw data and allowing the responder to send the output of the commands to HTML reports.

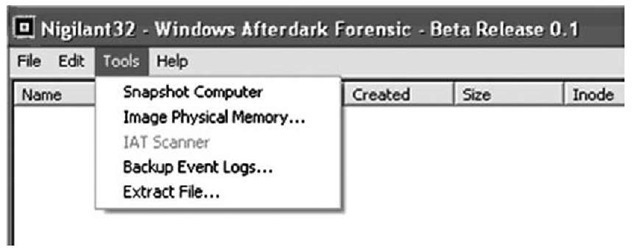

Another approach to developing a local response methodology is to encapsulate as much as possible into a single application using the Windows API, which is what tools such as Nigilant32 from Agile Risk Management LLC (www.agilerm.net, which has been incorporated into F-Response at www.f-response.com) attempt to achieve. Nigilant32 uses the same Windows API calls used by external utilities to collect volatile information from a system (see Figure 1.14) and has the added capabilities of performing file system checks and dumping the contents of physical memory (RAM).

Figure 1.14 Nigilant32 GUI

The interesting thing about the batch file-style toolkits is that a lot of folks have them. When I’m at a customer location or a conference, many times I’ll talk to folks who are interested in comparing their approach to others’. Some have drawn on Windows Forensics and Incident Recovery, or they’ve read about other tools and incorporated them into their toolkits. Oddly enough, when it really comes down to it, there is a great deal of overlap between these toolkits. The batch file-style toolkits employ executables that use the same (or similar) Windows API calls as other tools such as Nigilant32.

Many of the tools we’ve discussed here (WFT, Nigilant32, and even many of the CLI tools) are also available as part of the Helix distribution put together by Drew Fahey and available through the e-Fense Web site (www.e-fense.com/helix/). Helix includes a bootable Linux side of the CD, as well as a Windows live-response side, and has been found by many to be extremely useful.

Remote Response Methodology

The remote response methodology generally consists of a series of commands executed against a system from across the network. This methodology is very useful in situations with many systems, because the process of logging in to the system and running commands is easy to automate. In security circles, we call this being scalable. Some tools run extremely well when used in combination with psexec.exe from Sysinternals.com, and additional information can be easily collected via the use of WMI. Regardless of the approach you take, keep in mind that (a) you’re going to need login credentials for each system, and (b) each time you log in to run a command and collect the output, you’re going to add an entry to the Security Event Log (provided the appropriate level of auditing has been enabled). Keeping that in mind, we see that the order of volatility has shifted somewhat, so I recommend that the first command you use is the one to collect the contents of the Security Event Log.

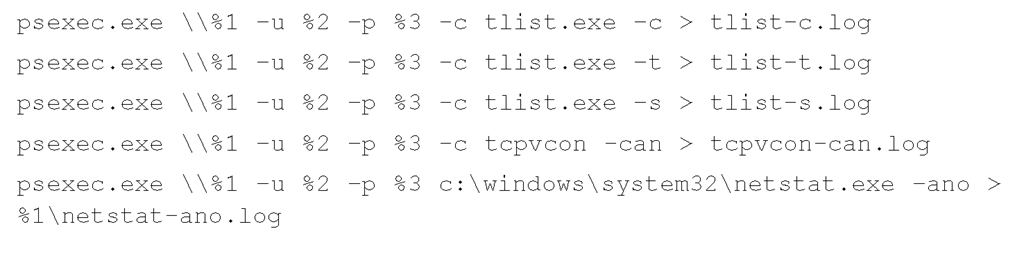

You can use a Windows batch file as the basis of implementing this methodology. Taking three arguments at the command line (the name or IP address of the system and the username/password login credentials), you can easily script a series of commands to collect the necessary information. You will need to execute some commands using psexec.exe, which will copy the executable to the remote system, run it, and allow you to collect the output from standard output (STDOUT), or redirect the output to a file, just as though you were running the same command locally. Other commands will take a UNC path (the name of the system prefaced with \\) and the login credentials as arguments, so you will not need to use psexec.exe. Finally, you can implement WMI via VBScript or Perl to collect data. Microsoft provides a script repository (www.microsoft.com/technet/scriptcenter/default.mspx) with numerous examples of WMI code implemented in various languages, including Perl, making designing a custom toolkit something of a cut-and-paste procedure.

Implementing our local methodology batch file for the remote methodology is fairly trivial:

This batch file (remote.bat) sits on the responder’s system and is launched as follows:

Once the batch file has completed, the responder has the output of the commands in five files, ready for analysis, on her system.

If you’re interested in using WMI to collect information remotely but you aren’t a big VBScript programmer, you might want to take a look at wmic.exe, the native CLI implementation for WMI. Ed Skoudis wrote an excellent beginner’s tutorial (http://isc.sans.org/diary.php?storyid=1622) on the use of wmic.exe for the SANS Internet Storm Center, which included examples such as collecting a list of installed patches from remote systems. Pretty much anything available to you as a Win32 class via WMI can be queried with wmic.exe. For example, to display the processes running locally on your system, you can use the following command:

This is a pretty simple and straightforward command, and when it’s executed, you can see the output right there in the console. You can also redirect the output to a file, and you can even choose from among various formats, such as CSVs (for opening in Excel or parsing with Perl) or even an HTML table. Using additional switches such as /Node:, /User:, and /Password:, you can include several wmic.exe commands in a batch file and collect an even wider range of data from remote systems. Further, administrators can use these commands to compile hardware and software inventory lists, determine systems that need to be updated with patches, and more. WMI is a powerful interface into managed Windows systems in and of itself, and wmic.exe provides easy access for automating commands.
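If you drive wmic.exe from a script rather than a batch file, the switches mentioned above are easy to assemble programmatically. The sketch below only builds the argument list and does not run anything; the helper name `wmic_command` is hypothetical, and `process get Name,ProcessId` is simply one query I chose for illustration, not necessarily the command the text refers to.

```python
def wmic_command(query, node=None, user=None, password=None, output_format=None):
    """Build an argument list for wmic.exe; nothing is executed here."""
    argv = ["wmic"]
    # Global switches such as /node: come before the alias (the query).
    if node:
        argv.append(f"/node:{node}")
    if user:
        argv.append(f"/user:{user}")
    if password:
        argv.append(f"/password:{password}")
    argv.extend(query)
    # /format: is a verb switch, so it follows the query.
    if output_format:
        argv.append(f"/format:{output_format}")
    return argv


# Example: a CSV process listing from a remote system (placeholder values).
example = wmic_command(
    ["process", "get", "Name,ProcessId"],
    node="192.168.1.10", user="Administrator", password="password",
    output_format="csv",
)
```

On the responder’s Windows workstation, the resulting list could be handed to `subprocess.run` with its output redirected to a file, one file per query and per system.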

With the right error handling and recovery as well as activity logging in the code, this can be a highly effective and scalable way to quickly collect information from a number of systems, all managed from and stored in a central location. ProDiscover Incident Response (IR) from Technology Pathways (www.techpathways.com) is a commercial tool that implements this methodology. The responder can install an agent from a central location, query the agent for available information, and then delete the agent. Thanks to ProDiscover’s Perl-based ProScript API, the responder can automate the entire process. This approach minimizes the number of logins that will appear in the Security Event Log as well as the amount of software that needs to be installed on the remote system. ProDiscover IR has the added capabilities of retrieving the contents of physical memory (as of this writing, from Windows 2000, XP, and 2003 systems, but not from Windows 2003 SP1 and later) as well as performing a live acquisition of the hard drive via the network.

Another tool that must be mentioned is F-Response (www.f-response.com), designed and developed by Matt Shannon (Matt started with Nigilant32 from www.agilerm.net). Although F-Response is not the same sort of tool as ProDiscover IR or other agent-based or remote access live-response tools available today, it has, without a doubt, changed the face of incident response as we know it. The short description of F-Response is that it provides you with read-only access, over the network, to a remote system’s hard drive. There are three editions of F-Response (Field Kit, Consultant, and Enterprise) and they all basically work in the same way; after installing and configuring the agent on the remote system, you can access that remote drive as a read-only local drive on your workstation. This works over the local network, between buildings (across the street or in another part of the city), to a remote data center … anywhere you have TCP/IP network connectivity. The F-Response agent is available to run on Linux, Mac OS X, and Windows systems, and on Windows systems (as of Version 2.03) the system’s physical memory will appear on your local system as a virtual drive. In essence, F-Response provides you with a tool-agnostic (you can use any tool you wish to acquire an image of the remote drive) mechanism for performing response activities. Although F-Response does not allow you to, say, run tlist.exe and get a process listing from the remote system, it does provide you with the means to easily acquire the contents of RAM, a topic that we will thoroughly discuss in the next topic. The media that accompanies this topic contains a PDF document that describes, in detail, how to install F-Response Enterprise Edition (EE) remotely; as it turns out, this is also a stealthy method of deploying F-Response EE. This document is also available at the F-Response Web site for registered users of the F-Response product.

The limitation of the remote response methodology is that the responder must be able to access and, in some instances, log in to the systems via the network. If the Windows-style login (via NetBIOS) has been restricted in some way (NetBIOS not installed, firewalls/routers blocking the protocols, or similar), this methodology will not work.

The Hybrid Approach (a.k.a. Using the FSP)

I know I said there are two basic approaches to response methodologies, and that’s true. There is, however, a third approach that is really just a hybrid of the local and remote methodologies, so for the sake of simplicity, we’ll just call it the hybrid methodology (the truth is that I couldn’t think of a fancy name for it). This methodology is most often used in situations where the responder cannot log in to the systems remotely but wants to collect all information from a number of systems and store that data in a central location. The responder (or an assistant) will go to the system with a CD or thumb drive (ideally, one with a write-protect switch that is enabled), access the system, and run the tools to collect information. As the tools are executed, each one will send its output over the network to the central "forensic server." In this way, no remote logins are executed, trusted tools are run from a nonmodifiable source, and very little is written to the hard drive of the victim system. With the right approach and planning, the responder can minimize his interaction with the system, reducing the number of choices he needs to make with regard to input commands and arguments as well as reducing the chance for mistakes.

Warning::

As we know from Locard’s Exchange Principle, there will be an exchange of "material." References to the commands run will appear in the Registry, and on XP systems files will be added to the Prefetch directory. It is not possible to perform live response without leaving some artifacts; the key is to understand how to minimize those artifacts, and to thoroughly document your response actions.

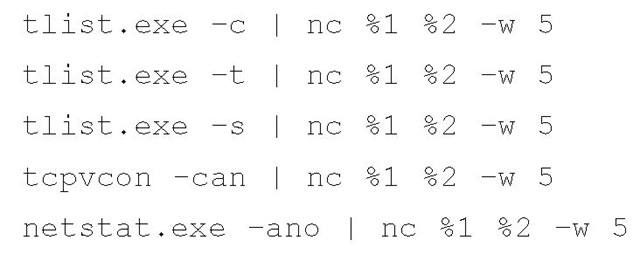

Perhaps the simplest way to implement the hybrid methodology is with a batch file. You’ve already seen various tools and utilities that you have at your disposal for collecting a variety of information. In most of the cases we’ve looked at, as with the local methodology, we’ve used CLI tools and redirected their output to a file. So, how do you get the information you’ve collected off the system? One way to do that is to use netcat, described as the "TCP/IP Swiss army knife" because of the vast array of things you can do with it. For our purposes, we won’t go into an exhaustive description of netcat; we’ll use it to transmit information from one system to another. First, we need to set up a "listener" on our forensic server, and we do that with the following command line:

D:\forensics>nc -L -p 80 > case007.txt

This command line tells netcat (nc.exe) to listen (really hard … keep the listener open after the connection has been closed) on port 80, and anything that comes in on that port gets sent (redirected, actually) to the file named case007.txt. With this setup, we can easily modify our batch file so that instead of writing the output of our commands to files, we send it through netcat to the "listener" on the forensic server:

Save this file as hybrid.bat, and then launch it from the command line, like so (D:\ is still the CD-ROM drive):

Once we run this batch file, we’ll have all our data safely off the victim system and on our forensic server for safekeeping and analysis.
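To make the mechanics concrete, here is a minimal Python sketch of the same pattern: a listener that appends whatever arrives to a case file, and a sender that runs a command and ships its output across the network. It stands in for netcat only for the purpose of illustration; the function names are mine, and, like plain netcat, nothing here is encrypted or authenticated.

```python
import socket
import subprocess


def listen_once(server_socket, case_file):
    """Accept one connection and append everything received to case_file."""
    connection, _address = server_socket.accept()
    with connection, open(case_file, "ab") as out:
        while True:
            data = connection.recv(4096)
            if not data:  # the sender closed the connection
                break
            out.write(data)


def send_command_output(argv, host, port):
    """Run argv and send its output to the listener instead of to disk."""
    result = subprocess.run(
        argv, stdout=subprocess.PIPE, stderr=subprocess.STDOUT, check=False
    )
    with socket.create_connection((host, port)) as connection:
        connection.sendall(result.stdout)
```

Calling `listen_once` in a loop on the forensic server approximates the "listen harder" behavior, because the server socket stays open after each sender disconnects and every command’s output lands in the same case file.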

Tip::

If you prefer not to pass this sort of information over the network "in the clear," an encrypted version of netcat, called cryptcat, is available on SourceForge at http://sourceforge.net/projects/cryptcat/.

Several freeware tools implement this hybrid methodology. One is the Forensic Server Project (FSP), released in my first book (Windows Forensics and Incident Recovery, published by Addison-Wesley) in July 2004 and improved upon quite a bit through the release of the first edition of my second book, titled Windows Forensic Analysis. The FSP is open source, written in Perl and freely available. The idea for the FSP arose from the use of netcat, whereby the responder would run a tool from a CD loaded in the CD drive of the victim system and then pipe the output of the command through netcat. Instead of displaying the output of the command on the screen (STDOUT), netcat would be responsible for sending the information to a waiting listener on the server, where the output of the command would be stored (and not written to the victim system’s hard drive). This worked well in some situations, but as the number of commands grew and the commands began to have a range of argument options, this methodology became a bit cumbersome. As more commands had to be typed, there was a greater chance for mistakes, and sometimes even a batch file to automate everything just wasn’t the answer. So, I decided to create the Forensic Server Project, a framework for automating (as much as possible) the collection, storage, and management of live-response data.

The FSP consists of two components: a server and a client. The server component is known as the FSP (really, I couldn’t come up with anything witty or smart to call it, and "Back Orifice" was already taken). You copy the files for the FSP to your forensic workstation (I use a laptop when I’m on-site), and when they’re run, the FSP will sit and listen for connections. The FSP handles simple case management tasks, logging, storage, and the like for the case (or cases) that you’re working on. When a connection is received from the client component, the FSP will react accordingly to the various "verbs" or commands that are passed to it.

The current iteration of the client component is called the First Responder Utility, or FRU. The FRU is very client-specific, because this is what is run on the victim system, from either a CD or a USB thumb drive. The FRU is really a very simple framework in itself in that it uses third-party utilities, such as the tools we’ve discussed in this topic, to collect information from the victim system. When one of these commands is run, the FRU captures the output of the command (which you normally see at the console, in a command prompt window) and sends it out to the FSP, which will store the information sent to it and log the activity. The FRU is also capable of collecting specific Registry values or all values in a specific Registry key. Once all the commands have been run and all data collected, the FRU will "tell" the FSP that it can close the log file, which the FSP will do.

The FRU is controlled by an initialization (i.e., .ini) file, which uses a format similar to the old Windows 3.1 INI files and consists of four sections. The first section, [Configuration], has some default settings for the FRU to connect to the FSP; specifically, the server and port to connect to. This is useful in smaller environments or in larger environments where the incident response data collection will be delegated to regional offices. However, these settings can be overridden at the command line.

The next section is the [Commands] section, which lists the external third-party tools to be executed to collect information. Actually, these can be any Windows PE file that sends its output to STDOUT (i.e., the console). I have written a number of small tools, in Perl, and then "compiled" them into stand-alone executables so that they can be run on systems that do not have Perl installed. Many of them are useful in collecting valuable information from systems and can be launched via the FRU’s INI files. The format of this section is different from the other sections and is very important. The format of each line looks like the following:

The index is the order in which you want the command run; for example, you might want to run one command before any others, so the index allows you to order the commands. The command line is the name of the tool you’re going to run plus all the command-line options you’d want to include, just as though you were running the command from the command prompt on the system itself. These first two elements are separated by an equals sign (=) and are followed by a double colon (::). In most cases, the final elements of one of these lines would be separated by semicolons, but several tools (e.g., psloglist.exe from Sysinternals.com) have options that include the possibility of using a semicolon, so I had to choose something that likely would not be used in the command line as a separator. Finally, the last element is the name of the file to be generated, most often the name of the tool, with the .dat extension. When the output is sent to the FSP server, it will be written to a file within the designated directory, with the filename prepended with the name of the system being investigated. This way, data can be collected from multiple systems using the same running instance of the FSP.
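Going only by the description above (an index, an equals sign, the command line, a double colon, and an output filename), a line from the [Commands] section could be parsed along the following lines. This is a sketch in Python rather than the FRU’s own Perl code, the function names are hypothetical, and the exact layout is inferred from the prose rather than taken from an actual INI file.

```python
def parse_command_line(line):
    """Split 'index=command line::output file' into its three elements."""
    # The first '=' ends the index; the command itself may contain '='.
    index_text, _, rest = line.partition("=")
    # The last '::' starts the output filename; ';' may appear in the command.
    command, separator, output_name = rest.rpartition("::")
    if (
        not index_text.strip().isdigit()
        or not separator
        or not command.strip()
        or not output_name.strip()
    ):
        raise ValueError(f"not a valid [Commands] entry: {line!r}")
    return int(index_text), command.strip(), output_name.strip()


def ordered_commands(lines):
    """Parse all non-blank lines and return them sorted by index."""
    return sorted(parse_command_line(line) for line in lines if line.strip())
```

Splitting on the last double colon rather than on semicolons is what allows a command line that itself contains a semicolon to pass through intact.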

One important comment about tools used with the FRU: Because the system you, as the investigator, are interacting with is live and running, you should change the name of the third-party tools you are using. One good idea is to prepend the filenames with something unique, such as f_ or fru_. This is in part due to the fact that your interaction with the system will be recorded in some way and due to the prefetch capability of Windows XP. Remember Locard’s Exchange Principle? Well, it’s a good idea to make sure the artifacts you leave behind on a system are distinguishable from all the other artifacts.

An example taken from an FRU INI file looks like the following:

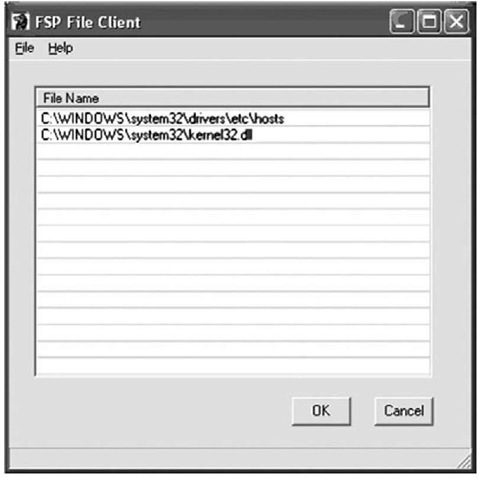

Another client is available for copying files off the victim system, if the investigator decides this is something she wants to do. Figure 1.15 illustrates the GUI for the file copy client, or FCLI.

Figure 1.15 File Copy Client GUI

To use the FCLI, the investigator simply launches it and selects File, then Config to enter the IP address and port of the FSP server. Then she selects File | Open and chooses the files she wants to copy. Once she’s selected all the files she wants to copy, she simply clicks the OK button. For each file, the FCLI first collects the MAC times and other metadata, and then computes the MD5 and SHA-1 hashes. This information is sent to the FSP. Then the FCLI copies the binary contents of the file to the server. Once the copy operation has completed, the FSP server computes hashes for the copied file and verifies them against the hashes received from the FCLI prior to the copy operation. All the actions occur automatically, without any interaction from the investigator, and they’re all logged by the FSP.
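The sequence just described (record the metadata, hash the file, copy it, then hash the copy and compare) can be sketched in a few lines of Python. This is not the FCLI or FSP code, and it leaves out the network transfer entirely; the function names file_metadata, file_hashes, and verify_copy are hypothetical and exist only to show the order of operations.

```python
import hashlib
import os
from datetime import datetime, timezone


def _to_utc(timestamp):
    """Format a POSIX timestamp as an ISO 8601 string in UTC."""
    return datetime.fromtimestamp(timestamp, tz=timezone.utc).isoformat()


def file_metadata(path):
    """Record size and MAC times; call this before reading the file."""
    st = os.stat(path)
    return {
        "size": st.st_size,
        "modified": _to_utc(st.st_mtime),
        "accessed": _to_utc(st.st_atime),
        # st_ctime has traditionally meant creation time on Windows and
        # last metadata change on Unix-like systems.
        "created_or_changed": _to_utc(st.st_ctime),
    }


def file_hashes(path, chunk_size=65536):
    """Compute the MD5 and SHA-1 hashes of a file in a single pass."""
    md5, sha1 = hashlib.md5(), hashlib.sha1()
    with open(path, "rb") as handle:
        for chunk in iter(lambda: handle.read(chunk_size), b""):
            md5.update(chunk)
            sha1.update(chunk)
    return {"md5": md5.hexdigest(), "sha1": sha1.hexdigest()}


def verify_copy(source_hashes, copied_path):
    """Return True if the copied file hashes to the values recorded at the source."""
    return file_hashes(copied_path) == source_hashes
```

Collecting the metadata before hashing matters because reading the file to compute the hashes can update its last access time. Comparing both hashes on the receiving side lets the server confirm that the copy it holds has the same contents as the file that was read on the live system.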

The media that accompanies this topic includes several movie files that illustrate how to set up and use the FSP, along with instructions on where to get the necessary player.

Summary

In this topic, we took a look at live response, specifically collecting volatile (and some nonvolatile) information from live Windows systems. As we discussed, live systems contain a lot of data that we can use to enhance our understanding of an incident; we just need to collect that data before we remove power from the system so that we can acquire an image of the hard drive. We also discussed how changes to the computing landscape are increasingly presenting us with situations where our only viable option is to collect volatile data.

My intention in this topic was not to provide you with the "best practice" approach to collecting volatile data, for the simple fact that there isn’t one. My intention was to provide you with enough information such that based on your needs and the conditions of the situation you’re facing, you can not only employ your own "best practices," but when the situation changes, employ and justify the use of better practices.

All Perl scripts mentioned and described in this topic are available on the accompanying media, along with a stand-alone executable "compiled" with Perl2Exe. ProScripts for Technology Pathways’ ProDiscover product are also available on the accompanying media but are provided as Perl scripts only.

Solutions Fast Track

Live Response

- Locard’s Exchange Principle states that when two objects come into contact, material is exchanged between them. This rule pertains to the digital realm as well.

- Anything an investigator does on a live system, even nothing, will have an effect on the system and leave an artifact. Artifacts will be created on the system as it runs with no interaction from a user.

- The absence of an artifact where one is expected is itself an artifact.

- The order of volatility illustrates to us that some data has a much shorter "lifespan" or "shelf life" than other data.

- When we’re performing incident response, the most volatile data should be collected first.

- The need to perform live response should be thoroughly understood and documented.

- Without the appropriate attention being given to performing live response, an organization may be exposed to greater risk by the incident response activities than was posed by the incident itself.

- Corporate security policies may state that live response is the first step in an investigation. Responders must take care to follow documented processes, and document their actions.

What Data to Collect

- A great deal of data that can give an investigator insight into her case is available on the system while it is powered up and running, and some of that data is available for only a limited time.

- Many times, the volatile data you collect from a system will depend on the type of investigation or incident you’re presented with.

- When collecting volatile data, you need to keep both the order of volatility from RFC 3227 and Locard’s Exchange Principle in mind.

- The key to collecting volatile data and using that data to support an investigation is thorough documentation.

Nonvolatile Information

- Nonvolatile information (such as system settings) can affect your investigation, so you might need to collect that data as part of your live response.

- Some of the nonvolatile data you collect could affect your decision to proceed further in live response, just as it could affect your decision to perform a follow-on, postmortem investigation.

- The nonvolatile information you choose to collect during live response depends on factors such as your network infrastructure, security and incident response policies, or system configurations.

Live-Response Methodologies

- There are three basic live-response methodologies: local, remote, and a hybrid of the two. Knowing the options you have available and having implementations of those options will increase your flexibility for collecting information.

- The methodology you use will depend on factors such as the network infrastructure, your deployment options, and perhaps even the political structure of your organization. However, you do have multiple options available.

- When choosing your response methodology, be aware that your actions will leave artifacts on the system. Your actions will be a direct stimulus on the system that will cause changes to occur in the state of the system, since Registry keys may be added, files may be added or modified, and executable images will be loaded into memory. However, these changes are, to a degree, quantifiable, and you should thoroughly document your methodology and actions.

Frequently Asked Questions

Q: When should I perform live response?

A: There are no hard and fast rules stating when you should perform live response. However, as more and more regulatory bodies (consider SEC rules, HIPAA, FISMA, Visa PCI, and others) specify security measures and mechanisms that are to be used as well as the questions that need to be addressed and answered (was personal sensitive information accessed?), live response becomes even more important.

Q: I was involved with a case in which, after all was said and done, the "Trojan defense" was used. How would live response have helped or prepared us to address this issue?

A: By collecting information about processes running on the system, network connections, and other areas where you would have found Trojan or backdoor artifacts, you would have been able to determine whether such things were running while the system was live. Your postmortem investigation would include an examination of the file system, including scheduled tasks and the like, to determine the likelihood that a Trojan was installed, but collecting volatile data from a live system would provide you with the necessary information to determine whether a Trojan was running at the time you were in front of the system.

Q: I’m not doing live response now. Why should I start?

A: Often an organization will opt for the "wipe-and-reload" mentality, in which the administrator will wipe the hard drive of a system thought to be compromised, then reload the operating system from clean media, reinstall the applications, and load the data back onto the system from backups. This is thought to be the least expensive approach. However, this approach does nothing to determine how the incident occurred in the first place. Some might say, "I reinstalled the operating system and updated all patches," and that’s great, but not all incidents occur for want of a patch or hotfix. Sometimes it’s as simple as a weak or nonexistent password on a user account or application (such as the sa password on SQL Server) or a poorly configured service. No amount of patching will fix these sorts of issues. Without determining how an incident occurred and addressing the issue, the incident is likely to occur again and in fairly short order after the bright, new, clean system is reconnected to the network. In addition, as I showed throughout this topic, a great deal of valuable information is available when the system is still running—information such as physical memory, running processes, network connections, and the contents of the Clipboard—that could have a significant impact on your investigation.