Consumer Media Libraries

Fig. 2.4 shows a typical user interface for authoring this level of metadata. In this example, the fields are those of the Content Description Object in Microsoft’s ASF specification [ASF04]. While these are the five core attributes used by many Microsoft applications [Loomis04], ASF also supports an Extended Content Description Object for other name-value pairs, as well as a Metadata Library Object for more detailed information. Most formats support arbitrary name-value pairs, but applications promote or require the use of standard attribute taxonomies. As a practical matter, typical users are not aware of metadata unless the values are displayed by the applications that they use regularly. Media players may expose it through a “media properties” or “media info” dialog, but often this is not readily accessible from the top-level user interface of the player application. With the emergence of personal media library manager applications, however, users have become very aware of metadata and may even be motivated to edit and maintain it for their collections, although they will certainly favor systems that manage it for them.

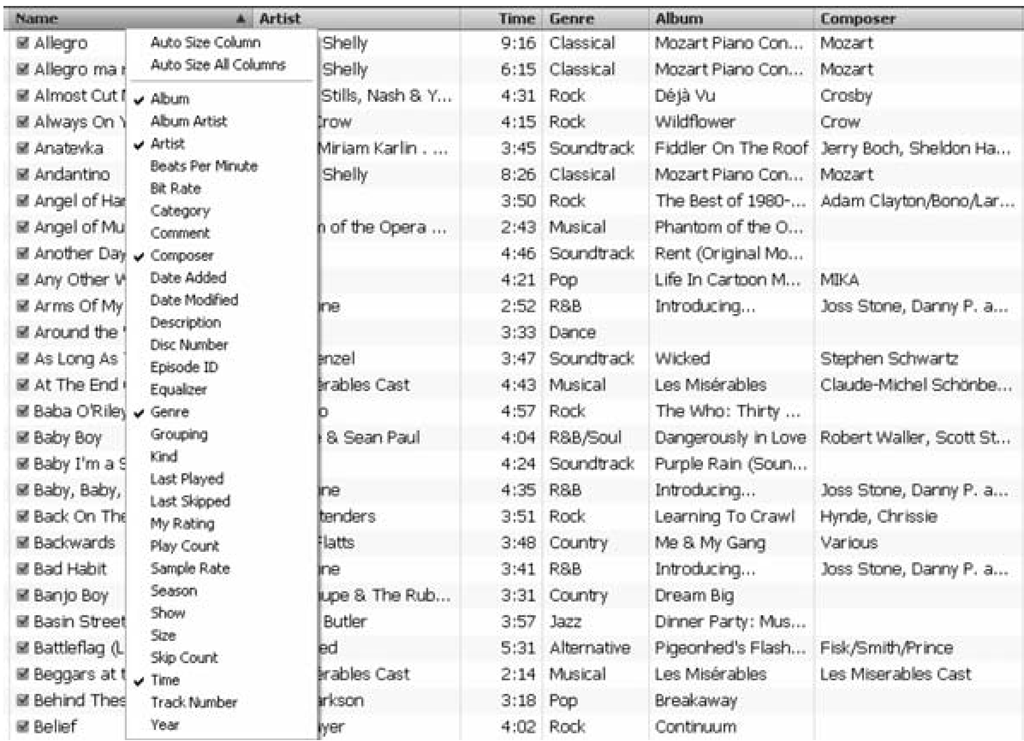

Fig. 2.5. Metadata for personal media collection (Apple® iTunes®) including items with missing metadata.

A sample media library metadata view is shown in Fig. 2.5, in which users locate content by sorting and browsing or by searching. A subset of the metadata fields is shown, and the interface allows additional fields to be chosen (overlaid menu in the figure). However, many of these fields are of little value for search (e.g. beats per minute) or are only suitable for specific classes of content (e.g. episode ID). As can be seen in the figure, not all fields are fully populated, and there are often inconsistencies in this type of data.

Of course, not all media library managers use exactly the same set of metadata tags, so a media search engine that ingests content using tags maintained in personal media libraries must translate or map those tags. For example, Table 2.3 lists tag names from two major consumer media library applications and suggests a mapping. Note that metadata mapping, while trivial here, is typically much more difficult, and in most cases shades of meaning are lost in this normalization process.

Table 2.3. Media library managers label attributes differently (only a few representative differing tag names are shown; most tag names are the same).

| Windows Media Player 10 | Apple iTunes 7 |
| --- | --- |
| Length | Time |
| Type | Kind |
| Mood | N/A |
| N/A | Equalizer |
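The mapping suggested by Table 2.3 can be sketched as a simple lookup. This is a minimal illustration only: the canonical names (`duration`, `media_type`, and so on), the source labels, and the sample values are hypothetical and are not taken from either application.

```python
# Hypothetical per-application tag maps covering only the tags in Table 2.3.
# The canonical names on the right are invented for this sketch.
CANONICAL_TAGS = {
    "wmp10": {"Length": "duration", "Type": "media_type", "Mood": "mood"},
    "itunes7": {"Time": "duration", "Kind": "media_type", "Equalizer": "equalizer"},
}


def normalize(record: dict, source: str) -> dict:
    """Rename source-specific tags to canonical names.

    Tags without an entry in the map are passed through in lower case,
    on the assumption that most tag names already agree.
    """
    mapping = CANONICAL_TAGS[source]
    return {mapping.get(tag, tag.lower()): value for tag, value in record.items()}


# Made-up records in the style of each application.
wmp = normalize({"Length": "3:45", "Type": "mp3", "Mood": "Mellow"}, "wmp10")
itunes = normalize({"Time": "3:45", "Kind": "MPEG audio file", "Artist": "X"}, "itunes7")
print(wmp)
print(itunes)
```

Renaming the tags is the easy part. The values may still use different vocabularies (here `mp3` versus `MPEG audio file`), which is one way that shades of meaning are lost during normalization.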

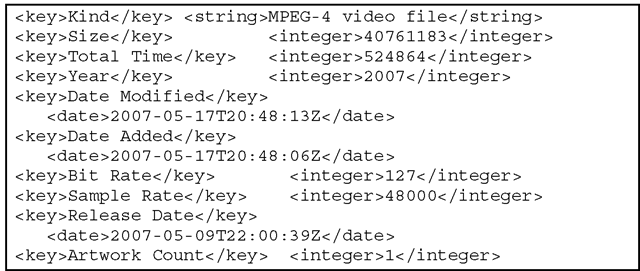

Libraries are maintained in persistent databases which may expose interfaces to allow other applications to interoperate. Alternatively, import and export of library data can be used for this purpose. For example, Apple’s iTunes exposes library data in a straightforward key-value XML format as shown in Fig. 2.6.

Fig. 2.6. Media library metadata for a single asset (extract from Apple® iTunes® library XML format).
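As a rough sketch of how another application might consume such an export, the following assumes that the library file is an Apple property list with a top-level `Tracks` dictionary, and that `Total Time` is expressed in milliseconds. The sample document is hand-written for illustration and its values are made up.

```python
import plistlib

# Hand-written sample in the style of an iTunes library export (assumption:
# the real file is an XML property list with a "Tracks" dictionary).
SAMPLE = b"""<?xml version="1.0" encoding="UTF-8"?>
<plist version="1.0">
<dict>
  <key>Tracks</key>
  <dict>
    <key>101</key>
    <dict>
      <key>Track ID</key><integer>101</integer>
      <key>Name</key><string>Example Song</string>
      <key>Artist</key><string>Example Artist</string>
      <key>Total Time</key><integer>225000</integer>
    </dict>
  </dict>
</dict>
</plist>
"""

library = plistlib.loads(SAMPLE)

for track in library["Tracks"].values():
    # Fields are frequently missing, so use .get() with a fallback.
    album = track.get("Album", "(missing)")
    seconds = track["Total Time"] / 1000  # assumed to be milliseconds
    print(track["Name"], track.get("Artist", "(missing)"), album, seconds)
```

Using `.get()` with a default reflects the earlier observation that not all fields are populated in real libraries.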

UPnP Forum

Media library managers typically monitor the local filesystem, or a set of specified folders, for the addition of new media and maintain a database of metadata extracted from the media file formats that they support. While it is possible for consumers to monitor collections of media on other computers in their home network, configuring file sharing can be cumbersome due to system incompatibilities and security configurations such as firewalls. The emergence of easy-to-use, low-cost, high-capacity network-attached storage devices promotes the concept of shared media storage. To enable ease of use and to foster interoperability of networked media devices, the computing, consumer electronics and home automation industries formed the Digital Living Network Alliance (DLNA) and the Universal Plug and Play (UPnP) Forum. The UPnP Forum defines a range of standards, including the concepts of Media Servers and Media Renderers [UPnP02]. UPnP uses the Digital Item Declaration Language defined in MPEG-21 [DIDL01] and in particular defines a subset referred to as DIDL-Lite.
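To give a feel for DIDL-Lite, the sketch below parses a small hand-written fragment of the kind a Media Server might return. The namespace URIs and element names follow common UPnP usage as best recalled and should be checked against the UPnP specifications; the item, its identifiers, and the URL are invented.

```python
import xml.etree.ElementTree as ET

# Namespace URIs as commonly seen in DIDL-Lite documents (verify against the spec).
NS = {
    "didl": "urn:schemas-upnp-org:metadata-1-0/DIDL-Lite/",
    "dc": "http://purl.org/dc/elements/1.1/",
    "upnp": "urn:schemas-upnp-org:metadata-1-0/upnp/",
}

# Invented example item; not taken from any real server.
SAMPLE = """<DIDL-Lite xmlns="urn:schemas-upnp-org:metadata-1-0/DIDL-Lite/"
    xmlns:dc="http://purl.org/dc/elements/1.1/"
    xmlns:upnp="urn:schemas-upnp-org:metadata-1-0/upnp/">
  <item id="42" parentID="7" restricted="1">
    <dc:title>Example Song</dc:title>
    <dc:creator>Example Artist</dc:creator>
    <upnp:class>object.item.audioItem.musicTrack</upnp:class>
    <res protocolInfo="http-get:*:audio/mpeg:*">http://192.168.1.10/media/42.mp3</res>
  </item>
</DIDL-Lite>"""


def parse_items(didl_xml: str) -> list:
    """Return one dictionary of basic metadata per <item> element."""
    root = ET.fromstring(didl_xml)
    items = []
    for item in root.findall("didl:item", NS):
        items.append({
            "id": item.get("id"),
            "title": item.findtext("dc:title", default="", namespaces=NS),
            "creator": item.findtext("dc:creator", default="", namespaces=NS),
            "class": item.findtext("upnp:class", default="", namespaces=NS),
            "url": item.findtext("didl:res", default="", namespaces=NS),
        })
    return items


items = parse_items(SAMPLE)
print(items[0])
```

Note that the descriptive fields reuse Dublin Core element names (`dc:title`, `dc:creator`), which simplifies mapping to other metadata systems.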

MP3 ID3

ID3 tags arose out of a need for organizing MP3 music files into libraries for so-called “jukebox” applications. Note that ID3 is not part of the MPEG specifications. ID3v2 supports not only global metadata but also detailed metadata, and even supports embedding of images. Beyond metadata related to the asset, ID3 supports application-specific features such as encoding the number of times that a song has been played. As flexible as the ID3v2 format is, its precursor ID3v1 was extremely limited and rigid. (However, this utilitarian simplicity resulted in wide adoption.) The fields used in the ID3v1 tag are shown in Table 2.4, and include song title, artist, album, year, comment, and genre. The strings can be a maximum of only 30 characters long, and the genre is an 8-bit code referencing a static table of 80 values. This table was later extended to 148 values, and in ID3v1.1 the comment field was shortened to 28 characters and a track number was added.
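The rigidity of ID3v1 makes it easy to decode. The following sketch assumes the commonly used layout of a 128-byte block beginning with the marker `TAG`, with a 4-character year, and the ID3v1.1 convention of a zero byte followed by the track number at the end of the comment field. The text encoding is assumed to be Latin-1, which the format itself does not guarantee; the sample tag is synthetic.

```python
import struct
from typing import Optional


def parse_id3v1(tag: bytes) -> Optional[dict]:
    """Decode a 128-byte ID3v1 or ID3v1.1 tag; return None if it is not one."""
    if len(tag) != 128 or not tag.startswith(b"TAG"):
        return None
    # 3-byte marker (skipped), three 30-byte strings, 4-byte year,
    # 30-byte comment, 1-byte genre code: 128 bytes in total.
    title, artist, album, year, comment, genre = struct.unpack(
        "=3x30s30s30s4s30sB", tag
    )

    def text(raw: bytes) -> str:
        # Fields are padded with NUL bytes (or spaces); Latin-1 is an assumption.
        return raw.split(b"\x00", 1)[0].decode("latin-1").strip()

    track = None
    # ID3v1.1: a zero byte at comment[28] signals a track number at comment[29].
    if comment[28] == 0 and comment[29] != 0:
        track = comment[29]
        comment = comment[:28]

    return {
        "title": text(title),
        "artist": text(artist),
        "album": text(album),
        "year": text(year),
        "comment": text(comment),
        "genre_code": genre,  # index into the static genre table
        "track": track,
    }


# Synthetic ID3v1.1 tag built by hand for illustration.
sample = (
    b"TAG"
    + b"Example Song".ljust(30, b"\x00")
    + b"Example Artist".ljust(30, b"\x00")
    + b"Example Album".ljust(30, b"\x00")
    + b"1999"
    + b"Note".ljust(28, b"\x00")
    + b"\x00"          # ID3v1.1 marker byte
    + bytes([7])       # track number
    + bytes([17])      # genre code
)
info = parse_id3v1(sample)
print(info)
```

In an MP3 file the tag, when present, occupies the final 128 bytes, so a reader would typically seek to that position before calling a function like this one.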

Table 2.4. A subset of the Dublin Core metadata elements and their analogs in popular media file formats.

| Dublin Core Elements | QuickTime | Mobile MP4 | MP3 ID3v1 | Microsoft ASF base |
| --- | --- | --- | --- | --- |
| Title | nam | titl | Song Title | Title |
| Creator | aut, art | auth | Artist | Author |
| | alb | | Album | |
| Date | day | | Year | |
| Description | des, cmt | dscp | Comment | Description |
| Type | | | Genre | |
| Rights | cpy | cprt | | Copyright Rating |

3GP / QuickTime / MP4

The QuickTime file format [QT03] was adopted for the MPEG-4 file format (MPEG-4 Part 14), which is sometimes referred to by its file extension, MP4. QuickTime also uses the extension MOV for a wide range of media formats. The 3rd Generation Partnership Project (3GPP) defined the 3GPP file format (3GP), which is essentially a specific instance of MP4 with some extensions for mobile applications, such as support for Adaptive Multi-Rate (AMR) format audio. It is used for exchanging messages using the MMS protocols. Metadata tags including author, title and description are defined in asset metadata within a user data “box” (the structural segment of the ISO file format) [3GPP, p.29]. In addition to these fields, a box is defined to store ID3v2 tags directly, with no translation or mapping required.
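The box structure can be illustrated with a short walker. This is a sketch under stated assumptions: each box starts with a 32-bit big-endian size (which includes the header) followed by a four-character type, a size of 1 means a 64-bit size follows, and a size of 0 means the box runs to the end of its container. The sample data is synthetic rather than a valid 3GP file, the user data box is assumed to sit inside `moov`, and the internal layout of the `titl` payload is not modeled.

```python
import struct

# Boxes this sketch descends into; real files have more container types.
CONTAINERS = {b"moov", b"udta"}


def walk_boxes(data: bytes, start: int = 0, end=None, depth: int = 0):
    """Yield (depth, type, payload_offset, payload_size) for each box found."""
    end = len(data) if end is None else end
    pos = start
    while pos + 8 <= end:
        size, box_type = struct.unpack_from(">I4s", data, pos)
        header = 8
        if size == 1:  # 64-bit size stored after the type field
            if pos + 16 > end:
                break
            (size,) = struct.unpack_from(">Q", data, pos + 8)
            header = 16
        elif size == 0:  # box extends to the end of the enclosing space
            size = end - pos
        if size < header or pos + size > end:
            break  # malformed or truncated box; stop rather than guess
        yield depth, box_type, pos + header, size - header
        if box_type in CONTAINERS:
            yield from walk_boxes(data, pos + header, pos + size, depth + 1)
        pos += size


def make_box(box_type: bytes, payload: bytes) -> bytes:
    """Build a box with a 32-bit size header (helper for the demo only)."""
    return struct.pack(">I4s", 8 + len(payload), box_type) + payload


# Synthetic data: the payloads are placeholders, not spec-accurate contents.
sample = make_box(b"ftyp", b"3gp4" + bytes(4)) + make_box(
    b"moov", make_box(b"udta", make_box(b"titl", bytes(6) + b"Demo\x00"))
)

for depth, box_type, offset, size in walk_boxes(sample):
    print("  " * depth + box_type.decode("ascii"), size)
```

A metadata extractor would continue from here by decoding the payload of each user data box according to the 3GPP specification.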

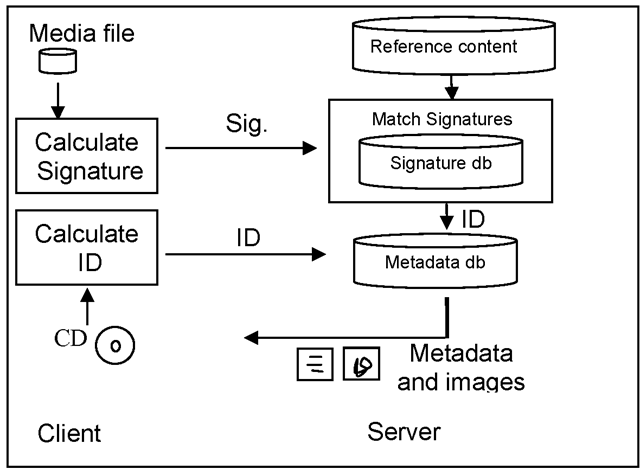

Metadata Services

In addition to parsing media files to extract metadata, personal library managers gather additional metadata from online repositories. The basic data flow is shown in Fig. 2.7. An application on the client reads an identifier from the local media or, in this example, calculates a unique identifier based on the length and number of the tracks on a compact disc, and then requests additional metadata from a server using this identifier as a query. The response can include detailed, up-to-date metadata and images of cover art (or box art). Library applications can also calculate a signature from a single media file, such as an MP3 file. The signature is used to query a database of signatures to determine a unique content identifier. This form of content identification is sometimes called “fingerprinting” and it has been used successfully to detect copyright infringement on music sharing services. User-generated content video hosting sites such as YouTube are notorious for hosting copyrighted content posted illegally by users. The sites take refuge from liability in the “safe harbor” provisions of the Digital Millennium Copyright Act [DMCA98], but are increasingly using fingerprinting to identify and remove copyrighted content. Fingerprints based on the audio component are useful for A/V applications as well, particularly in cases where users dub copyrighted audio into a video that they are producing. Video fingerprinting systems have become available more recently.

Fig. 2.7. Metadata services based on content identification.
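The client side of this data flow can be sketched as follows. The identifier is modeled on the classic CDDB-style disc ID, computed from track start offsets and the total playing time; the exact formula should be treated as an assumption rather than a reference implementation. The in-memory dictionary is a hypothetical stand-in for the metadata server, and the album data is invented.

```python
FRAMES_PER_SECOND = 75  # CD audio addresses are counted in 1/75-second frames


def digit_sum(n: int) -> int:
    """Sum of the decimal digits of n."""
    return sum(int(d) for d in str(n))


def disc_id(track_offsets: list, leadout_offset: int) -> int:
    """Compute a 32-bit identifier from the disc's table of contents.

    track_offsets: start of each track in frames.
    leadout_offset: start of the lead-out (end of the last track) in frames.
    """
    starts = [off // FRAMES_PER_SECOND for off in track_offsets]
    checksum = sum(digit_sum(s) for s in starts) % 0xFF
    total_seconds = leadout_offset // FRAMES_PER_SECOND - starts[0]
    # Layout: 8-bit checksum, 16-bit playing time, 8-bit track count.
    return (checksum << 24) | (total_seconds << 8) | len(starts)


# Hypothetical stand-in for the online metadata repository.
METADATA_SERVER = {
    0x08025603: {"album": "Example Album", "artist": "Example Artist"},
}


def lookup(track_offsets: list, leadout_offset: int):
    """Query the (simulated) server with the computed identifier."""
    return METADATA_SERVER.get(disc_id(track_offsets, leadout_offset))


toc = [150, 15000, 30000]  # made-up three-track disc
print(f"{disc_id(toc, 45000):08x}", lookup(toc, 45000))
```

Because an identifier of this kind is derived only from track timing, different discs with similar layouts can collide, which is one reason signature-based fingerprinting is used when stronger identification is needed.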

Providers of this class of media metadata services include Gracenote® (formerly CDDB), Freedb, and All Media Guide (AMG, allmediaguide.com). For movies, the Internet Movie Database (IMDb) maintains a detailed collection of metadata for over 500,000 items (movies, TV episodes) and includes plot summaries, actors, and directors, in addition to typical metadata such as genre, rating, and title. JPEG images of “box art” are also available. The database is available for download for noncommercial applications, and imdb.com provides some basic tools for querying the database locally. Other entities such as AMG maintain similar movie metadata databases and provide subscription-based services for access. Many of these services also maintain Web sites for end users to search and browse media metadata, some with a business model of driving sales of hard media (DVDs or CDs).

Content Identification

Content identification is a critical component for these systems, and several unique content ID (CID) methods have been developed for this purpose. Fingerprinting is still required for cases where the ID is not trusted, such as in user-contributed video hosting applications, or for content systems that do not make use of IDs, such as MP3 file sharing. Fingerprinting systems also use content IDs to link the signatures back to the source content metadata. Content identification is a general function, and many standards incorporate some form of CID. In ISO, the TC 46/SC 9 group develops document identification standards such as ISBN, ISAN, and several others.

• ISAN: The International Standard Audiovisual Number (ISO 15706) is a system for uniquely identifying an asset, independent of the broadcast schedule or recording medium. MPEG-2 and MPEG-4 have a field for ISAN and the SMPTE Metadata dictionary supports ISAN as an identifier/locator. The ISAN has a standardized length as does the ISBN.

• UMID: Unlike the ISAN, which is intended for the entire work as a unit, Unique Material Identifiers (UMIDs, specified in SMPTE 330M) are used in the Material Exchange Format (MXF) and can reference shots and even individual frames. Rather than being issued by an organization, UMIDs can easily be generated by camcorders in a manner similar to the way SMPTE timecodes are generated. Extended UMIDs go beyond identification and encode metadata such as the creation date and time, location and the organization.

• ISRC: The International Standard Recording Code, ISO 3901, is used for sound and music video recordings and includes a representation of country of origin, recording entity (record label), year and a 5-digit serial number.

• CID: This content identifier originated in Japan and is a product of the Content ID Forum or cIDf. The CID is designed to be embedded within the digital object, typically video or images.

• CRID: TV-Anytime defines the Content Reference Identifier or CRID to uniquely identify content independent of its location or instance. It is used for EPG applications. While typically referring to a single program, CRIDs may also refer to groups of programs or segments of programs.

• ISWC: The International Standard Musical Work Code, ISO 15707, is intended to uniquely identify a musical work for rights purposes, as distinct from the particular recording, for which the ISRC is intended.

• ISMN: The International Standard Music Number (ISO 10957) can be viewed as a subset of the ISBN for works related to music such as scores and lyrics.

• DOI: The Digital Object Identifier (from the International DOI Foundation, IDF; the syntax is defined in ANSI/NISO Z39.84) offers a system for persistent identification and management of digital items. The strings are opaque, unlike CID, ISRC, etc. The DOI was initially designed for text documents.

• GUID: Some systems such as RSS support a GUID or Globally Unique Identifier element. In RSS it is an optional string of arbitrary length. RSS will be explained in detail later.
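Unlike opaque identifiers such as the DOI, structured identifiers can be decomposed into their parts. As an illustration, the sketch below splits an ISRC into the components listed above, assuming the usual 12-character layout of a 2-letter country code, a 3-character registrant code, a 2-digit year, and a 5-digit serial number. The example code is made up and does not refer to a real recording.

```python
import re
from typing import NamedTuple, Optional


class ISRC(NamedTuple):
    country: str      # 2 letters
    registrant: str   # 3 alphanumeric characters (the recording entity)
    year: str         # 2 digits
    designation: str  # 5-digit serial number


_ISRC_RE = re.compile(r"^([A-Z]{2})([A-Z0-9]{3})(\d{2})(\d{5})$")


def parse_isrc(code: str) -> Optional[ISRC]:
    """Split an ISRC into its fields; hyphens and letter case are ignored."""
    compact = code.replace("-", "").upper()
    match = _ISRC_RE.match(compact)
    return ISRC(*match.groups()) if match else None


print(parse_isrc("US-ABC-07-00042"))  # made-up code
print(parse_isrc("not-an-isrc"))
```

A check of this kind validates only the syntax; it cannot confirm that the code was actually issued.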

Recorded Television

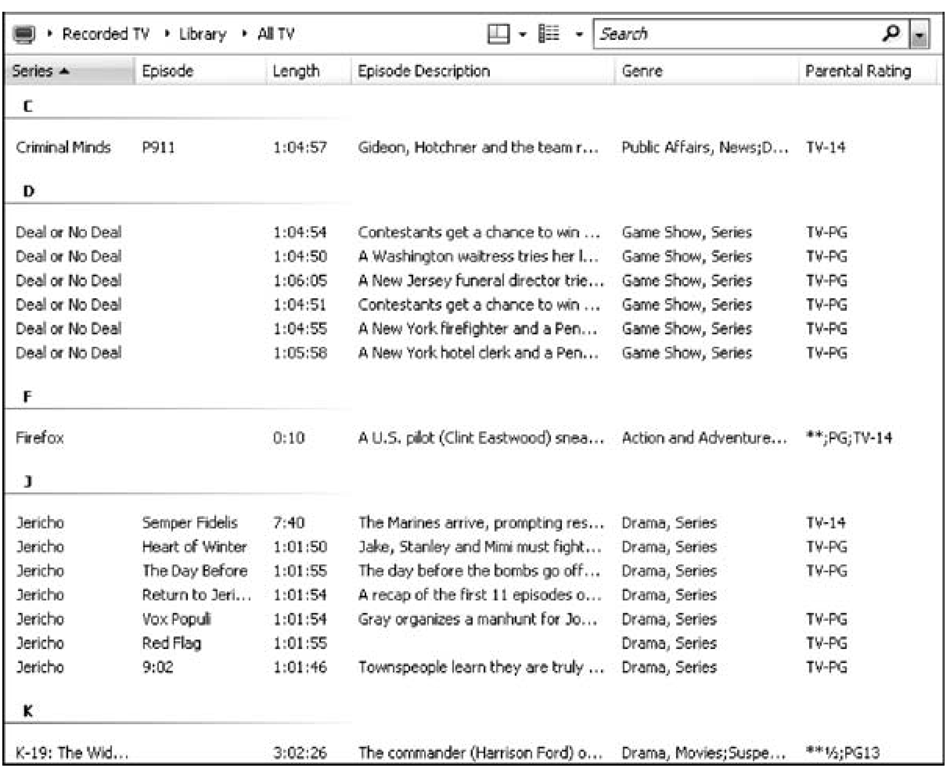

Personal media library managers used on media center PCs such as the Windows Media Center® and MythTV (Linux) organize recorded television by leveraging the EPG metadata pulled from service providers as shown in Fig. 2.8. The names of the columns across the top including series, episode, length (run time), description, genre, and rating are representative, and many other fields are available to help users find and manage their personal content collections. As is typically the case with metadata sources, some of the data is absent (e.g. episode description) or questionably formatted (e.g. the rating value of “**;PG;TV-14” seems to be a composite of multiple rating systems: MPAA, ATSC, and popularity rating).
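A search engine ingesting such data would need to separate composite values before indexing them. The sketch below is one heuristic for the rating example above, assuming that fields are separated by semicolons, that a run of asterisks is a popularity (star) rating, that values beginning with `TV-` are television parental ratings, and that the remaining short codes are MPAA ratings. These rules are guesses based on this single example, not a documented EPG format.

```python
# MPAA rating codes used to recognize movie ratings in this sketch.
MPAA = {"G", "PG", "PG-13", "R", "NC-17"}


def split_rating(raw: str) -> dict:
    """Split a composite rating string into separately labeled values."""
    parts = {}
    for token in (t.strip() for t in raw.split(";")):
        if not token:
            continue
        if set(token) == {"*"}:
            parts["stars"] = len(token)       # popularity rating
        elif token.upper().startswith("TV-"):
            parts["tv"] = token.upper()       # television parental rating
        elif token.upper() in MPAA:
            parts["mpaa"] = token.upper()     # movie rating
        else:
            parts.setdefault("other", []).append(token)
    return parts


print(split_rating("**;PG;TV-14"))
```

Unrecognized tokens are kept under `other` rather than discarded, so that no source information is lost during cleanup.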

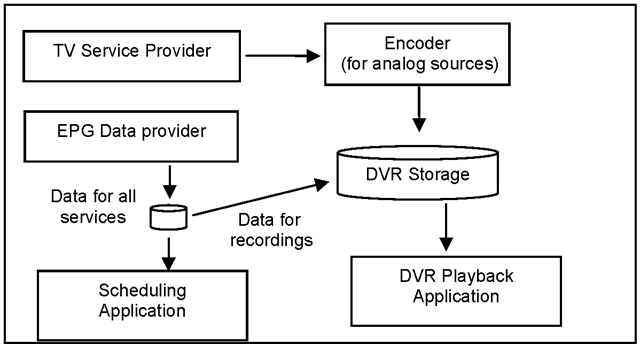

Standalone DVRs, or DVRs integrated into set-top boxes, may include a networking capability to support media sharing in the home network. The library manager can display the recorded program metadata; however, rights issues may restrict this sharing to only other DVRs in the home (a service feature called “whole home DVR”). Fig. 2.9 represents the data flow for both the EPG metadata and content in a typical DVR application. Although not shown in the figure, for recorded movies a combination of the EPG metadata and data from Internet providers such as IMDb or AMG®, as discussed above, may be used. We will focus on EPG in detail later.

Fig. 2.8. Media library metadata.

Fig. 2.9. Data flow for DVR EPG metadata.