To further illustrate the challenges and opportunities for video search, this topic will address the nature, availability, and attributes of different sources of video data. Search engines leverage all available information relevant to media, and this topic will provide details about available metadata for different types of video, including electronic program guides, content identifiers, video on demand packages, and syndication standards. We will also introduce representations of textual information associated with media, such as transcripts, closed captions, and subtitles. The breadth of metadata sources is described at a high level, and more detailed information is provided for selected domains including broadcast television, digital video recorders (DVRs), consumer video, and Internet sources such as podcasts and video blogs. After media is published on the Web, additional metadata may accrue from social sources in the form of tags, ratings, or even user-contributed subtitles, all of which video search engines can exploit to produce more accurate results.

Evolution of Digital Media Metadata

From planning through production, editing, distribution, and archiving, metadata is used throughout the video life cycle to manage and locate video content, track rights, and monetize it. Historically, in the days of film and analog video, the tools for this process were primitive: handwritten notes, labels, and numbering schemes for tapes. As video has gone digital, so have its metadata and its production and distribution processes. This migration is not yet complete: film is still the dominant archival format for some content classes, and legacy archives exist in a range of tape formats, both analog and digital.

Consumer Video Metadata

On the consumer video side, the equipment costs and time scales are radically different. Even here, while photography has gone digital and most cameras can capture video, digital camcorders have only recently begun to replace analog models. The move to digital consumer media capture has brought some limited benefits related to metadata. By looking at the file timestamps in their personal media archives, consumers can tell the exact date and time at which they shot their photos and videos. However, the filename is typically only an obscure sequence number, and the content of the media is revealed only when it is played. New devices, including camera phones, record each shot in a separate file, which is great for determining the time of the shot but only adds to the problem of locating particular content, due to the sheer volume of files created. In this respect, consumer video metadata is not much farther along than the pencil-and-paper days of video production. Promising developments on this front include video cameras that log start and stop events to provide a shot index or that record GPS (Global Positioning System) information, as well as consumer video editing packages that encourage users to add titles and other metadata.
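Recovering capture times from file timestamps, as described above, takes only a few lines of Python. This is a minimal sketch, not a recommended tool: it assumes that the file system's modification time approximates the capture time (copying or editing a file can change it), and the set of media file suffixes is an arbitrary assumption.

```python
from datetime import datetime
from pathlib import Path

# File extensions treated as media in this sketch (an illustrative assumption).
MEDIA_SUFFIXES = {".jpg", ".jpeg", ".mp4", ".mov", ".3gp", ".avi"}

def build_time_index(folder):
    """Return (timestamp, filename) pairs for media files, oldest first.

    The file-system modification time stands in for capture time; this is
    only a heuristic, since copying or editing a file may change it.
    """
    entries = []
    for path in Path(folder).iterdir():
        if path.is_file() and path.suffix.lower() in MEDIA_SUFFIXES:
            taken = datetime.fromtimestamp(path.stat().st_mtime)
            entries.append((taken, path.name))
    # Tuples sort by timestamp first, then by filename.
    return sorted(entries)
```

Calling `build_time_index` on a camera's media folder yields a chronological list, which recovers when each shot was taken but, as noted above, says nothing about what it contains.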

Metadata Loss

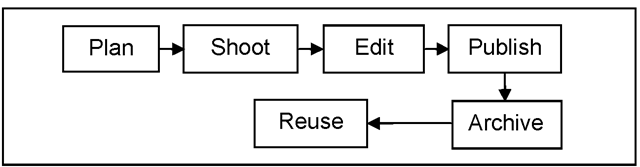

Metadata is of critical importance for search systems. It is important to capture all available metadata because, in addition to objective media parameters, good metadata tagging captures the subjective essence of the media, and it often reflects how users refer to content (by title, actors, etc.). Today's automated content analysis tools can augment this data but cannot reliably extract high-level semantics. Unfortunately, metadata is often lost somewhere between capture and delivery to end users. Video asset management systems preserve and manage metadata along with the media throughout the content life cycle. The typical asset life cycle includes not only preproduction, editing/post-production, and publication or distribution, but also archiving and reuse in future production cycles (see Fig. 2.1). Problems for metadata integrity and preservation arise as media flows from one system to another in this process. Production systems are typically assembled over time from various vendors and may involve handoffs of content between several organizations, each with its own metadata policies and practices.

Fig. 2.1. Stages in the video content life cycle. Metadata can be created and captured at each stage but may not be preserved throughout the process.
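The loss mechanism can be made concrete with a toy model in which each system in the chain retains only the fields its own schema understands. This Python sketch is purely illustrative: the stage names and field sets are hypothetical and do not describe any particular product.

```python
# Hypothetical stages, each keeping only the metadata fields its system understands.
STAGES = [
    ("capture", {"title", "shot_time", "gps", "camera_id"}),
    ("editing", {"title", "shot_time", "gps", "keywords"}),
    ("distribution", {"title", "keywords"}),
]

def run_pipeline(metadata, stages=STAGES):
    """Pass a metadata dict through each stage and record what each stage drops."""
    current = dict(metadata)
    losses = {}
    for name, understood in stages:
        dropped = sorted(k for k in current if k not in understood)
        current = {k: v for k, v in current.items() if k in understood}
        losses[name] = dropped
    return current, losses
```

Once a field is dropped it cannot be recovered downstream, even if a later stage understands it; an asset management system avoids this by carrying the full record alongside the media rather than relying on each handoff.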

Convergence of the TV and the PC is underway, and has been for 10 years or more, but the broadcast and Internet video communities still represent two distinct camps, which has implications for metadata standards. Because they were designed for a medium that is international and online by nature, Internet standards are the most accessible to search engines and are based on technologies such as XML that are familiar to Internet engineers and application developers. Today, the concept of converged services in the telecommunications industry encompasses not only the TV and PC but also mobile handsets, and it connotes seamless access to media services across multiple devices and network connection scenarios. IPTV and the move to file-based video production workflows will help bring the IP and broadcast communities closer together.

Metadata Standards

Metadata standards have emerged to facilitate the exchange of media and their descriptions between organizations and among the systems and components in video production, distribution, and archiving. Unfortunately, different industries, communities, and geographic regions have developed their own standards, designed and optimized for their own purposes. A truly universal video search engine must therefore deal with a wide range of source metadata formats. Table 2.1 lists some of the metadata standards relevant to video search applications and the bodies responsible for them, but there are many others, such as ATSC (e.g., PSIP), ETSI, DVB, and ATIS/IIF. A detailed treatment of broadcast metadata is beyond our scope, but we will introduce some of the metadata systems; interested readers can consult the references for more information (e.g., [Lug04]).

Table 2.1. Representative metadata standards.

| Standard | Body |
|---|---|
| MPEG-7, MPEG-21 | ISO/IEC – International Organization for Standardization / International Electrotechnical Commission, Moving Picture Experts Group |
| UPnP | Universal Plug and Play Forum (MPEG-21 DIDL-Lite, etc.) |
| MXF, MDD | SMPTE – Society of Motion Picture and Television Engineers |
| AAF | AMWA – Advanced Media Workflow Association |
| ADI, OCAP | CableLabs® (includes VoD) |
| TV-Anytime | Industry Forum, now ETSI – European Telecommunications Standards Institute |
| Timed Text | W3C, 3GPP, (MPEG) |
| P/Meta, BWF | EBU – European Broadcasting Union |
| Dublin Core | DCMI – Dublin Core Metadata Initiative, US NISO Z39.85, ISO 15836, OAI |
| RSS | Harvard |
| Podcast | Apple |
| MediaRSS | Yahoo |
| ID3 | Informal |
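Because a universal search engine must accept many source formats, a common first step is a crosswalk that maps each source's field names onto a single internal schema. The Python sketch below assumes a small, hypothetical internal schema (title, creator, date, description, keywords). The source-side names are real fields (Dublin Core elements, the RSS `pubDate` element, Media RSS `media:` elements, and the ID3v2.4 frames TIT2, TPE1, and TDRC), but the target assignments, such as treating ID3's lead performer as the creator, are simplifications.

```python
# Hypothetical crosswalk from source-specific field names to one internal schema.
CROSSWALK = {
    "dc": {"title": "title", "creator": "creator", "date": "date",
           "description": "description"},
    "rss": {"title": "title", "description": "description", "pubDate": "date"},
    "mediarss": {"media:title": "title", "media:description": "description",
                 "media:keywords": "keywords"},
    "id3v2": {"TIT2": "title", "TPE1": "creator", "TDRC": "date"},
}

def normalize(source, record):
    """Map one source record onto the internal schema.

    Fields with no mapping are returned separately rather than discarded,
    so that no source metadata is silently lost.
    """
    mapping = CROSSWALK[source]
    normalized, unmapped = {}, {}
    for field, value in record.items():
        if field in mapping:
            normalized[mapping[field]] = value
        else:
            unmapped[field] = value
    return normalized, unmapped
```

Keeping unmapped fields aside lets an indexer decide later whether to add them to the schema, instead of reproducing the handoff losses described earlier in this topic.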

Dublin Core

The Dublin Core Metadata Initiative defines a set of 15 elements used for a wide range of bibliographic applications [DC03], and many media metadata systems incorporate the element tag names or a subset of the core elements. (The name refers to the origin of the initiative at the Online Computer Library Center workshop in Dublin, Ohio, in 1995.) The core elements are easily understood and are widely used in media metadata applications, such as RSS podcasts and UPnP item descriptions, through the incorporation of XML namespace extensions. The Open Archives Initiative, which promotes interoperability among XML repositories, also uses the DC element set [Lag02].

The core elements are not sufficient for most applications and can be thought of as a least common denominator for metadata. For example, Dublin Core defines a single “date” element, but media applications may need to store multiple dates, such as the date the original work was published, the date it was performed, and the date it was broadcast. The Dublin Core element set has been extended, as Qualified Dublin Core, to address this [Kur06]. Three XML namespaces define DC encoding (Table 2.2).

Table 2.2. Dublin Core metadata and namespaces.

| Elements | Namespace |
|---|---|
| The 15 Dublin Core Metadata Elements (DCMES) | http://purl.org/dc/elements/1.1/ |
| DCMI elements and qualifiers | http://purl.org/dc/terms/ |
| DCMI Type Vocabulary | http://purl.org/dc/dcmitype/ |
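The three Dublin Core namespaces can be combined in a single record. The following Python sketch uses the standard library's ElementTree to describe a video item; the `<metadata>` wrapper element, the helper name `dc_record`, and the sample values are assumptions of this illustration, not part of any specification. It also shows how Qualified Dublin Core refinements separate dates that the single “date” element would conflate.

```python
import xml.etree.ElementTree as ET

DC = "http://purl.org/dc/elements/1.1/"
DCTERMS = "http://purl.org/dc/terms/"
DCMITYPE = "http://purl.org/dc/dcmitype/"

ET.register_namespace("dc", DC)
ET.register_namespace("dcterms", DCTERMS)

def dc_record(title, creator, created, issued):
    """Build a small Dublin Core description of a video item.

    The <metadata> wrapper is an arbitrary container chosen for this sketch;
    Dublin Core itself does not mandate one.
    """
    root = ET.Element("metadata")
    ET.SubElement(root, f"{{{DC}}}title").text = title
    ET.SubElement(root, f"{{{DC}}}creator").text = creator
    # dc:type refers to the DCMI Type Vocabulary term for video.
    ET.SubElement(root, f"{{{DC}}}type").text = DCMITYPE + "MovingImage"
    # Qualified DC refinements distinguish dates a plain dc:date cannot.
    ET.SubElement(root, f"{{{DCTERMS}}}created").text = created
    ET.SubElement(root, f"{{{DCTERMS}}}issued").text = issued
    return ET.tostring(root, encoding="unicode")
```

A consumer that only understands the 15 core elements can still read the title, creator, and type, while a richer application can use the refined dates.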

MPEG-7

MPEG-7 is an ISO/IEC specification titled the “Multimedia Content Description Interface,” with the broad scope of standardizing the interchange and representation of media metadata, from low-level media descriptors up through semantic structure [ISO/IEC 15938]. It also covers content management, navigation (e.g., summaries and decompositions), usage information, and user preferences. Unlike some other metadata standards, MPEG-7 is not industry specific, and it is highly flexible. There are over 450 defined metadata types, and many type values can in turn be represented by classification schemes [Smi06]. To support a wide range of applications and to leave room for media analysis algorithm development, MPEG-7 does not specify how to extract or use media descriptors; instead, it focuses on how to represent this information. For example, the visual descriptors include contour-based shape descriptors for representing image regions, but no assumptions are made about preferred image segmentation algorithms. MPEG-7 components are used in other metadata systems, such as TV-Anytime and MPEG-21.

MPEG-21

MPEG-21 defines packages of multimedia assets and promotes interoperability of systems throughout the asset value chain [ISO/IEC 21000]. It introduces the concept of a digital item (DI) as well as a digital item declaration language (DIDL). MPEG-21 Digital Item Identifiers (DII) serve to uniquely identify content and incorporate application-specific identifiers (such as ISRC, see Sect. 2.3.6) rather than specifying yet another competing system. MPEG-21 is broad in scope, addressing a range of practical issues encountered in monetizing media assets, such as DRM and media adaptation for various consumption contexts.
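A digital item declaration bundles descriptive information with the media resources it governs. The Python sketch below builds a minimal DIDL document whose item carries a DII identifier and a single video resource. The namespace URIs are the ones we understand to be used by ISO/IEC 21000-2 (DIDL) and 21000-3 (DII) and should be checked against the edition in use; the helper name, identifier value, and URL are hypothetical placeholders. A real system might carry an ISRC or another application-specific identifier in the `Identifier` element.

```python
import xml.etree.ElementTree as ET

# Namespace URIs as commonly used for DIDL and DII; verify against the
# edition of ISO/IEC 21000 you target.
DIDL = "urn:mpeg:mpeg21:2002:02-DIDL-NS"
DII = "urn:mpeg:mpeg21:2002:01-DII-NS"
ET.register_namespace("didl", DIDL)
ET.register_namespace("dii", DII)

def digital_item(identifier, media_url, mime_type):
    """Declare one Digital Item: an identifier descriptor plus one media resource."""
    didl = ET.Element(f"{{{DIDL}}}DIDL")
    item = ET.SubElement(didl, f"{{{DIDL}}}Item")
    # The identifier travels inside a Descriptor/Statement pair.
    descriptor = ET.SubElement(item, f"{{{DIDL}}}Descriptor")
    statement = ET.SubElement(descriptor, f"{{{DIDL}}}Statement", mimeType="text/xml")
    ET.SubElement(statement, f"{{{DII}}}Identifier").text = identifier
    # The media itself is referenced from a Component/Resource pair.
    component = ET.SubElement(item, f"{{{DIDL}}}Component")
    ET.SubElement(component, f"{{{DIDL}}}Resource", mimeType=mime_type, ref=media_url)
    return ET.tostring(didl, encoding="unicode")
```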

The concept articulated in MPEG-21 Digital Item Adaptation (DIA) is particularly interesting for video search engines and the services built around them. The ultimate goal is to promote “Universal Multimedia Access” (UMA), allowing content producers to create a unified media item or package and viewers to receive the content on any device at any time. While fully automating this process may not yield ideal results, the standard allows content creators to specify, in as much detail as possible, the manner in which the content is to be adapted. Not only the resources but also their descriptions are adaptable. Adaptation is possible at both the signal level (e.g., media transcoding) and the semantic level. One aspect of adaptation involves terminal capabilities, such as the codecs, input/output capabilities, bandwidth, power, CPU, storage, and DRM systems supported by the device. Beyond this, MPEG-21 supports adaptation to channel conditions, accessibility needs (for users with disabilities), and consumption context parameters such as location, time, and the visual and audio environment. Of particular interest for media metadata is the MPEG-21 notion of “metadata adaptability,” which comprises three major classes:

1. Filtering – a particular application will use only a subset of all of the possible metadata available for a particular digital item;

2. Scaling – reducing the size or volume of metadata as required by the consumption context (e.g. bandwidth or memory constraints);

3. Integration – merging descriptions from various sources for the digital item of interest.
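The three classes of metadata adaptability can be illustrated with plain Python dictionaries. This is a conceptual sketch only, not the MPEG-21 DIA description tools: the function names, the truncation-based form of scaling, and the “later source wins” merge rule are assumptions chosen for clarity.

```python
def filter_metadata(description, wanted):
    """Filtering: keep only the fields a particular application uses."""
    return {k: v for k, v in description.items() if k in wanted}

def scale_metadata(description, max_chars):
    """Scaling: shrink the description, here simply by truncating long text values."""
    return {k: (v[:max_chars] if isinstance(v, str) else v)
            for k, v in description.items()}

def integrate_metadata(*descriptions):
    """Integration: merge descriptions of the same item from several sources.

    Later sources override conflicting scalar fields; list fields are
    combined without duplicates. This precedence rule is an assumption.
    """
    merged = {}
    for desc in descriptions:
        for k, v in desc.items():
            if isinstance(v, list) and isinstance(merged.get(k), list):
                merged[k] = merged[k] + [x for x in v if x not in merged[k]]
            else:
                merged[k] = v
    return merged
```

For example, integrating a broadcaster's description with social tags, then filtering to title and tags for a mobile listing, composes the three operations in the order a delivery system might apply them.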

MPEG-21 supports many more capabilities relevant to video services, such as session mobility. Interested readers are referred to [Burnett06], from which we have drawn for this brief introduction to the topic.

The Universal Plug and Play (UPnP) specification defines “DIDL-Lite,” a subset of the MPEG-21 DIDL [UPnP02]. It is an example of combining multiple metadata specifications: the Dublin Core elements are supported via an XML namespace declaration, alongside the defined “UPnP” namespace, which augments DIDL-Lite to form the basis of the specification. This mechanism can be extended further, for example, to include “vendor metadata” such as DIG35, XrML, or even MPEG-7 [UPnP02].
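A DIDL-Lite item that mixes the Dublin Core and UPnP namespaces can be generated with Python's ElementTree. In this sketch the namespace URIs, the `upnp:class` value `object.item.videoItem`, and the four-field `protocolInfo` string follow UPnP ContentDirectory conventions as we understand them; the helper function, IDs, title, and URL are hypothetical.

```python
import xml.etree.ElementTree as ET

DIDL_LITE = "urn:schemas-upnp-org:metadata-1-0/DIDL-Lite/"
DC = "http://purl.org/dc/elements/1.1/"
UPNP = "urn:schemas-upnp-org:metadata-1-0/upnp/"
ET.register_namespace("", DIDL_LITE)  # DIDL-Lite is serialized as the default namespace
ET.register_namespace("dc", DC)
ET.register_namespace("upnp", UPNP)

def didl_lite_video(item_id, parent_id, title, url, mime_type):
    """Describe one video item roughly as a UPnP media server might expose it."""
    root = ET.Element(f"{{{DIDL_LITE}}}DIDL-Lite")
    item = ET.SubElement(root, f"{{{DIDL_LITE}}}item",
                         id=item_id, parentID=parent_id, restricted="1")
    ET.SubElement(item, f"{{{DC}}}title").text = title  # Dublin Core element
    ET.SubElement(item, f"{{{UPNP}}}class").text = "object.item.videoItem"  # UPnP element
    # protocolInfo has four colon-separated fields:
    # protocol:network:contentFormat:additionalInfo
    res = ET.SubElement(item, f"{{{DIDL_LITE}}}res",
                        protocolInfo=f"http-get:*:{mime_type}:*")
    res.text = url
    return ET.tostring(root, encoding="unicode")
```

A control point that knows only the DIDL-Lite and Dublin Core namespaces can still display the title, which is the interoperability benefit of layering namespaces this way.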