Discussion

The high accuracy of the results in Figures 6.4(c) and 6.5(c) indicates that the Hopfield neural network has considerable potential for the accurate mapping of land cover class proportions within pixels and, consequently, for reducing the uncertainty of the soft classification from which it was derived. The increases in spatial resolution and accuracy over hard classification also demonstrate that the Hopfield neural network predictions reduced classification uncertainty by mapping class proportions at the sub-pixel scale. While soft classifications retain more information than hard classifications, the resultant predictions still display a large degree of uncertainty. The results in Figures 6.4(c) and 6.5(c) demonstrate that it is possible to map land cover at the sub-pixel scale, thus reducing the error and uncertainty inherent in maps produced solely by hard or soft classification.

Landsat TM

Visual assessment of Figure 6.4 suggests immediately that the Hopfield neural network has mapped the land cover more accurately than the hard classifier. By forcing each 30 x 30 m pixel to represent just one land cover class, Figure 6.4(b) appears blocky, and does not recreate the boundaries between classes well. The use of such large classification units, in comparison to the land cover features of interest, resulted in uncertainty as to whether the classification represented correctly the land cover. In contrast, the Hopfield neural network prediction map appeared to have recreated each land cover feature more precisely.

The statistics shown in Tables 6.1 and 6.3 confirm the above visual assessments. For all classes, the Hopfield neural network produced an increase in correlation coefficient over the hard classification, particularly for the two grass classes, with increases of greater than 0.1 in both cases. These increases in precision were mirrored by the closeness and RMSE values, which were less for the Hopfield neural network approach than for the hard classification for all classes, and overall. These statistics indicate that the Hopfield neural network approach produced a reduction in classification uncertainty over the hard classification-derived prediction.

SPOT HRV

Visual assessment of Figure 6.5 suggests that the Hopfield neural network approach was able to map land cover more accurately than the hard classifier. The 20 x 20 m pixels of the hard classification appeared insufficient to recreate accurately the boundaries between classes, whereas the Hopfield neural network approach appears to have achieved this accurately.

Tables 6.2 and 6.4 confirm the above assessments. Again, the Hopfield neural network approach maintained class area proportions more accurately than the hard classification, as shown by the AEP statistics. The Hopfield neural network approach also recreated each land cover class more precisely, with correlation coefficient values above 0.96 for all classes, and as high as 0.991 for the ploughed class. Such increases were also apparent in the closeness and RMSE values, indicating that the Hopfield neural network reduced the uncertainty shown in the hard-classified prediction.

Comparisons between the two Hopfield neural network predictions in Figures 6.4(c) and 6.5(c) show that, as expected, the prediction map derived from simulated SPOT HRV imagery produced the more accurate results. The fact that the SPOT HRV image had a finer spatial resolution meant that by using the same zoom factor, its prediction map was also of a finer spatial resolution. This enabled the Hopfield neural network to recreate each land cover feature more accurately and with less uncertainty about pixel composition.

Further work on the application of this technique to simulated and real Landsat TM imagery can be found in Tatem et al. (2002a) and Tatem et al. (2001b), respectively.

Super-resolution Land Cover Pattern Prediction

Each of the techniques described so far focuses on land cover features larger than a pixel (e.g. agricultural fields), which enables the utilization of information contained in surrounding pixels to produce super-resolution maps and, consequently, reduce uncertainty. However, this source of information is unavailable when examining imagery of land cover features that are smaller than a pixel (e.g. trees in a forest). Consequently, while these features can be detected within a pixel by soft classification techniques, surrounding pixels hold no information for inference of sub-pixel class location. Attempting to map such features is, therefore, an under-constrained task fraught with uncertainty. This section describes briefly a novel and effective approach introduced in Tatem et al. (2001c, 2002b), which forms an extension to the Hopfield neural network approach described previously. The technique is based on prior information on the spatial arrangement of land cover. Wherever prior information is available in a land cover classification situation, it should be utilized to further constrain the problem at hand, thereby reducing uncertainty. This section will show that the utilization of prior information can aid the derivation of realistic prediction maps. For this, a set of functions to match land cover distribution within each pixel to this prior information was built into the Hopfield neural network.

The new energy function

The goal and constraints of the sub-pixel mapping task were defined such that the network energy function was,

where ![]() represents the output values for neuron ![]() of the z semivariance functions.

The semivariance functions

The semivariance functions aim to model the spatial pattern of each land cover at the sub-pixel scale. Prior knowledge about the spatial arrangement of the land cover in question is utilized, in the form of semivariance values, calculated by:

where ![]() is the semivariance at lag l, and N(l) is the number of pixels at lag l from the centre pixel (h,i,j). The semivariance is calculated for n lags, where n = the zoom factor, z, of the network, from, for example, an aerial photograph. This provides information on the typical spatial distribution of the land cover under study, which can then be used to reduce uncertainty in land cover simulation from remotely sensed imagery at the sub-pixel scale. By using the values of ![]() from equation (17), the output of the centre neuron, ![]() which produces a semivariance of ![]() can be calculated using:

The semivariance function value for lag 1 is then given by:

If the output of neuron ![]() is lower than the target value, ![]() calculated in equation (18), a negative gradient is produced that corresponds to an increase in neuron output to counteract this problem. An overestimation of neuron output results in a positive gradient, producing a decrease in neuron output. Only when the neuron output is identical to the target output does a zero gradient occur, corresponding to ![]() in the energy function (equation (16)). The same calculations are carried out for lags 2 to z.
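The two ideas in this section, a semivariance value per lag and a gradient whose sign depends on whether a neuron is below or above its target, can be illustrated with a minimal sketch. This is not the implementation of Tatem et al.: the function names are hypothetical, the semivariance is the standard experimental estimator computed along rows and columns of a whole array, and the gradient is assumed to be the simple difference between output and target, which is the simplest form consistent with the sign behaviour described above.

```python
import numpy as np


def semivariance_at_lag(image, lag):
    """Experimental semivariance of a 2-D array at an integer lag.

    Assumption: pairs are taken along rows and columns only, and the
    estimator is the usual sum of squared differences divided by twice
    the number of pairs.
    """
    img = np.asarray(image, dtype=float)
    horizontal = img[:, lag:] - img[:, :-lag]   # pairs separated by `lag` columns
    vertical = img[lag:, :] - img[:-lag, :]     # pairs separated by `lag` rows
    squared = np.concatenate([horizontal.ravel() ** 2, vertical.ravel() ** 2])
    return squared.sum() / (2.0 * squared.size)


def variogram(image, zoom_factor):
    """Semivariance for lags 1..z, where z is the zoom factor of the network."""
    return [semivariance_at_lag(image, lag) for lag in range(1, zoom_factor + 1)]


def sv_gradient(output, target):
    """Assumed gradient form: negative when the neuron output is below the
    target (so the output should increase), positive when above, zero when equal."""
    return output - target


if __name__ == "__main__":
    # Hypothetical binary map of a single class (1 = class present).
    checkerboard = np.indices((4, 4)).sum(axis=0) % 2
    print(variogram(checkerboard, 2))
    print(sv_gradient(0.2, 0.6), sv_gradient(0.9, 0.6), sv_gradient(0.6, 0.6))
```

In practice the prior variogram would be computed from a reference source such as an aerial photograph, as the text describes, rather than from a synthetic pattern.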

Results

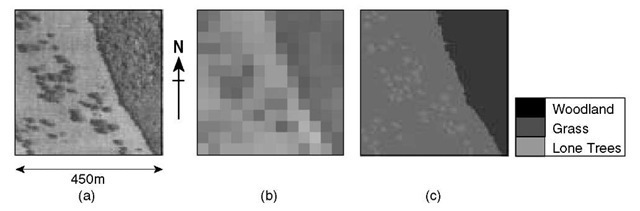

The new network set-up was tested on simulated SPOT HRV imagery. Figure 6.6(a) shows an aerial photograph of the chosen test area, which contained both a large area of woodland (a feature larger than the image support) and lone trees (smaller than the image support) amongst grassland. The reference image in Figure 6.6(c) was degraded, using a square mean filter, to produce three class proportion images that provided input to the Hopfield neural network.

Figure 6.6 Construction of simulated SPOT HRV image. (a) Aerial photograph of an agricultural area near Bath, UK. (b) Image with a spatial resolution equivalent to SPOT HRV (20 m). (c) Reference image derived from aerial photographs.
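The degradation of a reference image into class proportion images can be sketched as a block average of per-class indicator maps. The following is an illustrative sketch only: the function name, the integer class coding and the requirement that the image dimensions divide exactly by the filter size are assumptions made for the example, not details taken from the original study.

```python
import numpy as np


def class_proportions(reference, n_classes, factor):
    """Degrade a fine-resolution class map into coarse class proportion images.

    reference : 2-D array of integer class labels (0 .. n_classes - 1)
    factor    : side length, in fine pixels, of the square mean filter
    Returns an array of shape (n_classes, rows // factor, cols // factor).
    """
    ref = np.asarray(reference)
    rows, cols = ref.shape
    if rows % factor or cols % factor:
        raise ValueError("image dimensions must be multiples of the filter size")
    out = np.empty((n_classes, rows // factor, cols // factor))
    for c in range(n_classes):
        mask = (ref == c).astype(float)
        # Group fine pixels into factor x factor blocks and average each block.
        blocks = mask.reshape(rows // factor, factor, cols // factor, factor)
        out[c] = blocks.mean(axis=(1, 3))
    return out


if __name__ == "__main__":
    # Hypothetical 4 x 4 reference map with three classes.
    reference = np.array([[0, 0, 1, 1],
                          [0, 1, 1, 1],
                          [2, 2, 0, 0],
                          [2, 2, 0, 0]])
    proportions = class_proportions(reference, n_classes=3, factor=2)
    print(proportions)
```

Because every fine pixel belongs to exactly one class, the proportion images sum to one at each coarse pixel, which is the property a soft classification input is expected to have.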

Table 6.5 Accuracy assessment results for the hard classification map shown in Figure 6.7(b) (reproduced, with permission, from Tatem et al., ‘Super-resolution mapping of multiple scale land cover features using a Hopfield neural network’)

| Class | CC | AEP | S | RMSE |
|---|---|---|---|---|
| Lone Trees | N/A | 1.0 | 0.0627 | 0.251 |
| Woodland | 0.887 | 0.0095 | 0.0476 | 0.218 |
| Grass | 0.761 | -0.101 | 0.11 | 0.331 |
| Entire Image | | 0.00434 | 0.073 | 0.271 |

Table 6.6 Accuracy assessment results for the Hopfield neural network prediction map shown in Figure 6.7(c) (reproduced, with permission, from Tatem et al., ‘Super-resolution mapping of multiple scale land cover features using a Hopfield neural network,’ Proceedings of the International Geoscience and Remote Sensing Symposium, Sydney) © 2001 IEEE

| Class | CC | AEP | S | RMSE |
|---|---|---|---|---|
| Lone Trees | 0.43 | -0.161 | 0.0721 | 0.269 |
| Woodland | 0.985 | 0.0085 | 0.0066 | 0.0809 |
| Grass | 0.831 | 0.0136 | 0.0786 | 0.28 |
| Entire Image | | 0.00711 | 0.052 | 0.229 |

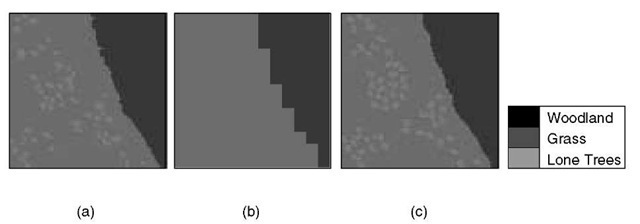

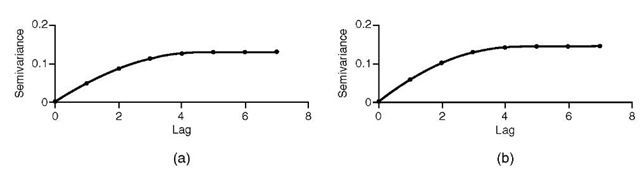

In addition, a variogram (Figure 6.8(a)) was calculated from a small section of Figure 6.6(c) to provide the prior spatial information on the lone tree class, required by the SV function. After convergence of the network with z = 7, the map shown in Figure 6.7(c) was produced, and a traditional hard classification was undertaken for comparison. Both images were compared to the reference data, and accuracy statistics calculated. These included correlation coefficients between classes, area error proportion, closeness and RMSE, all shown in Tables 6.5 and 6.6.
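The four accuracy statistics can be sketched for a single class as follows. The definitions here are assumptions chosen to be consistent with the tabulated values rather than formulas quoted from the source: AEP is taken as the summed difference between reference and prediction divided by the summed reference (which yields 1.0 when a class is never predicted), closeness (S) is taken as the mean squared difference, and RMSE as its square root. The function name and the binary input coding are hypothetical.

```python
import numpy as np


def accuracy_stats(reference, predicted):
    """Per-class accuracy statistics between two maps of the same shape.

    Both inputs hold the class membership of each pixel (e.g. 1 where the
    class is present, 0 elsewhere). All definitions are assumptions, see
    the accompanying text.
    """
    ref = np.asarray(reference, dtype=float).ravel()
    pred = np.asarray(predicted, dtype=float).ravel()
    diff = ref - pred

    # Area error proportion: bias in total class area (assumed sign convention).
    aep = diff.sum() / ref.sum() if ref.sum() != 0 else float("nan")
    # Closeness: mean squared difference per pixel (assumed definition).
    closeness = float(np.mean(diff ** 2))
    rmse = float(np.sqrt(closeness))
    # Correlation is undefined when either map is constant, e.g. when a
    # class is never predicted; report NaN in that case.
    if ref.std() == 0 or pred.std() == 0:
        cc = float("nan")
    else:
        cc = float(np.corrcoef(ref, pred)[0, 1])
    return {"cc": cc, "aep": float(aep), "closeness": closeness, "rmse": rmse}


if __name__ == "__main__":
    # Hypothetical case: the class exists in the reference but is never predicted.
    reference = np.array([1, 0, 1, 0])
    never_predicted = np.zeros(4)
    print(accuracy_stats(reference, never_predicted))
    print(accuracy_stats(reference, reference))
```

Under these assumed definitions, a class that is never predicted gives an undefined correlation coefficient and an AEP of 1.0, which matches the pattern reported for the lone tree class under hard classification.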

Discussion

The results show clearly that the super-resolution technique provided an increase in mapping accuracy over that derived with a traditional hard classification. Visual inspection of Figure 6.7 revealed that the hard classification failed to identify the lone tree class, and produced an uneven woodland boundary. The hard classifier attempted to minimize the probability of mis-classification and, as each lone tree covers less than half a pixel, no pixels were assigned to this class. In contrast, the Hopfield network prediction appeared to have identified and mapped both features correctly. This was confirmed after inspection of the accuracy statistics and variograms. While there was little difference between the AEP values in Tables 6.5 and 6.6, showing that both techniques maintained class area to a similar degree, the other statistics show how accurate the Hopfield network was. The woodland class was mapped accurately, with a correlation coefficient of 0.985, compared to just 0.887 using hard classification.
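The loss of the lone tree class under hard classification can be illustrated with a small sketch in which each pixel is assigned to the class with the largest proportion. This maximum-proportion rule is an assumption used for illustration; the source does not specify which hard classifier was applied, and the class ordering and proportion values below are hypothetical.

```python
import numpy as np


def harden(proportions):
    """Assign each pixel to the class with the largest proportion.

    proportions : array of shape (n_classes, rows, cols)
    Returns a 2-D array of class indices.
    """
    return np.argmax(np.asarray(proportions, dtype=float), axis=0)


if __name__ == "__main__":
    # Hypothetical class order: 0 = lone trees, 1 = woodland, 2 = grass.
    # Two coarse pixels: one with a lone tree covering 30% of the pixel,
    # one on the woodland boundary (60% woodland, 40% grass).
    proportions = np.array([[[0.3, 0.0]],
                            [[0.0, 0.6]],
                            [[0.7, 0.4]]])
    print(harden(proportions))
```

In this example the pixel containing the lone tree is labelled as grass, so the lone tree class disappears from the hard map even though the soft proportions record its presence.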

Figure 6.7 Result of super-resolution mapping. (a) Reference image. (b) Hard classification of the image shown in Figure 6.6(b) (spatial resolution 20 m). (c) Hopfield neural network prediction map (spatial resolution 2.9 m).

Figure 6.8 (a) Variogram of lone tree class in reference image (Figure 6.6(c)). (b) Variogram of lone tree class in Hopfield network prediction image (Figure 6.7(c)).

Overall image results also show an increase in accuracy, with closeness and RMSE values of just 0.052 and 0.229, respectively. The only low accuracy was for the lone tree class, with a correlation coefficient of just 0.43. However, as the aim of the SV function is to recreate the spatial arrangement of sub-pixel scale features, rather than to map their locations accurately, this was expected. The most appropriate way to test the performance of this function was, therefore, to compare the shapes of the variograms, which confirmed that a spatial arrangement of trees similar to that of the reference image had been derived (Figure 6.8). Such results demonstrate the importance of utilizing prior information to constrain inherently uncertain problems in order to increase the accuracy and reduce the uncertainty in predictions.

Summary

Accurate land cover maps with low levels of uncertainty are required for both scientific research and management. Remote sensing has the potential to provide the information needed to produce these maps. Traditionally, hard classification has been used to assign each pixel in a remotely sensed image to a single class to produce a thematic map. However, where mixed pixels are present, hard classification has been shown to be inappropriate, inaccurate and a source of uncertainty.

Soft classification techniques have been developed to predict the class composition of image pixels, but their outputs are images of great uncertainty that provide no indication of how these classes are distributed spatially within each pixel. This topic has described techniques for locating these class proportions and, consequently, reducing this uncertainty to produce super-resolution maps from remotely sensed imagery.

Pixels of mixed land cover composition have long been a source of inaccuracy and uncertainty within land cover classification from remotely sensed imagery. By attempting to match the spatial resolution of land cover classification predictions to the spatial frequency of the features of interest, it has been shown that this source of uncertainty can be reduced. Additionally, extending such approaches to include extra sources of prior information can further reduce uncertainty to produce accurate land cover predictions. This topic demonstrated advancement in the field of land cover classification from remotely sensed imagery and it is hoped that it stimulates further research to continue this advancement.

![tmp255-234_thumb[2][2] tmp255-234_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/02/tmp255234_thumb22_thumb.png)

![tmp255-239_thumb[2][2] tmp255-239_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/02/tmp255239_thumb22_thumb.png)

![tmp255-248_thumb[2][2] tmp255-248_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/02/tmp255248_thumb22_thumb.jpg)

![tmp255-249_thumb[2][2] tmp255-249_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/02/tmp255249_thumb22_thumb.jpg)

![tmp255-251_thumb[2][2] tmp255-251_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2012/02/tmp255251_thumb22_thumb.jpg)