Simulated Remotely Sensed Imagery

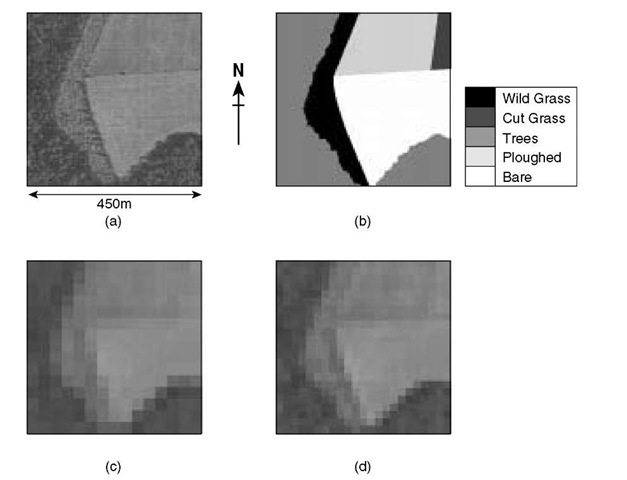

In this section, simulated remotely sensed imagery from two sensors, Landsat TM and SPOT HRV, is used to enable refinement of the technique and a clear demonstration of the workings of the network. The use of simulated imagery avoids the uncertainty inherent in real imagery caused by the sensor’s point spread function (PSF), atmospheric and geometric effects, and classification error. These effects are explored in Tatem et al. (2001b). Figure 6.1 shows the area near Bath, UK, used for this study, the ground or reference data, and the simulated satellite sensor images. The corresponding class proportion images are shown in Figures 6.2 and 6.3. All the proportion images were derived by degrading the reference data using a square mean filter, to avoid the potential problems of incorporating error from the process of soft classification. For the simulated Landsat TM imagery, each class within the reference data was degraded to produce pixels with a spatial resolution of 30 m. For the simulated SPOT HRV imagery, the reference data were degraded by a smaller amount to produce pixels of 20 m.
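The degradation step can be illustrated with a minimal sketch. It assumes the square mean filter is applied over non-overlapping windows, that the reference map is an integer-coded class array, and that the window size (`block`) is an exact multiple of the reference cell size; the function name, the toy map and the window size are hypothetical and not taken from the study.

```python
import numpy as np

def class_proportions(reference, n_classes, block):
    """Average one-hot class membership over non-overlapping block x block windows."""
    rows, cols = reference.shape
    # Crop so the map divides evenly into windows (an assumption of this sketch).
    rows -= rows % block
    cols -= cols % block
    ref = reference[:rows, :cols]
    # One-hot encode the class map: shape (n_classes, rows, cols).
    one_hot = (ref[None, :, :] == np.arange(n_classes)[:, None, None]).astype(float)
    # Split each axis into (coarse index, offset inside window), then average the offsets.
    blocks = one_hot.reshape(n_classes, rows // block, block, cols // block, block)
    return blocks.mean(axis=(2, 4))

# Toy 4 x 4 reference map with three classes, degraded by a factor of 2.
reference = np.array([
    [0, 0, 1, 1],
    [0, 1, 1, 1],
    [2, 2, 2, 2],
    [2, 2, 0, 0],
])
props = class_proportions(reference, n_classes=3, block=2)
print(props[0])  # proportion image for class 0
```

Because every fine cell belongs to exactly one class, the proportions in each coarse pixel sum to one across classes, which is the property the proportion constraint relies on.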

Network settings

The class proportion images shown in Figures 6.2 and 6.3 provided the inputs to the Hopfield neural network. These proportions were used to initialize the network and provide area predictions for the proportion constraint.

Figure 6.1 (a) Aerial photograph of an agricultural area near Bath, UK. (b) Reference data map derived from aerial photographs. (c) Image with a spatial resolution equivalent to Landsat TM (30 m). (d) Image with a spatial resolution equivalent to SPOT HRV (20 m)

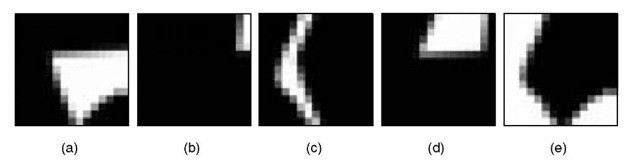

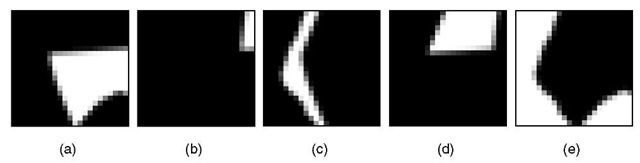

Figure 6.2 Class area proportions for the simulated Landsat TM imagery: (a) Bare, (b) Cut Grass, (c) Wild Grass, (d) Ploughed, (e) Trees. Pixel grey level represents class area proportion, ranging from white (100%) to black (0%)

For both sets of proportions, a zoom factor of z = 7 was used. This meant that from the simulated Landsat TM input images (with a spatial resolution of 30 m), a prediction map with a spatial resolution of 4.3 m was produced. From the simulated SPOT HRV input images (with a spatial resolution of 20 m), a prediction map with a spatial resolution of 2.9 m was produced. In both cases, the constraint weightings k1, k2, k3 and k4 were set to 1.0, to ensure that no single function had a dominant effect on the energy function.
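The resolutions quoted above follow directly from dividing the input pixel size by the zoom factor. The short sketch below reproduces that arithmetic; the function name is hypothetical, and the sub-pixel count simply reflects that each input pixel is subdivided into z × z cells.

```python
def fine_resolution(coarse_resolution_m, zoom):
    """Pixel size of the prediction map for a given input pixel size and zoom factor."""
    return coarse_resolution_m / zoom

zoom = 7
for sensor, coarse in (("Landsat TM", 30.0), ("SPOT HRV", 20.0)):
    fine = fine_resolution(coarse, zoom)
    print(f"{sensor}: {coarse:.0f} m -> {fine:.1f} m, "
          f"{zoom * zoom} sub-pixels per input pixel")
```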

Figure 6.3 Class area proportions for the simulated SPOT HRV imagery: (a) Bare, (b) Cut Grass, (c) Wild Grass, (d) Ploughed, (e) Trees. Pixel grey level represents class area proportion, ranging from white (100%) to black (0%)

Hard classification

To evaluate the success of the Hopfield neural network technique, the traditional method of producing a land cover map from a satellite sensor image was also carried out for comparison. This involved undertaking a ‘hard’ classification of the imagery using a maximum likelihood classifier (Campbell, 1996). Comparison of the results of this classification to the Hopfield neural network approach should highlight the error and uncertainty issues which arise from using hard classification on imagery where the support is large relative to the land cover features.
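For readers unfamiliar with the method, the following is a minimal sketch of a Gaussian maximum likelihood classifier. It assumes equal prior probabilities and a multivariate normal distribution per class, which is the common textbook form; the source does not give the classifier settings, and the training data, band values and function names here are synthetic and hypothetical.

```python
import numpy as np

def fit_gaussians(samples, labels, n_classes):
    """Estimate a mean vector and covariance matrix per class from training pixels."""
    stats = []
    for q in range(n_classes):
        x = samples[labels == q]
        stats.append((x.mean(axis=0), np.cov(x, rowvar=False)))
    return stats

def classify(pixels, stats):
    """Assign each pixel wholly to the class with the highest Gaussian log-likelihood."""
    scores = []
    for mean, cov in stats:
        diff = pixels - mean
        inv = np.linalg.inv(cov)
        # Squared Mahalanobis distance of every pixel to the class mean.
        maha = np.einsum("ij,jk,ik->i", diff, inv, diff)
        # Log-likelihood up to a constant shared by all classes (equal priors assumed).
        scores.append(-0.5 * (np.log(np.linalg.det(cov)) + maha))
    return np.argmax(np.stack(scores), axis=0)

# Synthetic two-band training pixels for two well-separated classes.
rng = np.random.default_rng(0)
class_0 = rng.normal([0.2, 0.3], 0.02, size=(50, 2))
class_1 = rng.normal([0.6, 0.7], 0.02, size=(50, 2))
samples = np.vstack([class_0, class_1])
labels = np.repeat([0, 1], 50)

stats = fit_gaussians(samples, labels, n_classes=2)
predicted = classify(np.array([[0.21, 0.29], [0.58, 0.72]]), stats)
print(predicted)
```

Whatever the mixture inside a pixel, the classifier returns a single label for it, which is the source of the error discussed above when the pixel is large relative to the land cover features.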

Accuracy assessment

Four measures of accuracy were estimated to assess the difference between each network prediction and the reference images. These were:

(i) Area Error Proportion (AEP)

One of the simplest measures of agreement between a set of known proportions in matrix y and a set of predicted proportions in matrix a is the area error proportion (AEP) per class,

$$\mathrm{AEP}_q = \frac{\sum_{i=1}^{n} (y_{iq} - a_{iq})}{\sum_{i=1}^{n} a_{iq}}$$

where q is the class and n is the total number of pixels. This statistic informs about bias in the prediction image.
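A minimal sketch of the AEP for a single class is given below. It assumes the statistic is the summed difference between known and predicted proportions divided by the summed predicted proportions; that normalisation is an assumption of this sketch, and the function name and sample values are hypothetical.

```python
import numpy as np

def area_error_proportion(y, a):
    """AEP for one class: sum of (known - predicted) over sum of predicted."""
    y = np.asarray(y, dtype=float)
    a = np.asarray(a, dtype=float)
    return (y - a).sum() / a.sum()

# The class is over-predicted in the second pixel, so the AEP is negative.
aep = area_error_proportion([0.5, 0.5, 1.0, 0.0], [0.5, 1.0, 1.0, 0.0])

# Errors of opposite sign cancel: every pixel is wrong, yet the AEP is zero.
aep_cancelled = area_error_proportion([1.0, 0.0], [0.0, 1.0])
print(aep, aep_cancelled)
```

The second case shows why the AEP is read as a measure of bias only: it compares total class area and says nothing about where the class was placed.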

(ii) Correlation Coefficient (CC)

The correlation coefficient, r, measures the amount of association between a target, y, and predicted, a, set of proportions,

$$r_q = \frac{c_{y a_q}}{s_{y_q} \, s_{a_q}}$$

where $c_{y a_q}$ is the covariance between y and a for class q, and $s_{y_q}$ and $s_{a_q}$ are the standard deviations of y and a for class q. This statistic informs about the prediction variance.
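The correlation coefficient for one class can be sketched as the covariance of the two proportion sets divided by the product of their standard deviations. The function name and sample values below are hypothetical; the result is checked against NumPy's own `np.corrcoef`.

```python
import numpy as np

def correlation_coefficient(y, a):
    """Pearson correlation between known (y) and predicted (a) proportions of a class."""
    y = np.asarray(y, dtype=float).ravel()
    a = np.asarray(a, dtype=float).ravel()
    covariance = np.mean((y - y.mean()) * (a - a.mean()))
    # np.std defaults to the population form, matching the covariance above.
    return covariance / (y.std() * a.std())

y = np.array([0.0, 0.5, 1.0, 1.0])
a = np.array([0.1, 0.4, 0.9, 1.0])
r = correlation_coefficient(y, a)

# A constant offset leaves r at 1: the statistic does not respond to bias.
r_offset = correlation_coefficient(y, y + 0.2)
print(r, r_offset)
```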

(iii) Closeness (S)

This measures the separation of the two data sets, per pixel, based on the relative proportion of each class in the pixel. It is calculated as:

$$S_i = \frac{\sum_{q=1}^{c} (y_{iq} - a_{iq})^2}{c}$$

where $y_{iq}$ is the proportion of class q in pixel i from the reference data, $a_{iq}$ is the measure of the strength of membership to class q, taken to represent the proportion of the class in the pixel from the soft classification, and c is the total number of classes.
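A minimal sketch of the closeness measure follows. It assumes the commonly used form in which the squared differences between reference and predicted proportions are summed over the classes of a pixel and divided by the number of classes; the function name and sample values are hypothetical.

```python
import numpy as np

def closeness(y, a):
    """Per-pixel closeness for arrays of shape (n_pixels, n_classes)."""
    y = np.asarray(y, dtype=float)
    a = np.asarray(a, dtype=float)
    n_classes = y.shape[1]
    return ((y - a) ** 2).sum(axis=1) / n_classes

reference = [[1.0, 0.0, 0.0],   # pure pixel, predicted exactly
             [0.5, 0.5, 0.0]]   # mixed pixel, predicted as pure class 1
predicted = [[1.0, 0.0, 0.0],
             [0.0, 1.0, 0.0]]
s = closeness(reference, predicted)
print(s)
```

A value of zero indicates identical class composition in the pixel, and larger values indicate greater separation.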

(iv) Root Mean Square Error (RMSE)

The root mean square error (RMSE) per class,

$$\mathrm{RMSE}_q = \sqrt{\frac{\sum_{i=1}^{n} (y_{iq} - a_{iq})^2}{n}}$$

informs about the inaccuracy of the prediction (bias and variance).

Results

Illustrative results were produced using the Hopfield network run on the class proportions derived from the simulated satellite sensor imagery.
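As an aid to reading the RMSE columns in the tables that follow, the sketch below computes the per-class RMSE and checks that its square splits into a squared bias term and an error variance term, which is why the statistic reflects both. The function name and sample values are hypothetical.

```python
import numpy as np

def rmse(y, a):
    """Root mean square error between known (y) and predicted (a) proportions."""
    error = np.asarray(y, dtype=float) - np.asarray(a, dtype=float)
    return np.sqrt(np.mean(error ** 2))

y = np.array([0.0, 0.5, 1.0, 1.0])
a = np.array([0.1, 0.4, 0.9, 1.2])

error = y - a
bias_squared = error.mean() ** 2   # systematic over- or under-prediction
variance = error.var()             # spread of the errors about their mean

print(rmse(y, a) ** 2, bias_squared + variance)
```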

Landsat TM

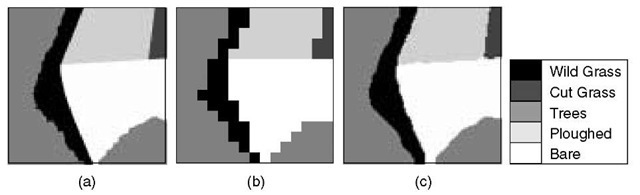

After convergence of the network with a zoom factor of z = 7, a prediction image was produced (Figure 6.4(c)) with spatial resolution seven times finer than that of the input class proportions in Figure 6.2. In addition, a maximum likelihood classification was produced for comparison (Figure 6.4(b)). Per-class and overall accuracy statistics were calculated to assess the differences between each map and the reference data (Tables 6.1 and 6.3).

Figure 6.4 Result of super-resolution mapping. (a) Reference image. (b) Hard classification of the simulated Landsat TM imagery (spatial resolution 30 m). (c) Hopfield neural network prediction map (spatial resolution 4.3 m)

Table 6.1 Accuracy assessment results for the Landsat TM hard classification map shown in Figure 6.4(b)

| Class | CC | AEP | S | RMSE |
| --- | --- | --- | --- | --- |
| Bare | 0.934 | -0.055 | 0.029 | 0.169 |
| Cut Grass | 0.871 | 0.037 | 0.008 | 0.089 |
| Wild Grass | 0.832 | 0.058 | 0.038 | 0.194 |
| Ploughed | 0.917 | 0.059 | 0.024 | 0.153 |
| Trees | 0.931 | 0.002 | 0.032 | 0.178 |
| Entire Image | | 0.0072 | 0.026 | 0.161 |

Table 6.2 Accuracy assessment results for the SPOT HRV hard classification map shown in Figure 6.5(b)

| Class | CC | AEP | S | RMSE |
| --- | --- | --- | --- | --- |
| Bare | 0.954 | 0.019 | 0.019 | 0.139 |
| Cut Grass | 0.907 | 0.023 | 0.006 | 0.076 |
| Wild Grass | 0.899 | -0.011 | 0.023 | 0.153 |
| Ploughed | 0.956 | 0.008 | 0.013 | 0.113 |
| Trees | 0.963 | -0.009 | 0.017 | 0.131 |
| Entire Image | | 0.0025 | 0.016 | 0.125 |

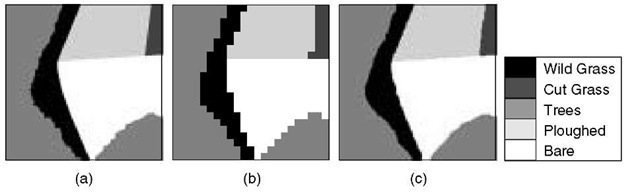

SPOT HRV

After convergence of the network with a zoom factor of z = 7, a prediction image was produced (Figure 6.5(c)) with spatial resolution seven times finer than that of the input class proportions in Figure 6.3. In addition, a maximum likelihood classification was produced for comparison (Figure 6.5(b)). Per-class and overall accuracy statistics were calculated to assess the differences between each map and the reference data (Tables 6.2 and 6.4).

Figure 6.5 Result of super-resolution mapping. (a) Reference image. (b) Hard classification of the simulated SPOT HRV imagery (spatial resolution 20 m). (c) Hopfield neural network prediction map (spatial resolution 2.9 m)

Table 6.3 Accuracy assessment results for the Landsat TM Hopfield neural network prediction map shown in Figure 6.4(c)

| Class | CC | AEP | S | RMSE |
| --- | --- | --- | --- | --- |
| Bare | 0.98 | 0.007 | 0.008 | 0.091 |
| Cut Grass | 0.976 | 0.012 | 0.002 | 0.038 |
| Wild Grass | 0.96 | -0.021 | 0.009 | 0.096 |
| Ploughed | 0.986 | 0.001 | 0.004 | 0.064 |
| Trees | 0.983 | -0.002 | 0.008 | 0.088 |
| Entire Image | | 0.0012 | 0.006 | 0.079 |

Table 6.4 Accuracy assessment results for the SPOT HRV Hopfield neural network prediction map shown in Figure 6.5(c)

| Class | CC | AEP | S | RMSE |
| --- | --- | --- | --- | --- |
| Bare | 0.988 | -0.001 | 0.005 | 0.07 |
| Cut Grass | 0.98 | 0.026 | 0.001 | 0.035 |
| Wild Grass | 0.968 | -0.012 | 0.007 | 0.086 |
| Ploughed | 0.991 | -0.004 | 0.002 | 0.051 |
| Trees | 0.985 | -0.004 | 0.007 | 0.083 |
| Entire Image | | 0.001 | 0.005 | 0.069 |
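One way to compare the two approaches is to express the change in whole-image RMSE as a relative reduction. The sketch below does this using the "Entire Image" RMSE values from Tables 6.1 to 6.4; the relative-reduction summary and the names used are illustrative additions and not a statistic reported in the source.

```python
# Whole-image RMSE values copied from the "Entire Image" rows of Tables 6.1 to 6.4.
entire_image_rmse = {
    "Landsat TM": {"hard": 0.161, "hopfield": 0.079},
    "SPOT HRV": {"hard": 0.125, "hopfield": 0.069},
}

def relative_reduction(before, after):
    """Fraction by which a value has fallen relative to its starting level."""
    return (before - after) / before

rmse_reduction = {
    sensor: relative_reduction(values["hard"], values["hopfield"])
    for sensor, values in entire_image_rmse.items()
}

for sensor, reduction in rmse_reduction.items():
    print(f"{sensor}: whole-image RMSE lower by {reduction:.1%}")
```

The same helper can be applied to any other column of the tables, provided the two values being compared refer to the same sensor.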

![tmp255-220_thumb[2] tmp255-220_thumb[2]](http://what-when-how.com/wp-content/uploads/2012/02/tmp255220_thumb2_thumb.jpg)

![tmp255-221_thumb[2] tmp255-221_thumb[2]](http://what-when-how.com/wp-content/uploads/2012/02/tmp255221_thumb2_thumb.png)

![tmp255-226_thumb[2] tmp255-226_thumb[2]](http://what-when-how.com/wp-content/uploads/2012/02/tmp255226_thumb2_thumb.png)

![tmp255-231_thumb[2] tmp255-231_thumb[2]](http://what-when-how.com/wp-content/uploads/2012/02/tmp255231_thumb2_thumb.png)