In 1885, Francis Galton, a British biologist, published a paper in which he demonstrated with graphs and tables that the children of very tall parents were, on average, shorter than their parents, while the children of very short parents tended to exceed their parents in height (cited in Walker 1929). Galton referred to this as “reversion” or the “law of regression” (i.e., regression to the average height of the species). Galton also saw in his graphs and tables a feature that he named the “co-relation” between variables. The statures of kinsmen are “co-related” variables, Galton stated, meaning, for example, that when the father was taller than average, his son was likely also to be taller than average. Although Galton devised a way of summarizing in a single figure the degree of “co-relation” between two variables, it was Galton’s associate Karl Pearson who developed the “coefficient of correlation,” as it is now applied. Galton’s original interest, the phenomenon of regression toward the mean, is no longer germane to contemporary correlation and regression analysis, but the term “regression” has been retained with a modified meaning.

Although Galton and Pearson originally focused their attention on bivariate (two-variable) correlation and regression, in current applications more than two variables are typically incorporated into the analysis to yield partial correlation coefficients, multiple regression analysis, and several related techniques that facilitate the informed interpretation of the linkages between pairs of variables. This summary begins with two variables and then moves to the consideration of more than two variables.

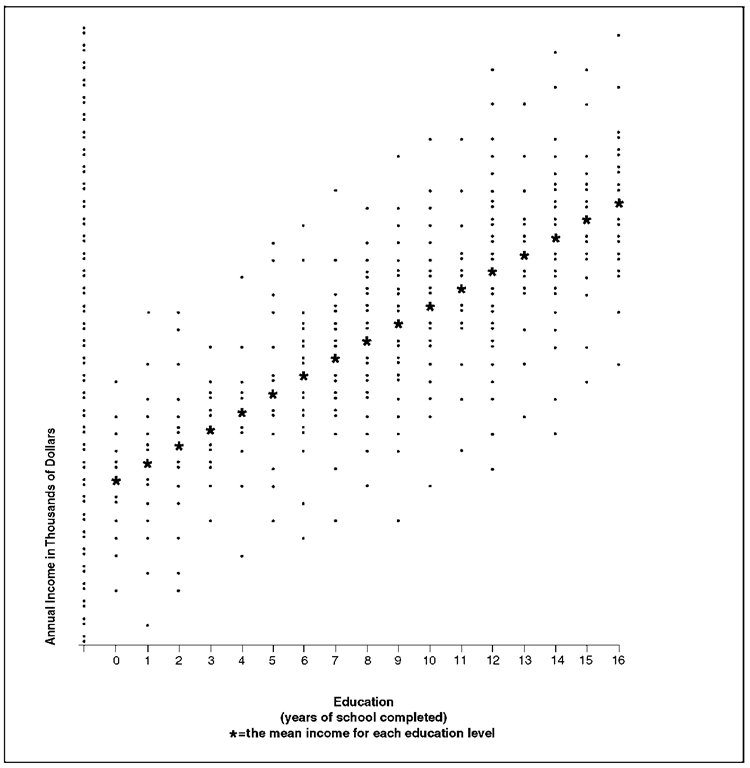

Consider a very large sample of cases, with a measure of some variable, X, and another variable, Y, for each case. To make the illustration more concrete, consider a large number of adults and, for each, a measure of their education (years of school completed = X) and their income (dollars earned over the past twelve months = Y). Subdivide these adults by years of school completed, and for each such subset compute a mean income for a given level of education. Each such mean is called a conditional mean and is represented by $\bar{Y}|X$, that is, the mean of Y for a given value of X.
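As a minimal sketch of the grouping step just described, the following Python computes one conditional mean of Y for each value of X. The data are invented for illustration, and the helper name `conditional_means` is an assumption rather than anything taken from the source.

```python
from collections import defaultdict
from statistics import mean


def conditional_means(xs, ys):
    """Return a dict mapping each X value to the mean of Y among cases with that X."""
    groups = defaultdict(list)
    for x, y in zip(xs, ys):
        groups[x].append(y)
    return {x: mean(group) for x, group in sorted(groups.items())}


# Hypothetical cases: years of school completed (X) and annual income in dollars (Y).
education = [10, 10, 12, 12, 12, 16, 16]
income = [20000, 24000, 30000, 34000, 32000, 50000, 54000]

cond_means = conditional_means(education, income)
for years, avg in cond_means.items():
    print(years, avg)
```

With a sample this small, each conditional mean rests on only two or three cases, which is exactly the instability that motivates fitting a single line to all cases at once.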

Imagine now an ordered arrangement of the subsets from left to right according to the years of school completed, with zero years of school on the left, followed by one year of school, and so on through the maximum number of years of school completed in this set of cases, as shown in Figure 1.

Assume that each of the $\bar{Y}|X$ values (i.e., the mean income for each level of education) falls on a straight line, as in Figure 1. This straight line is the regression line of Y on X. Thus the regression line of Y on X is the line that passes through the mean Y for each value of X (for example, the mean income for each educational level).

If this regression line is a straight line, as shown in Figure 1, then the income associated with each additional year of school completed is the same whether that additional year of school represents an increase, for example, from six to seven years of school completed or from twelve to thirteen years. While one can analyze curvilinear regression, a straight regression line greatly simplifies the analysis. Some (but not all) curvilinear regressions can be made into straight-line regressions by a relatively simple transformation of one of the variables (e.g., taking a logarithm). The common assumption that the regression line is a straight line is known as the assumption of rectilinearity, or more commonly (even if less precisely) as the assumption of linearity.

The slope of the regression line reflects one feature of the relationship between two variables. If the regression line slopes “uphill,” as in Figure 1, then Y increases as X increases, and the steeper the slope, the more Y increases for each unit increase in X. In contrast, if the regression line slopes “downhill” as one moves from left to right, Y decreases as X increases, and the steeper the slope, the more Y decreases for each unit increase in X. If the regression line does not slope at all but is perfectly horizontal, then there is no relationship between the variables. But the slope does not tell how closely the two variables are “co-related” (i.e., how closely the values of Y cluster around the regression line).

A regression line may be represented by a simple mathematical formula for a straight line. Thus:

$$\bar{Y}|X = a_{yx} + b_{yx}X \qquad (1)$$

where $\bar{Y}|X$ = the mean Y for a given value of X, or the regression line values of Y given X; $a_{yx}$ = the Y intercept (i.e., the predicted value of $\bar{Y}|X$ when X = 0); and $b_{yx}$ = the slope of the regression of Y on X (i.e., the amount by which $\bar{Y}|X$ increases or decreases, depending on whether b is positive or negative, for each one-unit increase in X).

Figure 1. Hypothetical Regression of Income on Education

Equation 1 is commonly written in a slightly different form:

$$\hat{Y} = a_{yx} + b_{yx}X \qquad (2)$$

where $\hat{Y}$ = the regression prediction for Y for a given value of X, and $a_{yx}$ and $b_{yx}$ are as defined above, with $\hat{Y}$ substituted for $\bar{Y}|X$.

Equations 1 and 2 are theoretically equivalent. Equation 1 highlights the fact that the points on the regression line are assumed to represent conditional means (i.e., the mean Y for a given X). Equation 2 highlights the fact that points on the regression line are not ordinarily found by computing a series of conditional means, but are found by alternative computational procedures.

Typically the number of cases is insufficient to yield a stable estimate of each of a series of conditional means, one for each level of X. Means based on a relatively small number of cases are inaccurate because of sampling variation, and a line connecting such unstable conditional means may not be straight even though the true regression line is. Hence, one assumes that the regression line is a straight line unless there are compelling reasons for assuming otherwise; one can then use the X and Y values for all cases together to estimate the Y intercept, $a_{yx}$, and the slope, $b_{yx}$, of the regression line that is best fit by the criterion of least squares. This criterion requires predicted values for Y that will minimize the sum of squared deviations between the predicted values and the observed values. Hence, a “least squares” regression line is the straight line that yields a lower sum of squared deviations between the predicted (regression line) values and the observed values than does any other straight line. One can find the parameters of the “least squares” regression line for a given set of X and Y values by computing

$$b_{yx} = \frac{\sum (X - \bar{X})(Y - \bar{Y})}{\sum (X - \bar{X})^2} \qquad (3)$$

$$a_{yx} = \bar{Y} - b_{yx}\bar{X} \qquad (4)$$

These parameters (substituted in equation 2) describe the straight regression line that best fits by the criterion of least squares. By substituting the X value for a given case into equation 2, one can then find $\hat{Y}$ for that case. Otherwise stated, once $a_{yx}$ and $b_{yx}$ have been computed, equation 2 will yield a precise predicted income level ($\hat{Y}$) for each education level.
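The least squares computation can be sketched in a few lines of Python. This is an illustration under stated assumptions, not the source's own procedure: the five data points are invented, and the function names `least_squares` and `sum_sq_dev` are hypothetical. The slope is the sum of cross-products of deviations divided by the sum of squared deviations of X, and the intercept follows from the two means.

```python
from statistics import mean


def least_squares(xs, ys):
    """Return (intercept, slope) of the least squares regression of Y on X."""
    x_bar, y_bar = mean(xs), mean(ys)
    cross = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    slope = cross / sum((x - x_bar) ** 2 for x in xs)
    intercept = y_bar - slope * x_bar
    return intercept, slope


def sum_sq_dev(xs, ys, intercept, slope):
    """Sum of squared deviations between observed Y and the line's predictions."""
    return sum((y - (intercept + slope * x)) ** 2 for x, y in zip(xs, ys))


# Hypothetical cases: years of school completed and annual income in dollars.
education = [8, 10, 12, 14, 16]
income = [18000, 26000, 30000, 41000, 45000]

a, b = least_squares(education, income)
predicted = [a + b * x for x in education]

best = sum_sq_dev(education, income, a, b)
# A line with a slightly different slope fits worse by the least squares criterion.
worse = sum_sq_dev(education, income, a, b + 100)
print(a, b, best < worse)
```

Comparing against a single perturbed line does not prove optimality, but it makes the criterion concrete: any departure from the computed intercept and slope raises the sum of squared deviations.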

These predicted values may be relatively good or relatively poor predictions, depending on whether the actual values of Y cluster closely around the predicted values on the regression line or spread themselves widely around that line. The variance of the Y values (income levels in this illustration) around the regression line will be relatively small if the Y values cluster closely around the predicted values (i.e., when the regression line provides relatively good predictions). On the other hand, the variance of Y values around the regression line will be relatively large if the Y values are spread widely around the predicted values (i.e., when the regression line provides relatively poor predictions). The variance of the Y values around the regression predictions is defined as the mean of the squared deviations between them. The variances around each of the values along the regression line are assumed to be equal. This is known as the assumption of homoscedasticity (homogeneous scatter or variance). When the variances of the Y values around the regression predictions are larger for some values of X than for others (i.e., when homoscedasticity is not present), then X serves as a better predictor of Y in one part of its range than in another. The homoscedasticity assumption is usually at least approximately true.

The variance around the regression line is a measure of the accuracy of the regression predictions. But it is not an easily interpreted measure of the degree of correlation because it has not been “normed” to vary within a limited range. Two other measures, closely related to each other, provide such a normed measure. These measures, which are always between zero and one in absolute value (i.e., sign disregarded), are: (a) the correlation coefficient, r, which is the measure devised by Karl Pearson; and (b) the square of that coefficient, $r^2$, which, unlike r, can be interpreted as a percentage.

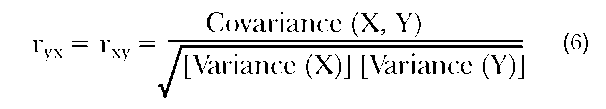

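Pearson’s correlation coefficient, r, can be computed using the following formula:

$$r = \frac{\sum (X - \bar{X})(Y - \bar{Y})/N}{\sqrt{\left[\sum (X - \bar{X})^2/N\right]\left[\sum (Y - \bar{Y})^2/N\right]}} \qquad (5)$$

The numerator in equation 5 is known as the covariance of X and Y. The denominator is the square root of the product of the variances of X and Y. Hence, equation 5 may be rewritten:

$$r = \frac{s_{xy}}{\sqrt{s_x^2\, s_y^2}} \qquad (6)$$

where $s_{xy}$ denotes the covariance of X and Y, and $s_x^2$ and $s_y^2$ denote their variances.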

While equation 5 may serve as a computing guide, neither equation 5 nor equation 6 tells why it describes the degree to which two variables covary. Such understanding may be enhanced by stating that r is the slope of the least squares regression line when both X and Y have been transformed into “standard deviates” or “z measures.” Each value in a distribution may be transformed into a “z measure” by finding its deviation from the mean of the distribution and dividing by the standard deviation (the square root of the variance) of that distribution. Thus

$$Z_x = \frac{X - \bar{X}}{s_x} \qquad (7)$$

with $Z_y$ defined in the same way from Y.

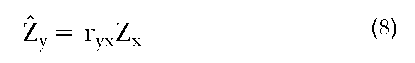

When both the X and Y measures have been thus standardized, $r_{yx} = r_{xy}$ is the slope of the regression of Y on X, and of X on Y. For standard deviates, the Y intercept is necessarily 0, and the following equation holds:

$$\hat{Z}_y = r_{yx} Z_x \qquad (8)$$

Like the slope byx, for unstandardized measures, the slope for standardized measures, r, may be positive or negative. But unlike byx, r is always between 0 and 1.0 in absolute value. The correlation coefficient, r, will be 0 when the standardized regression line is horizontal so that the two variables do not covary at all—and, incidentally, when the regression toward the mean, which was Galton’s original interest, is complete. On the other hand, r will be 1.0 or – 1.0 when all values of Zy fall precisely on the regression line rZx. This means that when r = + 1.0, for every case Zx = Zy—that is, each case deviates from the mean on X by exactly as much and in the same direction as it deviates from the mean on Y, when those deviations are measured in their respective standard deviation units. And when r = – 1.0, the deviations from the mean measured in standard deviation units are exactly equal, but they are in opposite directions. (It is also true that when r = 1.0, there is no regression toward the mean, although this is very rarely of any interest in contemporary applications.) More commonly, r will be neither 0 nor 1.0 in absolute value but will fall between these extremes, closer to 1.0 in absolute value when the Zy values cluster closely around the regression line, which, in this standardized form, implies that the slope will be near 1.0, and closer to 0 when they scatter widely around the regression line.
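The claim that r is the regression slope for standardized measures can be checked numerically. The sketch below uses invented data and hypothetical helper names (`standardize`, `slope`, `pearson_r`); it computes r from the covariance and variances, then fits least squares slopes to the z measures in both directions.

```python
from math import sqrt
from statistics import mean


def standardize(values):
    """Convert raw values to z measures using the population standard deviation."""
    m = mean(values)
    sd = sqrt(sum((v - m) ** 2 for v in values) / len(values))
    return [(v - m) / sd for v in values]


def slope(xs, ys):
    """Least squares slope of the regression of ys on xs."""
    x_bar, y_bar = mean(xs), mean(ys)
    cross = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    return cross / sum((x - x_bar) ** 2 for x in xs)


def pearson_r(xs, ys):
    """Covariance divided by the square root of the product of the variances."""
    n = len(xs)
    x_bar, y_bar = mean(xs), mean(ys)
    cov = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys)) / n
    var_x = sum((x - x_bar) ** 2 for x in xs) / n
    var_y = sum((y - y_bar) ** 2 for y in ys) / n
    return cov / sqrt(var_x * var_y)


# Hypothetical cases: years of school completed and annual income in dollars.
education = [8, 10, 12, 14, 16]
income = [18000, 26000, 30000, 41000, 45000]

r = pearson_r(education, income)
z_x, z_y = standardize(education), standardize(income)
slope_y_on_x = slope(z_x, z_y)  # regression of Zy on Zx
slope_x_on_y = slope(z_y, z_x)  # regression of Zx on Zy
print(r, slope_y_on_x, slope_x_on_y)
```

The unstandardized slope of income on education depends on the units (dollars per year of school), whereas the two standardized slopes coincide with each other and with r.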

But while r has a precise meaning (it is the slope of the regression line for standardized measures), that meaning is not intuitively understandable as a measure of the degree to which one variable can be accurately predicted from the other. The square of the correlation coefficient, $r^2$, does have such an intuitive meaning. Briefly stated, $r^2$ indicates the percent of the possible reduction in prediction error (measured by the variance of actual values around predicted values) that is achieved by shifting from (a) $\bar{Y}$ as the prediction, to (b) the regression line values as the prediction. Otherwise stated,

$$r^2 = \frac{(\text{variance of } Y \text{ around } \bar{Y}) - (\text{variance of } Y \text{ around } \hat{Y})}{\text{variance of } Y \text{ around } \bar{Y}} \qquad (9)$$

The denominator of equation 9 is called the total variance of Y. It is the sum of two components: (1) the variance of the Y values around $\hat{Y}$, and (2) the variance of the $\hat{Y}$ values around $\bar{Y}$. Hence the numerator of equation 9 is equal to the variance of the $\hat{Y}$ values (regression values) around $\bar{Y}$. Therefore

$$r^2 = \frac{\text{variance of } \hat{Y} \text{ around } \bar{Y}}{\text{variance of } Y \text{ around } \bar{Y}} \qquad (10)$$

Even though it has become common to refer to $r^2$ as the proportion of variance “explained,” such terminology should be used with caution. There are several possible reasons for two variables to be correlated, and some of these reasons are inconsistent with the connotations ordinarily attached to terms such as “explanation” or “explained.” One possible reason for the correlation between two variables is that X influences Y. This is presumably the reason for the positive correlation between education and income; higher education facilitates earning a higher income, and it is appropriate to refer to a part of the variation in income as being “explained” by variation in education. But there is also the possibility that two variables are correlated because both are measures of the same dimension. For example, among twentieth-century nation-states, there is a high correlation between the energy consumption per capita and the gross national product per capita. These two variables are presumably correlated because both are indicators of the degree of industrial development. Hence, one variable does not “explain” variation in the other, if “explain” has any of its usual meanings. And two variables may be correlated because both are influenced by a common cause, in which case the two variables are “spuriously correlated.” For example, among elementary-school children, reading ability is positively correlated with shoe size. This correlation appears not because large feet facilitate learning, and not because both are measures of the same underlying dimension, but because both are influenced by age. As they grow older, schoolchildren learn to read better and their feet grow larger. Hence, shoe size and reading ability are “spuriously correlated” because of the dependence of both on age. It would therefore be misleading to conclude from the correlation between shoe size and reading ability that part of the variation in reading ability is “explained” by variation in shoe size, or vice versa.
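Whatever substantive reading is given to “explained,” the arithmetic behind the squared correlation described earlier is easy to verify. The following sketch, with invented data and a hypothetical helper `variance_around`, splits the total variance of Y into the part around the regression line and the part carried by the regression values themselves.

```python
from statistics import mean


def variance_around(values, centers):
    """Mean squared deviation of each value from its paired center."""
    return sum((v - c) ** 2 for v, c in zip(values, centers)) / len(values)


# Hypothetical cases: years of school completed and annual income in dollars.
x = [8, 10, 12, 14, 16]
y = [18000, 26000, 30000, 41000, 45000]

# Least squares line of Y on X.
x_bar, y_bar = mean(x), mean(y)
b = (sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y))
     / sum((xi - x_bar) ** 2 for xi in x))
a = y_bar - b * x_bar
y_hat = [a + b * xi for xi in x]

grand_mean = [y_bar] * len(y)
total = variance_around(y, grand_mean)          # Y around its mean
unexplained = variance_around(y, y_hat)         # Y around the regression line
explained = variance_around(y_hat, grand_mean)  # regression values around the mean

r_squared = (total - unexplained) / total
print(total, unexplained, explained, r_squared)
```

The two components add up to the total, so the reduction-in-error ratio and the ratio of regression variance to total variance are the same number.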

In the attempt to discover the reasons for the correlation between two variables, it is often useful to include additional variables in the analysis. Several techniques are available for doing so.

PARTIAL CORRELATION

One may wish to explore the correlation between two variables with a third variable ”held constant.” The partial correlation coefficient may be used for this purpose. If the only reason for the correlation between shoe size and reading ability is because both are influenced by variation in age, then the correlation should disappear when the influence of variation in age is made nil—that is, when age is held constant. Given a sufficiently large number of cases, age could be held constant by considering each age grouping separately—that is, one could examine the correlation between shoe size and reading ability among children who are six years old, among children who are seven years old, eight years old, etc. (And one presumes that there would be no correlation between reading ability and shoe size among children who are homogeneous in age.) But such a procedure requires a relatively large number of children in each age grouping.

Lacking such a large sample, one may hold age constant by ”statistical adjustment.”

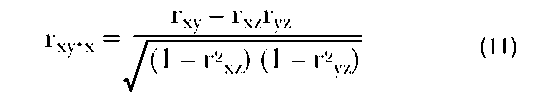

To understand the underlying logic of partial correlation, one considers the regression residuals (i.e., for each case, the discrepancy between the regression line value and the observed value of the predicted variable). For example, the regression residual of reading ability on age for a given case is the discrepancy between the actual reading ability and the predicted reading ability based on age. Each residual will be either positive or negative (depending on whether the observed reading ability is higher or lower than the regression prediction). Each residual will also have a specific value, indicating how much higher or lower than the age-specific mean (i.e., regression line values) the reading ability is for each person. The complete set of these regression residuals, each being a deviation from the age-specific mean, describes the pattern of variation in reading abilities that would obtain if all of these schoolchildren were identical in age. Similarly, the regression residuals for shoe size on age describe the pattern of variation that would obtain if all of these schoolchildren were identical in age. Hence, the correlation between the two sets of residuals—(1) the regression residuals of shoe size on age and (2) the regression residuals of reading ability on age—is the correlation between shoe size and reading ability, with age ”held constant.” In practice, it is not necessary to find each regression residual to compute the partial correlation, because shorter computational procedures have been developed. Hence,

$$r_{xy.z} = \frac{r_{xy} - r_{xz}\,r_{yz}}{\sqrt{(1 - r_{xz}^2)(1 - r_{yz}^2)}} \qquad (11)$$

where $r_{xy.z}$ = the partial correlation coefficient between X and Y, holding Z constant; $r_{xy}$ = the bivariate correlation coefficient between X and Y; $r_{xz}$ = the bivariate correlation coefficient between X and Z; and $r_{yz}$ = the bivariate correlation coefficient between Y and Z.

It should be evident from equation 11 that if Z is unrelated to both X and Y, controlling for Z will yield a partial correlation that does not differ from the bivariate correlation. If all correlations are positive, each increase in the correlation between the control variable, Z, and each of the focal variables, X and Y, will move the partial, $r_{xy.z}$, closer to 0, and in some circumstances a positive bivariate correlation may become negative after controlling for a third variable. When $r_{xy}$ is positive and the algebraic sign of $r_{yz}$ differs from the sign of $r_{xz}$ (so that their product is negative), the partial will be larger than the bivariate correlation, indicating that Z is a suppressor variable, that is, a variable that diminishes the correlation between X and Y unless it is controlled. Further discussion of partial correlation and its interpretation will be found in Simon 1954; Mueller, Schuessler, and Costner 1977; and Blalock 1979.
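The equivalence between the residual logic and the shortcut computation can be illustrated with a small sketch. The data below are invented so that shoe size and reading ability are related only through age; the function names are hypothetical, and `partial_r` uses the standard first-order partial correlation formula built from the three bivariate correlations.

```python
from math import sqrt
from statistics import mean


def sum_products(us, vs):
    """Sum of cross-products of deviations from the means."""
    u_bar, v_bar = mean(us), mean(vs)
    return sum((u - u_bar) * (v - v_bar) for u, v in zip(us, vs))


def pearson_r(us, vs):
    return sum_products(us, vs) / sqrt(sum_products(us, us) * sum_products(vs, vs))


def residuals(ys, xs):
    """Residuals of ys from the least squares regression of ys on xs."""
    b = sum_products(xs, ys) / sum_products(xs, xs)
    a = mean(ys) - b * mean(xs)
    return [y - (a + b * x) for x, y in zip(xs, ys)]


def partial_r(r_xy, r_xz, r_yz):
    """First-order partial correlation of X and Y, holding Z constant."""
    return (r_xy - r_xz * r_yz) / sqrt((1 - r_xz ** 2) * (1 - r_yz ** 2))


# Hypothetical schoolchildren: age in years, shoe size, reading score.
age = [6, 6, 7, 7, 8, 8, 9, 9]
shoe = [1, 2, 2, 3, 3, 4, 4, 5]
reading = [12, 10, 20, 22, 32, 30, 40, 42]

r_xy = pearson_r(shoe, reading)
by_formula = partial_r(r_xy, pearson_r(shoe, age), pearson_r(reading, age))
by_residuals = pearson_r(residuals(shoe, age), residuals(reading, age))
print(r_xy, by_formula, by_residuals)
```

In these contrived data the bivariate correlation is strongly positive, yet both routes give a partial correlation of essentially zero, which is the pattern expected of a purely spurious correlation.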

Any correlation between two sets of regression residuals is called a partial correlation coefficient. The illustration immediately above is called a first-order partial, meaning that one and only one variable has been held constant. A second-order partial means that two variables have been held constant. More generally, an nth-order partial is one in which precisely n variables have been ”controlled” or held constant by statistical adjustment.

When only one of the variables being correlated is a regression residual (e.g., X is correlated with the residuals of Y on Z), the correlation is called a part correlation. Although part correlations are rarely used, they are appropriate when it seems implausible to residualize one variable. Generally, part correlations are smaller in absolute value than the corresponding partial correlation.

MULTIPLE REGRESSION

Earned income level is influenced not simply by one’s education but also by work experience, skills developed outside of school and work, the prevailing compensation for the occupation or profession in which one works, the nature of the regional economy where one is employed, and numerous other factors. Hence it should not be surprising that education alone does not predict income with high accuracy. The deviations between actual income and income predicted on the basis of education are presumably due to the influence of all the other factors that have an effect, great or small, on one’s income level. By including some of these other variables as additional predictors, the accuracy of prediction should be increased. Otherwise stated, one expects to predict Y better using both X1 and X2 (assuming both influence Y) than with either of these alone.

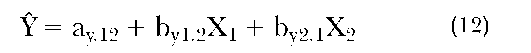

A regression equation including more than a single predictor of Y is called a multiple regression equation. For two predictors, the multiple regression equation is:

$$\hat{Y} = a_{y.12} + b_{y1.2}X_1 + b_{y2.1}X_2 \qquad (12)$$

where $\hat{Y}$ = the least squares prediction of Y based on $X_1$ and $X_2$; $a_{y.12}$ = the Y intercept (i.e., the predicted value of Y when both $X_1$ and $X_2$ are 0); $b_{y1.2}$ = the (unstandardized) regression slope of Y on $X_1$, holding $X_2$ constant; and $b_{y2.1}$ = the (unstandardized) regression slope of Y on $X_2$, holding $X_1$ constant. In multiple regression analysis, the predicted variable (Y in equation 12) is commonly known as the criterion variable, and the X’s are called predictors. As in a bivariate regression equation (equation 2), one assumes both rectilinearity and homoscedasticity, and one finds the Y intercept ($a_{y.12}$ in equation 12) and the regression slopes (one for each predictor; they are $b_{y1.2}$ and $b_{y2.1}$ in equation 12) that best fit by the criterion of least squares. The b’s or regression slopes are partial regression coefficients. The correlation between the resulting regression predictions ($\hat{Y}$) and the observed values of Y is called the multiple correlation coefficient, symbolized by R.

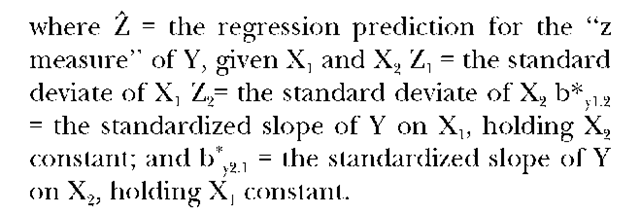

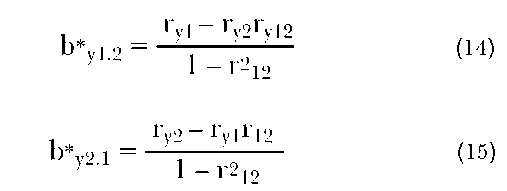

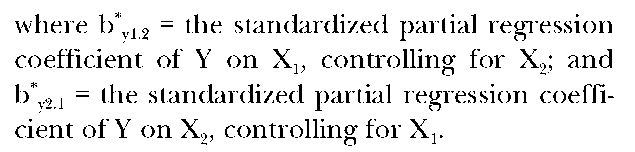

In contemporary applications of multiple regression, the partial regression coefficients are typically the primary focus of attention. These coefficients describe the regression of the criterion variable on each predictor, holding constant all other predictors in the equation. The b’s in equation 12 are unstandardized coefficients. The analogous multiple regression equation for all variables expressed in standardized form is

$$\hat{Z}_y = b^*_{y1.2}Z_1 + b^*_{y2.1}Z_2 \qquad (13)$$

The standardized regression coefficients in an equation with two predictors may be calculated from the bivariate correlations as follows:

$$b^*_{y1.2} = \frac{r_{y1} - r_{y2}\,r_{12}}{1 - r_{12}^2} \qquad (14)$$

$$b^*_{y2.1} = \frac{r_{y2} - r_{y1}\,r_{12}}{1 - r_{12}^2} \qquad (15)$$

Standardized partial regression coefficients, here symbolized by b* (read ”b star”), are frequently symbolized by the Greek letter beta, and they are commonly referred to as ”betas,” ”beta coefficients,” or ”beta weights.” While this is common usage, it violates the established practice of using Greek letters to refer to population parameters instead of sample statistics.

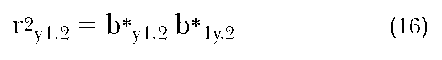

A comparison of equation 14, describing the standardized partial regression coefficient, $b^*_{y1.2}$, with equation 11, describing the partial correlation coefficient, $r_{y1.2}$, will make it evident that these two coefficients are closely related. They have identical numerators but different denominators. The similarity can be succinctly expressed by

$$r_{y1.2}^2 = b^*_{y1.2}\,b^*_{1y.2} \qquad (16)$$

where $b^*_{1y.2}$ is the standardized partial regression coefficient of $X_1$ on Y, controlling for $X_2$.

If any one of the quantities in equation 16 is 0, all are 0, and if the partial correlation is 1.0 in absolute value, both of the standardized partial regression coefficients in equation 16 must also be 1.0 in absolute value. For absolute values between 0 and 1.0, the partial correlation coefficient and the standardized partial regression coefficient will have somewhat different values, although the general interpretation of two corresponding coefficients is the same in the sense that both coefficients represent the relationship between two variables, with one or more other variables held constant. The difference between them is rather subtle and rarely of major substantive import. Briefly stated, the partial correlation coefficient (e.g., $r_{y1.2}$) is the regression of one standardized residual on another standardized residual. The corresponding standardized partial regression coefficient, $b^*_{y1.2}$, is the regression of one residual on another, but the residuals are standard measure discrepancies from standard measure predictions, rather than the residuals themselves having been expressed in the form of standard deviates.

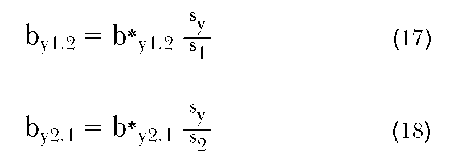

A standardized partial regression coefficient can be transformed into an unstandardized partial regression coefficient by

$$b_{y1.2} = b^*_{y1.2}\,\frac{s_y}{s_1} \qquad (17)$$

$$b_{y2.1} = b^*_{y2.1}\,\frac{s_y}{s_2} \qquad (18)$$

where $b_{y1.2}$ = the unstandardized partial regression coefficient of Y on $X_1$, controlling for $X_2$; $b_{y2.1}$ = the unstandardized partial regression coefficient of Y on $X_2$, controlling for $X_1$; $b^*_{y1.2}$ and $b^*_{y2.1}$ are standardized partial regression coefficients, as defined above; $s_y$ = the standard deviation of Y; $s_1$ = the standard deviation of $X_1$; and $s_2$ = the standard deviation of $X_2$.
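A short sketch can confirm that rescaling standardized coefficients by the ratio of standard deviations recovers the ordinary least squares slopes. The data are invented, the names are hypothetical, and the standardized coefficients are computed with the standard two-predictor formulas based on the three bivariate correlations; the direct solution of the two-predictor least squares equations is included only as a cross-check.

```python
from math import sqrt
from statistics import mean


def sum_products(us, vs):
    """Sum of cross-products of deviations from the means."""
    u_bar, v_bar = mean(us), mean(vs)
    return sum((u - u_bar) * (v - v_bar) for u, v in zip(us, vs))


def pearson_r(us, vs):
    return sum_products(us, vs) / sqrt(sum_products(us, us) * sum_products(vs, vs))


def std_dev(values):
    return sqrt(sum_products(values, values) / len(values))


# Hypothetical cases: education (years), work experience (years), income ($1,000s).
x1 = [8, 10, 12, 14, 16, 12]
x2 = [20, 5, 10, 2, 8, 15]
y = [22, 24, 31, 38, 47, 35]

r_y1, r_y2, r_12 = pearson_r(y, x1), pearson_r(y, x2), pearson_r(x1, x2)

# Standardized partial regression coefficients from the bivariate correlations.
beta_1 = (r_y1 - r_y2 * r_12) / (1 - r_12 ** 2)
beta_2 = (r_y2 - r_y1 * r_12) / (1 - r_12 ** 2)

# Rescale by the ratio of standard deviations to get unstandardized slopes.
b_1 = beta_1 * std_dev(y) / std_dev(x1)
b_2 = beta_2 * std_dev(y) / std_dev(x2)

# Cross-check: solve the two-predictor least squares equations directly.
s11, s22, s12 = sum_products(x1, x1), sum_products(x2, x2), sum_products(x1, x2)
s1y, s2y = sum_products(x1, y), sum_products(x2, y)
det = s11 * s22 - s12 ** 2
b_1_direct = (s22 * s1y - s12 * s2y) / det
b_2_direct = (s11 * s2y - s12 * s1y) / det
print(b_1, b_1_direct, b_2, b_2_direct)
```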

Under all but exceptional circumstances, standardized partial regression coefficients fall between -1.0 and +1.0. The relative magnitude of the standardized coefficients in a given regression equation indicates the relative magnitude of the relationship between the criterion variable and the predictor in question, after holding constant all the other predictors in that regression equation. Hence, the standardized partial regression coefficients in a given equation can be compared to infer which predictor has the strongest relationship to the criterion, after holding all other variables in that equation constant. The comparison of unstandardized partial regression coefficients for different predictors in the same equation does not ordinarily yield useful information because these coefficients are affected by the units of measure. On the other hand, it is frequently useful to compare unstandardized partial regression coefficients across equations. For example, in separate regression equations predicting income from education and work experience for the United States and Great Britain, if the unstandardized regression coefficient for education in the equation for Great Britain is greater than the unstandardized regression coefficient for education in the equation for the United States, the implication is that education has a greater influence on income in Great Britain than in the United States. It would be hazardous to draw any such conclusion from the comparison of standardized coefficients for Great Britain and the United States because such coefficients are affected by the variances in the two populations.

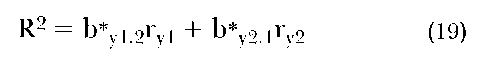

The multiple correlation coefficient, R, is defined as the correlation between the observed values of Y and the values of Y predicted by the multiple regression equation. It would be unnecessarily tedious to calculate the multiple correlation coefficient in that way. The more convenient computational procedure is to compute $R^2$ (for two predictors, and analogously for more than two predictors) by the following:

$$R^2_{y.12} = b^*_{y1.2}\,r_{y1} + b^*_{y2.1}\,r_{y2} \qquad (19)$$

Like $r^2$, $R^2$ varies from 0 to 1.0 and indicates the proportion of variance in the criterion that is “explained” by the predictors. Alternatively stated, $R^2$ is the percent of the possible reduction in prediction error (measured by the variance of actual values around predicted values) that is achieved by shifting from (a) $\bar{Y}$ as the prediction to (b) the multiple regression values, $\hat{Y}$, as the prediction.
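The two routes to the squared multiple correlation can be compared in a brief sketch, again with invented data and hypothetical names. The shortcut used here is the standard identity that sums, over the predictors, each standardized coefficient times the corresponding bivariate correlation with the criterion; the definitional route correlates the observed values with the regression predictions and squares the result.

```python
from math import sqrt
from statistics import mean


def sum_products(us, vs):
    """Sum of cross-products of deviations from the means."""
    u_bar, v_bar = mean(us), mean(vs)
    return sum((u - u_bar) * (v - v_bar) for u, v in zip(us, vs))


def pearson_r(us, vs):
    return sum_products(us, vs) / sqrt(sum_products(us, us) * sum_products(vs, vs))


# Hypothetical cases: education (years), work experience (years), income ($1,000s).
x1 = [8, 10, 12, 14, 16, 12]
x2 = [20, 5, 10, 2, 8, 15]
y = [22, 24, 31, 38, 47, 35]

r_y1, r_y2, r_12 = pearson_r(y, x1), pearson_r(y, x2), pearson_r(x1, x2)
beta_1 = (r_y1 - r_y2 * r_12) / (1 - r_12 ** 2)
beta_2 = (r_y2 - r_y1 * r_12) / (1 - r_12 ** 2)

# Shortcut: standardized coefficients weighted by the bivariate correlations.
r_squared_shortcut = beta_1 * r_y1 + beta_2 * r_y2

# Definition: squared correlation between observed Y and regression predictions.
b_1 = beta_1 * sqrt(sum_products(y, y) / sum_products(x1, x1))
b_2 = beta_2 * sqrt(sum_products(y, y) / sum_products(x2, x2))
a = mean(y) - b_1 * mean(x1) - b_2 * mean(x2)
y_hat = [a + b_1 * u + b_2 * v for u, v in zip(x1, x2)]
r_squared_definition = pearson_r(y, y_hat) ** 2
print(r_squared_shortcut, r_squared_definition)
```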

VARIETIES OF MULTIPLE REGRESSION

The basic concept of multiple regression has been adapted to a variety of purposes other than those for which the technique was originally developed. The following paragraphs provide a brief summary of some of these adaptations.

As originally conceived, the correlation coefficient was designed to describe the relationship between continuous, normally distributed variables. Dichotomized predictors such as gender (male and female) were introduced early in bivariate regression and correlation, which led to the ”point biserial correlation coefficient” (Walker and Lev 1953). For example, if one wishes to examine the correlation between gender and income, one may assign a ”0” to each instance of male and a ”1” to each instance of female to have numbers representing the two categories of the dichotomy. The unstandardized regression coefficient, computed as specified above in equation 3, is then the difference between the mean income for the two categories of the dichotomous predictor, and the computational formula for r (equation 5), will yield the point biserial correlation coefficient, which can be interpreted much like any other r. It was then only a small step to the inclusion of dichotomies as predictors in multiple regression analysis, and then to the creation of a set of dichotomies from a categorical variable with more than two subdivisions—that is, to dummy variable analysis (Cohen 1968; Bohrnstedt and Knoke 1988; Hardy 1993).

Religious denomination—e.g., Protestant, Catholic, and Jewish—serves as an illustration. From these three categories, one forms two dichotomies, called ”dummy variables.” In the first of these, for example, cases are classified as ”1” if they are Catholic, and ”0” otherwise (i.e., if Protestant or Jewish). In the second of the dichotomies, cases are classified as ”1” if they are Jewish, and ”0” otherwise (i.e., if Protestant or Catholic). In this illustration, Protestant is the ”omitted” or ”reference” category (but Protestants can be identified as those who are classified ”0” on both of the other dichotomies). The resulting two dichotomized ”dummy variables” can serve as the only predictors in a multiple regression equation, or they may be combined with other predictors. When the dummy variables mentioned are the only predictors, the unstandardized regression coefficient for the predictor in which Catholics are classified ”1” is the difference between the mean Y for Catholics and Protestants (the ”omitted” or ”reference” category). Similarly, the unstandardized regression coefficient for the predictor in which Jews are classified ”1” is the difference between the mean Y for Jews and Protestants. When the dummy variables are included with other predictors, the unstandardized regression coefficients are the same except that the difference of each mean from the mean of the ”reference” category has been statistically adjusted to control for each of the other predictors in the regression equation.
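The interpretation of dummy variable coefficients as differences from the reference category can be verified directly. The sketch below uses invented scores for eight cases and a hypothetical helper, `fit_two_predictors`, that solves the two-predictor least squares equations; Protestant is the omitted category, as in the illustration above.

```python
from statistics import mean


def fit_two_predictors(x1, x2, y):
    """Least squares intercept and slopes for Y on two predictors."""
    m1, m2, my = mean(x1), mean(x2), mean(y)
    s11 = sum((u - m1) ** 2 for u in x1)
    s22 = sum((v - m2) ** 2 for v in x2)
    s12 = sum((u - m1) * (v - m2) for u, v in zip(x1, x2))
    s1y = sum((u - m1) * (w - my) for u, w in zip(x1, y))
    s2y = sum((v - m2) * (w - my) for v, w in zip(x2, y))
    det = s11 * s22 - s12 ** 2
    b1 = (s22 * s1y - s12 * s2y) / det
    b2 = (s11 * s2y - s12 * s1y) / det
    return my - b1 * m1 - b2 * m2, b1, b2


# Hypothetical cases: denomination and some criterion score Y.
denomination = ["Protestant", "Protestant", "Protestant",
                "Catholic", "Catholic",
                "Jewish", "Jewish", "Jewish"]
y = [30, 34, 32, 40, 44, 50, 55, 51]

# Two dummy variables; Protestant is the reference category (0 on both).
catholic = [1 if d == "Catholic" else 0 for d in denomination]
jewish = [1 if d == "Jewish" else 0 for d in denomination]

a, b_catholic, b_jewish = fit_two_predictors(catholic, jewish, y)


def group_mean(label):
    return mean(v for d, v in zip(denomination, y) if d == label)


print(a, b_catholic, b_jewish)
```

When the dummies are the only predictors, the intercept reproduces the mean of the reference category and each slope reproduces a difference between group means.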

The development of ”dummy variable analysis” allowed multiple regression analysis to be linked to the experimental statistics developed by R. A. Fisher, including the analysis of variance and covariance. (See Cohen 1968.)

Logistic Regression. Early students of correlation anticipated the need for a measure of correlation when the predicted or dependent variable was dichotomous. Out of this came (a) the phi coefficient, which can be computed by applying the computational formula for r (equation 5) to two dichotomies, each coded ”0” or ”1,” and (b) the tetrachoric correlation coefficient, which uses information in the form of two dichotomies to estimate the Pearsonian correlation for the corresponding continuous variables, assuming the dichotomies result from dividing two continuous and normally distributed variables by arbitrary cutting points (Kelley 1947; Walker and Lev 1953; Carroll 1961).

These early developments readily suggested use of a dichotomous predicted variable, coded “0” or “1,” as the predicted variable in a multiple regression analysis. The predicted value is then the conditional proportion, which is the conditional mean for a dichotomized predicted variable. But this was not completely satisfactory in some circumstances because the regression predictions are, under some conditions, proportions greater than 1 or less than 0. Logistic regression (Retherford 1993; Kleinman 1994; Menard 1995) is responsive to this problem. After coding the predicted variable “0” or “1,” the predicted variable is transformed to a logit, that is, the logarithm of the “odds,” which is to say the logarithm of the ratio of the number of 1's to the number of 0's. With the logit as the predicted variable, impossible regression predictions do not result, but the unstandardized logistic regression coefficients, describing changes in the logarithm of the “odds,” lack the intuitive meaning of ordinary regression coefficients. An additional computation is required to be able to describe the change in the predicted proportion for a given one-unit change in a predictor, with all other predictors in the equation held constant.
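The additional computation mentioned above amounts to converting predicted log odds back into proportions. The sketch below is illustrative only: the intercept and slope are invented rather than estimated from data, and the function names are hypothetical.

```python
from math import exp, log


def logit(p):
    """Logarithm of the odds p / (1 - p)."""
    return log(p / (1 - p))


def inverse_logit(value):
    """Convert a log odds value back to a proportion between 0 and 1."""
    return 1 / (1 + exp(-value))


# Hypothetical coefficients for a logistic regression with one predictor X.
intercept, slope = -4.0, 0.5


def predicted_proportion(x):
    return inverse_logit(intercept + slope * x)


# The same one-unit change in X shifts the log odds by the same amount (the
# slope) but shifts the predicted proportion by different amounts.
change_low = predicted_proportion(5) - predicted_proportion(4)
change_mid = predicted_proportion(9) - predicted_proportion(8)
print(change_low, change_mid)
```

Because the change in the predicted proportion depends on where along X it is evaluated, such changes are usually reported at stated values of the predictors.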

Path Analysis. The interpretation of multiple regression coefficients can be difficult or impossible when the predictors include an undifferentiated set of causes, consequences, or spurious correlates of the predicted variable. Path analysis was developed by Sewall Wright (1934) to facilitate the interpretation of multiple regression coefficients by making explicit assumptions about causal structure and including as predictors of a given variable only those variables that precede that given variable in the assumed causal structure. For example, if one assumes that Y is influenced by X1 and X2, and X1 and X2 are, in turn, both influenced by Z1, Z2, and Z3, this specifies the assumed causal structure. One may then proceed to write multiple regression equations to predict X1, X2, and Y, including in each equation only those variables that come prior in the assumed causal order. For example, the Z variables are appropriate predictors in the equation predicting X1 because they are assumed causes of X1. But X2 is not an appropriate predictor of X1 because it is assumed to be a spurious correlate of X1 (i.e., X1 and X2 are presumed to be correlated only because they are both influenced by the Z variables, not because one influences the other). And Y is not an appropriate predictor of X1 because Y is assumed to be an effect of X1, not one of its causes. When the assumptions about the causal structure linking a set of variables have been made explicit, the appropriate predictors for each variable have been identified from this assumed causal structure, and the resulting equations have been estimated by the techniques of regression analysis, the result is a path analysis, and each of the resulting coefficients is said to be a “path coefficient” (if expressed in standardized form) or a “path regression coefficient” (if expressed in unstandardized form).

If the assumed causal structure is correct, a path analysis allows one to ”decompose” a correlation between two variables into ”direct effects”; ”indirect effects”; and, potentially, a ”spurious component” as well (Land 1969; Bohrnstedt and Knoke 1988; McClendon 1994).

For example, we may consider the correlation between the occupational achievement of a set of fathers and the occupational achievement of their sons. Some of this correlation may occur because the father’s occupational achievement influences the educational attainment of the son, and the son’s educational attainment, in turn, influences his occupational achievement. This is an ”indirect effect” of the father’s occupational achievement on the son’s occupational achievement ”through” (or ”mediated by”) the son’s education. A ”direct effect,” on the other hand, is an effect that is not mediated by any variable included in the analysis. Such mediating variables could probably be found, but if they have not been identified and included in this particular analysis, then the effects mediated through them are grouped together as the ”direct effect”—that is, an effect not mediated by variables included in the analysis. If the father’s occupational achievement and the son’s occupational achievement are also correlated, in part, because both are influenced by a common cause (e.g., a common hereditary variable), then that part of the correlation that is attributable to that common cause constitutes the ”spurious component” of the correlation. If the variables responsible for the ”spurious component” of a correlation have been included in the path analysis, the ”spurious component” can be estimated; otherwise such a ”spurious component” is merged into the ”direct effect,” which, despite the connotations of the name, absorbs all omitted indirect effects and all omitted spurious components.
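The decomposition described above can be sketched for the three-variable case of father's occupation (F), son's education (E), and son's occupation (S). The three correlations used here are hypothetical, and the assumed causal order (F influences E, and both influence S) is an assumption of the sketch, not an empirical finding. With standardized variables, the path coefficients follow from the usual two-predictor formulas for standardized partial regression coefficients. Because no common cause of F and S is included, no spurious component can be estimated here; any such component would be absorbed into the “direct effect.”

```python
def decompose_correlation(r_fe, r_fs, r_es):
    """Decompose the F-S correlation under the assumed order F -> E -> S,
    with an additional direct path F -> S. Inputs are zero-order correlations."""
    p_ef = r_fe                                # path from F to E (bivariate, standardized)
    denom = 1.0 - r_fe ** 2
    p_sf = (r_fs - r_es * r_fe) / denom        # direct path from F to S, controlling E
    p_se = (r_es - r_fs * r_fe) / denom        # path from E to S, controlling F
    indirect = p_ef * p_se                     # effect of F on S mediated by E
    return {"direct": p_sf, "indirect": indirect, "total": p_sf + indirect}

# Hypothetical correlations among the three variables.
parts = decompose_correlation(r_fe=0.40, r_fs=0.40, r_es=0.60)
print(parts)  # direct and indirect components sum to the F-S correlation
```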

“Stepwise” Regression Analysis. The interpretation of regression results can sometimes be facilitated without specifying completely the presumed causal structure among a set of predictors. If the purpose of the analysis is to enhance understanding of the variation in a single dependent variable, and if the various predictors presumed to contribute to that variation can be grouped, for example, into proximate causes and distant causes, a stepwise regression analysis may be useful. Depending on one’s primary interest, one may proceed in two different ways. For example, one may begin by regressing the criterion variable on the distant causes, and then, in a second step, introduce the proximate causes into the regression equation. Comparison of the coefficients at each step will reveal the degree to which the effects of the distant causes are mediated by the proximate causes included in the analysis. Alternatively, one may begin by regressing the criterion variable on the proximate causes, and then introduce the distant causes into the regression equation in a second step. Comparing the coefficients at each step, one can infer the degree to which the first-step regression of the criterion variable on the proximate causes is spurious because of the dependence of both on the distant causes. A stepwise regression analysis may proceed with more than two stages if one wishes to distinguish more than two sets of predictors. One may think of a stepwise regression analysis of this kind as analogous to a path analysis but without a complete specification of the causal structure.

Nonadditive Effects in Multiple Regression.

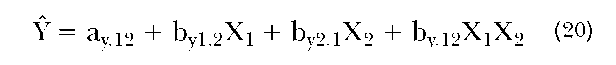

In the illustrative regression equations preceding this section, each predictor has appeared only once, and never in a ”multiplicative” term. We now consider the following regression equation, which includes such a multiplicative term:

In this equation, Y is said to be predicted, not simply by an additive combination of X1 and X2 but also by their product, X1X2. Although it may not be intuitively evident from the equation itself, the presence of a multiplicative effect (i.e., the regression coefficient for the multiplicative term, by.12, is not 0) implies that the effect of X1 on Y depends on the level of X2, and vice versa. This is commonly called an interaction effect (Allison 1977; Blalock 1979; Jaccard, Turrisi and Wan 1990; Aiken and West 1991; McClendon 1994). The inclusion of multiplicative terms in a regression equation is especially appropriate when there are sound reasons for assuming that the effect of one variable differs for different levels of another variable. For example, if one assumes that the ”return to education” (i.e., the annual income added by each additional year of schooling) will be greater for men than for women, this assumption can be explored by including all three predictors: education, gender, and the product of gender and education.
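Why a nonzero product coefficient makes the effect of X1 depend on X2 can be seen by regrouping terms. The equation itself is not reproduced in this text, so the form below is an assumption: a, b_1, and b_2 are placeholder symbols for the intercept and the two additive coefficients, and only the product coefficient (written by.12 in the text, here $b_{y.12}$) is taken from the passage.

$$Y = a + b_1 X_1 + b_2 X_2 + b_{y.12} X_1 X_2 = (a + b_2 X_2) + (b_1 + b_{y.12} X_2)\, X_1$$

Under this assumed form, the slope of Y on X1 is $b_1 + b_{y.12} X_2$, which varies with X2 unless $b_{y.12} = 0$. In the education and gender example, if X1 is years of schooling and X2 is gender coded 0 or 1 (an assumed coding), the return to education is $b_1$ for the group coded 0 and $b_1 + b_{y.12}$ for the group coded 1.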

When product terms have been included in a regression equation, the interpretation of the resulting partial regression coefficients may become complex. For example, unless all predictors are ”ratio variables” (i.e., variables measured in uniform units from an absolute 0), the inclusion of a product term in a regression equation renders the coefficients for the additive terms uninterpretable (see Allison 1977).
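One way to see the difficulty is to shift the zero point of one predictor, as one is free to do when the zero is arbitrary. The sketch below uses hypothetical coefficients and an assumed equation of the form Y = a + b1 X1 + b2 X2 + b12 X1 X2. Replacing X2 by X2 minus a constant c leaves every prediction unchanged, yet the coefficient for the additive X1 term changes from b1 to b1 + b12 c.

```python
def predict(a, b1, b2, b12, x1, x2):
    """Predicted Y from an equation with two additive terms and a product term."""
    return a + b1 * x1 + b2 * x2 + b12 * x1 * x2

def shift_origin_of_x2(a, b1, b2, b12, c):
    """Coefficients giving identical predictions when X2 is replaced by X2 - c."""
    return a + b2 * c, b1 + b12 * c, b2, b12

original = (2.0, 1.0, 0.5, 0.25)            # hypothetical a, b1, b2, b12
c = 10.0                                    # arbitrary new zero point for X2
shifted = shift_origin_of_x2(*original, c=c)

# Predictions agree case by case, although the coefficient for X1 differs.
for x1, x2 in [(0.0, 10.0), (3.0, 12.0), (5.0, 20.0)]:
    print(predict(*original, x1, x2), predict(*shifted, x1, x2 - c))
print("coefficient for X1:", original[1], "versus", shifted[1])
```

The product coefficient is unaffected by the shift, while the additive coefficient for X1 is not, so the latter has no fixed meaning unless the zero point of X2 is itself meaningful.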

SAMPLING VARIATION AND TESTS AGAINST THE NULL HYPOTHESIS

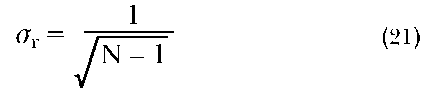

Descriptions based on incomplete information will be inaccurate because of “sampling variation.” Otherwise stated, different samplings of information will yield different results. This is true of sample regression and correlation coefficients, as it is for other descriptors. Assuming a random selection of observed information, the “shape” of the distribution of such sampling variation is often known by mathematical reasoning, and the magnitude of such variation can be estimated. For example, if the true correlation between X and Y is 0, a series of randomly selected observations will rarely yield a correlation that is precisely 0. Instead, the observed correlation will fluctuate around 0 in the “shape” of a normal distribution, and the standard deviation of that normal sampling distribution, called the standard error of r, will be

$$\sigma_r = \frac{1}{\sqrt{N - 1}}$$

where $\sigma_r$ = the standard error of r (i.e., the standard deviation of the sampling distribution of r, given that the true correlation is 0); and N = the sample size (i.e., the number of randomly selected cases used in the calculation of r). For example, if the true correlation were 0, a correlation coefficient based on a random selection of 400 cases will have a standard error of approximately 1/20 or .05. An observed correlation of .15 or greater in absolute value would thus be roughly three or more standard errors away from 0 and hence very unlikely to have appeared simply because of random fluctuations around a true value of 0. This kind of conclusion is commonly expressed by saying that the observed correlation is “significantly different from 0” at a given level of significance (in this instance, the level of significance cited could appropriately be .01). Or the same conclusion may be more simply (but less precisely) stated by saying that the observed correlation is “significant.”
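The arithmetic of this example can be reproduced with a short sketch. It assumes the standard large-sample result that, when the true correlation is 0, the standard error of r is 1 divided by the square root of N minus 1; the function name is illustrative only.

```python
from math import sqrt

def standard_error_of_r_under_null(n):
    """Standard error of r when the true correlation is 0
    (assumed large-sample formula: 1 / sqrt(N - 1))."""
    return 1.0 / sqrt(n - 1)

n = 400
se = standard_error_of_r_under_null(n)   # close to 1/20
observed_r = 0.15
ratio = observed_r / se                  # distance from 0 in standard-error units

print(f"standard error: {se:.4f}")
print(f"observed r is {ratio:.2f} standard errors from 0")
```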

The standard error for an unstandardized bivariate regression coefficient, and for an unstandardized partial regression coefficient, may also be estimated (Cohen and Cohen 1983; Kleinman, Kupper and Muller 1988; Hamilton 1992; McClendon 1994; Fox 1997). Other things being equal, the standard error for the regression of the criterion on a given predictor will decrease as (1) the number of observations (N) increases; (2) the variance of observed values around predicted values decreases; (3) the variance of the predictor increases; and (4) the correlation between the predictor and other predictors in the regression equation decreases.
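The four conditions listed above correspond to the four quantities in a commonly used textbook expression for the standard error of an unstandardized partial regression coefficient. The symbols below are introduced for illustration and are not taken from the sources cited: $s_e^2$ is the variance of observed values around predicted values, $s_{X_j}^2$ is the variance of predictor $X_j$, and $R_j^2$ is the squared multiple correlation between $X_j$ and the other predictors in the equation.

$$SE(b_j) = \sqrt{\frac{s_e^2}{(N - 1)\, s_{X_j}^2\, (1 - R_j^2)}}$$

Read against the list, a larger N or a larger $s_{X_j}^2$ increases the denominator, a smaller $s_e^2$ decreases the numerator, and a smaller $R_j^2$ increases the factor $(1 - R_j^2)$; each of these changes reduces the standard error. In the bivariate case there are no other predictors, so $R_j^2 = 0$ and the last factor drops out.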

PROBLEMS IN REGRESSION ANALYSIS

Multiple regression is a special case of a very general and adaptable model of data analysis known as the general linear model (Cohen 1968; Fennessey 1968; Blalock 1979). Although the assumptions underlying multiple regression seem relatively demanding (see Berry 1993), the technique is remarkably “robust,” which is to say that the technique yields valid conclusions even when the assumptions are met only approximately (Bohrnstedt and Carter 1971). Even so, restricted or biased samples may lead to conclusions that are misleading if they are inappropriately generalized. Furthermore, regression results may be misinterpreted if interpretation rests on an implicit causal model that is misspecified. For this reason it is advisable to make the causal model explicit, as in path analysis or structural equation modeling, and to use regression equations that are appropriate for the model as specified.

“Outliers” and “deviant cases” (i.e., cases extremely divergent from most) may have an excessive impact on regression coefficients, and hence may lead to erroneous conclusions. (See Berry and Feldman 1985; Fox 1991; Hamilton 1992.)

A ubiquitous but still not widely recognized source of misleading results in regression analysis is measurement error (both random and non-random) in the variables (Stouffer 1936; Kahneman 1965; Gordon 1968; Bohrnstedt and Carter 1971; Fuller and Hidiroglou 1978; Berry and Feldman 1985). In bivariate correlation and regression, the effect of measurement error can be readily anticipated: on the average, random measurement error in the predicted variable attenuates (moves toward zero) the correlation coefficient (i.e., the standardized regression coefficient) but not the unstandardized regression coefficient, while random measurement error in the predictor variable will, on the average, attenuate both the standardized and the unstandardized coefficients.

In multiple regression analysis, the effect of random measurement error is more complex. The unstandardized partial regression coefficient for a given predictor will be biased, not simply by random measurement error in that predictor, but also by other features. When random measurement error is entailed in a given predictor, X, that predictor is not completely controlled in a regression analysis. Consequently, the unstandardized partial regression coefficient for every other predictor that is correlated with X will be biased by random measurement error in X.

Measurement error may be non-random as well as random, and anticipating the effect of non-random measurement error on regression results is even more challenging than anticipating the effect of random error. Non-random measurement errors may be correlated errors (i.e., errors that are correlated with other variables in the system being analyzed), and therefore they have the potential to distort greatly the estimates of both standardized and unstandardized partial regression coefficients. Thus, if the measures used in regression analysis are relatively crude, lack high reliability, or include distortions (errors) likely to be correlated with other variables in the regression equation (or with measurement errors in those variables), regression analysis may yield misleading results. In these circumstances, the prudent investigator should interpret the results with considerable caution, or, preferably, shift from regression analysis to the analysis of structural equation models with multiple indicators. (See Herting 1985; Schumacker and Lomax 1996.) This alternative mode of analysis is well suited to correct for random measurement error and to help locate and correct for non-random measurement error.
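The bivariate attenuation pattern described in this section can be illustrated with a minimal sketch. It assumes purely random measurement error in the classical sense (errors uncorrelated with true scores and with each other), with reliability defined as the ratio of true-score variance to observed variance. The true coefficients and reliabilities are hypothetical, and the function names are illustrative only.

```python
from math import sqrt

def attenuated_r(true_r, reliability_x, reliability_y):
    """Expected observed correlation given the reliabilities of both measures."""
    return true_r * sqrt(reliability_x * reliability_y)

def attenuated_slope(true_b, reliability_x):
    """Expected observed unstandardized slope of Y on X; only error in the
    predictor X matters, because random error in Y leaves the slope unchanged."""
    return true_b * reliability_x

true_r, true_b = 0.60, 2.0   # hypothetical error-free coefficients

# Random error in the predicted variable only (reliability of Y = .64):
r_y_error = attenuated_r(true_r, 1.0, 0.64)    # correlation is attenuated
b_y_error = attenuated_slope(true_b, 1.0)      # slope is not attenuated

# Random error in the predictor only (reliability of X = .64):
r_x_error = attenuated_r(true_r, 0.64, 1.0)    # correlation is attenuated
b_x_error = attenuated_slope(true_b, 0.64)     # slope is attenuated as well

print(r_y_error, b_y_error)
print(r_x_error, b_x_error)
```

No comparably simple formula applies in multiple regression, where error in one predictor also biases the coefficients of the predictors correlated with it.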