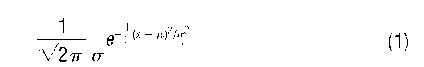

In scientific and social investigations, researchers may need to make decisions through statistical hypothesis testing guided by underlying theory and empirical observations. The Z-test is one of the most popular techniques for statistical inference based on the assumption of normal distribution. Many social and natural phenomena follow the law of normal (Gaussian) distribution, which was discovered by Carl F. Gauss (1777-1855), a German mathematician, in the early nineteenth century. The normal distribution is one of the fundamental statistical distributions used in many fields of research, and it has a bell-shaped density function with $\mu$ (mean) representing the central location and $\sigma^2$ (variance) measuring the dispersion. The normal density function is

$$f(x) = \frac{1}{\sigma\sqrt{2\pi}} \exp\left(-\frac{(x-\mu)^2}{2\sigma^2}\right).$$

A general normal random variable $X$ with a mean of $\mu$ and a variance of $\sigma^2$ can be transformed into a standard normal random variable $Z$ with mean 0 and variance 1 using

$$Z = \frac{X - \mu}{\sigma},$$

where $\sigma$ is the standard deviation of $X$. The observed value of $Z$ is called the Z score. Almost all introductory statistics books provide a table of the probability of $\{Z < c\}$ or its variants for many convenient values of $c$ (Agresti and Finlay 1997). These values are also available in statistical software packages.

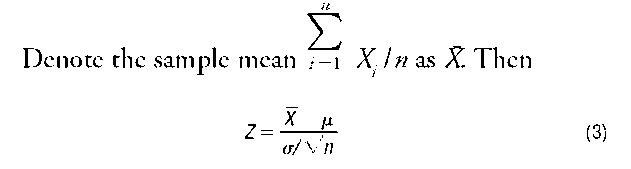

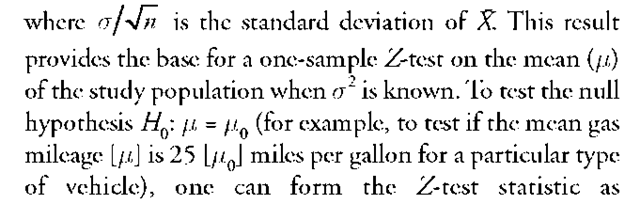
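The standardization and the table lookup of the probability of {Z < c} can be sketched in a few lines of Python. This is a minimal illustration, not a reference implementation: the function names `z_score` and `std_normal_cdf` and the numeric values are hypothetical, and the cumulative probability is computed from the error function in the standard library rather than read from a printed table.

```python
import math


def z_score(x, mu, sigma):
    """Standardize an observation x from a normal population with
    mean mu and standard deviation sigma."""
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    return (x - mu) / sigma


def std_normal_cdf(c):
    """Return P(Z < c) for a standard normal Z, using the identity
    Phi(c) = (1 + erf(c / sqrt(2))) / 2."""
    return 0.5 * (1.0 + math.erf(c / math.sqrt(2.0)))


# Hypothetical example: an observation of 130 from a population
# assumed to have mean 100 and standard deviation 15.
z = z_score(130.0, 100.0, 15.0)
print(z, std_normal_cdf(z))
```

The same `std_normal_cdf` value is what a printed Z table or a statistical package would report for the chosen c.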

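Statistical hypothesis testing and inference on the population mean are usually performed through a sample of random variables observed from the population. Let $X_1, X_2, \ldots, X_n$ be $n$ independent and identically distributed normal random variables with a mean of $\mu$ and a variance of $\sigma^2$, such as the gas mileages of a particular type of vehicle, the annual average income of households in a city, or the vital signs of patients under various treatments. Then

$$Z = \frac{\bar{X} - \mu}{\sigma/\sqrt{n}}$$

is distributed as the standard normal random variable, where

$$\bar{X} = \frac{1}{n}\sum_{i=1}^{n} X_i$$

is the sample mean. To test the null hypothesis $H_0: \mu = \mu_0$ against the two-sided alternative $H_1: \mu \neq \mu_0$, one evaluates $Z$ with $\mu$ set to the hypothesized value $\mu_0$.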

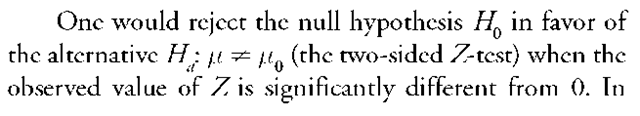

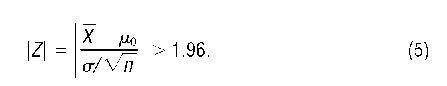

In rejecting or accepting the null hypothesis, one could commit two types of errors. The type I error ($\alpha$) is the probability of rejecting the null hypothesis when it is true, and the type II error ($\beta$) is the probability of accepting the null hypothesis when it is false. The p-value is the probability, computed under $H_0$, of obtaining a test statistic at least as contradictory to $H_0$ as the observed Z value. A detailed study on statistical hypothesis testing is given by Erich Lehmann and Joseph P. Romano (2005). For the two-sided Z-test, one may reject $H_0$ if $|Z| > 1.96$.

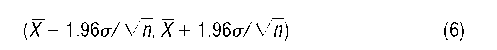
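As an illustration of the two-sided test and its p-value, the following Python sketch computes the Z statistic for a sample with a known population standard deviation. The function name `one_sample_z_test`, the hypothesized mean `mu0`, and the mileage figures are assumptions made for this example only; the p-value uses the identity 2(1 − Φ(|z|)) = erfc(|z|/√2).

```python
import math


def one_sample_z_test(sample, mu0, sigma):
    """Two-sided one-sample Z-test with known population standard
    deviation sigma. Returns (z, p_value)."""
    n = len(sample)
    if n == 0:
        raise ValueError("sample must not be empty")
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    xbar = sum(sample) / n
    z = (xbar - mu0) / (sigma / math.sqrt(n))
    # Two-sided p-value: P(|Z| >= |z|) under the null hypothesis.
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p_value


# Hypothetical gas mileages, tested against mu0 = 25 with sigma = 1.
mileages = [24.1, 25.3, 26.0, 24.8, 25.9, 26.4, 25.1, 24.6, 25.7]
z, p = one_sample_z_test(mileages, mu0=25.0, sigma=1.0)
print(f"z = {z:.3f}, p-value = {p:.3f}, reject at 0.05: {abs(z) > 1.96}")
```

Rejecting when |z| > 1.96 and rejecting when the p-value falls below 0.05 are the same decision rule, up to the rounding of 1.96.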

The type I error for this test is at most 0.05. The upper bound of the type I error is called the size, and $1 - \beta$ is called the power of the test. For a test, if the p-value is less than the size, one may reject the null hypothesis $H_0$. A commonly used size is 0.05. For the two-sided Z-test with size 0.05, the critical region is $\{|Z| > 1.96\}$. The Z-test is closely related to the construction of confidence intervals. For example, the 95 percent confidence interval for the mean $\mu$ is

$$\left(\bar{X} - 1.96\,\frac{\sigma}{\sqrt{n}},\ \bar{X} + 1.96\,\frac{\sigma}{\sqrt{n}}\right)$$

for the two-sided estimation. If $X_1, X_2, \ldots, X_n$ are not independent and identically normally distributed, under some conditions, the central limit theorem shows that

$$Z = \frac{\bar{X} - \mu}{\sigma/\sqrt{n}}$$

is approximately standard normal when $n$ is large (typically $n > 30$). That is, one can still use the Z-test when $n$ is large.

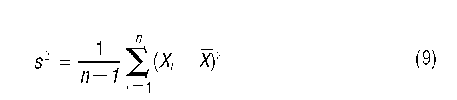
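The link between the two-sided Z-test and the 95 percent confidence interval can be made concrete with a short Python sketch. The function name `z_confidence_interval` and the sample values are hypothetical, and the population standard deviation is assumed to be known.

```python
import math


def z_confidence_interval(sample, sigma, z_crit=1.96):
    """Two-sided confidence interval for the mean with known sigma.
    The default z_crit = 1.96 gives a 95 percent interval."""
    n = len(sample)
    if n == 0:
        raise ValueError("sample must not be empty")
    if sigma <= 0:
        raise ValueError("sigma must be positive")
    xbar = sum(sample) / n
    half_width = z_crit * sigma / math.sqrt(n)
    return xbar - half_width, xbar + half_width


# Hypothetical sample with an assumed sigma of 2.
lower, upper = z_confidence_interval([9.0, 11.0, 10.5, 9.5], sigma=2.0)
print(lower, upper)
```

A hypothesized mean lies outside this interval exactly when the two-sided Z-test of size 0.05 rejects it, which is the sense in which the test and the interval are two views of the same calculation.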

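In many applications, the standard deviation of the population is unknown. In these cases, one can replace $\sigma$ with the sample standard deviation $s$ and form a test statistic as

$$t = \frac{\bar{X} - \mu}{s/\sqrt{n}},$$

where

$$s^2 = \frac{1}{n-1}\sum_{i=1}^{n} \left(X_i - \bar{X}\right)^2$$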

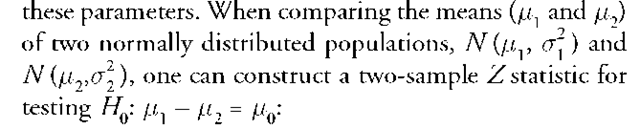

is the sample variance. The statistic $t$ follows the $t$-distribution with $n - 1$ degrees of freedom. As $n$ increases, the distribution of $t$ converges to that of $Z$. Hence, even when the standard deviation is unknown, the Z-test can be used if $n$ is large. In fact, the estimates of parameters from many parametric models, such as regression models, are approximately normally distributed. The Z-test is therefore applicable for statistical inference of these parameters when the sample size is large.
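The statistic with an estimated standard deviation can be sketched as follows. The function name `one_sample_t_statistic` and the hypothesized mean `mu0` are assumptions for illustration; the sketch returns only the statistic and its degrees of freedom, because the Python standard library does not provide the t-distribution needed for exact critical values.

```python
import math
import statistics


def one_sample_t_statistic(sample, mu0):
    """Return (t, degrees_of_freedom) for a one-sample test in which
    the unknown sigma is replaced by the sample standard deviation."""
    n = len(sample)
    if n < 2:
        raise ValueError("at least two observations are required")
    xbar = statistics.mean(sample)
    s = statistics.stdev(sample)  # square root of the (n - 1)-denominator variance
    if s == 0:
        raise ValueError("sample standard deviation is zero")
    t = (xbar - mu0) / (s / math.sqrt(n))
    return t, n - 1


# Hypothetical data tested against mu0 = 2.
t, df = one_sample_t_statistic([2.0, 4.0, 6.0], mu0=2.0)
print(t, df)
```

For large n, comparing this statistic with the standard normal critical value 1.96 gives nearly the same decision as using the t-distribution.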

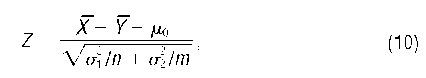

independent random samples from these two populations, respectively.

The two-sample Z-test can be carried out in the same way as the one-sample Z-test. As in the one-sample case, the two-sample Z-test corresponds to the two-sample t-test when the population variances are replaced by their sample variances. However, when $n$ and $m$ are large, there is not much difference between the two-sample Z-test and the two-sample t-test. The two-sample t-test is a special case of Analysis of Variance (ANOVA), which compares the means of multiple populations. For a small sample size, it is preferable to use nonparametric methods instead of the Z-test, particularly when it is difficult to verify the assumption of normality.
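A two-sample Z-test can be sketched along the same lines as the one-sample case. The sketch below assumes the usual textbook form of the statistic, the difference of the sample means divided by the square root of σ₁²/n + σ₂²/m, and a null hypothesis of equal population means; the function name `two_sample_z_test`, its parameter names, and the data are hypothetical.

```python
import math


def two_sample_z_test(xs, ys, sigma_x, sigma_y):
    """Two-sided two-sample Z-test of equal means, assuming known
    population standard deviations. Returns (z, p_value)."""
    n, m = len(xs), len(ys)
    if n == 0 or m == 0:
        raise ValueError("both samples must be non-empty")
    if sigma_x <= 0 or sigma_y <= 0:
        raise ValueError("standard deviations must be positive")
    diff = sum(xs) / n - sum(ys) / m
    std_err = math.sqrt(sigma_x ** 2 / n + sigma_y ** 2 / m)
    z = diff / std_err
    # Two-sided p-value under the null hypothesis of equal means.
    p_value = math.erfc(abs(z) / math.sqrt(2.0))
    return z, p_value


# Hypothetical samples of sizes n = 4 and m = 4.
z, p = two_sample_z_test([5.1, 4.9, 5.3, 4.7], [3.2, 2.8, 3.1, 2.9],
                         sigma_x=2.0, sigma_y=2.0)
print(f"z = {z:.3f}, p-value = {p:.3f}")
```

Replacing `sigma_x` and `sigma_y` with the sample standard deviations gives the large-sample version discussed above, which behaves much like the two-sample t-test when n and m are large.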