Classical statistical analysis seeks to describe the distribution of a measurable property (descriptive statistics) and to determine the reliability of a sample drawn from a population (inferential statistics). It is based on repeatedly measuring properties of objects and aims at predicting the frequency with which certain results will occur when the measurement is repeated at random.

A property can be measured repeatedly on the same object or only once per object; in the latter case, one must measure a number of sufficiently similar objects. Typical examples are tossing a coin or rolling a die repeatedly and counting the occurrences of the possible outcomes, and measuring the chemical composition of the next hundred or thousand pills coming off the production line of a pharmaceutical plant. In the former case, the same object (one and the same die) is "measured" several times (with respect to the number it shows). In the latter case, many distinguishable but similar objects are measured with respect to their composition, which in the case of pills is expected to be more or less identical, so that the repetition is not with the same object but with the next available similar object.

One of the central concepts of classical statistical analysis is the empirical frequency distribution. When only a finite number of different outcomes is possible (discrete case), it gives the absolute or relative frequency with which each possible result occurs in the repeated measurement of a property of an object or a class of objects. If one instead thinks of an infinitely repeated and arbitrarily precise measurement where every outcome is (or can be) different, as would be the case if the range of the property is the set of real numbers, then the relative frequency of a single outcome would not be very instructive. In this (continuous) case one uses the distribution function, which, for every numerical value x of the measured property, yields the absolute or relative frequency of the occurrence of all values not exceeding x. This function is usually denoted F(x), and its derivative F′(x) = f(x) is called the frequency density function.
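The two empirical functions described here can be sketched in a few lines of Python. This is only an illustration: the function names and the ten die rolls are made up for the example, and the distribution function uses the common convention of counting all values less than or equal to x.

```python
from collections import Counter
from fractions import Fraction


def relative_frequencies(outcomes):
    """Relative frequency of each distinct outcome in a finite sample."""
    counts = Counter(outcomes)
    n = len(outcomes)
    return {value: Fraction(count, n) for value, count in sorted(counts.items())}


def empirical_cdf(outcomes, x):
    """Relative frequency of all outcomes less than or equal to x."""
    return Fraction(sum(1 for v in outcomes if v <= x), len(outcomes))


# Hypothetical record of ten die rolls (illustrative data, not from the text).
rolls = [3, 1, 6, 3, 2, 5, 3, 6, 1, 4]

freqs = relative_frequencies(rolls)
for face, share in freqs.items():
    print(face, share, empirical_cdf(rolls, face))
```

Exact fractions are used so that the relative frequencies add up to exactly 1, which makes the connection to probabilities easier to see.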

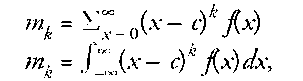

If one wants to describe an empirical distribution, the complete function table is seldom instructive. This is why empirical frequency or distribution functions are often represented by a few parameters that describe the essential features of the distribution. The so-called moments of the distribution represent the distribution completely, and the lower-order moments represent it at least in a satisfactory manner. Moments are defined as follows:

$$m_k = \frac{1}{n} \sum_{i=1}^{n} (x_i - c)^k$$

where x_i is the i-th measured value, k is the order of the moment, n is the number of repetitions or objects measured, and c is a constant that is usually either 0 (moment about the origin) or the arithmetic mean (moment about the mean), the first-order moment about the origin being the arithmetic mean.
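As a minimal sketch, assuming the usual definition of the k-th empirical moment as the average of (x_i − c)^k over the n measurements, the computation could look as follows. The function name and the sample values are hypothetical.

```python
def empirical_moment(xs, k, c=0.0):
    """k-th empirical moment of the measurements xs about the constant c."""
    n = len(xs)
    return sum((x - c) ** k for x in xs) / n


# Hypothetical measurements (illustrative data, not from the text).
data = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]

mean = empirical_moment(data, 1)               # first moment about the origin
variance = empirical_moment(data, 2, c=mean)   # second moment about the mean
print(mean, variance)
```

Note that this divides by n, as a moment does; the sample variance used as an unbiased estimator divides by n − 1 instead.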

In the frequentist interpretation of probability, frequency can be seen as the realization of the concept of probability: it is quite intuitive to believe that if the probability of a certain outcome is some number between 0 and 1, then the expected relative frequency of this outcome would be the same number, at least in the long run. From this, one of the concepts of probability is derived, yielding probability distribution and density functions as models for their empirical correlates. These functions are usually also denoted F(x) and f(x), respectively, and their moments are defined much like the empirical moments, but with a difference that takes into account that there is no finite number n of measurement repetitions:

$$\mu_k = \sum_{x} (x - c)^k \, f(x)$$

$$\mu_k = \int_{-\infty}^{\infty} (x - c)^k \, f(x) \, dx$$

where k and c have the same meaning as before. The first equation can be applied to discrete numerical variables (e.g., the results of counting), with f(x) being the probability of the outcome x, while the second equation can be applied to continuous variables, with f(x) being the probability density.
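Both cases can be illustrated with a short sketch, assuming the standard definitions: a probability-weighted sum of (x − c)^k for a discrete variable and the corresponding integral for a continuous one. The helper names, the fair die, and the uniform density on [0, 1] are choices made for this example, and the integral is only approximated with a simple midpoint rule.

```python
from fractions import Fraction


def discrete_moment(pmf, k, c=0):
    """k-th moment about c for a discrete variable given as {value: probability}."""
    return sum((x - c) ** k * p for x, p in pmf.items())


def continuous_moment(pdf, k, c, lo, hi, steps=10_000):
    """Midpoint-rule approximation of the integral of (x - c)^k * pdf(x) over [lo, hi]."""
    h = (hi - lo) / steps
    total = 0.0
    for i in range(steps):
        x = lo + (i + 0.5) * h
        total += (x - c) ** k * pdf(x)
    return total * h


# Discrete example: a fair six-sided die.
die = {face: Fraction(1, 6) for face in range(1, 7)}
die_mean = discrete_moment(die, 1)
die_var = discrete_moment(die, 2, c=die_mean)


# Continuous example: uniform density on [0, 1] (an assumption for illustration).
def uniform_pdf(x):
    return 1.0


u_mean = continuous_moment(uniform_pdf, 1, 0.0, 0.0, 1.0)
u_var = continuous_moment(uniform_pdf, 2, u_mean, 0.0, 1.0)
print(die_mean, die_var, u_mean, u_var)
```

In both cases the zero-order moment evaluates to 1, which is a quick check that the function passed in is a valid probability model.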

Again, the first-order moment about 0 is the mean, and the other moments are usually calculated about this mean. In many important cases one would be satisfied to know the mean (as an indicator for the central tendency of the distribution) and the second-order moment about the mean, namely the variance (as the most prominent indicator for the variation). For the important case of the normal or Gaussian distribution, these two parameters are sufficient to describe the distribution completely.

A theoretical distribution can be any non-negative function whose zero-order moment evaluates to 1, as this is the probability that the variable takes any value at all within its domain. If one models an empirical distribution with such a theoretical distribution, one can estimate its parameters from the moments of the empirical distribution, calculated from the finite number of repeated measurements taken in a sample. This is especially useful where the normal distribution is a satisfactory model of the empirical distribution, as in this case mean and variance allow the calculation of all values of interest of the probability density function f(x) and of the distribution function F(x).
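The estimation step can be sketched with Python's standard library, under the assumption that a normal model is adequate. The function name and the five pill masses (in milligrams) are hypothetical; `statistics.NormalDist` supplies the density and distribution functions once mean and standard deviation are known.

```python
import math
from statistics import NormalDist


def fit_normal_by_moments(xs):
    """Normal model whose mean and variance equal the empirical moments of xs."""
    n = len(xs)
    mean = sum(xs) / n
    variance = sum((x - mean) ** 2 for x in xs) / n
    return NormalDist(mu=mean, sigma=math.sqrt(variance))


# Hypothetical pill masses in milligrams (illustrative data, not from the text).
masses = [498.0, 502.0, 500.0, 499.0, 501.0]

model = fit_normal_by_moments(masses)
print(model.mean, model.variance)
print(model.pdf(500.0))   # value of the density f(x) at x = 500
print(model.cdf(501.0))   # value of the distribution function F(x) at x = 501
```

With so few measurements the fit is of course very rough; the point is only that two numbers determine every value of f(x) and F(x).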

Empirical and theoretical distributions need not be restricted to the case of a single property or variable; they are also defined for the multivariate case. Because empirical moments are calculated from the measurements taken in a sample, these moments are also results of a random process, just like the original measurements. In this respect, the mean, variance, correlation coefficient, or any other statistical parameter calculated from the finite number of objects in a sample is also the outcome of a random experiment (a measurement taken from a randomly selected set of objects instead of exactly one object). Theoretical distributions are available for these derived measurements as well, and these models of the empirical moments make it possible to estimate the probability with which the respective parameter will fall into a specified interval in the next sample to be taken.
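That a sample statistic is itself random can be made visible with a small simulation. The following sketch, with hypothetical function names and arbitrarily chosen sample sizes, draws many samples of 30 rolls of a fair die and looks at how the sample means scatter; a fixed seed is used only to make the run repeatable.

```python
import random


def sample_mean(rng, n):
    """Mean of n simulated rolls of a fair six-sided die."""
    return sum(rng.randint(1, 6) for _ in range(n)) / n


def simulate_sample_means(num_samples, n, seed=0):
    """Means of num_samples independent samples, each of size n."""
    rng = random.Random(seed)
    return [sample_mean(rng, n) for _ in range(num_samples)]


means = simulate_sample_means(num_samples=2000, n=30)

grand_mean = sum(means) / len(means)
spread = sum((m - grand_mean) ** 2 for m in means) / len(means)

# Theory for comparison: the variance of a single roll is 35/12,
# so the variance of the mean of 30 rolls is (35/12) / 30.
theoretical_spread = (35 / 12) / 30
print(grand_mean, spread, theoretical_spread)
```

The sample means cluster around 3.5, and their variance should come out close to the theoretical value, which is the kind of model of an empirical moment the paragraph refers to.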

If, for instance, one has a sample of 1,000 interviewees of whom 520 answered that they were going to vote for party A in the upcoming election and 480 that they were going to vote for party B, then the parameter â (the proportion of A-voters in the overall population) could be estimated to be 0.52. This estimate is itself a stochastic variable, which approximately obeys a normal distribution with mean 0.52 and variance 0.52 × 0.48 / 1,000 = 0.0002496 (standard deviation 0.0158). From this result one can conclude that another sample of 1,000 interviewees from the same overall population would lead to another estimate whose value would lie within the interval [0.489, 0.551] (that is, 0.52 ± 1.96 × 0.0158) with a probability of 95 percent. This is the so-called 95 percent confidence interval, which in the case of the normal distribution is centered about the mean with a width of 3.92 standard deviations. Or, to put it in other words, the probability of finding more than 551 A-voters in another sample of 1,000 interviewees from the same population is 0.025. Bayesian statistics, as opposed to classical statistics, would argue from the same numbers that the probability is 0.95 that the population parameter falls within the interval [0.489, 0.551].
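The arithmetic of this example can be reproduced with a short sketch, assuming the normal approximation used in the text. The function name is hypothetical; `NormalDist().inv_cdf` supplies the factor of about 1.96 for the 95 percent level.

```python
import math
from statistics import NormalDist


def proportion_interval(successes, n, level=0.95):
    """Estimate, variance, standard deviation and normal-approximation interval."""
    p_hat = successes / n
    variance = p_hat * (1.0 - p_hat) / n
    sd = math.sqrt(variance)
    z = NormalDist().inv_cdf(0.5 + level / 2.0)  # about 1.96 for level = 0.95
    return p_hat, variance, sd, (p_hat - z * sd, p_hat + z * sd)


p_hat, variance, sd, (low, high) = proportion_interval(520, 1000)
print(p_hat, variance, round(sd, 4))
print(round(low, 3), round(high, 3))

# Probability, under the same normal model, of an estimate above 0.551.
upper_tail = 1.0 - NormalDist(mu=p_hat, sigma=sd).cdf(0.551)
print(round(upper_tail, 3))
```

Rounded to three decimals, the interval matches the [0.489, 0.551] given above, and the upper tail comes out at roughly 0.025.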