Abstract

A method for improving classification performance with hidden Markov models (HMMs) is proposed. A k-nearest neighbors (kNN) classifier is applied in the feature space produced by these HMMs. Only the case of similar models with noisy original sequences is considered. Results on simulated data for a two-class classification problem are presented.

Keywords: Hidden Markov Model, Derivatives, k Nearest Neighbors.

Introduction

HMMs are a powerful tool for modeling various processes and for pattern recognition. By their nature, Markov models can handle the spatio-temporal characteristics of sequences directly, and therefore they have become widely used [1], [2], [3]. However, despite their wide use, HMMs are known to have fairly low classification ability.

We understand the classification problem as follows. We have a set of objects divided into classes by an expert (supervised learning, or "learning with a teacher"). This set of objects is called the training set. We want to construct an algorithm that can classify a test object from the original set. This article discusses the two-class classification problem using the matrix of distances between objects. In this case, each object is described by its distances to all other objects in the training set. The nearest neighbor method, the Parzen window method and the method of potential functions work with input data of this type. The classification objects are Gaussian time sequences generated by two HMMs with similar parameters. To approximate real-world conditions, all observed time sequences were distorted. The task was to compare the capabilities of traditional classification methods with those of a simple nearest neighbor classifier [4] in the space of first derivatives of the log-likelihood function with respect to the HMM parameters.

The remainder of this article is organized as follows. Section 2 introduces the proposed classification method. Section 3 presents results of computational experiments. Section 4 summarizes our findings.

The Method of Sequence Classification

An HMM is completely described by the following parameters:

1. The initial state distribution $\pi = \{\pi_i\}$, where $\pi_i = P(q_1 = S_i)$, $1 \le i \le N$, and N is the number of hidden states in the model.

2. The matrix of state transition probabilities $A = \{a_{ij}\}$, where $a_{ij} = P(q_{t+1} = S_j \mid q_t = S_i)$, $1 \le i, j \le N$, $q_t$ is the hidden state at time $t$, $1 \le t \le T$, and T is the length of the observed sequence.

3. The matrix of observation symbol probabilities $B = \{b_j(k)\}$, where $b_j(k) = P(o_t = v_k \mid q_t = S_j)$, $1 \le j \le N$, $1 \le k \le M$, $o_t$ is the symbol observed at time $t$, and M is the number of observation states in the model. This work considers the case in which the observation probability distribution is described by a mixture of normal distributions such that each hidden state is associated with one observable state, i.e. each state emits a single Gaussian:

$b_j(o_t) = \frac{1}{\sqrt{2\pi}\,\sigma_j} \exp\left(-\frac{(o_t - \mu_j)^2}{2\sigma_j^2}\right)$.

Thus, an HMM is completely described by the matrix of state transition probabilities, the observation symbol probabilities and the initial state distribution: $\lambda = (A, B, \pi)$.

A classifier based on the log-likelihood function is traditionally used. A sequence O is considered to be generated by model $\lambda_1$ if

$\log P(O \mid \lambda_1) > \log P(O \mid \lambda_2)$. (1)

Otherwise, the sequence is considered to be generated by model $\lambda_2$.
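As a rough illustration of rule (1), the sketch below evaluates $\log P(O \mid \lambda)$ with the scaled forward algorithm for the single-Gaussian-per-state model described above and picks the model with the larger value. It assumes each model is passed as a hypothetical tuple `(pi, A, mus, sigmas)` of plain Python lists; in the setting described here these would be Baum-Welch estimates, and the estimation step itself is not shown.

```python
import math


def gaussian_pdf(o, mu, sigma):
    """Single-Gaussian emission density b_j(o) = N(o; mu_j, sigma_j^2).

    Floored at 1e-300 so that extreme outliers (e.g. Cauchy noise) do not
    lead to log(0) in the forward pass.
    """
    z = (o - mu) / sigma
    return max(math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi)), 1e-300)


def log_likelihood(obs, pi, A, mus, sigmas):
    """log P(O | lambda) computed with the scaled forward algorithm."""
    n = len(pi)
    # Initialisation: alpha_1(i) = pi_i * b_i(o_1)
    alpha = [pi[i] * gaussian_pdf(obs[0], mus[i], sigmas[i]) for i in range(n)]
    log_p = 0.0
    for t in range(len(obs)):
        if t > 0:
            # Induction: alpha_t(j) = [sum_i alpha_{t-1}(i) a_ij] * b_j(o_t)
            alpha = [sum(alpha[i] * A[i][j] for i in range(n))
                     * gaussian_pdf(obs[t], mus[j], sigmas[j]) for j in range(n)]
        c = sum(alpha)          # scaling factor c_t = P(o_t | o_1..o_{t-1})
        log_p += math.log(c)    # log P(O) = sum_t log c_t
        alpha = [a / c for a in alpha]
    return log_p


def classify_by_likelihood(obs, model1, model2):
    """Rule (1): class 1 if log P(O|lambda_1) > log P(O|lambda_2), else class 2.

    Each model is a tuple (pi, A, mus, sigmas).
    """
    return 1 if log_likelihood(obs, *model1) > log_likelihood(obs, *model2) else 2
```

Normalizing the forward variables by $c_t$ at every step keeps them from underflowing on long sequences, which is why the log-likelihood is accumulated as $\sum_t \log c_t$ instead of being computed from unscaled products.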

Some authors (e.g. [5], [6]) propose classifying sequences in spaces of so-called secondary features. For example, the forward and backward probabilities, which are used to compute the probability that a sequence is generated by model $\lambda$, can serve as secondary features. The first derivatives of the log-likelihood function with respect to various model parameters are also used. The authors of [5] also propose including the original sequence in the feature vector. This work discusses the performance of two-class classification in the space of first derivatives of the log-likelihood function.

The classification problem is stated as follows. There are two groups of training sequences: the first group consists of sequences generated by model $\lambda_1$, and the second by model $\lambda_2$. Usually, rule (1) is used to determine which class a test sequence $O_{test}$ belongs to. Because the parameters of models $\lambda_1$ and $\lambda_2$ are unknown, they must first be estimated (for example, with the Baum-Welch algorithm), and rule (1) is then applied with the estimated models.

If the competing models have similar parameters and the observed sequences are not purely Gaussian, the traditional classification technique based on (1) does not always give acceptable results.

The following scheme is proposed to increase the discriminating ability of HMMs.

Step 1. For each training sequence $O_k^{(l)}$, $k = 1, \dots, K_l$, where $K_l$ is the number of training sequences of class $l$ ($l = 1, 2$), a characteristic vector $\psi(O_k^{(l)})$ is formed, which can consist of all or some of the features described above. The likelihood function is computed both for the model of the true class to which the training sequence belongs and for the model of the other class. As a result, the characteristic vector of a training sequence $O_k^{(l)}$ generated by model $\lambda_l$ consists of two subvectors:

$\psi(O_k^{(l)}) = \left(\psi_{\lambda_1}(O_k^{(l)}),\ \psi_{\lambda_2}(O_k^{(l)})\right)$,

where the first subvector consists of features induced by model $\lambda_1$, and the second by model $\lambda_2$.

Step 2. Similarly, the characteristic vector $\psi(O_{test})$ is computed for the test sequence $O_{test}$.

Step 3. A metric-based classifier (e.g. kNN) is then used to determine which class $O_{test}$ belongs to.
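Steps 1-3 can be sketched as follows, assuming (as in the experiments below) that the features are the first derivatives of $\log P(O \mid \lambda)$ with respect to the transition probabilities $a_{ij}$, each treated as a free parameter; ignoring the row-sum constraint is an assumption of this sketch. The derivative uses the standard identity $\partial \log P / \partial a_{ij} = \sum_{t=1}^{T-1} \alpha_t(i)\, b_j(o_{t+1})\, \beta_{t+1}(j) / P(O \mid \lambda)$, evaluated with scaled forward/backward variables. The function names, the Euclidean metric and the default k = 5 are illustrative choices, not taken from the source.

```python
import math
from collections import Counter


def gaussian_pdf(o, mu, sigma):
    # Single-Gaussian emission density, floored to avoid division by zero
    z = (o - mu) / sigma
    return max(math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi)), 1e-300)


def forward_backward(obs, pi, A, mus, sigmas):
    """Emission table b, scaled forward/backward variables and scaling factors c_t."""
    n, T = len(pi), len(obs)
    b = [[gaussian_pdf(o, mus[j], sigmas[j]) for j in range(n)] for o in obs]
    alpha, c = [], []
    for t in range(T):
        if t == 0:
            a = [pi[i] * b[0][i] for i in range(n)]
        else:
            a = [sum(alpha[t - 1][i] * A[i][j] for i in range(n)) * b[t][j] for j in range(n)]
        c.append(sum(a))
        alpha.append([x / c[t] for x in a])
    beta = [[1.0] * n for _ in range(T)]
    for t in range(T - 2, -1, -1):
        beta[t] = [sum(A[i][j] * b[t + 1][j] * beta[t + 1][j] for j in range(n)) / c[t + 1]
                   for i in range(n)]
    return b, alpha, beta, c


def log_likelihood(obs, pi, A, mus, sigmas):
    # log P(O | lambda) = sum_t log c_t
    return sum(math.log(ct) for ct in forward_backward(obs, pi, A, mus, sigmas)[3])


def grad_wrt_transitions(obs, pi, A, mus, sigmas):
    """d log P(O|lambda) / d a_ij for all i, j, flattened row by row."""
    b, alpha, beta, c = forward_backward(obs, pi, A, mus, sigmas)
    n, T = len(pi), len(obs)
    return [sum(alpha[t][i] * b[t + 1][j] * beta[t + 1][j] / c[t + 1] for t in range(T - 1))
            for i in range(n) for j in range(n)]


def feature_vector(obs, model1, model2):
    # Steps 1-2: concatenate the subvectors induced by lambda_1 and lambda_2
    return grad_wrt_transitions(obs, *model1) + grad_wrt_transitions(obs, *model2)


def knn_classify(x, train_feats, train_labels, k=5):
    # Step 3: majority vote among the k nearest training vectors (Euclidean metric)
    nearest = sorted(zip((math.dist(x, f) for f in train_feats), train_labels))[:k]
    return Counter(label for _, label in nearest).most_common(1)[0][0]
```

A test sequence would then be classified with `knn_classify(feature_vector(o_test, model1, model2), train_feats, train_labels)`, where `train_feats` holds the feature vectors of all training sequences of both classes.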

Computational Experiments

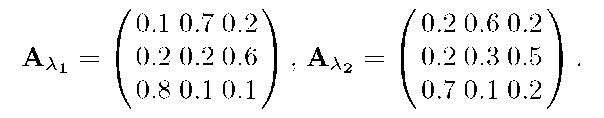

The investigations were performed under the following assumptions. The models $\lambda_1$ and $\lambda_2$ are defined on hidden Markov chains with the same number of hidden states and differ only in the matrix of transition probabilities. The Gaussian distribution parameters $\mu_j$, $\sigma_j$ are chosen identical for both models, and the initial state distributions $\pi$ also coincide. Thus, the models are very close to each other, and hence the sequences they generate differ only very weakly.

The training and test sequences were simulated by the Monte Carlo method. Five training sets of 100 sequences per class were generated. For each training set, 500 test sequences were generated for each class. The classification results were averaged. The length of each sequence was 100.
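The Monte Carlo generation of training data can be sketched as below: the sampler draws the hidden path from $\pi$ and $A$ and each observation from the Gaussian of the current state. The function names are hypothetical, and any parameter values passed to them are placeholders rather than the parameters of the models studied.

```python
import random


def sample_hmm(pi, A, mus, sigmas, T, rng):
    """Monte Carlo draw of one Gaussian-HMM sequence: returns (observations, states)."""
    n = len(pi)
    obs, states = [], []
    q = rng.choices(range(n), weights=pi)[0]            # q_1 ~ pi
    for t in range(T):
        if t > 0:
            q = rng.choices(range(n), weights=A[q])[0]  # q_t ~ row q_{t-1} of A
        states.append(q)
        obs.append(rng.gauss(mus[q], sigmas[q]))        # o_t ~ N(mu_q, sigma_q^2)
    return obs, states


def make_training_set(model1, model2, n_per_class=100, T=100, seed=0):
    """One training set: n_per_class sequences of length T per class (labels 1 and 2)."""
    rng = random.Random(seed)
    data = []
    for label, model in ((1, model1), (2, model2)):
        for _ in range(n_per_class):
            obs, _ = sample_hmm(*model, T, rng)
            data.append((obs, label))
    return data
```

For instance, `make_training_set(m1, m2)` with a hypothetical `m1 = ([0.5, 0.5], [[0.9, 0.1], [0.2, 0.8]], [0.0, 1.0], [1.0, 1.0])` and an `m2` that differs only in its transition matrix mirrors the setup above (100 sequences of length 100 per class); calling it with five different seeds gives five training sets.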

The Additive Noise

In the first variant, distortion of the true sequence was modeled as the additive superposition of a noise component following some distribution law. Denote by u a sequence simulated from model $\lambda$. Superimposing noise e on this sequence gives the noisy sequence with additive noise $\tilde{u} = u + w e$, where the weight w controls the degree of distortion.
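A minimal sketch of the additive distortion, assuming the form $\tilde{u}_t = u_t + w e_t$ with standard normal or standard Cauchy $e_t$; the location and scale of the noise actually used in the experiments are not specified here. Python's `random` module has no Cauchy sampler, so the sketch uses inverse-CDF sampling, $x = \tan(\pi (U - 1/2))$ for $U$ uniform on $[0, 1)$.

```python
import math
import random


def cauchy_sample(rng, loc=0.0, scale=1.0):
    # Inverse-CDF sampling of the Cauchy law: loc + scale * tan(pi * (U - 1/2))
    return loc + scale * math.tan(math.pi * (rng.random() - 0.5))


def add_noise(u, w, noise="normal", rng=None):
    """Return u_t + w * e_t with e_t standard normal or standard Cauchy (assumed form)."""
    rng = rng or random.Random()
    samplers = {
        "normal": lambda: rng.gauss(0.0, 1.0),
        "cauchy": lambda: cauchy_sample(rng),
    }
    draw = samplers[noise]  # KeyError for an unknown noise type
    return [x + w * draw() for x in u]
```

The heavy tails of the Cauchy law mean that even a small w occasionally produces very large outliers, which a Gaussian HMM fitted by Baum-Welch does not model.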

The space of first derivatives of the log-likelihood function with respect to the elements of the transition probability matrix was chosen as the feature space for the kNN classifier. In Tables 1-3 the following designations are used: APD is the average percentage difference between the results of the kNN classifier and those of the traditional classifier; AP is the average percentage of sequences correctly classified by the kNN classifier.
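Under one plausible reading of these definitions, namely that APD is the percentage of correct kNN classifications minus that of the traditional classifier, averaged over the training sets, both quantities can be computed as sketched below. This reading of APD is an assumption; under it, a positive APD means the kNN classifier did better.

```python
def percent_correct(predicted, truth):
    """Percentage of sequences whose predicted class equals the true class."""
    return 100.0 * sum(p == t for p, t in zip(predicted, truth)) / len(truth)


def ap_and_apd(runs):
    """Average AP and APD over training sets.

    runs: list of (knn_pred, traditional_pred, truth) triples, one per training set.
    APD is taken here as AP(kNN) - AP(traditional), an assumed reading of the definition.
    """
    ap = [percent_correct(knn, y) for knn, _, y in runs]
    apd = [percent_correct(knn, y) - percent_correct(trad, y) for knn, trad, y in runs]
    return sum(ap) / len(ap), sum(apd) / len(apd)
```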

Table 1. Comparison of classification results under additive noise

The classification results for normally distributed noise are shown in the 2nd and 3rd columns of Table 1. As this experiment shows, the kNN classifier in the space of first derivatives of the log-likelihood function gives worse results than the traditional classifier. This is explained by the fact that the noise and the sequences have the same (normal) distribution, so the noisy observations remain normally distributed, and the Baum-Welch algorithm used for parameter estimation is tuned precisely to estimating the parameters of the observation distribution when that distribution is normal. The results for noise following the Cauchy distribution are shown in the 4th and 5th columns of Table 1. In this case the situation is reversed: the kNN classifier gives better results at certain noise levels w.

Probabilistic Substitution of a Sequence by Noise

The second variant of distortion assumed partial, probabilistic substitution of the true sequence by noise: with some probability p, a noise sample appeared in place of the true observation associated with the current hidden state. The parameters of the noise were varied across experiments.
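The substitution scheme can be sketched as a function that, with probability p, replaces each observation by a draw from a noise sampler that may depend on the current hidden state. The sampler factories `normal_noise` and `cauchy_noise_per_state` are hypothetical helpers for illustration; a state-independent sampler corresponds to the setting of Table 2, and a state-dependent one to that of Table 3.

```python
import math
import random


def substitute_by_noise(obs, states, p, draw_noise, rng):
    """With probability p, replace o_t by a noise sample drawn for hidden state q_t.

    draw_noise(state, rng) -> float. A sampler that ignores `state` gives the
    state-independent scheme; one that uses it gives state-dependent noise.
    """
    return [draw_noise(q, rng) if rng.random() < p else o for o, q in zip(obs, states)]


def normal_noise(loc, scale):
    # State-independent normal noise N(loc, scale^2)
    return lambda state, rng: rng.gauss(loc, scale)


def cauchy_noise_per_state(locs, scales):
    # State-dependent Cauchy noise: location and scale are chosen by the hidden state
    return lambda state, rng: locs[state] + scales[state] * math.tan(math.pi * (rng.random() - 0.5))
```

The hidden state path needed here is available when the sequences are simulated, since the sampler produces the states alongside the observations.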

The results for normally distributed noise are shown in the 2nd to 5th columns of Table 2. In the 2nd and 3rd columns, superimposing such noise shifted the estimates of the expectation parameters, but the traditional classifier still showed better results than the proposed one. In the next two columns, the kNN classifier consistently improves on the traditional classifier when the probability of noise appearance is p < 0.6. This is explained by the fact that, unlike in the previous case, the parameters of the noise distribution take very large values. The results for Cauchy-distributed noise are shown in the 6th and 7th columns of Table 2. In this case the kNN-based classifier has a consistent advantage.

Table 2. Comparison of classification results under probabilistic substitution of a sequence by noise that does not depend on the hidden state

Table 3. Comparison of classification results under probabilistic substitution of a sequence by noise that depends on the hidden state

Classification results for normally distributed, state-dependent noise $e_i$ (the noise that appears when the HMM is in the i-th hidden state) are shown in the 2nd and 3rd columns of Table 3. The observed sequences then have a bimodal distribution instead of the unimodal (single Gaussian) distribution that the model assumes for each hidden state. In this situation the traditional classifier is slightly worse than the proposed kNN classifier. The results for Cauchy-distributed noise $e_i$ are shown in the 4th and 5th columns of Table 3. In this case the noise substituting the original sequence differs from it only in the distribution law of the random variables. This experiment shows a consistent advantage of the proposed kNN-based method. Similar results were obtained for Cauchy-distributed noise with no location shift but with a different scale of the distribution.

Conclusion

This article has shown that the feature space generated by HMMs can be used to classify sequences generated by similar models. The first derivatives of the log-likelihood function with respect to the HMM parameters were used as features, and a kNN classifier was applied in this feature space. The experiments showed that, for similar models and distorted signals, the proposed method can improve classification quality for several of the distortion types considered, in particular Cauchy-distributed noise, while under additive normal noise the traditional classifier remained better.