How to configure the optimal size of the stateful cache?

As introduced earlier, the JBoss EJB container uses a cache of bean instances to improve the performance of stateful session beans. The cache stores active EJB instances in memory so that they are immediately available for client requests. It contains EJBs that are currently in use by a client as well as instances that were recently in use. Stateful session beans in the cache are bound to a particular client.

Finding the optimal size for the stateful cache follows the same pattern we learned with SLSBs; you just need to watch different attributes.

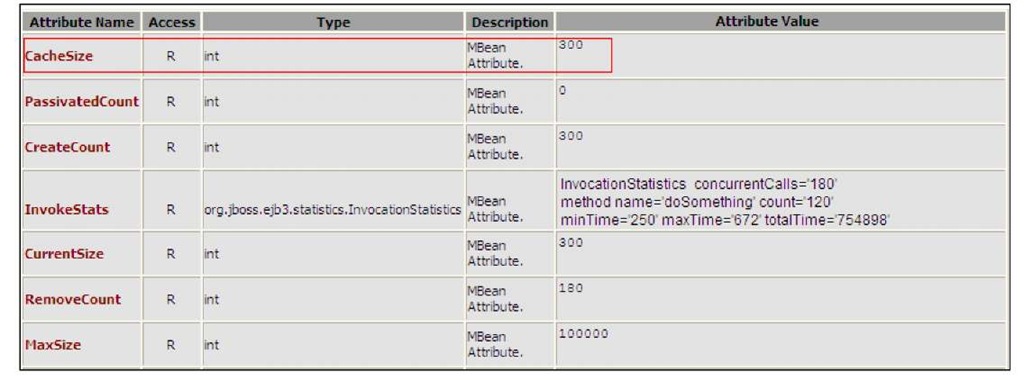

Again, open your JMX console and locate your EJB 3 unit in the jboss.j2ee domain. There you will find a few attributes of interest:

CacheSize is the current size of the stateful cache. If this value approaches the MaxSize attribute, you should consider raising the maximum number of SFSBs allowed in the cache (refer to the earlier section for information about the configuration of the stateful cache).
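The same check can also be done programmatically through the standard JMX API. The following is only a minimal sketch: the attribute names CacheSize and MaxSize come from the text above, while the class name, the 90% threshold, and the way you obtain the MBeanServerConnection are assumptions that depend on your environment. The ObjectName must be copied from your own JMX console.

```java
import javax.management.MBeanServerConnection;
import javax.management.ObjectName;

public class StatefulCacheMonitor {

    /** Reads a numeric MBean attribute; JMX failures are wrapped in an unchecked exception. */
    public static long readNumber(MBeanServerConnection server, String objectName, String attribute) {
        try {
            Object value = server.getAttribute(new ObjectName(objectName), attribute);
            return ((Number) value).longValue();
        } catch (Exception e) {
            throw new IllegalStateException("Cannot read " + attribute + " from " + objectName, e);
        }
    }

    /** True when the cache holds at least the given fraction of its maximum size. */
    public static boolean nearCapacity(long cacheSize, long maxSize, double threshold) {
        return maxSize > 0 && cacheSize >= threshold * maxSize;
    }

    /** Builds a one-line report for the stateful bean MBean with the given ObjectName. */
    public static String report(MBeanServerConnection server, String objectName) {
        long cacheSize = readNumber(server, objectName, "CacheSize");
        long maxSize = readNumber(server, objectName, "MaxSize");
        // 0.9 is an arbitrary warning threshold chosen for this sketch.
        String status = nearCapacity(cacheSize, maxSize, 0.9) ? "WARNING: close to MaxSize" : "ok";
        return "CacheSize=" + cacheSize + " MaxSize=" + maxSize + " " + status;
    }
}
```

Calling report(...) at regular intervals during a load test gives you the trend of the cache rather than a single snapshot.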

Here’s how you can poll this attribute from the twiddle command line utility (Windows users):

This is the equivalent shell for Unix readers:

Finally, you should pay attention to the RemoveCount attribute, which indicates the number of SFSBs that have been removed after use. In a well-written application, the growth of this attribute should follow the same trend as CreateCount, which is basically a counter of the SFSBs created. If that’s not your case or, worst of all, RemoveCount stays at 0, then it’s time to inform your developers that there’s a time bomb in the code.
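One way to make "follows the same trend" concrete is to sample both counters twice and compare their growth. The sketch below is purely illustrative: the class and method names are hypothetical, and the sample values in main are made up rather than taken from a real server.

```java
public class SfsbLeakCheck {

    /**
     * Fraction of the beans created between two samples that were also removed.
     * A value close to 1.0 means removals keep up with creations; a value close
     * to 0.0 means beans are created but never removed.
     */
    public static double removalRatio(long create1, long remove1, long create2, long remove2) {
        long created = create2 - create1;
        long removed = remove2 - remove1;
        // No new beans between the samples: nothing could have leaked.
        return created == 0 ? 1.0 : (double) removed / created;
    }

    public static void main(String[] args) {
        // Hypothetical samples of CreateCount and RemoveCount taken a few minutes apart.
        System.out.println("healthy: " + removalRatio(100, 90, 300, 290));
        System.out.println("leaking: " + removalRatio(100, 0, 300, 0));
    }
}
```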

Comparing SLSBs and SFSBs performance

Over the years, many dreadful legends have grown up around the performance of stateful session beans. You have probably heard vague warnings about their poor scalability or their low throughput.

If you think it over, the main difference between SLSBs and SFSBs is that stateful beans need to keep the fields contained in the EJB class in memory (unless they are passivated), so they will inevitably demand more memory from the application server.

However, a well-designed application that reduces both the size of the session and its time span (by carefully issuing a remove on the bean as soon as its activities are terminated) should have no issues at all when using SFSBs.

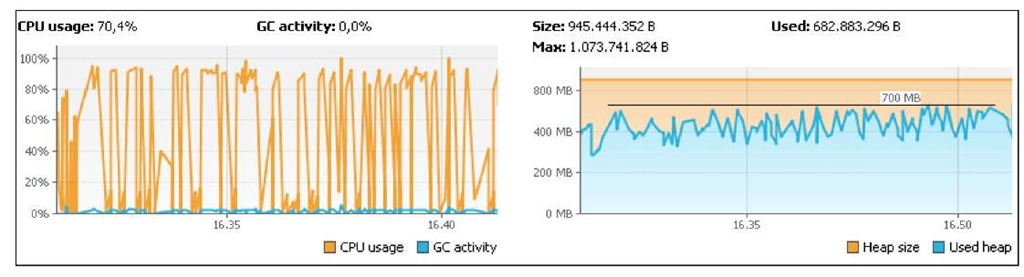

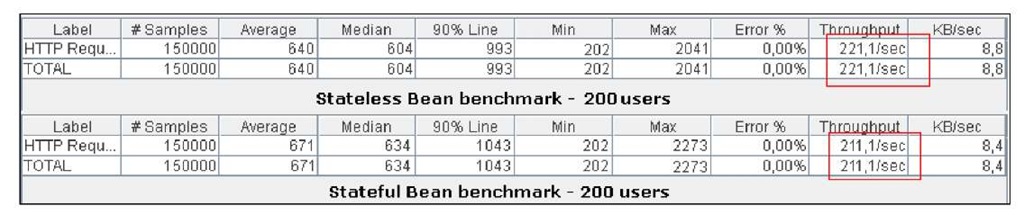

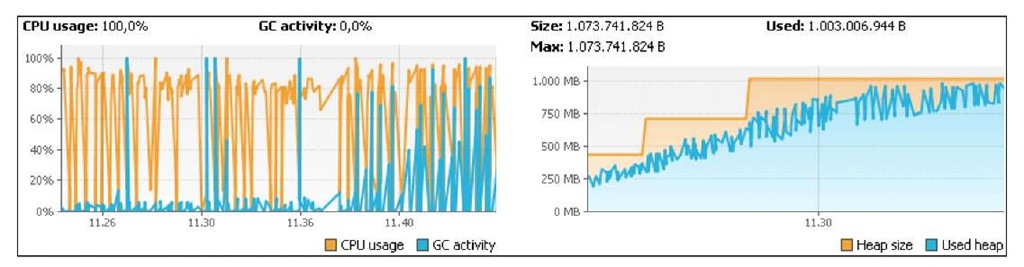

The following is a test executed on a JBoss 5.1.0 platform hosted on a 4 dual Xeon machine with 8 GB of RAM. We have set up a benchmark with 200 concurrent users, each performing a set of operations (select, insert, update) as part of a simulated web session. The following is the heap and CPU graph for the stateless bean usage:

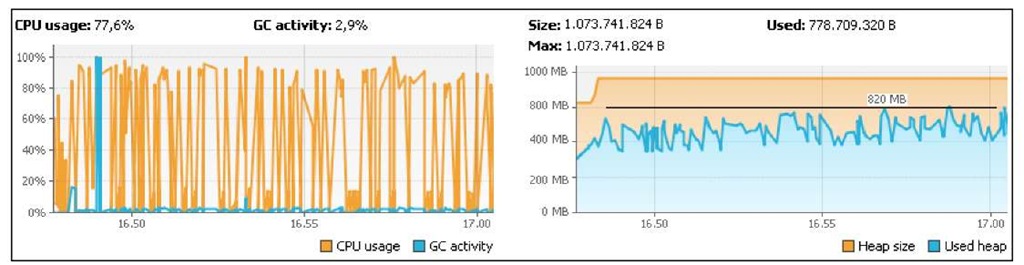

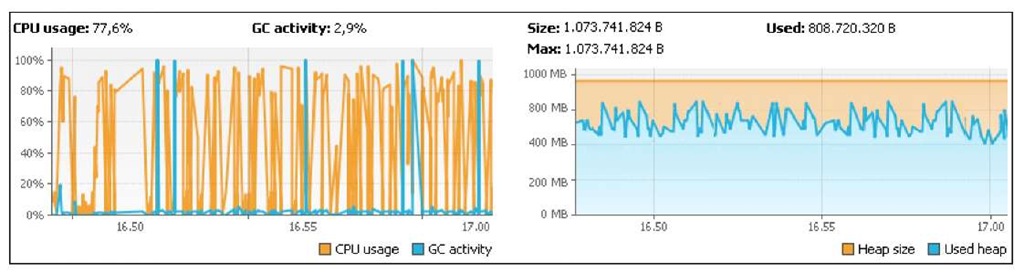

In the stateful bean benchmark, we are storing intermediate states in the EJB session as fields. The following is the VisualVM graph for the stateful bean counterpart:

As you can see, both kinds of session beans managed to complete the benchmark with a maximum of 1 GB of RAM. SFSBs sit on a higher memory threshold than their SLSB counterparts; however, the memory trend does not differ from that of the stateless session beans.

If you carefully examine the graph, you’ll see that the most evident difference between the two components is a higher CPU usage and GC activity for the SFSB. That’s natural because the EJB container needs to perform CPU-intensive operations like marshalling/unmarshalling fields of the beans.

And what about the throughput? The following graph shows the JMeter aggregate report for both tests executed. As you can see, the difference is less than you would expect (around 10%):

When things get wilder

The preceding benchmark reflects an optimal scenario where you have short-lived sessions with few items stored as fields. In such a situation it’s common that you will see little difference in throughput between the two kinds of session beans.

Adding some nasty things to this benchmark reveals a different scenario. Suppose that your EJB client calls the remove() method on the session bean only after a longer (random) amount of time, and that the session data is inflated with a 50 KB String. The memory requirements of the application would change drastically, leading to an OutOfMemoryError with the current configuration:

While this second approach simulates some evident architectural mistakes, most applications sit somewhere between these two scenarios. Ultimately, the requirements (and the performance) of your SFSBs can conceptually be thought of as the product of session time and session size (that is, session time × session size). Carefully design your architecture to reduce both factors as much as possible, and verify with a load test whether your application meets the expected requirements.
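The product of session time and session size can be turned into a rough back-of-the-envelope estimate. The sketch below assumes that the number of live sessions is approximately the session creation rate multiplied by the session duration (Little's law) and ignores any container overhead. The class name and the figures used in main are hypothetical and are not measurements from the benchmark.

```java
public class SessionFootprint {

    /**
     * Rough heap estimate for live stateful sessions:
     * live sessions = creation rate x session duration (Little's law),
     * heap = live sessions x bytes held per session.
     */
    public static long estimateBytes(double sessionsPerSecond, double sessionSeconds, long bytesPerSession) {
        double liveSessions = sessionsPerSecond * sessionSeconds;
        return Math.round(liveSessions * bytesPerSession);
    }

    public static void main(String[] args) {
        long perSession = 50L * 1024; // hypothetical 50 KB of state per session
        // Hypothetical load: 50 new sessions per second.
        System.out.println("2-minute sessions: " + estimateBytes(50, 120, perSession) + " bytes");
        System.out.println("1-minute sessions: " + estimateBytes(50, 60, perSession) + " bytes");
    }
}
```

Halving either factor halves the estimate, which is why removing the bean promptly matters as much as keeping its fields small.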

Do you have more than 1024 concurrent users?

Some operating systems, such as Linux, limit the number of file descriptors that any process may open. The default limit is 1024 per process. The Java Virtual Machine opens many files in order to read in the classes required to run an application, and a file descriptor is also used for each socket that is opened. This limit can therefore prevent the server from handling a larger number of concurrent clients.

On Linux systems you can increase the number of allowable open file handles in the file /etc/security/limits.conf. For example:

This would allow up to 20000 file handles to be open by the server user.
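An entry matching that description could look like the following fragment. It is only a sketch: the account name jboss is an assumption and stands for whichever user runs your application server.

```
# /etc/security/limits.conf
# <domain>  <type>  <item>   <value>
jboss       soft    nofile   20000
jboss       hard    nofile   20000
```

The new limit applies to sessions opened after the change; you can check it by running ulimit -n as that user.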

Is it possible that Stateful Beans are faster than Stateless Beans?

There are some unofficial benchmarks which show that, in some scenarios, SFSBs can outperform SLSBs. If you think it over, the application server tries to optimize the usage of SLSB instances: it first searches for a free bean in the pool, and then either waits until an "in-use" instance is returned to the pool or creates a new one. There is no such optimization for SFSBs, so the application server simply routes all requests to the same instance, which is sometimes faster than the SLSB approach.

One thing you could try, provided you have plenty of memory available on your application server, is disabling stateful session bean passivation. This will avoid the cost of marshalling/unmarshalling objects that have been idle for a while, thus introducing a tradeoff between memory and performance.

You have several options to disable passivation: the simplest is to set a removal timeout smaller than the idle timeout (but greater than zero) in the file deploy/ejb3-interceptors-aop.xml.

You can also disable passivation at EJB level by changing the stateful cache strategy to the NoPassivationCache strategy.

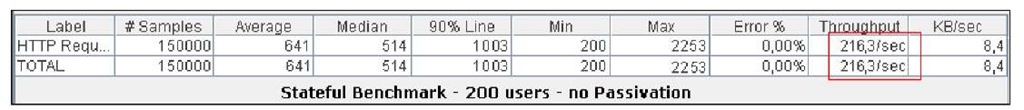

The following is the aftermath of our new benchmark with passivation disabled:

You can also disable passivation at EJB level by changing the stateful cache strategy to the NoPassivationCache strategy. This requires simply to add the annotation @ Cache(org.jboss.ejb3.cache.NoPassivationCache.class) at class level.

And the corresponding JMeter aggregate report:

Three things have basically changed:

1. The amount of memory demanded by the application has grown by about 5-7%. Consider, however, that in this benchmark the EJB session lasted about one minute. Longer sessions could lead to an OutOfMemoryError with this configuration (1 GB RAM).

2. Garbage collector activity has increased as well, as we are getting closer to the maximum memory available.

3. The overall throughput has increased since the lifecycle for the SFSB is now much simpler without passivation.

In conclusion, this benchmark shows that, under some circumstances, well-designed SFSBs can perform roughly as well as SLSBs (or even outperform them). However, without an increase in the application server memory, this improvement will be partly lost in additional garbage collector cycles.

Session Beans and Transactions

One of the most evident differences between an EJB and a simple RMI service is that EJBs are transactional components. Simply put, a transaction is a grouping of work that represents a single unit or task.

If you are using Container Managed Transactions (the default), the container will automatically start a transaction for you because the default transaction attribute is REQUIRED. This guarantees that the work performed by the method is within a global transaction context.

However, transaction management is an expensive affair, and you need to verify whether your EJB methods really need a transaction. For example, a method that simply returns a list of objects to the client usually does not need to be enlisted in a transaction.

For this reason, it’s considered a tuning best practice to remove unneeded transactions from your EJBs. Unfortunately, this is often underestimated by developers, who find it easier to define a generic transaction policy for all methods:

Using EJB 3 annotations, you have no excuse for this negligence: you can explicitly disable transactions by setting the transaction attribute to NOT_SUPPORTED with a simple annotation, as follows:
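A minimal sketch of such an annotation is shown here. The Catalog interface, the CatalogBean class, and their methods are hypothetical names used only for illustration. The annotations themselves come from the standard javax.ejb package, so the EJB 3 API must be on the classpath to compile it.

```java
import java.util.Arrays;
import java.util.List;
import javax.ejb.Stateless;
import javax.ejb.TransactionAttribute;
import javax.ejb.TransactionAttributeType;

// Hypothetical business interface, used only for this example.
interface Catalog {
    List<String> listItems();
    void addItem(String item);
}

@Stateless
public class CatalogBean implements Catalog {

    // Read-only method: the container will not start or propagate a transaction here.
    @TransactionAttribute(TransactionAttributeType.NOT_SUPPORTED)
    public List<String> listItems() {
        return Arrays.asList("book", "pen");
    }

    // No annotation: the default attribute (REQUIRED) still applies to this method.
    public void addItem(String item) {
        // a real implementation would persist the item here
    }
}
```

Placing the annotation on individual methods rather than on the class keeps the default behavior for the methods that do modify data.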

JBoss Transaction patch

One potential performance bottleneck of the JBoss Transaction service is the TransactionImple.isAlive check. A patch has been released by the JBoss team, which can be applied on top of the application server.

Customizing JBoss EJB container policies

The preceding tip is valid across any application server. You can, however, gain an additional boost in performance by customizing the JBoss EJB container. As a matter of fact, the EJB container is tightly integrated with the Aspect Oriented Programming (AOP) framework. Aspects allow developers to modularize the code base more easily, providing a cleaner separation between application logic and system code.

If you take a look in the ejb3-interceptors-aop.xml configuration file, you will notice that every domain contains a list of Interceptors, which are invoked on the EJB instance. For example, consider the following key section from the stateless bean domain:

Interceptors extend the org.jboss.ejb3.aop.AbstractInterceptor class and are invoked separately on the bean instance. Supposing you are not using Transactions in your EJBs, you might consider customizing the EJB container by commenting out the interceptors which are in charge of propagating the Transactional Context.

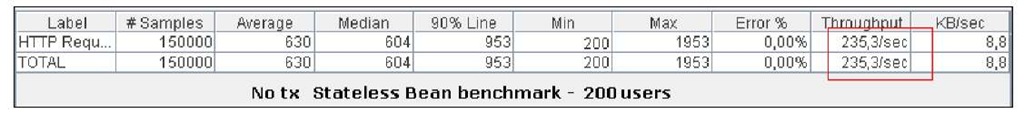

Running another benchmark on your stateless beans reveals an interesting 10% boost in the throughput of your application:

![tmp39-164_thumb[2] tmp39-164_thumb[2]](http://what-when-how.com/wp-content/uploads/2011/09/tmp39164_thumb2_thumb.jpg)

![tmp39-167_thumb[2] tmp39-167_thumb[2]](http://what-when-how.com/wp-content/uploads/2011/09/tmp39167_thumb2_thumb.png)

![tmp39-168_thumb[2] tmp39-168_thumb[2]](http://what-when-how.com/wp-content/uploads/2011/09/tmp39168_thumb2_thumb.png)

![tmp39-169_thumb[2] tmp39-169_thumb[2]](http://what-when-how.com/wp-content/uploads/2011/09/tmp39169_thumb2_thumb.jpg)