Choosing the right garbage collector for your application

Once you have learnt the basics of garbage collector algorithms, you can work out a strategy for choosing the one best suited to your application.

The best choice is usually found after some trials. However, the following matrix will guide the reader to the available alternatives and their suggested use:

| Collector | Best for |
|---|---|
| Serial | Single-processor machines and small heaps. |
| Parallel | Multiprocessor machines and applications requiring high throughput. |
| Concurrent | Fast-processor machines and applications with strict service level agreements. |

As you can see, once you exclude the serial collector, which fits smaller applications, the real choice is between the parallel collector and the concurrent collector.

If your application is deployed on a multiprocessor machine and needs to complete the highest possible number of transactions in a time window, then the parallel collector is a safe bet. This is the case for batch processing, billing, and payroll applications.

Be aware that the parallel collector intensively uses the processors of the machine on which it is running, so it might not be fit for large shared machines (like SunRays) where no single application should monopolize the CPU.

If, on the other hand, you have the fastest processors on the market and you need to serve every single request within a strict time limit, then you can opt for the concurrent collector.

The concurrent collector is particularly suited to applications that have a relatively large set of long-lived data, since it can reclaim older objects without a long pause. This is generally the case for Web applications where a considerable amount of memory is stored in the HttpSession.

The G1 garbage collector

The G1 garbage collector was first released in update 14 of Sun’s JDK 1.6. It is targeted at server environments with multi-core CPUs and large amounts of memory, and aims to minimize delays and stop-the-world collections by replacing them with concurrent garbage collection performed while normal processing is still going on.

In the G1 garbage collector there is no physical separation between the younger and older generations. Rather, the heap memory is organized into smaller parts called Regions. Each Region is in turn broken down into 512-byte pieces called cards. For each card, there is one entry in the global card table, as depicted by the following image:

This association helps to track which cards have been modified (the "remembered set"), so that G1 can concentrate its collection and compaction activity first on the areas of the heap that are most likely to be full of reclaimable objects, which improves its efficiency.

G1 uses a pause prediction model to meet user-defined pause time targets. This helps minimize pauses that occur with the mark and sweep collector, and should show good performance improvements with long running applications.

To enable the G1 garbage collector, add the following switch to your JVM:
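On the JDK 6 builds discussed here, G1 was still an experimental option, so the switches were typically `-XX:+UnlockExperimentalVMOptions -XX:+UseG1GC` (later JDKs need only `-XX:+UseG1GC`). To confirm which collector the JVM actually picked up, one option is to ask the platform MXBeans. The following is a minimal sketch; the class name `ActiveCollectors` is made up for illustration, and the exact collector names printed depend on the JVM vendor and version.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;
import java.util.ArrayList;
import java.util.List;

/** Lists the garbage collectors registered by the running JVM. */
class ActiveCollectors {

    /** Returns the names reported by the platform GC MXBeans. */
    static List<String> names() {
        List<String> names = new ArrayList<String>();
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            names.add(gc.getName());
        }
        return names;
    }

    public static void main(String[] args) {
        // With G1 enabled, HotSpot typically reports names that start with "G1".
        for (String name : names()) {
            System.out.println(name);
        }
    }
}
```

Running this class with and without the G1 switches should show different collector names, which is a quick sanity check that the option was applied.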

In terms of GC pause times, Sun engineers state that G1 is sometimes better and sometimes worse than the CMS collector.

As G1 is still under development, the goal is to make G1 perform better than CMS and eventually replace it in a future version of Java SE (the current target is Java SE 7). While the G1 collector is successful at limiting total pause time, it’s still only a soft real-time collector. In other words, it cannot guarantee that it will not impact the application threads’ ability to meet deadlines, all of the time. However, it can operate within a well-defined set of bounds that make it ideal for soft real-time systems that need to maintain high-throughput performance.

In the tests we have performed throughout this topic, we noticed frequent core dumps when adopting the G1 garbage collector on JBoss AS 5.1 running on JVM 1.6 update 20. For this reason, we don’t advise employing this GC algorithm for any of your applications in a production environment at the moment, at least until the new 1.7 release of Java is available (expected between the last quarter of 2010 and the beginning of 2011).

Debugging garbage collection

Once you have a solid knowledge of garbage collection and its algorithms, it’s time to measure the performance of your collections. We will be intentionally brief in this section, as there are quite a lot of tools that simplify the analysis of the garbage collector, as we will see in the next section.

Keep this information as a reference if you haven’t had the chance to use other tools, or if you simply prefer a low-level inspection of your garbage collector’s performance.

The basic command line argument for debugging your garbage collector is -verbose:gc, which prints information at every collection.

For example, here is output from a server application:

Here we see two minor collections and one major one. The first two numbers in each row indicate the size of live objects before and after garbage collection:

The number in parentheses (765444K) (in the first line) is the total available space in the Java heap, excluding the space in the permanent generation. As you can see from the example, the first two minor collections took about half a second, while the third, major collection alone required about one and a half seconds to reclaim memory from the whole heap.
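If you want to process such lines yourself, a small parser is enough. The sketch below assumes the classic pre-JDK 9 `-verbose:gc` line shape, `[GC before->after(total), duration secs]`; the class name `GcLogLine` and the sample values in `main` are illustrative and are not taken from the test described above.

```java
import java.util.regex.Matcher;
import java.util.regex.Pattern;

/** Parses one line of classic (pre-JDK 9) -verbose:gc output. */
class GcLogLine {
    // Expected shape: [GC 325407K->83000K(776768K), 0.2300771 secs]
    // An optional cause such as "(System)" after the GC type is tolerated.
    private static final Pattern LINE = Pattern.compile(
            "\\[(Full GC|GC)(?: \\([^)]*\\))? (\\d+)K->(\\d+)K\\((\\d+)K\\), (\\d+\\.\\d+) secs\\]");

    final boolean full;   // true for a major ("Full GC") collection
    final long beforeKb;  // occupied heap before the collection
    final long afterKb;   // occupied heap after the collection
    final long heapKb;    // heap capacity shown in parentheses
    final double seconds; // duration of the collection

    private GcLogLine(boolean full, long beforeKb, long afterKb, long heapKb, double seconds) {
        this.full = full;
        this.beforeKb = beforeKb;
        this.afterKb = afterKb;
        this.heapKb = heapKb;
        this.seconds = seconds;
    }

    /** Parses a single log line or throws IllegalArgumentException. */
    static GcLogLine parse(String line) {
        Matcher m = LINE.matcher(line.trim());
        if (!m.matches()) {
            throw new IllegalArgumentException("Unrecognized GC log line: " + line);
        }
        return new GcLogLine("Full GC".equals(m.group(1)),
                Long.parseLong(m.group(2)), Long.parseLong(m.group(3)),
                Long.parseLong(m.group(4)), Double.parseDouble(m.group(5)));
    }

    /** Amount of memory freed by this collection, in kilobytes. */
    long reclaimedKb() {
        return beforeKb - afterKb;
    }

    public static void main(String[] args) {
        GcLogLine gc = parse("[GC 325407K->83000K(776768K), 0.2300771 secs]");
        System.out.println((gc.full ? "major" : "minor") + " collection reclaimed "
                + gc.reclaimedKb() + "K in " + gc.seconds + " s");
    }
}
```

The pattern deliberately covers only this one line shape; output produced with additional flags would need a broader pattern.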

If you want additional information about the collections, you can use the flag -XX:+PrintGCDetails, which prints information about the individual areas of the heap (young and tenured).

Here’s an example of the output from -XX:+PrintGCDetails:

The output indicates, on the left side of the log, that the minor collection recovered about 88 percent of the young generation:

and took about 65 milliseconds.

The second part of the log shows how much the entire heap was reduced:

and the additional overhead for the collection, calculated in 12 milliseconds.

The above switches print collection information to the standard output. If you want to separate your garbage collector logs from the other application logs, you can use the switch -Xloggc:<file>, which redirects the information to a separate log file.

In addition to the JVM flags that can be used to debug garbage collector activity, we would like to mention the jstat command line utility, which can provide complete statistics about the performance of your garbage collector. This handy tool is particularly useful if you don’t have a graphical environment for running VisualVM’s garbage collector plugins.
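When neither jstat nor a graphical tool is at hand, similar counters can be read from inside the JVM through the standard `java.lang.management` API. This is a rough sketch rather than a jstat replacement; the class name `GcStats` is hypothetical.

```java
import java.lang.management.GarbageCollectorMXBean;
import java.lang.management.ManagementFactory;

/** Reads cumulative garbage collection counters from the running JVM. */
class GcStats {

    /** Total number of collections run so far by all collectors. */
    static long totalCollections() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long count = gc.getCollectionCount(); // -1 means "undefined"
            if (count > 0) {
                total += count;
            }
        }
        return total;
    }

    /** Approximate accumulated collection time in milliseconds. */
    static long totalCollectionMillis() {
        long total = 0;
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            long millis = gc.getCollectionTime(); // -1 means "undefined"
            if (millis > 0) {
                total += millis;
            }
        }
        return total;
    }

    public static void main(String[] args) {
        for (GarbageCollectorMXBean gc : ManagementFactory.getGarbageCollectorMXBeans()) {
            System.out.printf("%-25s collections=%d time=%dms%n",
                    gc.getName(), gc.getCollectionCount(), gc.getCollectionTime());
        }
    }
}
```

Sampling these two totals at regular intervals and printing the differences gives a crude view of how often, and for how long, the collector runs.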

Making good use of the memory

Before showing a concrete tuning example, we will add a last section to alert you to the danger of creating large Java objects in your applications. We will also give some advice regarding what to do with OutOfMemory errors.

Avoid creating large Java objects

One of the most harmful things you could do to your JVM is allocating objects which are extremely large. The definition of a large object is often ambiguous, but objects whose size exceeds 500KB are generally considered to be large.

One of the side effects of creating such large objects is the increased heap fragmentation which can potentially lead to an OutOfMemory problem.

Heap fragmentation occurs when a Java application allocates a mix of small and large objects that have different lifetimes. When you have a largely fragmented heap, the immediate effect is that the JVM triggers long GC pause times to force the heap to compact.

Consider the following example:

In this small JSP fragment, you are allocating an object of type HugeObject, which occupies around 1 MB of memory since it holds a large XML file flattened to a text String. The object is stored in the HttpSession using a unique identifier, so that every new request adds a new item to the HttpSession.

If you load test your Web application, you will see that within a few minutes the application server becomes unresponsive and eventually issues an OutOfMemory error. On a tiny JBoss AS installation with -Xmx256m, you should be able to allocate about 50 Huge Objects.
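The pattern just described can be approximated outside a servlet container. The sketch below is not the JSP fragment itself: it assumes a plain `Map` as a stand-in for the HttpSession, and the `HugeObject` and `SessionFiller` classes are hypothetical reconstructions for illustration only.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

/** Hypothetical stand-in for an object wrapping a large XML document as text. */
class HugeObject {
    private final String xml;

    HugeObject(int chars) {
        // Filler text; the real heap footprint depends on how the JVM stores Strings.
        char[] buffer = new char[chars];
        Arrays.fill(buffer, 'x');
        this.xml = new String(buffer);
    }

    int length() {
        return xml.length();
    }
}

/** Simulates a request handler that stores a new large object on every call. */
class SessionFiller {

    /** Mirrors session.setAttribute(uniqueId, hugeObject); returns the generated key. */
    static String addHugeObject(Map<String, Object> session, int chars) {
        String id = UUID.randomUUID().toString();
        session.put(id, new HugeObject(chars));
        return id;
    }

    public static void main(String[] args) {
        Map<String, Object> session = new HashMap<String, Object>();
        // Each simulated request adds another large entry that is never removed.
        for (int request = 0; request < 3; request++) {
            addHugeObject(session, 512 * 1024);
        }
        System.out.println("Objects retained in session: " + session.size());
    }
}
```

Because every key is unique, nothing is ever overwritten, so the retained memory grows with the number of requests.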

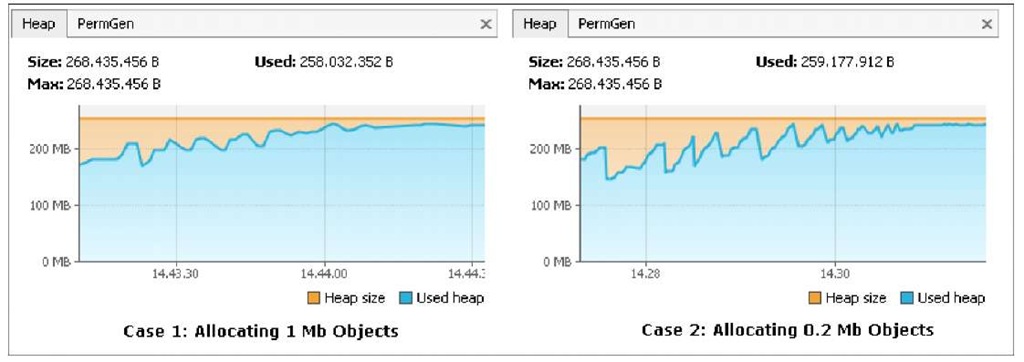

Now consider the same application using smaller objects, for example a 0.2 MB object holding a shorter XML file:

This time the application server takes a bit longer to fail, as it tries several times to compact the heap and run a full garbage collection. In the end, you were able to allocate about 300 Smaller Objects, adding up to 60 MB, that is, 10 MB more than you could allocate with the Huge Object.

The following screenshot documents this test, monitored by VisualVM:

Summing up, the best practices when dealing with large objects are:

• Avoid creating objects over 500 KB in size. Try to split the objects into smaller chunks. For example, if the objects contain a large XML file, you could try to split them into several fragments.

• If this is not possible, try to allocate the large objects so that they share the same lifetime. Since these allocations will all be performed by a single thread and very closely spaced in time, they will typically end up stored as a contiguous block in the Java heap.

• Consider adding the option -XX:+UseParallelOldGC, which can also reduce heap fragmentation by compacting the tenured generation.
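As an illustration of the first practice, a large text payload can be cut into bounded fragments before it is stored. This is only a sketch: the `Chunker` class is hypothetical, and it splits by character count, whereas a real XML document would more sensibly be split at element boundaries.

```java
import java.util.ArrayList;
import java.util.List;

/** Splits a large text payload into fragments of bounded size. */
class Chunker {

    /** Returns consecutive fragments of at most chunkChars characters each. */
    static List<String> split(String text, int chunkChars) {
        if (chunkChars <= 0) {
            throw new IllegalArgumentException("chunkChars must be positive");
        }
        List<String> chunks = new ArrayList<String>();
        for (int start = 0; start < text.length(); start += chunkChars) {
            int end = Math.min(text.length(), start + chunkChars);
            // On JDK 6, substring() shares the parent's character array;
            // new String(...) copies just this fragment so the parent can be freed.
            chunks.add(new String(text.substring(start, end)));
        }
        return chunks;
    }

    public static void main(String[] args) {
        for (String chunk : split("abcdefghij", 4)) {
            System.out.println(chunk);
        }
    }
}
```

Each fragment can then be stored and released independently instead of pinning one large contiguous block.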

Handling ‘Out of Memory’ errors

The infamous OutOfMemory error message has appeared at least once on the console of every programmer. It seems a very descriptive error, so you might be tempted to go for the quickest solution, which is increasing the heap size of the application server. In some circumstances that could be just what you need; however, the OutOfMemory error is often the symptom of a problem that resides somewhere in your code. Let’s first analyze the possible variants of this error message:

The message java.lang.OutOfMemoryError: Java heap space indicates that an object could not be allocated in the Java heap. The problem can be as simple as a configuration issue, where the specified heap size (or the default size, if not specified) is insufficient for the application. In other cases, and in particular for a long-lived application, the message might be an indication that the application is holding references to objects, and this prevents the objects from being garbage collected. This is the Java language equivalent of a memory leak.
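A typical way of holding on to objects unintentionally is a long-lived collection that only ever grows. The sketch below is a deliberately simplified illustration; the `LeakyRegistry` class and its method names are invented for this example.

```java
import java.util.ArrayList;
import java.util.List;

/** Illustrates a leak: a static collection that is only ever appended to. */
class LeakyRegistry {
    // Reachable for as long as the class is loaded, so nothing added here
    // can be garbage collected until it is explicitly removed.
    private static final List<byte[]> RETAINED = new ArrayList<byte[]>();

    /** Simulates handling one request that "remembers" some data forever. */
    static void handleRequest() {
        RETAINED.add(new byte[1024]);
    }

    static int retainedCount() {
        return RETAINED.size();
    }

    /** The fix: release references once they are no longer needed. */
    static void clear() {
        RETAINED.clear();
    }

    public static void main(String[] args) {
        for (int i = 0; i < 1000; i++) {
            handleRequest();
        }
        System.out.println("Buffers still referenced: " + retainedCount());
    }
}
```

As long as the references stay in the list, increasing the heap size only postpones the error.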

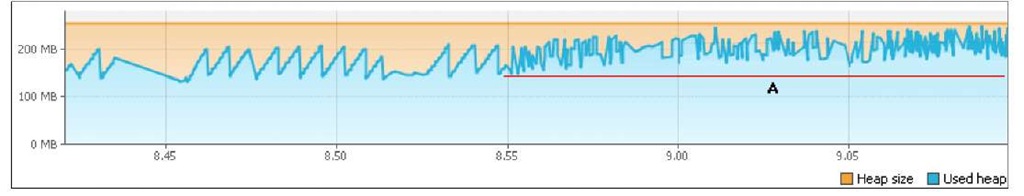

The following screenshot depicts an example of a memory leak. As you can see, the JVM is not able to keep a steady level and keeps growing both the upper limit and the lower limit (A):

The detail message PermGen space indicates that the permanent generation is full. The permanent generation is the area of the heap where class and method objects are stored. If an application loads a very large number of classes, then the size of the permanent generation might need to be increased using the -XX:MaxPermSize option.
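To see how full this area is at runtime, you can inspect the JVM memory pools through `java.lang.management`. The sketch below assumes HotSpot pool names: pools containing "Perm Gen" on the JVMs discussed here, and "Metaspace" on JDK 8 and later, where the permanent generation no longer exists. The class name `ClassMetadataPools` is hypothetical.

```java
import java.lang.management.ManagementFactory;
import java.lang.management.MemoryPoolMXBean;
import java.lang.management.MemoryUsage;

/** Prints usage of the memory pools that hold class metadata. */
class ClassMetadataPools {

    /** True for pool names that HotSpot uses for class metadata (assumed naming). */
    static boolean holdsClassMetadata(String poolName) {
        return poolName.contains("Perm Gen") || poolName.contains("Metaspace");
    }

    public static void main(String[] args) {
        for (MemoryPoolMXBean pool : ManagementFactory.getMemoryPoolMXBeans()) {
            MemoryUsage usage = pool.getUsage(); // null if the pool is not valid
            if (usage == null || !holdsClassMetadata(pool.getName())) {
                continue;
            }
            // getMax() returns -1 when no maximum is defined for the pool.
            System.out.println(pool.getName() + ": used=" + usage.getUsed() / 1024
                    + "K max=" + (usage.getMax() < 0 ? "undefined" : usage.getMax() / 1024 + "K"));
        }
    }
}
```

Watching the used value across redeployments is a simple way to spot the growth described in the next paragraphs.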

Why is the Perm Gen Space usually exhausted after redeploying an application?

Every time you deploy an application, the application is loaded using its own classloader. Simply put, a classloader is a special class that loads .class files from jar files. When you undeploy the application, the classloader is discarded and all the classes that it loaded should be garbage collected sooner or later.

The problem is that Web containers do not garbage collect the classloader itself and the classes it loads. Each time you reload the webapp context, more copies of these classes are loaded, and as these are stored in the permanent heap generation, it will eventually run out of memory.

The detail message Requested array size exceeds VM limit indicates that the application (or APIs used by that application) attempted to allocate an array that is larger than the heap size. For example, if an application attempts to allocate an array of 512 MB but the maximum heap size is 256 MB, then an OutOfMemory error will be thrown with the reason Requested array size exceeds VM limit. If this object allocation is intentional, then the only way to solve this issue is to increase the maximum Java heap size.
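The following minimal sketch provokes an oversized array allocation and reports the resulting error message. The class name `ArrayLimitDemo` is made up, and the exact detail message ("Requested array size exceeds VM limit" or "Java heap space") depends on the JVM, the array length, and the configured heap.

```java
/** Attempts an array allocation and reports the outcome as text. */
class ArrayLimitDemo {

    /** Returns "allocated N" on success, or the OutOfMemoryError detail message. */
    static String tryAllocate(int length) {
        try {
            long[] data = new long[length];
            return "allocated " + data.length;
        } catch (OutOfMemoryError e) {
            // Catching OutOfMemoryError is acceptable in a demo; production
            // code should rarely try to recover from it.
            return "OutOfMemoryError: " + e.getMessage();
        }
    }

    public static void main(String[] args) {
        System.out.println(tryAllocate(10));
        System.out.println(tryAllocate(Integer.MAX_VALUE));
    }
}
```

The second call asks for roughly 16 GB of contiguous memory, which will fail on any typical heap configuration.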

Finding the memory leak in your code

If you find that the OutOfMemory error is caused by a memory leak, the next question is, how do we find where the problem is in your code? Searching for a memory leak can sometimes be as hard as searching for a needle in a haystack, at least without the proper tools. Let’s see what VisualVM can do for you:

1. Start VisualVM and connect it to your JBoss AS instance, which is running the leaking application. As we will see in a minute, detecting the cause of a memory leak with VisualVM is a simple three-step procedure.

2. At first you need to know which classes are causing the memory leak. In order to do this, you need to start a Profiler Memory session, taking care to select in the settings Record allocations stack traces.

3. Start profiling and wait a while until a table of the different classes, with their instance counts and total byte sizes, is displayed. At this point you need to take two snapshots of the objects: the first one with a clean memory state and the second one after the memory leak has occurred (the natural assumption is that the memory leak is clearly reproducible).

4. Now we have two snapshots displayed in the left side pane:

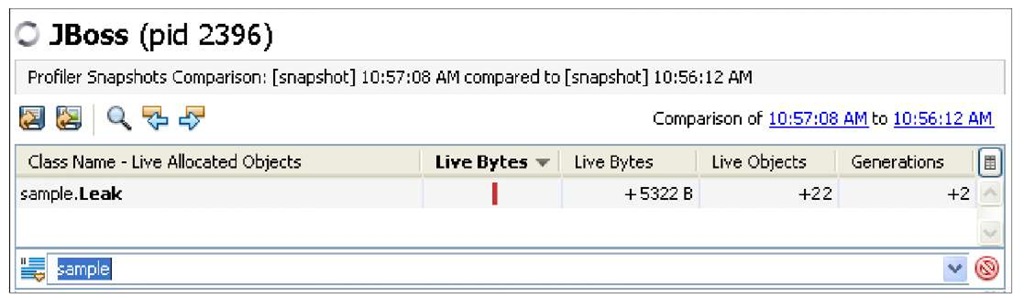

5. Select both of these snapshots (in the left side pane, you can select multiple items by holding the Ctrl key), right click, and select Compare. A comparison tab will be opened in the right side pane. That tab displays the items whose count has increased between the first and second snapshot.

As you can see from the screenshot, the class sample.Leak is the suspect for the memory leak.

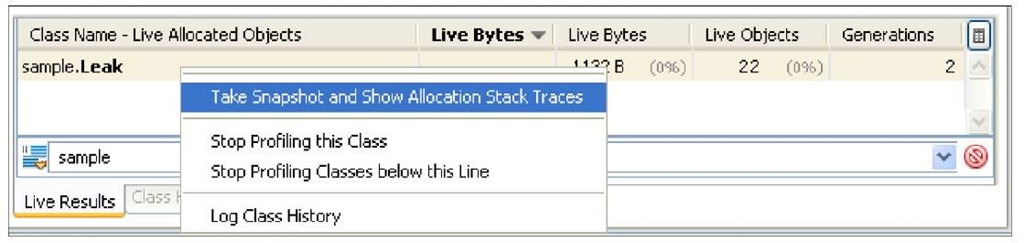

6. Now that you have a clue, you need to know where this class has been instantiated. To do this, go to the Profiler tab again. Add a filter so that you can easily identify the class among the others, then right click and select Take snapshot and show allocation stack traces.

7. One more snapshot is generated. This time, an additional tab named Allocation Stack Trace is available in the right side pane. By selecting it, you can check the different places where this particular item is instantiated and its percentage of the total count. In this example, all memory leaks are caused by the leak.jsp page:

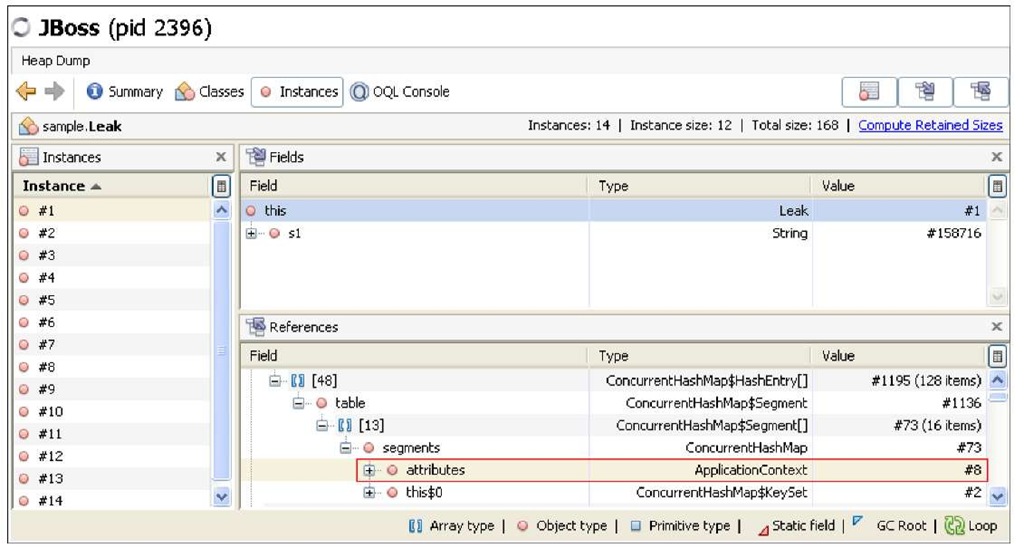

8. Good. So now you know the class that is potentially causing the leak and where it has been instantiated. You can now complete your analysis by discovering which class is holding a reference to the leaked class.

9. Switch to the Monitor tab and take a heap dump of the application. Once the dump is completed, filter on the sample.Leak class and go to the Classes view. There you will find the objects that are holding references to the leaked objects.

In this example, the ApplicationContext of a web application is the culprit as the unfortunate developer forgot to remove a large set of objects.

![tmp39-72_thumb[2][2] tmp39-72_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2011/09/tmp3972_thumb22_thumb.jpg)

![tmp39-74_thumb[2][2] tmp39-74_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2011/09/tmp3974_thumb22_thumb.jpg)

![tmp39-79_thumb[2][2] tmp39-79_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2011/09/tmp3979_thumb22_thumb.png)

![tmp39-80_thumb[2][2] tmp39-80_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2011/09/tmp3980_thumb22_thumb.jpg)

![tmp39-86_thumb[2][2] tmp39-86_thumb[2][2]](http://what-when-how.com/wp-content/uploads/2011/09/tmp3986_thumb22_thumb.jpg)