Abstract

In this topic, a novel approach to building behavior-rich interactive 3D Web applications is presented. The approach, called Flex-VR, enables building configurable 3D applications in which content can be relatively easily created and modified by common users. Flex-VR applications are based on configurable content, i.e., content that may be interactively or automatically configured based on a library of components. Configuring application content from components simplifies content creation, allowing users without programming skills to perform this task efficiently. Experienced users (programmers and 3D designers) can add new components to the library, thus extending the capabilities of the system. In this topic, an overview of the Flex-VR approach is provided, and two important elements of Flex-VR are described in detail: Flex-VR content structuralization and the Flex-VR content model. A number of design patterns that enable configuration of complex content structures, as well as an example Flex-VR application, are also presented.

Introduction

One of the main problems that currently limit the wide, everyday use of 3D applications is the difficulty of creating high-quality, meaningful interactive 3D content. Presently available 3D content creation methods are conceptually complex and time-consuming, and they require significant technical expertise and sophisticated tools. 3D content can be arbitrarily complex, but even simple interactive content requires a significant level of expertise and effort, which includes at least creating 3D models through scanning or modeling, designing animations, assembling 3D models and animations into virtual scenes, and programming the behavior of these scenes.

At the same time, practical 3D applications require enormous amounts of complex content. Regardless of the employed rendering or interaction techniques, the content is what a user actually perceives. In most cases, the content must be created by domain experts (e.g., museum curators, school teachers, TV technicians, sales experts), who cannot be expected to have experience in 3D programming or design. There is therefore an obvious and pressing need to simplify content creation.

Simplification of 3D content creation can be achieved in two ways: by partially eliminating users from the process through automation, or by supporting users through configuration. Automation is applicable only to well-defined content. This may be a physical object or environment, which can be scanned with an automatic 3D scanner, or content described in some other way, which can be automatically converted to 3D/VR content, e.g., a dataset visualized through a 3D interface. Automation of 3D content creation is important, but it does not solve the problem of creating arbitrary content and involving domain experts in this process.

In contrast, configuration enables full involvement of domain experts and gives them the ability to create and modify custom content. The term “configuring” is used here in the sense of “arranging constituent parts or elements.” With configuration, the content creation process is simplified by defining specific rules that significantly reduce the amount and complexity of information a designer has to provide, while preserving the general flexibility of the achievable results. By analogy, using a text processor to create a text document constrains what users can do, but it is much easier than writing the set of commands that control a printer, and the achievable results are in most cases satisfactory.

In this topic, we describe a novel approach to building interactive 3D Web applications. The approach, called Flex-VR, enables building configurable 3D Web applications, in which content can be relatively easily created and modified by common users. The topic is organized as follows. Section 5.2 provides an overview of the state of the art in 3D content creation and programming. Section 5.3 contains an overview of the Flex-VR approach. In Sect. 5.4, the Beh-VR structuralization model and the VR-BML behavior modeling language are described in detail. In Sect. 5.5, the Flex-VR content model is presented. Section 5.6 provides an example of a Flex-VR application. Finally, Sect. 5.7 concludes the topic.

Preparation of interactive 3D Web content involves three steps: creating 3D models and animations, assembling the models into virtual scenes, and programming the scenes’ behavior. The increasing availability of automatic or semi-automatic 3D scanning tools helps in acquiring accurate 3D models of real objects or interiors [15, 24]. Progress is still needed to make the process fully automatic, to enable scanning of objects with arbitrary shapes and of animated objects, and to acquire advanced surface properties, but the field is advancing very quickly.

Designers can use 3D design packages such as Autodesk’s 3ds Max, Maya, Softimage, or open source Blender to refine or enhance the scanned models and to create imaginary objects. The same tools can be used to assemble 3D objects into complex virtual scenes.

Programming the behavior of a virtual scene is usually the most challenging task. In the VRML/X3D standards [12, 13], behavior specification is based on the dataflow paradigm. Each node can have a number of input fields (eventIn) and output fields (eventOut) that receive and send events. The fields can be interconnected by routes, which pass events between the nodes. For example, a touch sensor node may generate an event, which is routed to a script that implements some logic. As a result of the event processing, the script may generate another event, which may be routed to some execution unit, e.g., to initiate a time sensor, which is further connected to a position interpolator, which may in turn be connected to a transformation node containing some geometry. In this way, activation of the touch sensor may start an animation of some geometry in the scene.
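The event cascade described above can be illustrated with a minimal Python sketch. It is not VRML/X3D code and does not reflect how a real browser implements routing: the `Node` and `Scene` classes, the `dispatch` method, the script fields `clicked` and `start`, and the simulated time sensor (which emits three fractions instead of following a clock) are all hypothetical simplifications. Only the roles of the nodes and the standard field names (`touchTime`, `set_startTime`, `fraction_changed`, `set_fraction`, `value_changed`, `set_translation`) follow the standard nodes.

```python
"""Hypothetical sketch of VRML/X3D-style dataflow routing (not a real browser)."""
from __future__ import annotations

from typing import Any, Callable


class Node:
    """A scene node with named input handlers (eventIn) that can emit outputs (eventOut)."""

    def __init__(self, name: str) -> None:
        self.name = name
        self.handlers: dict[str, Callable[[Any], None]] = {}
        self.scene: Scene | None = None

    def on(self, event_in: str, handler: Callable[[Any], None]) -> None:
        self.handlers[event_in] = handler

    def emit(self, event_out: str, value: Any) -> None:
        assert self.scene is not None, "node must be added to a scene first"
        self.scene.dispatch(self.name, event_out, value)


class Scene:
    def __init__(self) -> None:
        self.nodes: dict[str, Node] = {}
        # (source node, eventOut) -> list of (target node, eventIn), like ROUTE statements
        self.routes: dict[tuple[str, str], list[tuple[str, str]]] = {}

    def add(self, node: Node) -> Node:
        node.scene = self
        self.nodes[node.name] = node
        return node

    def route(self, src: str, event_out: str, dst: str, event_in: str) -> None:
        self.routes.setdefault((src, event_out), []).append((dst, event_in))

    def dispatch(self, src: str, event_out: str, value: Any) -> None:
        # Forward the event along every route leaving src.event_out
        for dst, event_in in self.routes.get((src, event_out), []):
            self.nodes[dst].handlers[event_in](value)


scene = Scene()
touch = scene.add(Node("Touch"))         # role of a TouchSensor
logic = scene.add(Node("Logic"))         # role of a Script node
timer = scene.add(Node("Timer"))         # role of a TimeSensor
mover = scene.add(Node("Mover"))         # role of a PositionInterpolator
box = scene.add(Node("BoxTransform"))    # role of a Transform
state = {"translation": (0.0, 0.0, 0.0)}

# Script logic: a click starts the animation
logic.on("clicked", lambda t: logic.emit("start", t))


def run_timer(start_time: float) -> None:
    # Simulated clock: emit a few fractions instead of real time ticks
    for fraction in (0.0, 0.5, 1.0):
        timer.emit("fraction_changed", fraction)


KEY = (0.0, 1.0)
KEY_VALUE = ((0.0, 0.0, 0.0), (2.0, 0.0, 0.0))


def interpolate(fraction: float) -> None:
    # Linear interpolation over a single key segment
    (k0, k1), (v0, v1) = KEY, KEY_VALUE
    f = (fraction - k0) / (k1 - k0)
    mover.emit("value_changed", tuple(a + f * (b - a) for a, b in zip(v0, v1)))


timer.on("set_startTime", run_timer)
mover.on("set_fraction", interpolate)
box.on("set_translation", lambda v: state.update(translation=v))

# Four routes are needed for one click-triggered animation
scene.route("Touch", "touchTime", "Logic", "clicked")
scene.route("Logic", "start", "Timer", "set_startTime")
scene.route("Timer", "fraction_changed", "Mover", "set_fraction")
scene.route("Mover", "value_changed", "BoxTransform", "set_translation")

touch.emit("touchTime", 0.0)  # simulate the user clicking the box
print(state["translation"])   # (2.0, 0.0, 0.0)
```

Even in this stripped-down form, one click-triggered movement involves five nodes and four routes, which is the overhead discussed in the following paragraphs.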

Dataflow programming is a powerful concept that enables efficient implementation of interactive 3D graphics, in particular by providing smooth animations, inherently supporting concurrent execution, and enabling various kinds of optimization. However, this approach also has several important disadvantages, which become increasingly apparent with the shift from the static 3D Web content model to the model of behavior-rich 3D Web applications [5].

First of all, programming complex interactions in this approach is a tedious task. In the example given above, many lines of code are required to implement a simple animation that, in most cases, could be replaced by a single command such as Object.MoveTo(x,y,z,time). Moreover, the code is not easily readable for a programmer; for example, in many cases the nodes and the routes connecting them must be placed separately in the scene.
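To make the contrast concrete, the following is a minimal Python sketch of what a single high-level command could hide. The `SceneObject` class and its `move_to` and `update` methods are hypothetical and are not part of VRML/X3D or of Flex-VR; `move_to` simply records a linear animation, and `update` (assumed to be called once per frame by the application) plays the combined role of a time sensor and a position interpolator.

```python
"""Hypothetical high-level MoveTo command (not part of VRML/X3D)."""
from __future__ import annotations

from dataclasses import dataclass, field

Vec3 = tuple[float, float, float]


@dataclass
class SceneObject:
    position: Vec3 = (0.0, 0.0, 0.0)
    # (start time, duration, start position, target position) of the running animation
    _anim: tuple[float, float, Vec3, Vec3] | None = field(default=None, repr=False)

    def move_to(self, x: float, y: float, z: float, duration: float, now: float = 0.0) -> None:
        """Start a linear move; stands in for a TimeSensor, an interpolator and their ROUTEs."""
        self._anim = (now, duration, self.position, (x, y, z))

    def update(self, now: float) -> None:
        """Advance the animation to time `now`."""
        if self._anim is None:
            return
        start, duration, p0, p1 = self._anim
        f = 1.0 if duration <= 0 else min(max((now - start) / duration, 0.0), 1.0)
        self.position = tuple(a + f * (b - a) for a, b in zip(p0, p1))
        if f >= 1.0:
            self._anim = None  # animation finished


ball = SceneObject()
ball.move_to(4.0, 0.0, 0.0, duration=2.0)
ball.update(1.0)
print(ball.position)  # (2.0, 0.0, 0.0)
```

The design choice here is to keep the animation state inside the object itself, so no separate routing structure has to be maintained alongside the scene hierarchy.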

More importantly, however, the dataflow graph is realized as a separate, generally non-hierarchical structure, orthogonal to the main scene graph that describes the composition of a 3D scene. Interweaving the two graph structures not only increases conceptual complexity but also practically precludes dynamic composition based on the hierarchical organization of the 3D scene graph, which is critical for building dynamic 3D applications.

In many cases, the scene structure must be changed (e.g., an object must be added or removed) as a result of user actions or changes in the application state. Since routes are statically bound to the nodes they connect (by node names), nodes cannot simply be added to or removed from the scene. Every time a node is added or removed, the set of routes in the scene must be changed, depending on the node name and the set of events it supports.
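The name-binding problem can be shown with a small Python sketch that models a scene as a mapping from DEF names to node types plus a list of ROUTE records. The `Route` type and the `dangling_routes` and `remove_node` helpers are hypothetical illustrations, not part of any VRML/X3D API; they only show that deleting a node without rewriting the route set leaves routes that point at a name which no longer exists.

```python
"""Hypothetical sketch: ROUTEs refer to nodes by DEF name, so removing a node
leaves dangling routes unless every route that mentions it is removed too."""
from __future__ import annotations

from dataclasses import dataclass


@dataclass(frozen=True)
class Route:
    src_node: str
    event_out: str
    dst_node: str
    event_in: str


def dangling_routes(nodes: dict[str, str], routes: list[Route]) -> list[Route]:
    """Routes whose source or target DEF name no longer exists in the scene."""
    return [r for r in routes if r.src_node not in nodes or r.dst_node not in nodes]


def remove_node(nodes: dict[str, str], routes: list[Route], name: str):
    """Remove a node and every route that mentions its DEF name."""
    kept_nodes = {n: t for n, t in nodes.items() if n != name}
    kept_routes = [r for r in routes if name not in (r.src_node, r.dst_node)]
    return kept_nodes, kept_routes


# DEF name -> node type
nodes = {"Touch": "TouchSensor", "Timer": "TimeSensor",
         "Mover": "PositionInterpolator", "Box": "Transform"}
routes = [
    Route("Touch", "touchTime", "Timer", "set_startTime"),
    Route("Timer", "fraction_changed", "Mover", "set_fraction"),
    Route("Mover", "value_changed", "Box", "set_translation"),
]

# Naive removal: the node disappears but two ROUTEs still name it
naive = {n: t for n, t in nodes.items() if n != "Mover"}
print(len(dangling_routes(naive, routes)))  # 2

# Consistent removal must also rewrite the route set
nodes2, routes2 = remove_node(nodes, routes, "Mover")
print(len(dangling_routes(nodes2, routes2)), len(routes2))  # 0 1
```

Adding a node is the mirror case: the code that inserts it must know both its name and the events it supports in order to create the matching routes.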

Changing the set of nodes in a scene encoded in VRML/X3D during the design phase requires either manual programming or sophisticated tools that “know” the characteristics of the scene nodes being manipulated. Changing the set of nodes in a scene at runtime leads to complex, self-mutating code, which is difficult to develop and maintain. Supporting changes to scene contents both at design time and at runtime is even more challenging.

In behavior-rich scenes, a major part of the scene definition is devoted to scripts implementing the scene logic and to routes connecting nodes in the scene. Dynamic scenes, in which objects are added or removed at runtime, require meta-code in scripts responsible for generating the scene code. A further problem arises when a dynamic element that adds or removes objects must itself be added dynamically.
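The following Python sketch illustrates the kind of meta-code involved. The `make_clickable_box` helper is hypothetical: it emits VRML97 source text for one interactive object, and a scene script would then have to hand such text to the browser at runtime (for example through the VRML97 Script API's `Browser.createVrmlFromString`); how the generated routes are attached to the live scene depends on the browser API and is not shown. Because every DEF name is derived from the `name` argument, the node definitions and the ROUTE lines must be generated consistently. A dynamic element that is itself added at runtime would need code that generates code like this, which is the further problem described above.

```python
"""Hypothetical "meta-code" helper: a scene script that adds objects at runtime
has to generate both the nodes and the ROUTEs that reference them by name."""


def make_clickable_box(name: str, x: float, y: float, z: float) -> str:
    """Return VRML97 source for a box that slides 1 unit along x when touched.

    Every DEF name is derived from `name`, so the ROUTE lines must be
    generated consistently with the node definitions; `name` must be unique.
    """
    return "\n".join([
        f"DEF {name}_T Transform {{",
        f"  translation {x} {y} {z}",
        "  children [",
        f"    DEF {name}_Touch TouchSensor {{}}",
        "    Shape { geometry Box {} }",
        "  ]",
        "}",
        f"DEF {name}_Timer TimeSensor {{ cycleInterval 2 }}",
        f"DEF {name}_Mover PositionInterpolator {{",
        f"  key [0 1] keyValue [{x} {y} {z}, {x + 1} {y} {z}]",
        "}",
        f"ROUTE {name}_Touch.touchTime TO {name}_Timer.set_startTime",
        f"ROUTE {name}_Timer.fraction_changed TO {name}_Mover.set_fraction",
        f"ROUTE {name}_Mover.value_changed TO {name}_T.set_translation",
    ])


# Each added object needs its own, non-colliding set of names and routes
print(make_clickable_box("crate1", 0.0, 0.0, 0.0))
```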

Significant research effort has been invested in the development of high-level methods, languages, and tools for programming behavior of virtual scenes. These approaches can be classified into three main groups.

The first group consists of scripting languages for describing the behavior of virtual scenes. An early example of a scripting language designed for creating 3D interaction scenarios is MPML-VR (Multimodal Presentation Markup Language for VR) [18]. This language is a 3D adaptation of the MPML language, originally targeted at creating multimodal Web content, in particular at enabling content authors to script rich Web-based interaction scenarios featuring life-like characters. Similar solutions, also developed for controlling life-like characters, are VHML (Virtual Human Markup Language) [16], for scripting the animation of virtual characters, and APML (Affective Presentation Markup Language) [11], which focuses on presenting personality and emotions in agents. More recent developments in programming the high-level behavior of human characters include BML (Behavior Markup Language), which can then be translated into low-level animation commands such as BAP/FAP in MPEG-4 [25], and PML (Player Markup Language), developed as an extension to the X3D/H-Anim standards [14].

General-purpose solutions for programming virtual scene behavior include VEML (Virtual Environment Markup Language), which is based on the concept of atomic simulations [4]. An extension to the VRML/X3D standards, called BDL, which enables the definition of object behavior, has been described in [6]. Another approach, based on the concept of aspect-oriented programming, has been proposed in [17]. Ajax3D is a recently developed method of programming interactive Web 3D applications, based on a combination of JavaScript and the Scene Authoring Interface (SAI) [19].

The second group of solutions consists of integrated application design frameworks. Such frameworks usually include complex languages and tools that extend existing standards to provide additional functionality, in particular enabling the specification of virtual scene behavior. Works in this field include Contigra [8] and Behavior3D [7], which are based on distributed standardized components that can be assembled into 3D scenes during the design phase. However, this approach still relies on the dataflow paradigm and standard event processing, making it difficult to specify more complex behaviors. A content adaptation framework based on Contigra has been described in [9]. Another solution, employing distributed components accessible through Web Services, has been proposed in [40].

A common motivation for developing new scripting languages and content design frameworks, such as those described above, is to simplify the process of designing complex virtual scenes. However, even the highest-level scripts and content representation languages lead to complex code when they are used for preparing complicated 3D content. The third group of solutions tries to alleviate this problem by using graphical applications for designing content behavior. Research works in this field include [2, 26] and [20, 21]. An advanced commercial product in this category is 3DVIA Virtools, which provides a suite of tools that can be used to build interactive 3D applications and games [10]. However, even if graphical specification of behavior may in some cases be more intuitive than programming, users still have to deal with complex diagrams illustrating how a scenario progresses and reacts to user interactions. Such diagrams are usually too difficult to be used effectively by non-programmers.

Creating interactive 3D content with the above methods requires at least a 3D designer and a programmer (possibly the same person). However, as mentioned in the Introduction, practical 3D Web applications require large amounts of complex interactive content, which in most cases must be created by domain experts, such as sales experts, teachers, or museum curators, who cannot be expected to have experience in 3D graphics design and computer programming. Therefore, creating and modifying content must become much easier than is possible today with the tools described above.

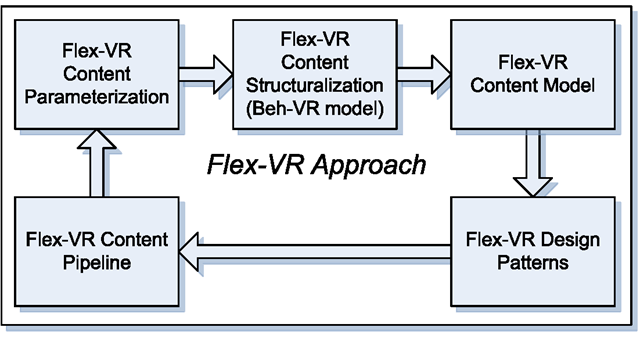

Fig. 5.1 Main elements of the Flex-VR approach