INTRODUCTION

Modern organizations face many challenges as their workforces become distributed across the planet. As this situation becomes more common, organizations need ways to encourage effective leadership and strong teamwork. Training employees and evaluating their effectiveness can be expensive because of the geographic separation of the parties involved. To this end, we propose a collaborative play scenario, using humans and artificial beings as fully equal partners (FEPs), to facilitate training and evaluation of a dispersed workforce.

While this scenario is a simple example of collaboration among human and artificial entities, moving the concept into other application areas raises questions about how artificial entities influence outcomes in group decision making. We explore the social influence and acceptance of artificial beings as equal decision makers, and how such beings may integrate into larger societies.

In this article, we present a simple training exercise designed to test a candidate’s ability, as a leader, to negotiate with other members of the organization, using their influence to achieve (partially or fully) their goals. While in practice the play scenario would consist of combinations of human and artificial beings, the training scenario presented here consists solely of artificial FEPs in order to demonstrate how influence can affect a result in a collaborative process.

BACKGROUND

Artificial Beings as Fully Equal Partners

When the average person is confronted with the term “Artificial Intelligence” it is more likely to conjure images of science fiction than science fact (Khan, 1998). Yet throughout our daily lives, we experience various degrees of artificial intelligence in such mundane devices as washing machines and refrigerators. Beyond this, organisations have been using intelligent systems in a myriad of endeavours.

Beyond today, however, “We may hope that machines will eventually compete with men in all purely intellectual fields” (Turing, 1950, p. 460). Turing’s remarks may not be fully realized today; however, the integration of artificial beings into human organizations and society evokes powerful images of both positive and negative possibility.

One possibility is artificial beings emerging as partners rather than tools in various collaborative situations. Unlike past revolutions of mechanical automation, the presence of artificial beings should not imply redundancy for human partners, but rather a complementary relationship. Group decision making that includes both humans and artificial beings as equals increases the diversity of the knowledge pool (Dunbar, 1995), improving the likelihood of positive outcomes.

In order for artificial beings to be realized as collaborative partners, as opposed to intelligent tools, they must be able to articulate their perspectives and opinions while taking on board the knowledge and opinions of others. For this to occur, artificial beings require a degree of social influence. For this influence to occur, the artificial being needs to become acceptable within the social system: society, organization or group (Kelman, Fiske, Kazdin, & Schacter, 2006). The transition to societal acceptance of artificial beings poses great challenges, both technical and social. To better study artificial beings as collaborative partners, it is possible to focus on a smaller group social setting in which social acceptance (and therefore the capability to influence collaborative group decision making) is assumed. For this reason, computer games provide an excellent environment for understanding how humans and artificial beings can positively influence outcomes in a collaborative group situation.

Much of our work on collaboration has been influenced by the use of intelligent autonomous agents in computer games. Jennings and Wooldridge (1995) describe an intelligent agent as one that enjoys the attributes of autonomy, situatedness, social ability, reactivity and proactiveness.

Basing intelligent entities around this core concept of agency has led researchers such as Laird (2001) and Kaminka et al. (2002) to create intelligent opponents for human players.

Taking this a step further, we see future applications for intelligent artificial beings as more than just opponents or nonplayer characters (called NPCs) in computer games, but rather we see artificial beings being utilised as fully equal partners.

Extending these concepts of humans and artificial entities interacting collaboratively in computer games, it is necessary to define a type of entity that:

- Does not treat human and artificial players differently during interaction;

- Can work cooperatively with other fully equal partners (including humans);

- “Plays” the game as a human would;

- Does not work to a defined script or take direction from an agent “director” such as those described by Magerko et al. (2004) and Riedl, Saretto, and Young (2003); and

- Is not necessarily aware of the nature of other FEP beings (human or artificial in nature).

Simply, a Fully Equal Partner (or FEP) is an intelligent entity that performs tasks cooperatively with other FEPs (human or artificial) and can be replaced by any other FEP. These beings are not necessarily aware of the nature of their fellow partners.

A Collaborative Architecture

In order to facilitate the collaboration among fully equal partners, the involved computer games must support a number of key features (Thomas & Vlacic, 2003), including:

i) A clean and well-defined interface or separation between the beings and the game (Vincent et al., 1999);

ii) A concept of time and causality; and

iii) Support for experimentation (Cohen, Hanks, & Pollack, 1993).

To create a computer game that enjoys many of these features, Thomas and Vlacic (2005) developed a layered architectural approach to collaborative games. Collaborative computer games that involve FEPs have three architectural layers: a communications layer, a physical layer and a cognitive layer.

The communications layer is essentially the protocols and low-level software that facilitate interaction and communication. The physical layer describes items and entities within the game world and how they may be manipulated. The cognitive layer describes the processes required to facilitate intelligent collaboration.

Collaborative process

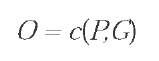

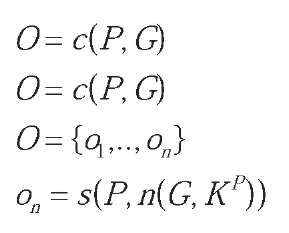

If considered at a high level, the collaborative process c involves taking a set of fully equal partners P with a set of goals G and producing a set of outcomes O. An outcome may not necessarily satisfy the set of goals (e.g., a failure outcome).

In order to obtain these outcomes from the collaborative process, FEPs engage in conversations. The results of these conversations are pieces of group collective knowledge K; that is, knowledge that is known to the group. Outcomes of the collaborative group are a result of the collaborative process between the group of FEPs and the goals of the collaborative process.

where $s$ is a function of all partners $P$ applied to an interpretation function $n$ of the set of goals $G$ and the set of group collective knowledge across the entire set of partners $K_P$, resulting in an outcome $o_n$.
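Read literally, the description above suggests an outcome expression of roughly the following shape. This is a reconstruction from the prose alone: the exact notation of the original equation, and whether the group collective knowledge is an argument of the interpretation function or of the outer function, are assumptions.

```latex
% Assumed reconstruction from the surrounding prose, not the original equation.
% s_P : function over all partners P
% n   : interpretation function applied to the goals G and the knowledge K_P
% o_n : resulting outcome, a member of the outcome set O
o_n = s_P\bigl(n(G, K_P)\bigr), \qquad o_n \in O
```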

INFLUENCE IN THE COLLABORATIVE PROCESS

In human to human interactions, we see many forces at play that influence one person to agree or take the side of another in a discussion. These influences need to be taken into account when collaborative work is undertaken. Even the size of a group (Fay, Garrod, & Carletta, 2000) can change the way in which partners are influenced, and by whom.

Collaborative FEPs may create an affinity with one or more entities and are more likely to accept their position during negotiation. Possible methods for obtaining an affinity with one or more FEPs include:

1. The degree to which one FEP’s responses convey a perception/opinion that matches that of another FEP. The more closely one partner’s position matches that of another partner, the more likely it is that the second partner will “trust” the statements of the first.

2. Some arbitrary/authoritative influence factor that has the partner tending toward the position of one or more other partners. An example in a business sense might be seniority or position within an organization.

3. Trade: changing a given position in order to influence another partner’s position on another item in the collaborative process.

4. A pre-existing relationship (e.g., a friendship) that exists beyond the scope of the collaborative process.

The play scenario discussed here focuses on two forms of influence: arbitrary/authoritative influence and common interest.

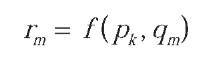

During the collaborative process, any partner $p_l$, where $l \neq k$, may ask a question $q_m$ of any other partner $p_k$ in order to receive a response $r_m$, where $m = j + i$.

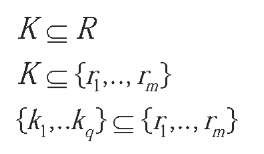

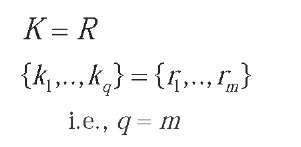

The set of Group Collective Knowledge K obtained by the group through the collaborative process is a subset of the responses obtained during the collaborative process.

To influence group collective knowledge, resulting responses r that contribute to K must be changed in some way. Assuming that all responses contribute to collective knowledge:

During the negotiation phase, an influence function changes the response for a given partner’s initial decision based on the degree of influence the other partners have with the first partner. The influence function that is used here is simply the sum of the proportion difference between one partner’s response (obtained during the conversation process) and that of another partner:

Where $i(r_{p_k})$ is the influenced response, which is the sum of all influence factors $p_{nf}$ multiplied by the response difference between partner $p_n$ and the partner under influence $p$. FEPs using this influence function cannot influence themselves.
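The influence function described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' implementation: responses are taken to be numeric, influence factors are expressed as fractions (10% is 0.10), and the weighted differences are added to the partner's own initial response. The name `influenced_response` and the data layout are hypothetical.

```python
from typing import Mapping


def influenced_response(
    partner: str,
    responses: Mapping[str, float],
    influence: Mapping[str, float],
) -> float:
    """Return `partner`'s response after the other partners' influence is applied.

    Assumption: the shift is added to the partner's own initial response.
    """
    own = responses[partner]
    # Sum each other partner's influence factor times the response difference.
    shift = sum(
        influence[other] * (response - own)
        for other, response in responses.items()
        if other != partner  # an FEP cannot influence itself
    )
    return own + shift


# Hypothetical example: three partners rating one project between 0 and 1.
responses = {"A": 0.8, "B": 0.4, "C": 0.6}
influence = {"A": 0.10, "B": 0.05, "C": 0.0}
shifted_b = influenced_response("B", responses, influence)
```

In this example, partner B's response moves from 0.4 to approximately 0.44, pulled toward partner A, who holds a 10% influence. Partner C holds no influence and so does not move B at all.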

PLAY SCENARIO: PROJECT PLANNING

In order to investigate influence in the collaborative process, a play scenario was devised based upon a documented real-world project planning process from industry. The scenario is used as a training exercise for management candidates to investigate their negotiation skills in representing their business group’s interests in a collaborative situation.

The scenario involves a particular enterprise software developer that utilises a common infrastructure upon which all its enterprise solutions are based. When a new version of the infrastructure is to be developed, the managers and architects of this business unit formulate a list of potential projects that can be pursued within the next version’s development timeframe. Each project has an estimated development time budget.

Unfortunately, the list of potential projects greatly exceeds the number of development days available within the new version development period.

In order to satisfy the needs of the enterprise product business groups, the manager responsible for infrastructure therefore contacts the managers of the various enterprise products to obtain their feedback on what projects are most desirable in their respective areas.

Because there are timeframe limitations, and the enterprise products address different business needs, a significant amount of time is involved in negotiating a “best fit” for all parties. As such, each of the business group managers wants to influence the decisions of the others in order to secure the inclusion of their highest priority requirements in the development plan.

Table 1 documents the business units involved.

The Experiment

The play scenario is calibrated against the original business data that was collected prior to the meetings. Each business group was requested to review the list of potential projects (88 in total) and place a numbered priority next to each item until the development days from the items totaled approximately 400 days.
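The ranking step can be illustrated with a short sketch. It assumes that a lower priority number means a more important project, and that selection stops at the first project that would push the total past the budget. The source only says the total should be "approximately 400 days", so that stopping rule, the function name and the sample data are all hypothetical.

```python
from typing import List, Sequence, Tuple

Project = Tuple[str, int, int]  # (name, priority, estimated development days)


def select_within_budget(
    projects: Sequence[Project], budget_days: int = 400
) -> Tuple[List[str], int]:
    """Pick projects in priority order until the next one would exceed the budget."""
    selected: List[str] = []
    used = 0
    # Lower priority number is assumed to mean higher importance.
    for name, _priority, days in sorted(projects, key=lambda p: p[1]):
        if used + days > budget_days:
            break  # assumed stopping rule: never exceed the budget
        selected.append(name)
        used += days
    return selected, used


# Hypothetical sample data, not taken from the industry data set.
sample = [("C", 3, 100), ("A", 1, 150), ("D", 4, 40), ("B", 2, 200)]
chosen, total_days = select_within_budget(sample)
```

With this sample, projects A and B are chosen for a total of 350 days, because adding project C would bring the total to 450 days.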

When a candidate undertakes the training scenario, they must represent their business group’s interests, maximising the number of inclusions within the project.

Unlike a regular play scenario, the following exercises have been undertaken with artificial FEPs only, in order to investigate their effectiveness in influencing the final outcomes. The following influence methods were undertaken:

1. No Influence (baseline);

2. Arbitrary Influence; and

3. Common Interest.

The participating FEPs utilize a collaborative fuzzy logic process (Thomas & Vlacic, 2007) to determine the responses given during the collaborative process.

Determining Influence

In order to determine influence between the FEPs involved, the following methods were used.

An arbitrary percentage influence from 0% to 10% was allocated to each business unit representative. As the collaborative process progressed, the responses of each representative were influenced by the other 10 members’ influence. The breakdown of the allocated influence was:

Common interest influence was determined by informing each FEP of the other members’ “Top 5” projects. If a project in one partner’s top five matched a project in another partner’s top five, an influence percentage between 1% and 5% was awarded. This influence was allowed to compound if more than one project was in common. A 5% influence was awarded when there was a total match (i.e., Partner A’s first project choice is the same as Partner B’s), reducing toward 0% the further apart the project was in terms of order (e.g., Partner A ranks a shared project 3 and Partner B ranks it 5; therefore, the influence is 3%). Table 2 documents the common influence factors across the business units.
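The common interest rule can be sketched as follows. The per-project award of 5% minus the rank difference follows the worked example in the text (ranks 3 and 5 give 3%). Treating "compound" as a simple sum over shared projects is an assumption, and the function name and project labels are hypothetical.

```python
from typing import Sequence


def common_interest_influence(
    top_a: Sequence[str], top_b: Sequence[str], max_pct: int = 5
) -> int:
    """Return the influence percentage two partners share through common projects."""
    # Map each of partner B's projects to its rank (1 = first choice).
    rank_b = {project: rank for rank, project in enumerate(top_b, start=1)}
    total = 0
    for rank_a, project in enumerate(top_a, start=1):
        if project in rank_b:
            # 5% for an identical rank, one point less per position apart.
            award = max_pct - abs(rank_a - rank_b[project])
            total += max(award, 0)  # assumed: awards are summed, never negative
    return total


# Hypothetical "Top 5" lists for two business units.
partner_a = ["P1", "P7", "P3", "P9", "P2"]
partner_b = ["P1", "P4", "P8", "P6", "P3"]
shared = common_interest_influence(partner_a, partner_b)
```

Here P1 is the first choice of both partners (5%) and P3 is ranked 3 and 5 respectively (3%), so the combined common interest influence is 8% under the summation assumption.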

Results

After completing each run of the play scenario, the results were compared to the actual results collected during the industry process.

Across the 88 projects identified, approximately 70% of projects were common to all results. It can also be seen from the captured results that influence does impact the final makeup of the projects to be included within the allowable project budget timeframe.

While the percentage of match with the industry results is fairly high, of more interest are the reasons for the differences between the industry and the play scenarios. As stated earlier, factors such as trade and pre-existing relationships were beyond the scope of this experiment. In addition, given the business unit group size of 11, there may also be factors of influence such as the group size (Fay et al., 2000). Subsequent play scenarios will require the incorporation of these additional factors. Closing this “collaborative gap” between a human social group and an artificial social group with the same goals and objectives is a compelling area of research, and a large step along the road to achieving social acceptance of artificial beings as FEPs.

Actual Results

As noted earlier, the data collected for this play scenario is sourced from real-world business data. As such, the collected data does not have the rigour of true experimental collection. For example, the business groups involved in the real process interpreted the ranking instructions sent out by the infrastructure team in different ways. While the scenario itself was a compelling real-world case, further work on industry scenarios will require more explicit instructions to ensure the resulting data collection is of experimental quality.

FUTURE TRENDS

As artificial entities become more widely used in collaborative cognitive applications, negotiation skills become more important. There are many applications in which collaborative human and artificial FEPs may be utilised. Broadly speaking, these concepts can be applied to any collaborative decision making scenario, as long as the following criteria are met: the scenario’s objectives and goals are established; the communication, physical and cognitive layers are clearly defined; and the collaborative process is applied as a framework to facilitate the collection and application of group collective knowledge in order to achieve the defined objectives/goals.

In the enterprise, collaborative FEPs can be applied in training scenarios such as the project planning exercise. Beyond this, FEPs can also play an effective role in collaborative business intelligence and strategic planning. Stepping out of the enterprise, the fact that FEPs may be human or artificial allows artificial FEPs to find application in automated transport systems that can “collaborate” with other human and artificial road users. In addition, there are compelling reasons for artificial FEPs to be used in pure entertainment applications, by allowing computer game developers to create richer, more interactive experiences. Interestingly, collaborative FEPs could facilitate the study of human behaviour and interaction, through the creation of safe environments for behavioural studies and clinical psychology (we strongly believe that they may be used for the purpose of assisting patients in overcoming difficulties of expression and engaging in collaborative play scenarios).

For artificial beings emerging as equal partners in society, the future becomes more speculative. As stated earlier, artificial beings need to move from the position of an intelligent tool to some form of acceptance of equality, in order to become “collaboratively equal” to their human counterparts. For this kind of acceptance to occur, FEP applications such as business analysis, transportation and entertainment need to form the groundwork for basic acceptance.

Beyond overcoming the technical challenges of integrating artificial beings into collaborative partnerships with humans, there are also the challenges of acceptance. Preconceived notions drawn from science fiction (Khan, 1998) and differing perceptions across cultures (Kaplan, 2004) are some of these challenges. The positive benefits of collaborative artificial FEPs also face challenges of negative perceptions within different social groups and societies. Addressing these issues effectively shall ensure that collaboration among human and artificial beings is an effective method of group decision making.

CONCLUSION

Human and artificial beings as Fully Equal Partners (FEPs) offer compelling applications in industry. There are many challenges faced in the integration and acceptance of artificial FEPs into the social groups of humans. One challenge described was the capability of artificial FEPs to influence group decision making outcomes. FEPs and the collaborative process were described, showing how these are applied in a simple training play scenario. The negotiation processes of the artificial FEPs were presented, along with how different forms of influence may be used to affect a collaborative outcome. The outcome of the play scenarios indicates a “collaborative gap” between humans and artificial beings. Further work on reducing the size of this gap will contribute to the acceptance of artificial beings as collaborative entities within the group decision making process.

Beyond this play scenario and its application in training, FEPs offer a wide variety of applications across varied business sectors including training, business analysis, transportation, entertainment and behavioural studies. By understanding the scenario objectives, architectural layers and collaborative process framework, it is possible to apply this concept to any collaborative process. As humans work more closely with artificial entities in the future, collaborative FEPs offer a mechanism to ensure that work is mutually productive to all entities involved, regardless of their physical nature.

KEY TERMS

Fully Equal Partner (FEP): An intelligent entity that performs tasks cooperatively with other FEPs (biological or artificial) and can be replaced by any other FEP. These beings are not necessarily aware of the nature of their fellow partners.

Group Collective Knowledge: Information that is presented to a group of FEPs during collaboration.

Collaborative Process: Involves the interaction of FEPs to achieve defined outcomes. The process involves questions, responses, actions and negotiation.

Influence: The ability of an FEP, during collaborative negotiation, to align another FEP’s response with its own.

Collaborative Gap: The difference between the outcomes of groups of human and artificial entities in a collaborative decision-making scenario, where both sets of entities have the same beliefs and objectives.

Intelligent Tools: Intelligent artificial entities that are utilised by humans but do not impact any collaborative decision-making process via social influences.

Social Acceptance: The ability of human FEPs to accept artificial FEPs into the collaborative process, and to influence and be influenced by these entities.