Checking and saving

The next stage of OCR is manual checking. The output is displayed on the screen, with problems highlighted in color. One color may be reserved for unrecognized and uncertainly recognized characters, another for words that do not appear in the dictionary. Different display options can suppress some of this information. The original image is displayed too, perhaps with an auxiliary magnification window that zooms in on the region in question. An interactive dialog, similar to the spell-check mode of word processors, focuses on each error and allows the user to ignore this instance, ignore all instances, correct the word, or add it to the dictionary. Other options allow users to ignore words with digits and other nonalphabetic characters, ignore capitalization mismatches, normalize spacing around punctuation marks, and so on.
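As a rough illustration of the dictionary check behind such a dialog, the Python sketch below flags words that are not in a word list. It honors two of the options just mentioned: skipping words that contain digits or other nonalphabetic characters, and ignoring capitalization. The function name and its parameters are hypothetical. Real OCR packages also use the recognizer's own per-character confidence, which is not modeled here.

```python
import re

def flag_words(text, dictionary, ignore_digits=True, ignore_case=True):
    """Return (token offset, word) pairs that a spell-check style pass would flag.

    Hypothetical helper: `dictionary` is any iterable of accepted words.
    """
    vocab = {w.lower() for w in dictionary} if ignore_case else set(dictionary)
    flagged = []
    for match in re.finditer(r"\S+", text):
        # Drop surrounding punctuation before looking the word up.
        word = match.group().strip(".,;:!?\"'()")
        if not word:
            continue
        # Option: ignore words containing digits or other nonalphabetic characters.
        if ignore_digits and any(not c.isalpha() for c in word):
            continue
        # Option: ignore capitalization mismatches.
        key = word.lower() if ignore_case else word
        if key not in vocab:
            flagged.append((match.start(), word))
    return flagged
```

Each flagged word would then be presented to the operator, whose choices (ignore once, ignore all, correct, add to dictionary) update the text or the word list before the next lookup.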

You may also want to edit the format of the recognized document, including font type, font size, character properties like italics and bold, margins, indentation, table operations, and so on. Ideally, general word-processor options will be offered within the OCR package, to save having to alternate between the OCR program and a word processor.

The final stage is to save the OCR result, usually to a file (alternatives include copying it to the clipboard or sending it by e-mail). Supported formats might include plain text, HTML, RTF, Microsoft Word, and PDF. There are many possible options. You may want to remove all formatting information before saving, or include the "uncertain character" highlighting in the saved document, or include pictures in the document. Other options control page size, font inclusion, and picture resolution. In addition, it may be necessary to save the original page image as well as the OCR text. In PDF format (described in Section 4.5), you can save the text and pictures only, or save the text under (or over) the page image, where the entire image is saved as a picture and the recognized text is superimposed upon it, or hidden underneath it. This hybrid format has the advantage of faithfully replicating the look of the original document—which can have useful legal implications. It also reduces the requirement for super-accurate OCR. Alternatively, you might want to save the output in a way that is basically textual, but with the image form substituted for the text of uncertainly recognized words.
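The last option, mostly textual output with the image substituted for uncertain words, can be sketched as follows. The sketch assumes a hypothetical representation in which each recognized word carries a confidence score and the file name of a cropped image of that word. It writes HTML and keeps the OCR guess as the image's alt text, so that the guess still exists in the file for searching.

```python
from html import escape

def to_html(words, threshold=0.8):
    """Render OCR output as HTML, showing the word image wherever confidence is low.

    `words` is a hypothetical list of (text, confidence, crop_path) tuples;
    crop_path may be None if no cropped image is available.
    """
    parts = []
    for text, confidence, crop_path in words:
        if confidence < threshold and crop_path:
            # Uncertain word: show the scanned image, keep the guess as alt text.
            parts.append(f'<img src="{escape(crop_path)}" alt="{escape(text)}">')
        else:
            parts.append(escape(text))
    return "<p>" + " ".join(parts) + "</p>"
```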

Page handling

Let us return to the process of scanning the page images and consider some practical issues. Physically handling the pages is easiest if you can "disbind" the documents by cutting off their bindings (obviously, this destroys the source material and is only possible when spare copies exist). At the other extreme, originals can be unique and fragile, and specialist handling is essential to prevent their destruction. For example, most books produced between 1850 and 1950 were printed on acid paper (paper made from wood pulp generated by an acidic process), and their life span is measured in decades, far shorter than that of earlier or later books. Toward the end of their lifetime they decay and begin to fall apart (see topic 9).

Sometimes the source material has already been collected on microfiche or microfilm, and the expense of manual paper handling can be avoided. Although microfilm cameras are capable of very high resolution, quality is compromised because an additional generation of reproduction is interposed; furthermore, the original microfilming may not have been done carefully enough to permit digitized images of sufficiently high quality for OCR. Even if the source material is not already in this form, microfilming may be the most effective and least damaging means of preparing content for digitization. It capitalizes on substantial institutional and vendor expertise, and as a side benefit the microfilm masters provide a stable long-term preservation format.

Generally the two most expensive parts of the whole process are handling the source material on paper, and the manual interactive processes of OCR. A balance must be struck. Perhaps it is worth the extra generation of reproduction that microfilm involves to reduce paper handling, at the expense of more labor-intensive OCR; perhaps not.

Microfiche is more difficult to work with than microfilm, because it is harder to reposition automatically from one page to the next. Moreover, it is often produced from an initial microfilm, in which case one generation of reproduction can be eliminated by digitizing directly from the film.

Image digitization may involve other manual processes apart from paper handling. Best results may be obtained by manually adjusting settings like contrast and lighting individually for each page or group of pages. The images may have to be manually deskewed. In some cases, pictures and illustrations will need to be copied from the digitized images and pasted into other files.
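Deskewing need not be entirely manual. One common automatic approach is the projection profile. It is shown here only as a sketch, not as the method of any particular package. Each black pixel is sheared by a candidate angle and counted per row, and the chosen angle is the one at which the row counts are most sharply peaked, since that is where text lines line up with the rows. The pixel-list input, the angle range, and the step size are assumptions.

```python
import math
from collections import Counter

def estimate_skew(black_pixels, max_angle=5.0, step=0.1):
    """Estimate page skew in degrees from (x, y) coordinates of black pixels.

    y is the row index (growing downward); a positive result means text lines
    drift downward to the right. Candidate angles run from -max_angle to max_angle.
    """
    best_key, best_angle = None, 0.0
    n = int(round(max_angle / step))
    for i in range(-n, n + 1):
        angle = i * step
        t = math.tan(math.radians(angle))
        # Project each pixel onto a row as if the page were rotated back by `angle`.
        profile = Counter(round(y - x * t) for x, y in black_pixels)
        # Aligned text lines give a peaky profile, i.e. a large sum of squared counts.
        sharpness = sum(c * c for c in profile.values())
        key = (sharpness, -abs(angle))  # on ties, prefer the smaller correction
        if best_key is None or key > best_key:
            best_key, best_angle = key, angle
    return best_angle
```

The page would then be rotated by the negative of the estimated angle; a human operator can still review the result, as the text suggests.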

Planning an image digitization project

Any significant image digitization project will normally be outsourced. The cost varies greatly with the size of the job and the desired quality. For a small, simple job, with material in a form that can easily be handled (e.g., books whose bindings can be removed), text that is clear and problem-free, and few images and tables that need to be handled manually, you could expect to pay the following for scanning and OCR, with a discount for large quantities:

• 50¢/page for fully automated OCR processing

• $1.25/page for 99.9 percent quality (3 incorrect characters per page)

• $2/page for 99.95 percent quality (1.5 incorrect characters per page)

• $4/page for 99.995 percent quality (1 incorrect character every 6 pages)
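The error counts in the list follow from the accuracy figures if a page holds about 3,000 characters (0.1 percent of 3,000 is 3). The sketch below makes that assumption explicit. At 99.995 percent it gives 0.15 errors per page, or about one every 6.7 pages. The 10,000-page job size is hypothetical and ignores volume discounts.

```python
# Price tiers from the list above: (character accuracy in percent, US$ per page).
TIERS = [(99.9, 1.25), (99.95, 2.00), (99.995, 4.00)]

def errors_per_page(accuracy_percent, chars_per_page=3000):
    """Expected number of wrong characters per page (3,000 characters is an assumption)."""
    return chars_per_page * (1 - accuracy_percent / 100)

if __name__ == "__main__":
    pages = 10_000  # hypothetical job size, before any volume discount
    for accuracy, price in TIERS:
        errors = errors_per_page(accuracy)
        print(f"{accuracy}%: {errors:.2f} errors/page, about {errors * pages:,.0f} errors "
              f"and ${price * pages:,.0f} for {pages:,} pages")
```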

If difficulties arise, costs increase to many dollars per page. Using a third-party service bureau eliminates the need for you to become an expert in state-of-the-art image digitization and OCR. However, you will need to set standards for the project and to ensure that they are adhered to.

Most of the factors that affect digitization can be evaluated only by practical tests. You should arrange for samples to be scanned and OCR’d by competing companies and then compare the results. For practical reasons (because it is expensive or infeasible to ship valuable source materials around), the scanning and OCR stages may be contracted out separately. Once scanned, images can be transmitted electronically to potential OCR vendors for evaluation. You should probably obtain several different scanned samples—at different resolutions, different numbers of gray levels, from different sources, such as microfilm and paper—to give OCR vendors a range of different conditions. You should select sample images that span the range of challenges that your material presents.

Quality control is clearly a central concern in any image digitization project. An obvious quality-control approach is to load the images into your system as soon as they arrive from the vendor and to check them for acceptable clarity and skew. Images that are rejected are returned to the vendor for rescanning. However, this strategy is time-consuming and may not provide sufficiently timely feedback to allow the vendor to correct systematic problems. It may be more effective to decouple yourself from the vendor by batching the work. Quality can then be controlled on a batch-by-batch basis, where you review a statistically determined sample of the images and accept or reject whole batches.
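Batch acceptance can be sketched as follows. The sample size and the number of defects tolerated are placeholders. In practice they would be derived from the batch size and the acceptable quality level, for example with an acceptance-sampling standard such as ISO 2859-1. The `inspect` callback is hypothetical and stands in for the reviewer's judgment of clarity and skew.

```python
import random

def review_batch(batch, inspect, sample_size=50, max_defects=2, seed=None):
    """Accept or reject a whole batch by inspecting a random sample of its images.

    `inspect(image)` returns True if the image is acceptable. Returns a tuple
    (accepted, defects_found). The default limits are placeholders.
    """
    rng = random.Random(seed)
    # Sample without replacement; small batches are inspected in full.
    sample = rng.sample(batch, min(sample_size, len(batch)))
    defects = sum(1 for image in sample if not inspect(image))
    return defects <= max_defects, defects
```

A rejected batch goes back to the vendor as a unit, which also gives the vendor a clear signal about systematic problems.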

Inside an OCR shop

Because it’s labor-intensive, OCR work is often outsourced to developing countries like India, the Philippines, and Romania. Ten years ago, one of the authors visited an OCR shop in a small two-room unit on the ground floor of a high-rise building in a country town in Romania. It contained about a dozen terminals, and every day from 7:00 am through 10:30 pm the terminals were occupied by operators who were clearly working with intense concentration. There were two shifts a day, with about a dozen people in each shift and two supervisors—25 employees in all.

Most of the workers were university students who were delighted to have this kind of employment, which compared well with the alternatives available in their town. Pay was by results, not by the hour, and this was quite evident as soon as you walked into the shop and saw how hard people worked. They regarded their shift at the terminal as an opportunity to earn money, and they made the most of it.

This firm uses two different commercial OCR programs. One is better for processing good copy, has a nicer user interface, and makes it easy to create and modify custom dictionaries. The other is preferred for tables and forms; it has a larger character set with many unusual alphabets (e.g., Cyrillic). The firm does not necessarily use the latest version of these programs; sometimes earlier versions have special advantages.

The principal output formats are Microsoft Word and HTML. Again, the latest release of Word is not necessarily the one that is used. A standalone program is used for converting Word documents to HTML because it greatly outperforms Word’s built-in facility. The people in the firm are expert at decompiling software and patching it. For example, they were able to fix some errors in the conversion program that affected how nonstandard character sets are handled. Most HTML is edited by hand, although they use a WYSIWYG (What You See Is What You Get) HTML editor for some of the work.

A large part of the work involves writing scripts or macros to perform tasks semiautomatically. Extensive use is made of Visual Basic for Applications (VBA). Although Photoshop is used for image work, they also employ a scriptable image processor for repetitive operations. MySQL, an open-source SQL implementation, is used for forms databases. Java is used for animation and for implementing Web-based questionnaires.

These people have a wealth of detailed knowledge about the operation of different versions of the software packages they use, and they constantly review and reassess the situation as new releases emerge. But perhaps their chief asset is their set of in-house procedures for dividing up work, monitoring its progress, and checking the quality of the result. They claim an accuracy of around 99.99 percent for characters, or 99.95 percent for words—an error rate of 1 word in 2,000. This is achieved by processing every document twice, with different operators, and comparing the result. In 1999, their throughput was around 50,000 pages/month, although the firm’s capability is flexible and can be expanded rapidly on demand. Basic charges for ordinary work are around $1 per page (give or take a factor of two), but vary greatly depending on the difficulty of the job.
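Double keying works because two operators rarely make the same mistake in the same place, so most errors show up as disagreements. The source does not say how the firm compared its two versions. One simple way is sketched below with Python's standard `difflib`: align the transcriptions word by word and list every place where they differ, for a third person to resolve. Errors that both operators happen to make identically will pass undetected.

```python
import difflib

def disagreements(first, second):
    """List word-level differences between two independent transcriptions.

    Returns (text_in_first, text_in_second) pairs; an empty string marks
    a word present in only one version.
    """
    a, b = first.split(), second.split()
    matcher = difflib.SequenceMatcher(a=a, b=b, autojunk=False)
    out = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag != "equal":
            out.append((" ".join(a[i1:i2]), " ".join(b[j1:j2])))
    return out
```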

An example project

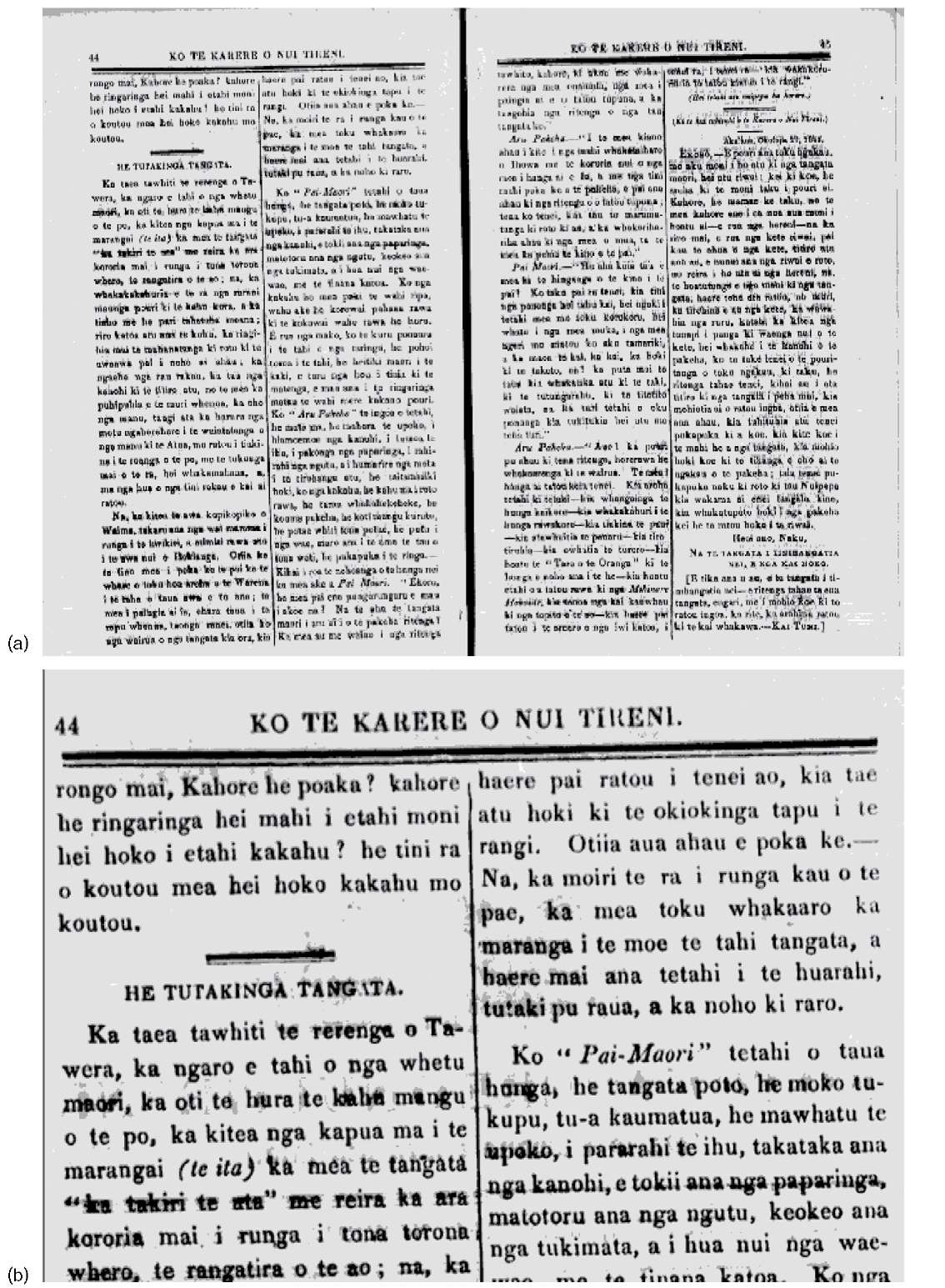

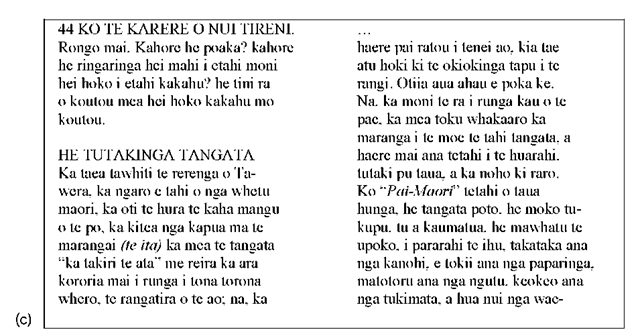

The New Zealand Digital Library undertook a project to put a collection of historical New Zealand Maori newspapers on the Web, in fully indexed and searchable form. There were about 20,000 original images, most of them double-page spreads. Figure 4.4 shows a sample image, an enlarged version of the beginning, and some of the text captured using OCR. The particular image shown was difficult to work with because some areas are smudged by water-staining. Fortunately, not all the images were so poor. As you can see by attempting to decipher it yourself, high accuracy requires a good knowledge of the language in which the document is written.

The first task was to scan the images into digital form. Gathering together paper copies of the newspapers would have been a massive undertaking, since the collection comprises 40 different newspaper titles that are held in a number of libraries and collections scattered throughout the country. Fortunately, New Zealand’s national archive library had previously produced a microfiche containing all the newspapers for the purposes of historical research. The library provided us with access not just to the microfiche result, but also to the original 35-mm film master from which it had been produced. This simultaneously reduced the cost of scanning and eliminated one generation of reproduction. The photographic images were of excellent quality because they had been produced specifically to provide microfiche access to the newspapers.

Once the image source has been settled on, the quality of scanning depends on the scanning resolution and the number of gray levels or colors. These factors also determine how much storage is required for the information. After some testing, it was determined that a resolution of approximately 300 dpi on the original printed newspaper was adequate for the OCR process. Higher resolutions yielded no noticeable improvement in recognition accuracy. OCR results from a good black-and-white image were found to be as accurate as those from a grayscale one. Adapting the threshold to each image, or each batch of images, produced a black-and-white image of sufficient quality for the OCR work. However, grayscale images were often more satisfactory and pleasing for the human reader.
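Choosing a threshold automatically for each image is commonly done with Otsu's method, which picks the gray level that maximizes the variance between the dark and light classes. The source does not name the algorithm used, so treat the sketch below as one plausible choice. It takes a flat list of 8-bit gray values. Thresholding a whole batch would mean pooling the batch's pixels before computing a single threshold.

```python
def otsu_threshold(pixels):
    """Return the gray level t (0-255) that best separates pixels <= t from pixels > t."""
    hist = [0] * 256
    for p in pixels:
        hist[p] += 1
    total = len(pixels)
    sum_all = sum(level * count for level, count in enumerate(hist))
    best_t, best_between = 0, -1.0
    w0 = 0    # number of pixels at or below t
    sum0 = 0  # sum of their gray levels
    for t in range(256):
        w0 += hist[t]
        sum0 += t * hist[t]
        w1 = total - w0
        if w0 == 0 or w1 == 0:
            continue
        mean0 = sum0 / w0
        mean1 = (sum_all - sum0) / w1
        # Between-class variance, up to a constant factor.
        between = w0 * w1 * (mean0 - mean1) ** 2
        if between > best_between:
            best_between, best_t = between, t
    return best_t

def binarize(pixels, threshold):
    """Map each gray pixel to 1 (ink) or 0 (paper)."""
    return [1 if p <= threshold else 0 for p in pixels]
```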

Following these tests, the entire collection was scanned by a commercial organization. Because the images were supplied on 35-mm film, the scanning could be automated and proceeded reasonably quickly. Both black-and-white and grayscale images were generated at the same time to save costs, although it was still not clear whether both forms would be used. The black-and-white images for the entire collection were returned on eight CD-ROMs; the grayscale images occupied 90 CD-ROMs.
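The difference in storage between the two forms can be estimated from the resolution and the pixel depth. The sketch assumes a hypothetical spread of 24 by 18 inches at 300 dpi and CD-ROMs of 650 MB. Uncompressed, the 20,000 images would then need about 150 discs in black-and-white and about 1,200 in grayscale. The much smaller numbers actually used (8 and 90) reflect image compression, which the source does not describe.

```python
CD_BYTES = 650_000_000  # assumed capacity of one CD-ROM

def raw_image_bytes(width_in, height_in, dpi, bits_per_pixel):
    """Uncompressed size of one scanned image, in bytes."""
    return (width_in * dpi) * (height_in * dpi) * bits_per_pixel // 8

def cds_needed(total_bytes, cd_bytes=CD_BYTES):
    """Number of discs needed, rounding up."""
    return -(-total_bytes // cd_bytes)

if __name__ == "__main__":
    images = 20_000
    for label, bits in (("black-and-white", 1), ("grayscale", 8)):
        size = raw_image_bytes(24, 18, 300, bits)  # hypothetical 24 x 18 inch spread
        print(f"{label}: {size / 1e6:.1f} MB per image, "
              f"{cds_needed(images * size)} CD-ROMs uncompressed")
```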

Figure 4.4: (a) Double-page spread of a Maori newspaper; (b) enlarged version; (c) OCR text.

Once the images had been scanned, the OCR process began. First attempts used Omnipage, a widely used proprietary OCR package. But a problem quickly arose: Omnipage is language-based and insists on using one of its known languages to assist the recognition process. Because the source material was in the Maori language, additional errors were introduced when the text was automatically "corrected" to more closely resemble English. Although other language versions of the software were available, Maori was not among them, and it proved impossible to disable the language-dependent correction mechanism. (In earlier versions of Omnipage, this correction could be subverted simply by deleting the dictionary file, and the Romanian organization described above used an obsolete version for precisely this reason.) As a result, recognition accuracy at the character level was not much more than 95 percent. This meant a high incidence of word errors on every newspaper page, and manual correction of the Maori text proved extremely time-consuming.
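Why does 95 percent character accuracy produce so many word errors? If character errors were independent, a word of n characters would be correct with probability p^n. The sketch below applies this simple model. The average word length of 6 and the 1,000-word page are hypothetical figures. With them, only about 74 percent of words would be correct, roughly 265 wrong words per page. Real errors cluster (around smudges, for instance), so the model is only an approximation. The same model also links the two figures quoted by the Romanian firm: 99.99 percent per character gives about 99.95 percent for 5-character words.

```python
def word_accuracy(char_accuracy, word_length):
    """Probability that every character of a word is right, assuming independent errors."""
    return char_accuracy ** word_length

if __name__ == "__main__":
    words_per_page = 1000            # hypothetical page size
    p_word = word_accuracy(0.95, 6)  # hypothetical average word length of 6
    print(f"{p_word:.3f} of words correct, about {words_per_page * (1 - p_word):.0f} wrong per page")
    print(f"99.99% per character, 5-letter words: {word_accuracy(0.9999, 5):.5f}")
```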

A number of alternative software packages and services were considered. For example, a U.S. firm offered an effective software package for around $10,000 and demonstrated its use on some sample pages with impressive results. The same firm offered a bureau service and was prepared to undertake the basic OCR for only $0.16 per page (plus a $500 setup fee). Unfortunately, this did not include verification, which had been identified as the most critical and time-consuming part of the process, partly because of the Maori-language material.

Eventually, we located an inexpensive software package that had high accuracy and allowed a tailor-made language dictionary to be supplied. It was decided that the OCR process would be done in house, and this proved to be an excellent decision; however, it depended heavily on the unusual language in which the collection is written and on the local availability of fluent Maori speakers.

A parallel task to OCR was to segment the double-page spreads into single pages for the purposes of display, in some cases correcting for skew and page-border artifacts. Software was produced for segmentation and skew detection, and a semiautomated procedure was used to display segmented and deskewed pages for approval by a human operator. The result of these labors, turned into a digital library, is described in Section 8.1.
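The segmentation step can be illustrated with a column ink profile. The gutter between two facing pages is usually the column with the least ink near the middle of the spread. The sketch below assumes a binarized image, given as a list of rows of 0/1 pixels, and a blank gutter. On film, the fold may instead appear as a dark band, in which case one would look for the maximum. This is not the software the project actually wrote, whose details the source does not give.

```python
def find_gutter(image, search_fraction=0.2):
    """Return the column at which to split a double-page spread.

    `image` is a list of equal-length rows of 0/1 pixels (1 = ink). Only the
    central `search_fraction` of the width is searched, so that page margins
    and border artifacts at the edges are ignored.
    """
    width = len(image[0])
    center = width // 2
    half_window = max(1, int(width * search_fraction) // 2)
    lo, hi = max(0, center - half_window), min(width - 1, center + half_window)
    # Ink count for each candidate column.
    ink = {x: sum(row[x] for row in image) for x in range(lo, hi + 1)}
    # Least ink wins; among equally blank columns, prefer the one nearest the center.
    return min(ink, key=lambda x: (ink[x], abs(x - center)))

def split_spread(image):
    """Split a spread into left and right pages at the detected gutter."""
    x = find_gutter(image)
    return [row[:x] for row in image], [row[x:] for row in image]
```

Each half could then be passed through skew detection before being shown to the operator for approval.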